Recently, Paul Meehan submitted this question via a comment on the “Memory reclamation, when and how” article:

Hi,

we are currently considering virtualising some pretty significant SQL workloads. While the VMware best practices documents for SQL server inside VMware recommend turning on ballooning, a colleague who attended a deep dive with a SQL Microsoft MVP came back and the SQL guy strongly suggested that ballooning should always be turned off for SQL workloads. We have 165 SQL instances, some of which will need 5-10000 IOPS so performance and memory management is critical. Do you guys have a view on this from experience?

Thx,

Paul

I receive this kind of question a lot, whether it is about SQL, Oracle or Citrix, and there always seems to be an expert who recommends disabling ballooning. Now this statement can be interpreted in two ways.

1. Disable the memory reclamation mechanism by adding a particular parameter (sched.mem.maxmemctl) to the settings of the virtual machine.

– or –

2. Ensure that enough physical memory resources are available to the virtual machine to keep the VMkernel from reclaiming memory of that particular virtual machine.

I always hope that they mean guarantee enough memory to the virtual machine to stop the VMkernel from reclaiming memory from that specific VM. But unfortunately most specialists insist on disabling the mechanism.

Why is disabling the ballooning mechanism bad?

Many organizations that deploy virtual infrastructures rely on memory overcommitment to reach a higher consolidation ratio and higher memory utilization. In a virtual infrastructure not every virtual machine is actively using its assigned memory at the same time and not every virtual machine is making use of its configured memory footprint. To allow memory overcommitment, the VMkernel uses different virtual machine memory reclamation mechanisms.

1. Transparent Page Sharing

2. Ballooning

3. Memory compression

4. Host swapping

Except for Transparent Page Sharing, all memory reclamation techniques only become active when the ESX host experiences memory contention. The VMkernel will use a specific memory reclamation technique depending on the level of host free memory. When the ESX host has 6% or less free memory available, it will use the balloon driver to reclaim idle memory from virtual machines. The VMkernel selects the virtual machines with the largest amounts of idle memory (detected by the idle memory tax process) and asks those virtual machines to select idle memory pages.
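
The selection step can be sketched in a few lines of Python. This is a toy model, not VMkernel code: the 6% threshold comes from the text above, while the `VM` class, the `idle_mb` field and the greedy "idlest first" split are assumptions made purely for illustration.

```python
from dataclasses import dataclass

BALLOON_THRESHOLD = 0.06  # free-memory fraction mentioned in the text


@dataclass
class VM:
    name: str
    idle_mb: int  # hypothetical: idle memory as estimated by the host


def balloon_targets(host_total_mb, host_free_mb, vms, needed_mb):
    """Return {vm name: MB to reclaim}, taking from the idlest VMs first."""
    if host_free_mb / host_total_mb > BALLOON_THRESHOLD:
        return {}  # no contention: the balloon driver stays deflated
    targets = {}
    for vm in sorted(vms, key=lambda v: v.idle_mb, reverse=True):
        if needed_mb <= 0:
            break
        take = min(vm.idle_mb, needed_mb)
        if take > 0:
            targets[vm.name] = take
            needed_mb -= take
    return targets


vms = [VM("sql01", 512), VM("web01", 4096), VM("file01", 2048)]
print(balloon_targets(65536, 3000, vms, needed_mb=5000))
```

In this example the busy `sql01` virtual machine is left alone, because the two virtual machines with more idle memory can cover the whole request.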

Now to fully understand the beauty of the balloon driver, it’s crucial to understand that the VMkernel is not aware of the Guest OS internal memory management mechanisms. Guest OS’s commonly use an allocated memory list and a free memory list. When a guest OS makes a request for a page, ESX will back that page with physical memory. When the Guest OS stops using the page internally, it will remove the page from the allocated memory list and place it on the free memory list. Because no data is changed, ESX will keep storing this data in physical memory.
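
A minimal sketch of this mismatch, assuming a very simplified model: the `Host` and `Guest` classes and their methods are hypothetical names, and real guest operating systems and ESX track pages in far more detail.

```python
class Host:
    """Tracks which guest pages are backed by physical memory."""

    def __init__(self):
        self.backed = set()

    def touch(self, page):
        self.backed.add(page)  # first access: back the page with physical memory


class Guest:
    def __init__(self, host, pages):
        self.host = host
        self.free = list(range(pages))
        self.allocated = []

    def alloc(self):
        page = self.free.pop()
        self.allocated.append(page)
        self.host.touch(page)
        return page

    def release(self, page):
        # Only guest bookkeeping changes; the host is never told.
        self.allocated.remove(page)
        self.free.append(page)


host = Host()
guest = Guest(host, pages=8)
pages = [guest.alloc() for _ in range(6)]
for p in pages[:4]:
    guest.release(p)

print(len(guest.allocated), "pages in use by the guest")
print(len(host.backed), "pages still backed by the host")
```

The guest ends up using two pages while the host still backs six, which is exactly the memory the balloon driver is designed to hand back.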

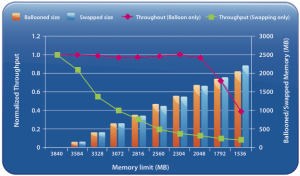

When the balloon driver is activated, it requests the Guest OS to allocate a certain number of pages. Typically the Guest OS will allocate memory that has been idle or is registered in the Guest OS free list. If the virtual machine has enough idle pages, no guest-level paging or, even worse, VMkernel-level paging is necessary. Scott Drummonds tested an Oracle database VM against an OLTP load generation tool and researched the (lack of) impact of the balloon driver on the performance of the virtual machine. The results are displayed in this image:

Scott’s explanation:

Results of two experiments are shown on this graph: in one memory is reclaimed only through ballooning and in the other memory is reclaimed only through host swapping. The bars show the amount of memory reclaimed by ESX and the line shows the workload performance. The steadily falling green line reveals a predictable deterioration of performance due to host swapping. The red line demonstrates that as the balloon driver inflates, kernel compile performance is unchanged.

So the beauty of ballooning lies in the fact that it allows the Guest OS itself to make the hard decision about which pages to page out, without the hypervisor’s involvement. Because the Guest OS is fully aware of its memory state, the virtual machine will keep on performing as long as it has idle or free pages.
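
To make the "as long as it has idle or free pages" condition concrete, here is a small sketch. The function name, the three page categories and the strict free-then-idle-then-active order are simplifying assumptions, not a description of any real guest memory manager.

```python
def inflate_balloon(free, idle, active, request):
    """Return how a guest might satisfy a balloon request of `request` pages.

    free, idle, active: page counts inside the guest (hypothetical model).
    The guest hands over free pages first, then idle pages, and only pages
    out active memory when nothing else is left.
    """
    from_free = min(free, request)
    from_idle = min(idle, request - from_free)
    from_active = min(active, request - from_free - from_idle)
    return {
        "from_free": from_free,
        "from_idle": from_idle,
        "guest_paging": from_active,  # the only part that hurts performance
    }


print(inflate_balloon(free=300, idle=500, active=1200, request=600))
print(inflate_balloon(free=300, idle=500, active=1200, request=1000))
```

The first request is absorbed entirely by free and idle pages; only the second, larger request forces the guest to page out memory it is actively using.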

When ballooning is disabled

When we follow the recommendations of non-VMware experts and disable ballooning, the following memory reclamation techniques remain available:

1. Transparent Page Sharing

2. Memory compression

3. Host-level swapping (.vswp)

Memory compression

Memory compression was introduced in vSphere 4.1. The VMkernel will always try to compress memory before swapping. This feature is very helpful and a lot faster than swapping. However, the VMkernel will only compress a memory page if the compression ratio is 50% or more; otherwise the page will be swapped. Furthermore, the default size of the compression cache is 10% of the virtual machine’s configured memory. If the compression cache is full, one compressed page must be replaced in order to make room for a new page, and the older page will be swapped out. During heavy contention, memory compression becomes merely the first stop before a page ultimately ends up being swapped.
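
The decision logic described above can be sketched as follows. This is an illustration only: `zlib` stands in for whatever compressor the VMkernel uses, the cache capacity is counted in pages rather than as a percentage, and `reclaim_page` is a hypothetical name.

```python
import os
import zlib
from collections import OrderedDict

PAGE_SIZE = 4096


def reclaim_page(page_id, data, cache, cache_capacity, swapped):
    """Compress a page into the cache if it shrinks to half size or less, else swap it."""
    compressed = zlib.compress(data)
    if len(compressed) > PAGE_SIZE // 2:
        swapped.append(page_id)  # poor ratio: straight to the swap file
        return "swapped"
    if len(cache) >= cache_capacity:
        oldest, _ = cache.popitem(last=False)  # evict the oldest compressed page
        swapped.append(oldest)
    cache[page_id] = compressed
    return "compressed"


cache, swapped = OrderedDict(), []
print(reclaim_page("a", bytes(PAGE_SIZE), cache, 2, swapped))       # all zeros, compresses well
print(reclaim_page("b", os.urandom(PAGE_SIZE), cache, 2, swapped))  # random data, does not
print(reclaim_page("c", b"ab" * 2048, cache, 2, swapped))
print(reclaim_page("d", b"xy" * 2048, cache, 2, swapped))           # cache full, oldest page evicted
print(list(cache), swapped)
```

Note how page "a" still ends up swapped once the cache fills: compression delays swapping under sustained contention, it does not prevent it.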

Increasing the memory compression cache can be counterproductive: the cache is part of the virtual machine’s memory usage, so configuring a large compression cache can itself introduce memory pressure or contention.

Host-level Swapping

In contrast to ballooning, host-level swapping does not communicate with the Guest OS. The VMkernel has no knowledge about the status of the page in the Guest OS, only that the physical page belongs to a specific virtual machine. Because the VMkernel is unaware of the content of the data stored inside the page and its significance to the Guest OS, it could happen that the VMkernel decides to swap out specific Guest OS kernel pages. Guest OS kernel pages will never be swapped by the Guest OS itself, as they are crucial to maintaining kernel performance.

So by disabling ballooning, you have just deactivated the most intelligent memory reclamation technique, leaving the VMkernel with the option to either compress a memory page or just rip out a completely random (and maybe crucial) page, which significantly increases the possibility of deteriorating the virtual machine’s performance. To me, that does not sound like something worth recommending.
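
A rough way to see the difference is to count how many actively used pages each approach hits. The model below is an assumption-laden toy: pages are numbered so that the first `active` pages are the busy ones, host swapping is modelled as a uniform random pick, and both function names are made up for this example.

```python
import random


def active_pages_hit_by_host_swap(total, active, reclaim, seed=0):
    """Host swapping: pick pages blindly, count how many were active."""
    rng = random.Random(seed)
    victims = rng.sample(range(total), reclaim)
    return sum(1 for page in victims if page < active)  # pages 0..active-1 are active


def active_pages_hit_by_balloon(total, active, reclaim):
    """Ballooning: the guest gives up non-active pages first."""
    return max(0, reclaim - (total - active))


total, active, reclaim = 1000, 600, 300
print("balloon  :", active_pages_hit_by_balloon(total, active, reclaim))
print("host swap:", active_pages_hit_by_host_swap(total, active, reclaim))
```

With 400 non-active pages available, the balloon reclaims 300 pages without touching active memory, while a blind pick of 300 pages can be expected to land on active pages roughly in proportion to their share of the total.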

Alternative to disabling the balloon driver while guaranteeing performance?

The best option to guarantee performance is to use the resource allocation settings; shares and reservations.

Use shares to define priority levels and use reservations to guarantee physical resources even when the VMkernel is experiencing resource contention.
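
The effect of a reservation on reclamation is simple arithmetic: memory protected by the reservation is off limits, so only the remainder can be ballooned, compressed or swapped. A minimal sketch, where `max_reclaimable_mb` is a hypothetical helper and other limits on the balloon size are ignored:

```python
def max_reclaimable_mb(configured_mb, reservation_mb):
    """Memory the host may still reclaim from a VM under contention."""
    if not 0 <= reservation_mb <= configured_mb:
        raise ValueError("reservation must be between 0 and the configured size")
    return configured_mb - reservation_mb


for reservation in (0, 12288, 16384):
    print(reservation, "MB reserved ->", max_reclaimable_mb(16384, reservation), "MB reclaimable")
```

A 16 GB virtual machine with a 16 GB reservation leaves nothing to reclaim, which is why a full reservation makes disabling the balloon driver unnecessary.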

How reservations work, and the impact they have on the virtual infrastructure, is described in the articles “Impact of Memory reservations”, “Resource Pools memory reservations” and “Reservations and CPU scheduling”.

However, setting reservations will impact the virtual infrastructure. A well-known impact of setting a reservation is on the HA slot size if the cluster is configured with the “Host failures cluster tolerates” policy. More info on HA can be found in the HA deep dive on yellow-bricks. To circumvent this impact, one might choose to configure the HA cluster with the HA policy “Percentage of cluster resources reserved as failover spare capacity”. Due to the HA-DRS integration introduced in vSphere 4.1, the main caveat of that policy, dealing with fragmented clusters, is resolved.
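
The slot size effect can be illustrated with a simplified calculation. The sketch assumes the memory slot size equals the largest reservation plus memory overhead of any powered-on virtual machine and ignores the CPU side entirely; the function names, dictionary keys and numbers are made up for the example.

```python
def memory_slot_size_mb(vms):
    """Largest (reservation + overhead) among powered-on VMs."""
    return max(vm["reservation_mb"] + vm["overhead_mb"] for vm in vms)


def memory_slots_per_host(host_mb, slot_mb):
    return host_mb // slot_mb


vms = [
    {"reservation_mb": 0, "overhead_mb": 150},
    {"reservation_mb": 512, "overhead_mb": 150},
]
before = memory_slot_size_mb(vms)

# One SQL VM with a full 16 GB reservation joins the cluster.
vms.append({"reservation_mb": 16384, "overhead_mb": 300})
after = memory_slot_size_mb(vms)

print(before, "MB slot ->", memory_slots_per_host(65536, before), "slots per 64 GB host")
print(after, "MB slot ->", memory_slots_per_host(65536, after), "slots per 64 GB host")
```

A single large reservation inflates the slot size for every virtual machine in the cluster, which is the reason to consider the percentage-based policy instead.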

Disabling the balloon driver will likely worsen the performance of the virtual machine when the ESX host experiences resource contention. I suspect that the advice given by other-vendor experts is really meant to avoid memory reclamation, and the only two built-in, recommended mechanisms to help avoid memory reclamation are the resource allocation settings: shares and reservations.

Very interesting, these non-VMware experts, aka MS people.

This was a very good read and I have to say very informative. I thought the approach of reservations/resource pools was really a nice way of addressing the issue altogether. Going back to the basics. The things you pointed out were very helpful, especially when you are a large enterprise and you don’t weigh the decisions or have time to actually test things thoroughly. In class I also remember going over these things as well. We just disabled LPS in our production clusters but right now we are not even close to capacity on systems (75%). Since we are looking at running SQL on our next cluster this was very helpful. Thanks Frank!

Hi Frank,

Firstly, thank you for this great informative article.

I am one of those ‘non-vmware experts’ that recommend disabling the VMWare Memory Balloon Driver due to its adverse effects on virtualised SQL Servers. These are effects seen in the field, not in some controlled test.

SQL Server requires sufficient RAM to perform efficiently. This is typically the majority of RAM accessible to the OS (leaving 2GB plus for the OS itself) on dedicated SQL Servers. The VMWare Memory Balloon Driver has the potential to reduce the RAM accessible to the OS in an unpredictable way (as do other VMWare memory reclamation techniques). By unpredictable I mean that, from the Virtual Machine perspective, there is no way of knowing how much memory will be reclaimed, where this memory will be reclaimed from (kernel or user), and whether this reclaimed memory will be returned. This can also result in ‘swapping’ at the guest level, which is disastrous for performance.

We have also seen many instances of the VMWare Memory Balloon Driver never releasing its reclaimed memory (not deflating the Balloon), leaving virtualised SQL Servers in a degraded state. There is anecdotal evidence that the VMWare Memory Balloon Driver activates well above the threshold you mentioned (6% of RAM free on Host).

I agree that the intention is to ‘Ensure that enough physical memory resources are available to the virtual machine to keep the VMkernel from reclaiming memory of that particular virtual machine’ and would further recommend ‘reservations’ for this purpose.

In conclusion, I would still recommend a client disable the VMWare Memory Balloon Driver in order to prevent its unpredictable effects on the virtualised SQL Server. I accept that other measures (reservations) should also be taken to ensure the performance of the virtualised SQL Server from a memory perspective.

Just disabling ballooning isn’t enough because other reclamation could happen that’s worse. So then you have to set a reservation anyway. But setting the reservation prevents ballooning from happening anyhow.

So just do the reservation.

I’ve seen in 4.1 this behaviour several times. No matter that you reserve the total amount of configured memory and wait hours. No matter that you induce a lot of memory activity on the VM, once the balloon has inflated it won’t deflate even if 100% of the configured memory is reserved.

The results displayed in that image are for one host on dedicated storage, so you only get that throughput in fantasy.

I think I am one of these so-called experts that recommend turning this off. The reason is basically that it doesn’t work the way VMware says it works, either in white papers or in blogs.

A lot of bloggers and a lot of white paper people are not practical; they know the theory but haven’t a clue about reality.

So, what is my problem? My problem is that if you use VMware’s memory ballooning, set up by a VMware expert, and things break, people complain about a slow SQL, a slow Exchange, a slow Citrix XenApp. 99% of the time I have found that the problem is on the hypervisor side. Turning this feature off fixes the problem.

Yes, I know there is a sales argument to reach a higher consolidation ratio and higher memory utilization, but I am not in the business of sales, I am in the business of stability, functionality and usability. Unfortunately most customers start overcommitting resources before they even get some load on it. For example, they love to have 24 VMs on a vSphere host, because VMware sales told them that it works, even though the machine only has like 8 cores…. and every VM requires 2 vCPUs.

Not to talk about memory. Have you ever done a VDI project? Have you seen that some customers, mostly those working with VMware vSphere, preferably overcommit memory as a start, causing stress on the disk subsystem out of the box? So in what way is this feature good? If people cannot control it, don’t use it. If educated VMware consultants cannot make this work without breaking things, don’t use it. It is a very simple “why not” argument.

I saw a sign a year ago, VMware had a booth and doing some commercials for themselves, it said 87% of our customers are using our software for test.. well maybe it is just that.. VMware assumes people use the software for test and not for production.

I am a bit upset reading this type of blog, because blogs like this cause chaos. All the customers I know have had issues with this feature; all I know that turned it off haven’t! And I am not the expert who set the VMware vSphere environment up, others are.

Peter W

Peter,

Maybe it is me, but if you turn off ballooning the only thing you are doing is pushing the problem down the stack. If you over commit you will swap instead of balloon. Regardless of what anyone says I am certain that ballooning has less performance impact than pure swapping. Disabling ballooning doesn’t solve the problem as it is not ballooning that causes the problem, it is the Admin who decides to overcommit his systems to such a level that ballooning and other reclamation techniques are required to ensure the stability of the hypervisor. If you don’t want to overcommit and avoid ballooning reserve memory, but don’t disable your life saver. If you are responsible for stability of the environment why would you disable your lifeline?

Chaos isn’t caused by a blog like this one, chaos is caused by severe overcommitment and misunderstanding of fundamental concepts and features. On top of that, VMware is very much aware that our customers use the software in production environments. We work with these customers on a day-to-day basis!

If needed, don’t hesitate to open a topic on the VMTN community if you ever face issues which in your opinion are caused by the balloon driver. Make me aware of it and I promise to look into it.

Duncan

yellow-bricks.com (chaos causing blog ;-))

Hi Frank,

I’m currently studying for my VCAP-DCA and on chapter 3 DRS/Performance and have been researching a lot of info from the blueprint…

I came across my previous question I posed on yellow bricks above regarding SQL and vmware best practices not necessarily converging.

I had another related question regarding the threshold of 94% before ballooning kicks in. What about the situation where you use admission control in an N+2 scenario, like we do? We have sets of 5-node clusters with 40% failover capacity under Admission Control to allow for 2 host failures in a “POD”. Does the ballooning threshold now relate to the 60% of resources on each host that is actually “available” to be used? It seems this would make sense, as in normal operation HA would prefer not to use the top slice of 40% on each host. Maybe it just behaves as normal, as the HA and memory management modules are probably independent, but I’d appreciate your view anyway…

All the best,

Paul

Hi Frank,

This was an excellent read and very informative. I have been banging my head for weeks trying to figure out why my VMs were triggering our monitoring system by consuming 98%+ of the RAM. The VM initially was allocated 4GB, and it used it up. We allocated another 4GB and all 8GB were gone. Finally we moved it up to 16GB with the same results. After reading, I set the VM to 8GB, with a reservation and limit of 8GB, and no more alerts. Even the performance is greatly improved. I have to wait and see what the longer term side effects are, but for now things look good. I hope your article helps others as much as it did me. Thank you!

I still don’t get what the ballooning concept is. Could someone please explain it in a simple manner, with an example if possible? Please.