vSphere 5.1 vMotion enables a virtual machine to change its datastore and host simultaneously, even if the two hosts don’t have any shared storage in common. For me this is by far the coolest feature in the vSphere 5.1 release, as this technology opens up new possibilities and lays the foundation for true portability of virtual machines. As long as two hosts have an (L2) network connection, we can live migrate virtual machines. Think about the possibilities this feature brings as some of the current limitations are eventually solved: think inter-cloud migration, think follow-the-moon computing, think big!

The new vMotion provides a new level of ease and flexibility for virtual machine migrations and the beauty of this is that it spans the complete range of customers. It lowers the barrier for vMotion use for small SMB shops, allowing them to leverage local disk and simpler setups, while big datacenter customers can now migrate virtual machines between clusters that may not have a common set of datastores between them.

Let’s have a look at what the feature actually does. In essence, this technology combines vMotion and Storage vMotion. But instead of either copying the compute state to another host or the disks to another datastore, it is a unified migration where both the compute state and the disk are transferred to a different host and datastore. All of this is (usually) done via the vMotion network. The moment the new vMotion was announced at VMworld, I started to receive questions. Here are the most interesting ones, which allow me to give you a little more insight into this new enhancement.

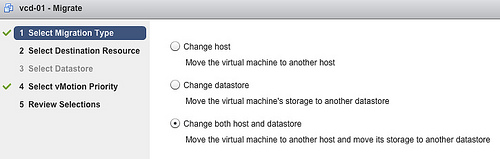

Migration type

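One of the questions I have received is: will the new vMotion always move the disk over the network? This depends on the vMotion type you have selected. When selecting the migration type, three options are available: change host (vMotion), change datastore (Storage vMotion), or change both host and datastore (the new vMotion).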

This may be obvious to most, but I just want to highlight it again. A Storage vMotion will never move the compute state of a VM to another host while migrating the data to another datastore. Therefore, when you just want to move a VM to another host, select vMotion; when you only want to change datastores, select Storage vMotion.

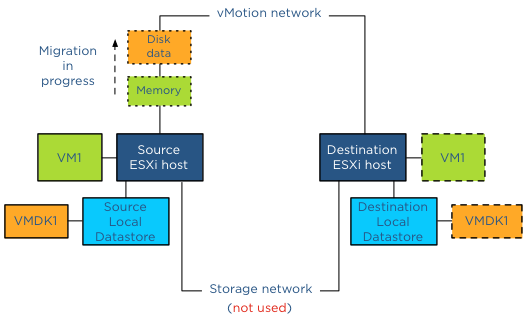

Which network will it use?

vMotion will use the designated vMotion network to copy the compute state and the disks to the destination host when copying disk data between non-shared disks. This means that you need to take the extra load into account when the disk data is being transferred. Luckily the vMotion team improved the vMotion stack to reduce the overhead as much as possible.
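To get a feel for that extra load, a back-of-the-envelope estimate helps. The sketch below is plain arithmetic, not a model of the vMotion stack: the function name, the disk size, the link speeds and the `efficiency` factor are all hypothetical values chosen for illustration.

```python
def transfer_seconds(disk_gb: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Rough time to push `disk_gb` (decimal gigabytes) over one link.

    `efficiency` is an assumed fraction of line rate that is actually
    usable for disk data; it is a placeholder, not a measured value.
    """
    bits_to_send = disk_gb * 8 * 1e9           # gigabytes -> bits
    usable_bits_per_second = link_gbps * 1e9 * efficiency
    return bits_to_send / usable_bits_per_second
```

Under these assumptions, a 100 GB disk would keep a single 1GbE vMotion link busy for roughly 17 minutes (`transfer_seconds(100, 1)`), and a 10GbE link for a little under 2 minutes (`transfer_seconds(100, 10)`), before any memory is copied.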

Does the new vMotion support multi-NIC for disk migration?

The disk data is picked up by the vMotion code, which means vMotion transparently load balances the disk data traffic over all available vMotion vmknics. vSphere 5.1 vMotion leverages all the enhancements introduced in vSphere 5.0, such as Multi-NIC support and SDPS. Duncan wrote a nice article on these two features.

Is there any limitation to the new vMotion when the virtual machine is using shared vs. unshared swap?

No, either will work, just as with the traditional vMotion.

Will the new vMotion features be leveraged by DRS/DPM/Storage DRS?

In vSphere 5.1, DRS, DPM and Storage DRS will not issue a vMotion that copies data between datastores. DRS and DPM continue to leverage traditional vMotion, while Storage DRS issues Storage vMotions to move data between datastores in the datastore cluster. Maintenance mode, a part of the DRS stack, will not issue a data-moving vMotion operation. Data-moving vMotion operations are more expensive than traditional vMotion, and the cost/risk benefit must be taken into account when making migration decisions. A major overhaul of the DRS algorithm code is necessary to include this in the framework, and this was not feasible during this release.

How many vMotion operations that copy data between datastores can I run concurrently?

A vMotion that copies data between datastores will count against a host’s limits for both concurrent vMotions and concurrent Storage vMotions. In vSphere 5.1 one cannot perform more than 2 concurrent Storage vMotions per host. As a result, no more than 2 concurrent vMotions that copy data will be allowed. For more information about the costs of the vMotion process, I recommend reading the article “Limiting the number of Storage vMotions”.
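The double accounting described above can be sketched as a simple slot counter. This is a deliberately simplified illustration, not how ESXi tracks migration costs internally: the class, method and operation names are hypothetical, and only the Storage vMotion limit of 2 per host comes from this article. The vMotion limit is left as a parameter the caller has to supply.

```python
from dataclasses import dataclass

# From the article: at most 2 concurrent Storage vMotions per host in vSphere 5.1.
STORAGE_VMOTION_LIMIT = 2


@dataclass
class HostMigrationSlots:
    """Hypothetical per-host bookkeeping of running migrations."""
    vmotion_limit: int                 # assumption: supplied by the caller
    active_vmotions: int = 0
    active_storage_vmotions: int = 0

    def try_start(self, kind: str) -> bool:
        """Admit a migration if every limit it counts against has room."""
        needs_vmotion = kind in ("vmotion", "data_copying_vmotion")
        needs_storage = kind in ("storage_vmotion", "data_copying_vmotion")
        if not (needs_vmotion or needs_storage):
            raise ValueError(f"unknown migration kind: {kind}")
        if needs_vmotion and self.active_vmotions >= self.vmotion_limit:
            return False
        if needs_storage and self.active_storage_vmotions >= STORAGE_VMOTION_LIMIT:
            return False
        # A data-copying vMotion consumes one slot of each type.
        if needs_vmotion:
            self.active_vmotions += 1
        if needs_storage:
            self.active_storage_vmotions += 1
        return True
```

With `HostMigrationSlots(vmotion_limit=4)`, two calls to `try_start("data_copying_vmotion")` succeed and a third is refused, while traditional vMotions can still be admitted until the assumed vMotion limit is reached.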

How is disk data migration via vMotion different from a Storage vMotion?

The main difference between vMotion and Storage vMotion is that vMotion does not “touch” the storage subsystem for copy operations between non-shared datastores, but transfers the disk data via an Ethernet network. Due to the possibility of longer distances and higher latency, disk data is transferred asynchronously. To cope with higher latencies, a lot of changes were made to the buffer structure of the vMotion process. However, if vMotion detects that the Guest OS issues I/O faster than the network transfer rate, or that the destination datastore is not keeping up with the incoming changes, vMotion can switch to synchronous mirror mode to ensure the correctness of data.

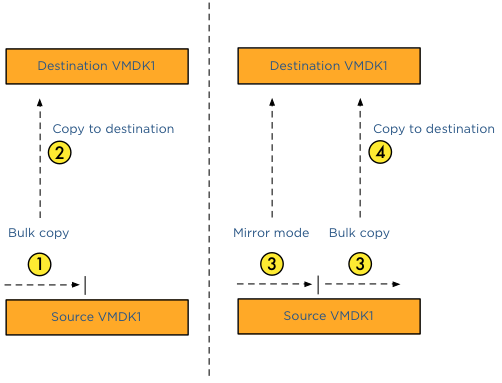

I understand that the vMotion module transmits the disk data to the destination, but how are changed blocks during migration time handled?

For disk data migration, vMotion uses the same architecture as Storage vMotion to handle disk content. There are two major components in play: the bulk copy and the mirror mode driver. vMotion kicks off a bulk copy and copies as much content as possible to the destination datastore via the vMotion network. During this bulk copy, blocks can be changed; some of these blocks are not yet copied, while others already reside on the destination datastore. If the Guest OS changes blocks that are already copied by the bulk copy process, the mirror mode driver will write them to both the source and destination datastore, keeping them in lock-step. The mirror mode driver ignores all blocks that are changed but not yet copied, as the ongoing bulk copy will pick them up. To keep the I/O performance as high as possible, a buffer is available for the mirror mode driver. If high latencies are detected on the vMotion network, the mirror mode driver can write the changes to the buffer instead of delaying the I/O writes to both source and destination disk. If you want to know more about the mirror mode driver, Yellow Bricks contains an out-take of our book about the mirror mode driver.
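The interplay between the bulk copy and the mirror mode driver can be illustrated with a toy simulation. This is a sketch of the idea only, assuming a disk modelled as a Python list of blocks and guest writes scheduled at fixed points in the copy; the function and parameter names are hypothetical, and the buffer for high-latency links is left out.

```python
def migrate_disk(source, guest_writes):
    """Toy model of bulk copy plus mirroring.

    source       -- list of block contents; mutated to model the live disk
    guest_writes -- dict mapping a bulk-copy position to a list of
                    (block_index, new_value) writes the guest issues
                    just before that block is copied
    Returns the destination disk and the number of mirrored writes.
    """
    dest = [None] * len(source)
    mirrored = 0
    for cursor in range(len(source)):
        for index, value in guest_writes.get(cursor, []):
            source[index] = value              # the guest always writes to the source
            if index < cursor:
                # Block was already bulk-copied: mirror the write to the destination.
                dest[index] = value
                mirrored += 1
            # Otherwise nothing extra happens: the bulk copy picks the block up later.
        dest[cursor] = source[cursor]          # bulk copy of the current block
    return dest, mirrored
```

For example, with `source = list("abcdef")` and `guest_writes = {2: [(0, "X"), (4, "Y")], 5: [(1, "Z")]}`, the writes to blocks 0 and 1 are mirrored because the bulk copy has already passed them, the write to block 4 is not, and the destination still ends up identical to the source.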

What is copied first, disk data or the memory state?

If data is copied from non-shared datastores, vMotion must migrate the disk data and the memory across the vMotion network. It must also process additional changes that occur during the copy process. The challenge is to get to a point where the amount of changed disk blocks and memory is so small that it can be copied over, and the virtual machine switched over between the hosts, before any new changes are made to either disk or memory. Usually the change rate of memory is much higher than the change rate of disk, and therefore the vMotion process starts off with the bulk copy of the disk data. After the bulk copy is completed and the mirror mode driver processes all ongoing changes, vMotion starts copying the memory state of the virtual machine.
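Why the change rate matters can be shown with a simplified iterative pre-copy model: each round resends what was dirtied during the previous round, and the switchover happens once the remainder is small enough. This is a generic textbook-style sketch, not VMware's actual algorithm; the function name, the units (MB and MB/s), the threshold and the round cap are all assumptions made for illustration.

```python
def precopy_rounds(memory_mb, dirty_rate_mb_s, bandwidth_mb_s,
                   switchover_threshold_mb, max_rounds=50):
    """Return (sizes of each copy round, data left for the final switchover)."""
    remaining = float(memory_mb)
    rounds = []
    for _ in range(max_rounds):
        if remaining <= switchover_threshold_mb:
            break                                   # small enough to switch over
        rounds.append(remaining)
        duration = remaining / bandwidth_mb_s       # time spent on this round
        # Memory dirtied while the round was running must be sent again,
        # but it can never exceed the total memory size.
        remaining = min(float(memory_mb), dirty_rate_mb_s * duration)
    return rounds, remaining
```

In this model, 8192 MB of memory dirtied at 100 MB/s over a 1000 MB/s link shrinks by a factor of ten per round and drops below a 10 MB threshold after three rounds. If the dirty rate equals or exceeds the bandwidth, the remainder never shrinks, which is the situation that starting with the slower-changing disk data helps to avoid.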

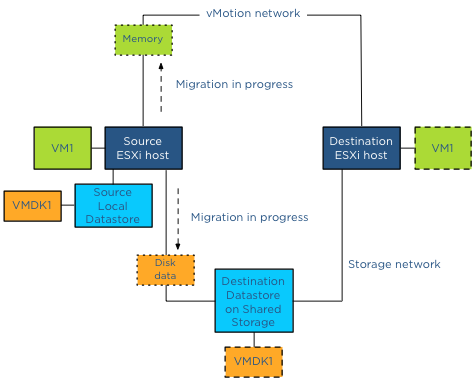

But what if I share datastores between hosts; can I still use this feature and leverage the storage network?

Yes, and this is a very cool piece of code. To avoid overhead as much as possible, the storage network will be leveraged if both the source and destination host have access to the destination datastore. For instance, if a virtual machine resides on a local datastore and needs to be copied to a datastore located on a SAN, vMotion will use the storage network to which the source host is connected. In essence, a Storage vMotion is used to avoid vMotion network utilization and additional host CPU cycles.

Because you use Storage vMotion, will vMotion leverage VAAI hardware offloading?

If both the source and destination host are connected to the destination datastore and the datastore is located on an array that has VAAI enabled, Storage vMotion will offload the copy process to the array.
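The two answers above boil down to a small decision tree, sketched here. The function name, parameter names and return values are hypothetical and only restate the rules described in this article; they are not an actual vSphere API.

```python
def plan_disk_copy(source_sees_dest_datastore: bool,
                   dest_sees_dest_datastore: bool,
                   array_has_vaai: bool) -> dict:
    """Pick the data path for the disk copy, following the rules in the text."""
    both_hosts_connected = source_sees_dest_datastore and dest_sees_dest_datastore
    if not both_hosts_connected:
        # Non-shared case: disk data travels over the vMotion network,
        # so there is no array-side copy to offload.
        return {"path": "vmotion_network", "vaai_offload": False}
    # Shared destination datastore: a Storage vMotion over the storage
    # network is used, and the array can take over the copy if VAAI is enabled.
    return {"path": "storage_network", "vaai_offload": array_has_vaai}
```

For a VM moving from a local datastore to a VAAI-enabled SAN datastore that both hosts can reach, `plan_disk_copy(True, True, True)` selects the storage network with offloading; if the source host cannot reach the destination datastore, the vMotion network is used regardless of VAAI.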

Hold on, you are mentioning Storage vMotion, but I have an Essentials Plus license. Do I need to upgrade to Standard?

To be honest, I try to keep as far away from the licensing debate as I can, but this seems to be the most popular question. If you have an Essentials Plus license, you can leverage all these enhancements of vMotion in vSphere 5.1. You are not required to use a Standard license if you are going to migrate to a shared storage destination datastore. For any other licensing question or remark, please contact your local VMware SE / account manager.

Update: Essentials Plus customers, please update to vCenter Server 5.1.0a. For more details read the following article: “vMotion bug fixed in vCenter Server 5.1.0a”.

Get notifications of these blog posts and more DRS and Storage DRS information by following me on Twitter: @frankdenneman

vSphere 5.1 vMotion Deep Dive

Hi Frank,

“vSphere 5.1 Enhanced vMotion” (Cross-Host Storage vMotion) with Essentials Plus doesn’t work !

I’ve downloaded and tested 5.1 in my test environment.

I’ve applied an Essentials Plus license and try to move a VM between the hosts (with Web Client, without shared storage) .

But unfortunately this doesn’t work (as advertised). You can only choose “Change Host”, all other options are greyed out (“Storage vMotion is not licensed ..” ….)

If even this doesn’t work, i don’t need to test Essentials Plus + shared storage + enhanced vmotion ..

Hey Max,

I would suggest you submit a bug to VMware support, this shouldn’t be happening

Thanks for reply.

As a VMware Customer I would expect that VMware tested this “exotic” scenario …

But maybe I expected too much …

Max,

I’m curious, which browser (type and version please) do you use?

Tested with IE8 + Firefox 15.0.1 .

Do CPU family limitations still exist for the enhanced vMotion in vSphere 5.1?

For example, can I vMotion a VM running on local storage between these two hosts:

Source: 5.1 host with an Intel 5500 series chip (Nehalem)

Destination: 5.1 host with an Intel E5-2600 series chip (Sandy Bridge)

In a cluster we’d have to enable EVC mode at the Nehalem level for this to work.

How is this handled between 2 stand-alone hosts with just local storage on each in vSphere 5.1?

Thanks!

@Frank

I’ve filed a SR for this issue. VMware Support contacted me and the statement was that Storage vMotion is not in Essentials Plus License and therefore vMotion without the shared storage requirement (“enhanced vMotion”) does NOT work in Essentials Plus.

Then I have given them your blogpost (http://blogs.vmware.com/vsphere/2012/09/vmotion-without-shared-storage-requirement-does-it-have-a-name.html).

Now it is under investigation …

Thanks Max,

Do you have the SR number for me?

SR #12220089109

Hello Frank,

I am handling this SR. Our pricing guides show:

5.x: http://kb.vmware.com/kb/2001113 (Enterprise)

5.1 http://www.vmware.com/files/pdf/vsphere_pricing.pdf (Standard)

Will a cross-host vMotion req. storage vMotion capabilities?

Hello.

I am running into the same issue regarding Essentials Plus!

As far as I understood the vMotion of storage over network will also work in my license…Is this true or not??

At the moment this feature is greyed out in my webclient …

Thanks.

Just to be clear, should this be possible with the essentials plus license or not? (It certainly doesn’t seem to be possible at present.)

Maybe I am missing the point here, but if Enhanced vMotion allows a client to negate the need for a shared datastore – does this then apply to HA features – as if one of the hosts that is suddenly fallen over (ie: loss of power) is then unavailable for access to the local data disks how exactly would a vMotion (via HA) exercise be possible ?

It’s just that vMotion is not necessarily a feature that a SMB would be dying to have as they generally have a far more relaxed change request cycle and as such can probably handle having a VM be shut down and then migrated as has been possible since earlier versions (maybe not Storage vMotion – but copying data from one data store to another via the vCenter host has always been available – may be slow/timely but once again refers to the fact that a SMB can probably handle this sort of downtime).

So with the fact that VSA is now also available with almost all of the versions (Essentials Plus has a “Essentials variant”) – would it not be preferred to rather use VSA which (as far as I am aware) supports all of the HA features as well ?

Frank,

In vCloud, using an Allocation Pool OVDC attached to a PVDC backed by multiple root clusters, it seems we have the ability to manually transfer VM’s between those clusters? I’m assuming that because the root clusters don’t share the same datastores (FC), it’s leveraging the L2 network to v/svmotion, Can you confirm this is the case across vSphere5.1 root clusters in vCloud? My other question is, if this works for Allocated Pools, why doesn’t this work for Reserved Pool when all that’s being done automatically by vCloud is initial vApp placement?

Hi,

Update from my SR (#12220089109):

VMware has acknowledged that this is a bug and it will be fixed in an Express Patch (coming soon).

Max, thanks for filing the SR, we managed to get the bugfix in the express patch. For more information: http://frankdenneman.nl/vmware/vmotion-bug-fixed-in-vcenter-server-5-1-0a/

Hi Frank,

Thanks for the article, looking forward to read more in your book, however I have a question for which I could not find anything in the documentation and which needs a quick response (I could not test this in our testlab, due to a HW upgrade we are performing):

– Will Unified vMotion work from ESXi 4.x to ESXi 5.1 hosts? My educated guess would be no, but I would appreciate your comments on this.

Thanks.

how exactly are you able to limit the amount of concurrent vmotion operations? Let’s say I am on a less than optimal network and want to limit to 3 vmotions at a time so when I place a host in a cluster into maint mode, I don’t overkill the network?

Hello. Thanks for the great articles! I have been reading your posts for a while. Now I have run into an issue with vMotion and hope you can give me some expert advice. I have the following configuration: blades with InfiniBand mezzanines and vSphere 4.1 installed, connected to non-branded storage. The primary path to storage uses iSCSI over IPoIB (I know this configuration is “unusual” in the VMware world, but that’s what I must deal with). It has 10Gbps throughput (with current drivers), so I was comfortable with this. (S)vMotion traffic was going through this pipe and it was quite fast. Some time ago I decided to configure a reserve path with iSCSI over Ethernet. I created a new portgroup in another distributed switch, so I have 2 distributed switches: the first one has an IPoIB uplink interface and the second one has two 1Gbps uplinks. The iSCSI portgroup uses a different VLAN from the management network (which also resides in the 2nd dvs) and has its own vmkernel interface. After I configured this reserve channel, my Storage vMotion traffic goes through this pipe, which as you can understand is much slower. How can I force this traffic to switch back to IPoIB instead of Ethernet?

Hi thanks for your feedback.

My question would be, why have you configured the secondary network? How does it benefit your environment?

My purpose is to have some kind of multipath configuration so I can switch to Ethernet in case IB goes down (or during IB infrastructure maintenance). Right now the SAN isn’t heavily loaded, and I think 2Gbps would be enough to keep the VMs working until I switch back to IB.

Hi ,

I have just started learning vMotion feature.

I have some basic questions

1. Say I have enabled vMotion on the Management network and on another, dedicated network as well. Which NIC will be used for vMotion if Multi-NIC is not configured?

2. Every VM has a unique ID that identifies it across the virtual environment. When and how does vMotion keep track of it when the VM starts executing on the destination host?

3. When does the VM start processing all its applications on the destination host during vMotion?

I mean, in which phase? Phase 2: Precopy Phase, or Phase 3: Switchover Phase?

please clarify .

-Vikas

Sorry to drag up an old topic, but I’m trying to design (re-design!) my storage.

I currently run Essentials Plus 5.0 on 3 hosts and use an iSCSI-based SAN consisting of two storage machines in active:passive failover. My VMware datastores are running on the SAN storage. Currently, should one storage box fail, the other takes over quickly enough for the VMs to continue running. Sadly, performance isn’t great (SATA drives) and I’m looking to increase it before I move my last physical server (Exchange) onto a VM.

If I upgrade to 5.1, install HDs on the hosts and use the VMware vSphere Storage Appliance to create a shared store from nice fast SAS drives on the hosts, would I be able to set up the cluster so that VMs will automatically be moved to a running host in the event of a hardware failure on one host?

The other option is simply to upgrade the existing SAN boxes, but the cost is high (my employer is at the S end of SME!) 🙂 I would rather have a smaller, faster store for the VMs to use as system/application partitions and keep the existing SAN arrangement for user data, for which it is perfectly adequate.

Thanks for any answers!

Fantastic write up.

Soon all we will need is a Backblaze, as VMware is taking over the smarts from the storage vendors.

http://blog.backblaze.com/2009/09/01/petabytes-on-a-budget-how-to-build-cheap-cloud-storage/

Running ZFS with dedupe or compression and you have yourself some cheap storage.

Frank,

Great article! I do have a couple of questions about vMotion without shared storage. They came up in class and in a discussion with colleagues. We could not find answers anywhere. Your article did the best job of answering most of our questions. Here are our last few. I see that the data migration begins first and the memory migration begins after the bulk copy. What would happen if my host already had 2 Storage vMotions initiated when we started a vMotion without shared storage? Would it queue as a normal 3rd Storage vMotion would? Also, what would happen if I started a vMotion without shared storage and, while the bulk copy was in progress and before the memory migration began, someone else initiated 4 vMotion migrations with VMs that had shared storage? Would the 4 newer VMs cut in front of the vMotion without shared storage, or would they be behind it, in which case 3 of the 4 would migrate and the 4th would wait until one of the 3 finished? Lastly, can you point me to any good resources to learn more about vMotion without shared storage?

Thanks again for your article!