Duncan posted an article today in which he raises the question: should I use many small LUNs or a couple of large LUNs for Storage DRS? In this article he explains the differences between Storage I/O Control (SIOC) and Storage DRS and why they work well together. To re-emphasize, the goal of Storage DRS load balancing is to fix long-term I/O imbalances, while SIOC addresses short-term bursts and loads. SIOC is all about managing the queues, while Storage DRS is all about intelligent placement and avoiding bottlenecks.

Julian Wood makes an interesting remark, one that both Duncan and I often hear when discussing SIOC. Don’t get me wrong, I’m not picking on Julian; I’m merely pointing out that he made a frequently used argument.

“There is far less benefit in using Storage IO Control to load balance IO across LUNs ultimately backed by the same physical disks than load balancing across separate physical storage pools.”

Well, when you look at the way SIOC works, I tend to disagree with this statement. As stated before, SIOC manages queues: the queues to the datastores used by the virtual machines in the virtual datacenter. Typically these virtual machines differ in workload type, in peak moments, and in importance to the organization. With disk shares, important virtual machines can be assigned a higher priority within the disk queue. When contention occurs, and this is important to realize, only when contention occurs, these business-critical virtual machines get prioritized over other virtual machines. Not all important virtual machines generate a constant stream of I/O, while other virtual machines, maybe with a lower priority, do generate a constant stream of I/O. Disk shares let the high-priority, low-I/O virtual machines get a foot in the door and get their I/Os to the datastore and back. Without SIOC and disk shares you need to start thinking about increasing the queue depth of each host and about smart placement of these virtual machines (both high and low I/O load) to avoid the high-I/O virtual machines ending up on the same host. These placement adjustments might impact DRS load balancing operations, possibly affecting other virtual machines along the way. Investing time in creating and managing a matrix of possible VM-to-datastore placements is not the way to go in this era of rapidly expanding datacenters.
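To make the effect of disk shares under contention concrete, here is a minimal sketch of share-proportional allocation. It is not SIOC's actual scheduler; the function name `allocate_by_shares`, the share values, and the IOPS figures are all assumptions chosen for illustration. Each virtual machine is offered capacity in proportion to its shares, never receives more than it asks for, and anything it leaves unused is redistributed to the others.

```python
def allocate_by_shares(capacity, vms):
    """Divide `capacity` IOPS among VMs in proportion to their shares.

    vms maps a VM name to (shares, demand). A VM never receives more
    than its demand; unused capacity is handed to the remaining VMs.
    Illustrative only: this is not the real SIOC algorithm.
    """
    granted = {name: 0.0 for name in vms}
    active = {name for name, (_, demand) in vms.items() if demand > 0}
    remaining = float(capacity)
    while active and remaining > 1e-9:
        total_shares = sum(vms[name][0] for name in active)
        satisfied = set()
        handed_out = 0.0
        for name in active:
            shares, demand = vms[name]
            offer = remaining * shares / total_shares
            take = min(offer, demand - granted[name])
            granted[name] += take
            handed_out += take
            if demand - granted[name] <= 1e-9:
                satisfied.add(name)
        remaining -= handed_out
        if not satisfied:
            break  # every active VM took its full offer; nothing left to give
        active -= satisfied
    return granted


# A high-priority VM with little I/O next to a low-priority VM with a lot.
result = allocate_by_shares(
    1000,
    {"critical": (2000, 200), "batch": (500, 5000)},
)
print(result)  # critical gets its full 200, batch gets the remaining 800
```

The high-priority, low-I/O virtual machine gets everything it asks for, while the busy low-priority virtual machine absorbs what is left. When both virtual machines ask for more than the datastore can deliver, the split follows the share ratio instead.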

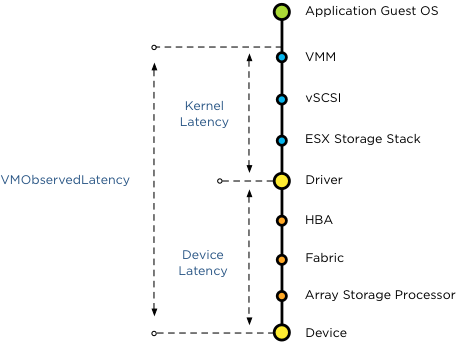

Because SIOC is a datastore-wide scheduler, it determines the queue depth of each ESX host that runs virtual machines on the datastore. Hosts with higher-priority virtual machines get “deeper” queue depths to the datastore, and hosts with lower-priority virtual machines on the datastore receive shallower queue depths. To be more precise, SIOC calculates the datastore-wide latency, and each local host scheduler determines the queue depth for its queue to the datastore.

But remember, queue depth changes only occur when there is contention, that is, when the datastore latency exceeds the SIOC latency threshold. For more info about SIOC latency, read “To which Host level latency statistic is the SIOC threshold related”.
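The behaviour described above can be sketched roughly as follows. This is a simplification under stated assumptions, not the real implementation: the datastore-wide latency is taken as a plain mean of the per-host latencies, and `array_slots`, `max_depth`, and `min_depth` are hypothetical parameters introduced only for this example.

```python
def host_queue_depths(latency_ms, shares, threshold_ms, array_slots,
                      max_depth=32, min_depth=4):
    """Return a queue depth per host for one datastore.

    latency_ms: device latency observed by each host (ms).
    shares:     sum of the disk shares of the VMs each host runs
                on this datastore.
    Below the threshold nothing is throttled. Above it, a hypothetical
    budget of `array_slots` is divided in proportion to the shares.
    """
    datastore_latency = sum(latency_ms.values()) / len(latency_ms)
    if datastore_latency <= threshold_ms:
        # No contention: every host keeps its full queue depth.
        return {host: max_depth for host in shares}

    total_shares = sum(shares.values())
    depths = {}
    for host, host_shares in shares.items():
        fair = int(array_slots * host_shares / total_shares)
        depths[host] = max(min_depth, min(max_depth, fair))
    return depths


shares = {"esx1": 3000, "esx2": 1000}

# Latency below the threshold: no host is throttled.
print(host_queue_depths({"esx1": 10, "esx2": 12}, shares, 30, 40))

# Latency above the threshold: the host with more shares gets the deeper queue.
print(host_queue_depths({"esx1": 40, "esx2": 36}, shares, 30, 40))
```

The point of the sketch is the shape of the decision: one latency figure for the whole datastore decides whether throttling happens at all, and the shares decide how deep each host's queue is once it does.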

Coming back to the argument, I firmly believe that SIOC has benefits in a shared disk pool structure: a lot of queues exist between the VMM and the datastore.

Because SIOC takes the average device latency of all hosts connected to the datastore into account, it understands the overall picture when determining the correct queue depth for the virtual machines. Keep in mind, queue depth changes occur only during contention. Now the best part of SIOC in 5.1 is Automatic Latency Threshold Computation. By leveraging the SIOC injector, it learns the peak throughput of a datastore and adjusts the SIOC threshold accordingly. The SIOC threshold is set to the latency observed when the datastore runs at 90% of its peak throughput, so SIOC has an excellent understanding of the performance capability of the datastore. This is done on a regular basis, so it keeps the actual workload in mind. This dynamic system will give you far more performance benefit than statically setting the queue depth and DSNRO (Disk.SchedNumReqOutstanding) for each host.
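As a rough illustration of the automatic threshold, assume the injector yields a series of (throughput, latency) samples taken at increasing load, and that the threshold is the latency observed once throughput reaches 90% of the measured peak. The sample data and the function name `auto_threshold_ms` are hypothetical, and this sketch does not claim to mirror the injector's real measurement procedure.

```python
def auto_threshold_ms(samples, fraction=0.9):
    """Pick a latency threshold from injector-style measurements.

    samples: list of (throughput_iops, latency_ms) tuples, ordered by
             increasing load. Hypothetical data, for illustration only.
    Returns the latency of the first sample whose throughput reaches
    `fraction` of the highest throughput seen.
    """
    if not samples:
        raise ValueError("need at least one sample")
    peak = max(throughput for throughput, _ in samples)
    target = fraction * peak
    for throughput, latency in samples:
        if throughput >= target:
            return latency
    raise AssertionError("unreachable: the peak sample always meets the target")


# Throughput flattens out while latency keeps climbing.
samples = [(1000, 2), (2000, 4), (3000, 8), (3700, 15), (3900, 28), (4000, 60)]
print(auto_threshold_ms(samples))  # 15
```

On a curve like this, the last 10% of throughput costs a disproportionate amount of latency, which is why a threshold placed just short of the peak is a sensible point to start throttling.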

One of the main reasons for creating multiple datastores backed by a single disk pool is to create a multi-path environment. Together with advanced multipathing policies and LUN-to-controller-port mappings, you can get the most out of your storage subsystem. With SIOC, you can manage your queue depths dynamically and automatically, based on actual performance levels, while keeping the ability to prioritize at the virtual machine level.

What if the shared backend disk pool is composed of different kinds of disks (FC 15K and SATA 7.2K, for example)? I’m thinking about storage with auto-tiering capabilities: doesn’t it make sense to disable SIOC in cases like this one?

Thanks for your great (and very useful!) articles,

Michael

Hi Frank,

More than happy for my misunderstanding to be used as an example to teach others!

I was under the mistaken impression that SIOC wasn’t just using queue based “learning” and doing IO control on some more fixed based metrics. I can see that SIOC is far more intelligent and by measuring the latency in a datastore it knows exactly what its options are even if there are other non-SIOC enabled datastores backed on the same disks.

I will say, though, that having datastore clusters span separate physical pools allows more flexibility in moving VMs around to alleviate IO issues. If all datastores are backed by the same physical disks, moving VMs around based on IO contention would be useless.

Thanks for the clarification.

Can you provide your thoughts on using SIOC on an array where all disks have all LUNs striped over them (such as 3PAR), and there are non-ESX workloads on the array? Or multiple ESXi clusters managed by different vCenter instances but backed by the same physical disks? And to throw in another wrinkle: say that same array used sub-LUN data tiering, so response times from the probes may vary?

@Michael, that’s the same question I asked on Duncan’s site. The SDRS Interop guide that Frank and Duncan put together states to disable I/O and place it in manual mode (pg. 6 of the doc).

Any insight here would be great. Thanks.

First and foremost, thanks for allowing me to refer to your blog in my upcoming book.

From what I’ve read, it seems to me SIOC does not have inter-datastore shares. It only has shares within a single datastore. This can create a less than ideal result when the number of VMs is not the same across all the datastores. In a sense, it’s like Resource Pools with an uneven number of VMs in each RP.

Here is an example:

We have 2 datastores: 1 large VM on datastore A, 9 small VMs on datastore B.

We have 2 hosts. Let’s say the large VM is on host A because it’s a 16 vCPU VM, and the 9 small VMs are on host B because they are 2 vCPU each. Each host has 16 cores. Actually, the distribution across the hosts does not matter, as the result of SIOC will be the same.

We enable SIOC. All are default settings. So every VM gets the normal share.

In a normal situation, the large VM sends 1000 IOPS, and the small VMs send 4000 IOPS in total. The array can handle 5000 IOPS well. SIOC does not kick in, as all latency is below the threshold. Everyone is happy.

Let’s say something bad happens, as it always does 🙂 Assume someone runs AV scans at the same time, or patches Windows.

The 9 small VMs generate 9000 IOPS in total.

So Host A is sending 1000 IOPS, while Host B is sending 9000 IOPS.

Total is 10K IOPS. But the array can only handle 5000 IOPS. Half of it. SIOC kicked in on both hosts.

SIOC effectively cuts every datastore to half of the IOPS it is asking for.

So datastore A only gets 500 IOPS, and Datastore B gets 4500 IOPS.

This is not what we want. What we want is Datastore A gets 50% of the IOPS, and Datastore B gets 50%. There is a need for inter-datastore sharing.

In this example, the large VM will get 1000 IOPS, while the 9 small VMs will get 4000 IOPS.
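The arithmetic of this example can be reproduced with a short sketch. It compares the two outcomes described above: scaling every datastore down by the same factor, and an equal split between datastores where unused capacity is passed on. Both functions are hypothetical illustrations of the reasoning in this comment and say nothing about how SIOC is actually implemented.

```python
def proportional_throttle(capacity, demand):
    """Scale every datastore's demand by the same factor to fit capacity."""
    total = sum(demand.values())
    scale = min(1.0, capacity / total)
    return {name: iops * scale for name, iops in demand.items()}


def equal_share_split(capacity, demand):
    """Give each datastore an equal share; pass unused capacity on.

    Datastores are served smallest demand first, so whatever a small
    consumer leaves on the table raises the fair share of the rest.
    """
    granted = {}
    remaining = float(capacity)
    pending = sorted(demand, key=demand.get)
    while pending:
        fair = remaining / len(pending)
        name = pending.pop(0)
        granted[name] = min(demand[name], fair)
        remaining -= granted[name]
    return granted


demand = {"A": 1000, "B": 9000}   # IOPS requested per datastore
capacity = 5000                   # IOPS the array can deliver

print(proportional_throttle(capacity, demand))  # A: 500, B: 4500
print(equal_share_split(capacity, demand))      # A: 1000, B: 4000
```

With an equal split, datastore A is entitled to 2500 IOPS but only needs 1000, so datastore B receives the remaining 4000.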

Thanks from the little red dot.

e1