A well-designed vMotion network benefits the environment in many ways. Before vSphere 5, designing a vMotion network was relatively easy: select the fastest NIC and assign it to a vMotion vmknic. vSphere 4.x supports both 1GbE and 10GbE networks, and since vSphere 5.0 vMotion is able to leverage multiple NICs. Multi-NIC vMotion in vSphere 5.x makes the design a bit more challenging. The combination of NICs, the failover order and the load balancing policy all need to be taken into consideration when configuring your vMotion network.

The benefits of a multi-NIC vMotion network

In vSphere 5.x, vMotion balances its operations across all available NICs, both for a single vMotion operation and for multiple concurrent vMotion operations. Using multiple NICs reduces the duration of a vMotion operation. This benefits:

- Manual vMotion processes: Allocating more bandwidth to the vMotion process results in faster migration times. The less time you spend monitoring a manual process, the more time you can spend on other, more important operations.

- DRS load balancing: Based on the average time per migration and the number of concurrent vMotion processes, DRS restricts the number of load balancing operations it can process between two load balancing runs. By providing more bandwidth to the vMotion network, DRS is able to run more load balancing operations in between load balancing runs, which in turn benefits the load balance of the cluster. Better load balance means better resource availability for the virtual machines, which in turn means better performance for your applications.

- Maintenance mode: Faster migration times reduce the time it takes a host to enter maintenance mode. As consolidation ratios increase, the time it takes to migrate all the virtual machines off the host increases as well. This can have an impact on your SLA and the ability to service the host within the allowed time.

Multi-NIC setup

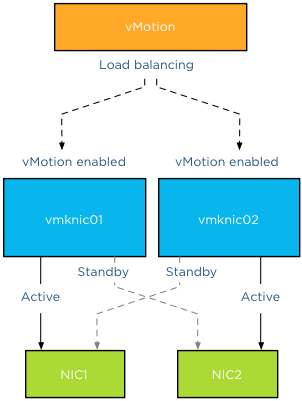

The vMotion process leverages the vmknic instead of sending packets directly to a physical NIC. In order to use multiple NICs for vMotion, multiple vmknics are required. Duncan wrote an excellent article on how to set up a multi-NIC vMotion network.

Failover order and Load balancing policy

Each vmknic should use only one active NIC. This results in only one valid load balancing policy: “Route based on originating virtual port”. This setup rules out physical NIC load balancing such as IP-hash or the load balancing policy “based on physical load (LBT)”. The active/standby failover order is the only valid and supported configuration because of the way vMotion load balancing works. The vMotion process itself handles load balancing: based on its own algorithm, vMotion picks a vmknic for a specific network packet. As vMotion expects the vmknic to be backed by a single physical NIC, sending out data through a given vmknic assures vMotion that the data traverses that dedicated physical NIC. If the physical NICs were configured in a load-balancing mode, this could interfere with the vMotion-level load balancing logic. vMotion would not be able to predict which physical NIC is used by the vmknic, and all vMotion traffic could end up on the same NIC even if vMotion sends the data to different vmknics.
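To make the active/standby layout concrete, here is a minimal Python sketch that derives a failover order per vMotion port group from a list of uplinks. It only models the rule described above (one active NIC per vmknic, the remaining NICs as standby); it does not talk to vCenter, and the port group names and `vmnic` names are made up for illustration.

```python
def build_failover_orders(uplinks):
    """Return one plan per uplink: that uplink active, all others standby.

    The port group names (vMotion-01, vMotion-02, ...) are hypothetical.
    """
    plans = []
    for index, active in enumerate(uplinks, start=1):
        standby = [nic for nic in uplinks if nic != active]
        plans.append({
            "portgroup": f"vMotion-{index:02d}",
            "active": [active],    # exactly one active NIC per vmknic
            "standby": standby,    # every other vMotion NIC is standby
        })
    return plans


plans = build_failover_orders(["vmnic2", "vmnic3"])
for plan in plans:
    print(plan)
```

With two uplinks this gives two port groups whose active and standby NICs mirror each other, so every vmknic maps to exactly one physical NIC while the link is healthy.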

Consistent configuration

Ensure that each physical NIC is configured in a consistent and correct way across the vMotion network on the host and across the hosts inside the cluster. vCenter is very conservative: if it detects a mismatch, it drops the number of concurrent vMotion operations on that host back to 2, regardless of the available bandwidth. Therefore always check at each level that the link speed, MTU and duplex settings are identical, both on the NIC side and on the switch side. Please keep in mind that all the vMotion vmknics should exist in the same subnet. Although it's possible to set static routes, “routable vMotion” configurations are not supported by VMware.
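A consistency check like this is easy to script against an inventory export. The sketch below is one possible way to do it in Python, assuming you have already collected the NIC settings and vmknic addresses yourself; the dictionary keys, NIC names and IP addresses are illustrative, not output from any VMware tool.

```python
import ipaddress


def find_mismatches(nics):
    """Report every setting that is not identical across the given NICs."""
    problems = []
    for key in ("speed_mbps", "mtu", "duplex"):
        values = {nic[key] for nic in nics}
        if len(values) > 1:
            problems.append(f"{key} differs: {sorted(values, key=str)}")
    return problems


def same_subnet(vmknic_interfaces):
    """True when all vmknic addresses (in CIDR notation) share one network."""
    networks = {ipaddress.ip_interface(addr).network for addr in vmknic_interfaces}
    return len(networks) == 1


# Hypothetical inventory data for one host.
nics = [
    {"name": "vmnic2", "speed_mbps": 10000, "mtu": 9000, "duplex": "full"},
    {"name": "vmnic3", "speed_mbps": 10000, "mtu": 1500, "duplex": "full"},
]
print(find_mismatches(nics))
print(same_subnet(["10.0.10.11/24", "10.0.10.12/24"]))
```

In this made-up example the MTU mismatch on vmnic3 is flagged, while both vmknics pass the subnet check.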

Link speed

By default vCenter allows 4 concurrent vMotion operations on a host with a 1GbE vMotion network and 8 concurrent vMotion operations on a host with a 10GbE vMotion network. Be aware that this is based on the detected link speed. In other words, if the physical NIC reports a link speed of at least 10GbE, vCenter allows 8 vMotions, but if the physical NIC reports less than 10GbE, vCenter allows a maximum of 4 concurrent vMotions on that host. To stress it again, the number of concurrent vMotions is based on the detected link speed. For example, take the HP Flex technology. This sets a hard limit on the FlexNICs, resulting in a reported link speed equal to or less than the bandwidth configured at the Flex Virtual Connect level. I’ve come across many Flex environments configured with more than 1Gb of bandwidth, ranging from 2Gb to 8Gb. Although this offers more bandwidth per vMotion process, it does not increase the number of concurrent vMotions; the host remains limited to 4 concurrent vMotion operations.

As mentioned before, vCenter is very conservative; multi-NIC vMotion limits are currently determined by the slowest available vMotion NIC. This means that if you include a 1GbE NIC in your 10GbE vMotion network configuration, that host is restricted to a maximum of 4 concurrent vMotion operations. I’ve seen a few vMotion network designs where a 1GbE link was added to the 10GbE vMotion network configuration, primarily as a safety net, just in case the 10GbE network drops. Well, that safety net just restricted that host to 4 concurrent vMotion operations.
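The rule above boils down to a few lines of logic. The following Python sketch is my own restatement of it, not vCenter code: the function name is hypothetical, link speeds are expressed in Mbps, and it does not model the drop to 2 concurrent operations that a configuration mismatch causes.

```python
def max_concurrent_vmotions(link_speeds_mbps):
    """Per-host limit as described in the text: the slowest vMotion NIC decides."""
    if not link_speeds_mbps:
        raise ValueError("at least one vMotion NIC is required")
    slowest = min(link_speeds_mbps)
    # 8 concurrent vMotions only when every NIC reports at least 10GbE.
    return 8 if slowest >= 10_000 else 4


print(max_concurrent_vmotions([10_000, 10_000]))  # two 10GbE NICs
print(max_concurrent_vmotions([10_000, 1_000]))   # 10GbE plus a 1GbE "safety net"
print(max_concurrent_vmotions([4_000]))           # Flex NIC capped at 4Gb
```

The second and third calls both return 4, which is exactly the trap with the 1GbE safety net and with Flex NICs configured below 10Gb.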

Increase in NICs does not impact number of concurrent vMotions

Using multiple NICs increases the bandwidth available for the vMotion process; it does not increase the number of concurrent vMotions. When 10GbE uplinks are used for vMotion, the maximum number of concurrent vMotions allowed is eight; even when multiple NICs are assigned, the limit remains at eight. However, the increase in bandwidth will decrease the duration of each vMotion process.
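A rough back-of-the-envelope calculation shows the effect. The Python sketch below is deliberately idealized: it assumes the full line rate of every NIC is usable and ignores protocol overhead and memory pages that are dirtied during the migration, so real migrations take longer. The function name and the 64 GB figure are only examples.

```python
def ideal_copy_seconds(memory_gb, nic_speeds_gbps):
    """Lower bound on copy time: memory size divided by aggregate bandwidth."""
    total_gbps = sum(nic_speeds_gbps)
    return memory_gb * 8 / total_gbps  # gigabytes -> gigabits


one_nic = ideal_copy_seconds(64, [10])
two_nics = ideal_copy_seconds(64, [10, 10])
print(f"1 x 10GbE: {one_nic:.1f} s, 2 x 10GbE: {two_nics:.1f} s")
```

Doubling the NICs halves the idealized copy time, while the number of concurrent vMotions the host is allowed to run stays the same.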

A multi-NIC vMotion configuration is slightly more complex than a single-NIC vMotion network, but this setup will benefit you in many ways. Reducing vMotion operation times allows DRS to schedule more load balancing operations per invocation, and the increased bandwidth allows the host to complete the transition to maintenance mode faster. Multi-NIC vMotion is also very helpful when leveraging the new vMotion capability of migrating a virtual machine between hosts and datastores simultaneously.

Part 2 – Multi-NIC vMotion failover order configuration

Part 3 – Multi-NIC vMotion and NetIOC

Part 4 – Choose link aggregation over Multi-NIC vMotion?

Part 5 – 3 reasons why I use a distributed switch for vMotion networks