When a virtual machine is provisioned to the datastore cluster, the Storage DRS algorithm runs to determine the best placement of the virtual machine. The interesting part of this process is how Storage DRS determines the free space of a datastore, or, to be more precise, the improvements vSphere 5.1 makes to the free space calculation and to the method of finding the optimal destination datastore.

vSphere 5.0 Storage DRS behavior

Storage DRS is designed to balance the utilization of the datastore cluster. It selects the datastore with the highest free space value to balance the space utilization of the datastores in the datastore cluster and to avoid out-of-space situations.

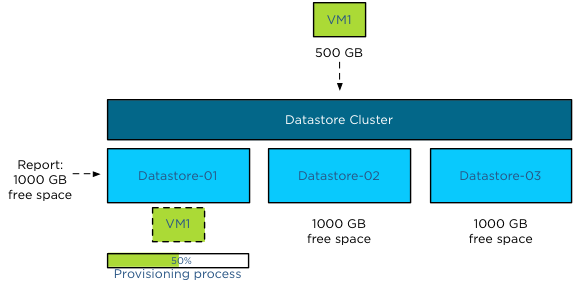

During the deployment of a virtual machine, Storage DRS initiates a simulation to generate an initial placement operation. This simulation is an isolated process that retrieves the current free space values of the datastores. However, the space usage of a datastore is only updated once the virtual machine deployment is completed and the virtual machine is ready to power on. This means that the initial placement process is unaware of any ongoing initial placement recommendations and pending storage space allocations. Let’s use an example to explain this behavior.

In this scenario the datastore cluster contains three datastores, each 1TB in size. No virtual machines are deployed yet, and therefore each datastore reports 100% free space. When deploying a 500GB virtual machine, Storage DRS selects the datastore with the highest reported free space, and as all three datastores are equal it picks the first datastore, Datastore-01. Until the deployment process is complete, Datastore-01 keeps reporting 1000GB of free space.

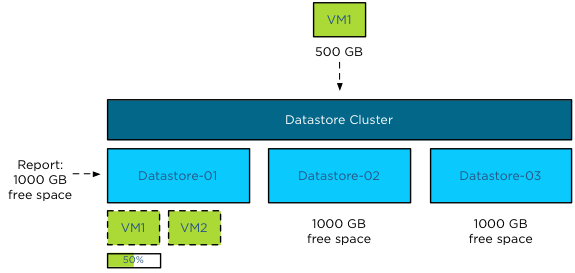

When deploying a single virtual machine this behavior is not a problem; however, when deploying multiple virtual machines it might result in an unbalanced distribution of virtual machines across the datastores.

As the available space is not updated during the deployment process, Storage DRS might keep selecting the same datastore until one (or more) of the provisioning operations completes and the free space value is updated. Using the previous scenario, Storage DRS in vSphere 5.0 is likely to pick Datastore-01 again when deploying VM2 before the provisioning process of VM1 is complete, as all three datastores report the same free space value and Datastore-01 is the first datastore it detects.
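The vSphere 5.0 behavior can be illustrated with a small Python model. This is a simplified sketch, not VMware's implementation, and it rests on three assumptions. First, placement picks the datastore with the most reported free space. Second, ties go to the first datastore. Third, the reported free space only changes when a deployment completes. The function names are hypothetical.

```python
from typing import Dict


def pick_datastore(free_gb: Dict[str, int]) -> str:
    # Highest reported free space wins. On a tie, max() returns the first
    # datastore encountered, mimicking "pick the first datastore".
    return max(free_gb, key=lambda ds: free_gb[ds])


def place_before_completion(free_gb: Dict[str, int], vm_sizes: Dict[str, int]) -> Dict[str, str]:
    # Every placement is decided before any deployment completes, so the
    # reported free space never changes between decisions and the VM sizes
    # are effectively ignored (nothing is reserved).
    return {vm: pick_datastore(free_gb) for vm in vm_sizes}


datastores = {"Datastore-01": 1000, "Datastore-02": 1000, "Datastore-03": 1000}
print(place_before_completion(datastores, {"VM1": 500, "VM2": 500}))
# {'VM1': 'Datastore-01', 'VM2': 'Datastore-01'}
```

Both VMs land on Datastore-01 because neither decision can see the other.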

vSphere 5.1 Storage DRS behavior

Storage DRS in vSphere 5.1 behaves differently. Because Storage DRS in vSphere 5.1 supports vCloud Director, it was vital to support the provisioning process of a vApp that contains multiple virtual machines.

Enter the storage lease

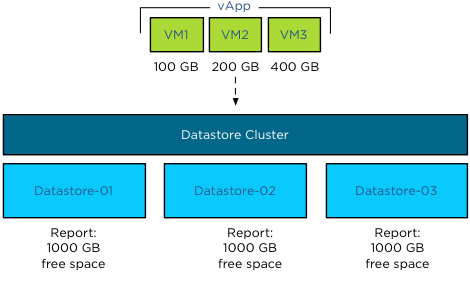

Storage DRS in vSphere 5.1 applies a storage lease when deploying a virtual machine on a datastore. This lease “reserves” the space and makes deployments aware of each other, thus avoiding suboptimal or invalid placement recommendations. Let’s use the deployment of a vApp as an example.

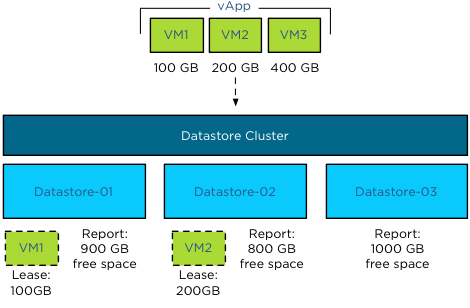

The same datastore cluster configuration is used: each datastore is empty and reports 1000GB of free space. The vApp consists of three virtual machines, VM1, VM2 and VM3, which are 100GB, 200GB and 400GB in size respectively. During the provisioning process, Storage DRS needs to select a datastore for each virtual machine. As the main goal of Storage DRS is to balance the utilization of the datastore cluster, it determines which datastore has the highest free space value after each placement during the simulation.

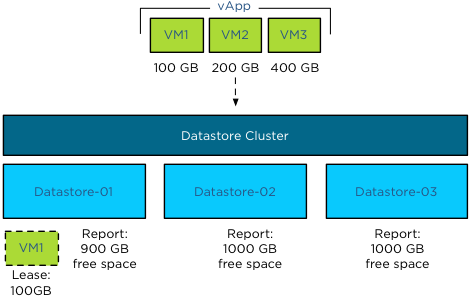

During the simulation VM1 is placed on Datastore-01, as all three datastores report an equal value of free space. Storage DRS then applies the lease of 100GB and reduces the available free space of Datastore-01 to 900GB.

When Storage DRS simulates the placement of VM2, it checks the free space and determines that Datastore-02 and Datastore-03 each have 1000GB of free space, while Datastore-01 reports 900GB. Although VM2 can be placed on Datastore-01 as it does not violate the space utilization threshold, Storage DRS prefers to select the datastore with the highest free space value. Storage DRS will choose Datastore-02 in this scenario as it picks the first datastore if multiple datastores report the same free space value.

The simulation determines that the optimal destination for VM3 is Datastore-03, as it reports a free space value of 1000GB, while Datastore-02 reports 800GB and Datastore-01 reports 900GB of free space.
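The lease-based simulation above can be sketched the same way. In this hypothetical model, each simulated placement subtracts the VM size (the lease) from a working copy of the free space before the next VM is placed. The space utilization threshold and the other Storage DRS inputs are deliberately left out.

```python
from typing import Dict


def place_with_leases(free_gb: Dict[str, int], vm_sizes: Dict[str, int]) -> Dict[str, str]:
    remaining = dict(free_gb)  # simulated free space; the input is not modified
    placement: Dict[str, str] = {}
    for vm, size in vm_sizes.items():
        # Only datastores that can still hold the VM after earlier leases qualify.
        fits = [ds for ds in remaining if remaining[ds] >= size]
        if not fits:
            raise RuntimeError(f"no datastore has {size}GB free for {vm}")
        # Highest free space wins; ties go to the first datastore in order.
        target = max(fits, key=lambda ds: remaining[ds])
        remaining[target] -= size  # apply the lease so later placements see it
        placement[vm] = target
    return placement


datastores = {"Datastore-01": 1000, "Datastore-02": 1000, "Datastore-03": 1000}
vapp = {"VM1": 100, "VM2": 200, "VM3": 400}
print(place_with_leases(datastores, vapp))
# {'VM1': 'Datastore-01', 'VM2': 'Datastore-02', 'VM3': 'Datastore-03'}
```

Because each lease is subtracted before the next decision, the three VMs spread across the three datastores, matching the walkthrough.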

This lease is applied during the placement simulation that generates the initial placement recommendation. It remains applied until the placement process of the virtual machine is completed or until the operation times out. This means that not only the vApp deployment itself is aware of the storage resource lease, but other deployment processes are as well.
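Because the lease outlives the simulation, it has to live somewhere every placement request can consult. The sketch below is a hypothetical bookkeeping structure, not VMware's internals. A shared table subtracts active leases from the reported free space, drops a lease when its deployment completes, and expires it when the operation times out. The `LeaseTable` class and the 600-second timeout are assumptions for illustration only.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class LeaseTable:
    """Hypothetical lease bookkeeping shared by all placement requests."""
    timeout_s: float
    # operation id -> (datastore, reserved GB, expiry timestamp)
    leases: Dict[str, Tuple[str, int, float]] = field(default_factory=dict)

    def acquire(self, op_id: str, datastore: str, gb: int, now: float) -> None:
        self.leases[op_id] = (datastore, gb, now + self.timeout_s)

    def release(self, op_id: str) -> None:
        # The deployment completed: the reported free space now includes
        # the VM, so the reservation is no longer needed.
        self.leases.pop(op_id, None)

    def effective_free(self, reported_gb: Dict[str, int], now: float) -> Dict[str, int]:
        # Drop leases whose operation timed out, then subtract the rest.
        self.leases = {op: lease for op, lease in self.leases.items() if lease[2] > now}
        free = dict(reported_gb)
        for datastore, gb, _expiry in self.leases.values():
            free[datastore] -= gb
        return free


table = LeaseTable(timeout_s=600)  # timeout value is an assumption
reported = {"Datastore-01": 1000, "Datastore-02": 1000, "Datastore-03": 1000}
table.acquire("VM1", "Datastore-01", 100, now=0)
print(table.effective_free(reported, now=10))   # Datastore-01 shows 900 to every request
print(table.effective_free(reported, now=700))  # lease timed out: back to 1000
```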

Update to vSphere 5.1

This new behavior is extremely useful when deploying multiple virtual machines in batches such as vApp deployment or vHadoop environments with the use of Serengeti.

Get notification of these blog postings and more DRS and Storage DRS information by following me on Twitter: @frankdenneman

Add DRS cluster to existing Storage DRS Datastore Cluster?

Lately I have seen the following question popping up at multiple places: “How can I add hosts of a DRS cluster to a Storage DRS datastore cluster after the datastore cluster is created?”

This is an intriguing question. It gives insight into how the datastore cluster construct is perceived, and it suggests that a step in the “create datastore cluster” workflow in the user interface might be the culprit.

The workflow:

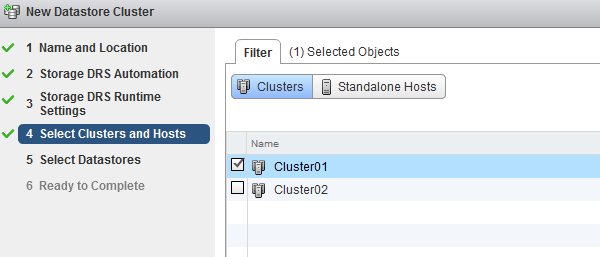

During the create datastore cluster workflow, step 4 requires the user to connect the datastore cluster to a DRS cluster or to stand-alone hosts.

The reason for incorporating the step of selecting clusters and stand-alone hosts is to help narrow down the list of datastores that are presented in step 5. This way, one can create a datastore cluster that consists of datastores that are connected to all the hosts in a particular DRS cluster. The article “Partially connected datastore clusters” provides more information on the impact of partially connected datastores on Storage DRS load balancing. In short, the “Select Clusters and Hosts” screen is just a filter; this step does not alter any host-to-datastore connectivity.
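One way to picture the filter in step 4 is as a read-only query over the host-to-datastore connectivity map. The sketch below is an assumption about how such a filter could classify datastores for the selected hosts. The status strings are made up and are not the exact labels the user interface shows. The function never writes to `connectivity`.

```python
from typing import Dict, Set


def classify(connectivity: Dict[str, Set[str]], selected_hosts: Set[str]) -> Dict[str, str]:
    """connectivity maps a datastore name to the hosts that have it mounted."""
    status = {}
    for datastore, hosts in connectivity.items():
        reachable = hosts & selected_hosts
        if reachable == selected_hosts:
            status[datastore] = "connected to all selected hosts"
        elif reachable:
            status[datastore] = "partially connected"
        else:
            status[datastore] = "not connected"
    return status


all_hosts = {"h09", "h10", "h11", "h12"}
connectivity = {ds: set(all_hosts) for ds in ("nfs-f-04", "nfs-f-05", "nfs-f-06", "nfs-f-07")}
cluster01 = {"h09", "h10"}
print(classify(connectivity, cluster01))
```

Selecting only Cluster01 changes what is listed, not which hosts can reach the datastores.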

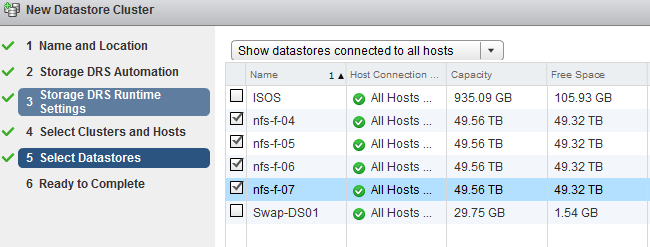

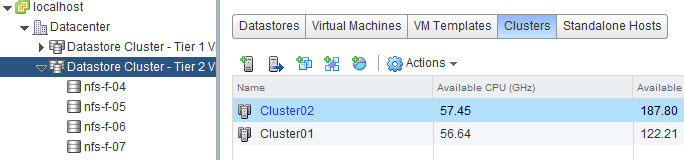

To prove this theory, I attached the datastores nfs-f-04, nfs-f-05, nfs-f-06 and nfs-f-07 to all the hosts in Cluster01 and Cluster02. However, I selected only Cluster01 in step 4. Step 5 provides the following overview, indicating that all the available datastores are connected to the hosts in Cluster01.

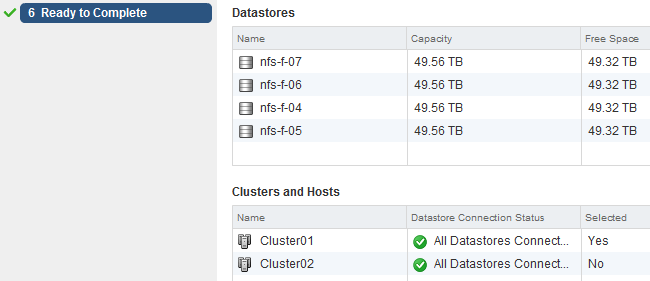

When you review the “Ready to Complete” screen and scroll down to the “Cluster and Host” overview, it shows that all the datastores are connected to Cluster01 and Cluster02, but only Cluster01 is selected. In my opinion this screen doesn’t make sense, and I’m already working with the User Interface team and engineering to see if we can make some adjustments (no promises though)!

Although this Selected column can make this screen a little confusing, in essence it displays the user selection during the configuration process. The “Datastore Connection Status” is the key message of this view.

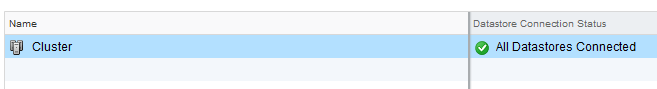

Once complete, select the Storage view, select the new datastore cluster and select the Cluster tab. This view shows that both clusters are connected and can utilize the datastore cluster as destination for virtual machine placement.

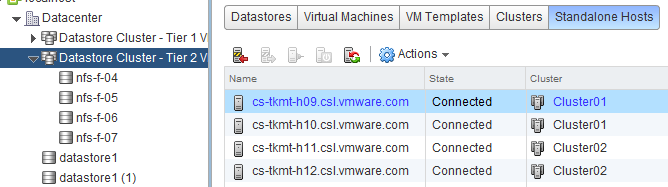

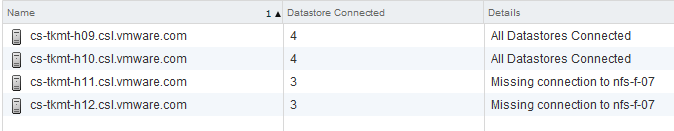

Another check is the “Standalone Hosts” tab, which displays the connectivity state of the hosts to the datastore cluster. Hosts *h09* and *h10* are part of Cluster01; hosts *h11* and *h12* are part of Cluster02.

In essence

Remember, I didn’t select Cluster02 during the datastore cluster configuration, yet Cluster02 and its hosts are still connected. Long story short: DRS clusters and Storage DRS datastore clusters are independent load-balancing domain constructs. In the end it comes down to host-to-datastore connectivity; remember that partially connected datastores can still be part of a datastore cluster. DRS and Storage DRS take the connectivity of hosts and datastores into account during initial placement and load balancing, not the cluster constructs.
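To make the point concrete, here is a hypothetical sketch of how a view like the Cluster tab could be derived purely from host-to-datastore connectivity. The selection made in step 4 is not an input at all, which is why Cluster02 still shows up. The function and its labels are illustrative assumptions, not the vCenter logic.

```python
from typing import Dict, Set


def cluster_view(clusters: Dict[str, Set[str]],
                 connectivity: Dict[str, Set[str]],
                 members: Set[str]) -> Dict[str, str]:
    """clusters: DRS cluster -> hosts; connectivity: datastore -> hosts;
    members: datastores in the datastore cluster."""
    view = {}
    for cluster, hosts in clusters.items():
        # For each host, which datastore cluster members can it reach?
        reach = {h: {ds for ds in members if h in connectivity[ds]} for h in hosts}
        if all(r == members for r in reach.values()):
            view[cluster] = "fully connected"
        elif any(reach.values()):
            view[cluster] = "partially connected"
        else:
            view[cluster] = "not connected"
    return view


members = {"nfs-f-04", "nfs-f-05", "nfs-f-06", "nfs-f-07"}
connectivity = {ds: {"h09", "h10", "h11", "h12"} for ds in members}
clusters = {"Cluster01": {"h09", "h10"}, "Cluster02": {"h11", "h12"}}
print(cluster_view(clusters, connectivity, members))
# {'Cluster01': 'fully connected', 'Cluster02': 'fully connected'}
```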

Before creating a datastore cluster, ensure the following:

• Check Storage adapter settings

• Check Array configuration (masking, LUN ID, exports)

• Check zoning

• Rescan hosts that are going to use the datastores inside the datastore cluster.

vSphere 5.1 Storage DRS load balancing and SIOC threshold enhancements

Lately I have been receiving questions on best practices and considerations for aligning the Storage DRS latency and Storage IO Control (SIOC) latency thresholds: how they are correlated and how to configure them to work optimally together. Let’s start by identifying the purpose of each setting, then review the enhancements vSphere 5.1 has introduced and discover the impact of misaligning both thresholds in vSphere 5.0.

Purpose of the SIOC threshold

The main goal of the SIOC latency threshold is to give fair access to the datastores. SIOC does this by throttling the outstanding I/O of virtual machines across multiple hosts to keep the measured latency below the threshold.

It can have a restrictive effect on the I/O flow of virtual machines.

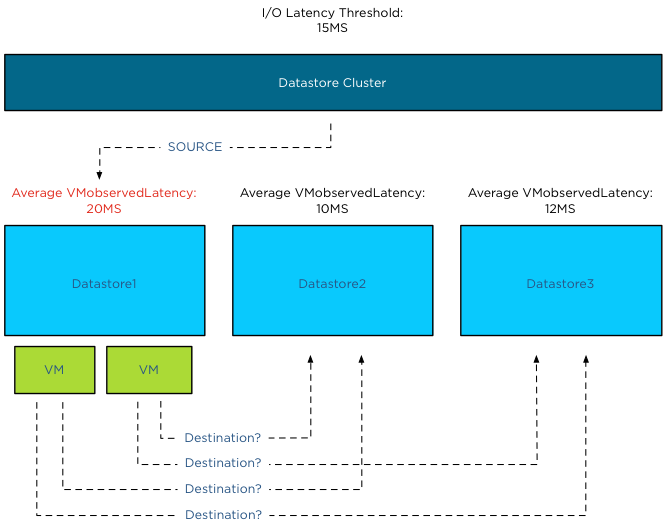

Purpose of the Storage DRS latency threshold

The Storage DRS latency is a threshold that triggers virtual machine migrations. To be more precise, if the average latency (VMObservedLatency) of the virtual machines on a particular datastore is higher than the Storage DRS threshold, Storage DRS marks that datastore as a “source” for load-balancing migrations. In other words, that datastore provides the candidates (virtual machines) that Storage DRS moves to solve the imbalance of the datastore cluster.

This means that the Storage DRS threshold metric has no “restrictive” access limitations. It does not limit the ability of the virtual machines to send I/O to the datastore. It is just an indicator for Storage DRS which datastore to pick for load balance operations.
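The role of the Storage DRS threshold as a trigger rather than a limit can be sketched as a pure comparison. This hypothetical Python function takes a simple mean of per-VM latency samples for each datastore. The real observation window and statistics that Storage DRS applies are not modeled, and the numbers are made up. Nothing in the function throttles I/O; it only returns candidate source datastores.

```python
from typing import Dict, List


def source_datastores(vm_latency_ms: Dict[str, List[float]], threshold_ms: float) -> List[str]:
    """vm_latency_ms maps a datastore to the observed latency of each VM on it."""
    sources = []
    for datastore, latencies in vm_latency_ms.items():
        if latencies and sum(latencies) / len(latencies) > threshold_ms:
            # Mark as a source: its VMs become candidates for migration.
            sources.append(datastore)
    return sources


observed = {"Datastore-01": [12.0, 30.0, 24.0], "Datastore-02": [4.0, 6.0], "Datastore-03": []}
print(source_datastores(observed, threshold_ms=15.0))
# ['Datastore-01']
```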

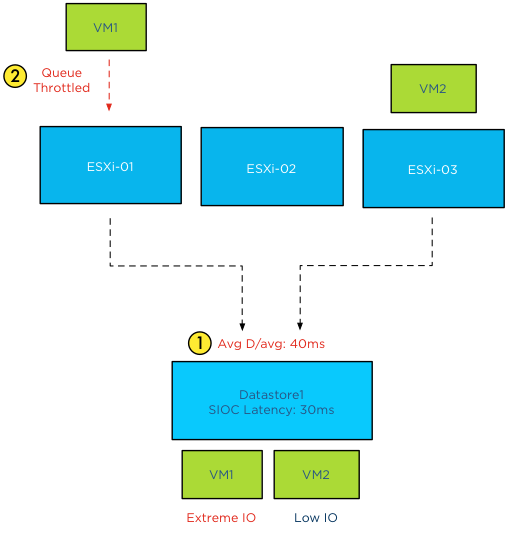

SIOC throttling behavior

When the average device latency detected by SIOC is above the threshold, SIOC throttles the outstanding I/O of the virtual machines on the hosts connected to that datastore. However, the virtual machines differ in the number of shares, I/O sizes, random versus sequential workloads and the spatial locality of the changed blocks on the array. It is therefore almost certain that no two virtual machines will experience the same performance, and some will see higher latency than others on that datastore. Remember, SIOC is driven by shares, not reservations; it cannot guarantee I/O slots (reservations). Long story short, when the datastore is experiencing latency, the VMkernel manages the outbound queue, creating a buildup of I/O somewhere higher up in the stack. The latency SIOC compares against its threshold is the weighted average of the device latency (D/AVG) per host, where the weight is the number of IOPS on that host. For more information on how SIOC calculates the device average latency, please read the article “To which host-level latency statistic is the SIOC congestion threshold related?”
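The IOPS-weighted average mentioned above is easy to get wrong by hand, so here is a small worked sketch. The per-host numbers and the 30 ms threshold are hypothetical. The point is that a busy host with low latency pulls the datastore-wide figure down more than a plain mean would.

```python
from typing import Dict, Tuple


def weighted_device_latency(per_host: Dict[str, Tuple[float, int]]) -> float:
    """per_host maps a host to (D/AVG in ms, IOPS issued to the datastore)."""
    total_iops = sum(iops for _, iops in per_host.values())
    if total_iops == 0:
        return 0.0
    return sum(lat * iops for lat, iops in per_host.values()) / total_iops


hosts = {"host-a": (10.0, 3000), "host-b": (40.0, 1000)}  # hypothetical hosts
latency = weighted_device_latency(hosts)
print(latency)          # 17.5, whereas the plain mean would be 25.0
print(latency > 30.0)   # against a hypothetical 30 ms SIOC threshold: False
```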

Does SIOC throttling have any effect on Storage DRS load balancing?

Whether SIOC throttling affects Storage DRS load balancing depends on the vSphere version you run. As stated in the previous paragraph, when SIOC throttles the queues, the virtual machine I/O does not disappear. vSphere always allows the virtual machine to generate I/O to the datastore; the I/O just builds up somewhere higher in the stack, between the virtual machine and the HBA queue.

In vSphere 5.0, Storage DRS measures latency by averaging the device latency of the hosts running VMs on that datastore. This is almost the same metric as the SIOC latency. As a result, when you set the SIOC latency threshold equal to the Storage DRS latency threshold, the latency builds up in the stack above the Storage DRS measuring point. In the worst-case scenario, SIOC throttles the I/O and keeps it queued above the Storage DRS measuring point. This makes the latency invisible to Storage DRS, so the load-balancing operation for that datastore is never triggered.

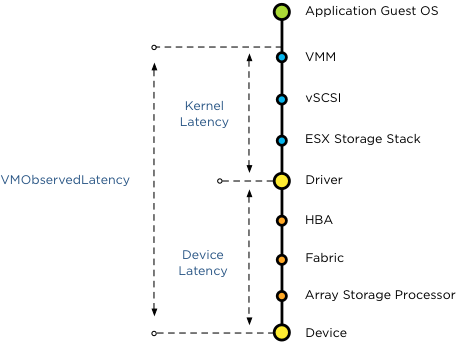

Introducing vSphere 5.1 VMObservedLatency

To avoid this scenario, Storage DRS in vSphere 5.1 uses the metric VMObservedLatency. This metric measures the round trip of an I/O, from the moment the VMkernel receives it from the Virtual Machine Monitor (VMM), to the datastore, and all the way back to the VMM.

This means that when you set the SIOC latency threshold lower than the Storage DRS latency threshold, Storage DRS still observes the latency that builds up in the kernel layer.
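The difference between the two measuring points can be shown with an idealized model. The assumptions, which are mine and not VMware's, are that SIOC throttling holds the device latency at the SIOC threshold and that any excess shows up as queueing time in the VMkernel. Under those assumptions, a device-latency metric (vSphere 5.0) never exceeds an equal Storage DRS threshold, while VMObservedLatency (vSphere 5.1) does.

```python
from typing import Tuple


def measured_latencies(demand_ms: float, sioc_threshold_ms: float) -> Tuple[float, float]:
    """Return (device latency, VMObservedLatency) for a workload whose
    unthrottled latency would be demand_ms."""
    device = min(demand_ms, sioc_threshold_ms)  # SIOC caps what the device sees
    kernel_queue = demand_ms - device            # the rest waits in the VMkernel
    return device, kernel_queue + device


def triggers(metric_ms: float, sdrs_threshold_ms: float) -> bool:
    # Storage DRS marks the datastore as a source only above its threshold.
    return metric_ms > sdrs_threshold_ms


device, observed = measured_latencies(demand_ms=40.0, sioc_threshold_ms=30.0)
print(triggers(device, 30.0))    # vSphere 5.0-style metric: False
print(triggers(observed, 30.0))  # vSphere 5.1 VMObservedLatency: True
```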

vSphere 5.1 Automatic latency SIOC

To help you avoid building up I/O in the host queue, vSphere 5.1 offers automatic threshold computation for SIOC. SIOC sets the latency threshold to the value at which the device delivers 90% of its peak throughput. To determine this value, SIOC runs a series of tests that map throughput to latency. During the tests SIOC detects where the I/O throughput levels out while the latency keeps increasing. To be conservative, SIOC derives a latency value that allows the host to generate up to 90% of the throughput, leaving a burst space of 10%. This provides the best performance of the devices and avoids unnecessary restrictions caused by building up latency in the queues. In my opinion, this feature alone warrants the upgrade to vSphere 5.1.
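The automatic threshold computation can be approximated as follows. This sketch assumes a list of (latency, throughput) measurements from the kind of test run described above. The sample values are invented, and the real SIOC procedure (how it loads the device, whether it interpolates) is not documented here. The function returns the lowest measured latency at which throughput reaches 90% of the observed peak.

```python
from typing import List, Tuple


def auto_threshold(samples: List[Tuple[float, float]], fraction: float = 0.9) -> float:
    """samples: (latency_ms, throughput_iops) pairs from a load test."""
    if not samples:
        raise ValueError("need at least one measurement")
    peak = max(throughput for _, throughput in samples)
    # Walk the curve from low to high latency and stop at the first point
    # that delivers the requested fraction of peak throughput.
    for latency, throughput in sorted(samples):
        if throughput >= fraction * peak:
            return latency
    raise RuntimeError("unreachable: the peak sample always qualifies")


curve = [(2.0, 4000), (5.0, 8000), (10.0, 9000), (20.0, 9500), (40.0, 9600)]
print(auto_threshold(curve))  # 10.0
```

Past 10 ms the throughput barely grows while latency keeps climbing. This is the "levels out" region the text describes.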

How to set the two thresholds to work optimally together

SIOC in vSphere 5.1 allows the host to go up to 90% of the throughput before adjusting the queue length of each host, generating queuing in the kernel instead of on the storage array. As Storage DRS uses VMObservedLatency, it monitors the complete stack. It observes the overall latency, regardless of where in the stack that latency occurs, and tries to move VMs to other datastores to level out the overall experienced latency in the datastore cluster. Therefore you do not need to worry about misaligning the SIOC latency and the Storage DRS I/O latency thresholds.

If you are running vSphere 5.0, it is recommended to set the SIOC threshold to a higher value than the Storage DRS I/O latency threshold. Please consult your storage vendor for the appropriate SIOC latency threshold.

Storage DRS and alternative swap file locations

By default a virtual machine swap file is stored in the working directory of the virtual machine. However, it is possible to configure the hosts in the DRS cluster to place the virtual machine swapfiles on an alternative datastore. A customer asked if he could create a datastore cluster exclusively used for swap files. Although you can use the datastores inside the datastore cluster to store the swap files, Storage DRS does not load balance the swap files inside the datastore cluster in this scenario. Here’s why:

Alternative swap location

This alternative datastore can be either a local datastore or a shared datastore. Please note that placing the swap file on a non-shared local datastore impacts the vMotion lead time, as the vMotion process needs to copy the contents of the swap file to the swap file location of the destination host. Alternatively, a datastore that is shared by the hosts inside the DRS cluster can be used. A valid use case is a small pool of SSD drives, providing a platform that reduces the impact of swapping in case of memory contention.

Storage DRS

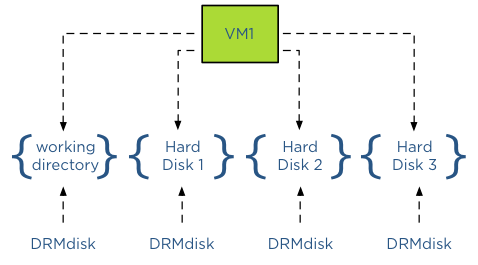

What about Storage DRS and using alternative swap file locations? By default a swap file is placed in the working directory of a virtual machine. This working directory is “encapsulated” in a DRMdisk. A DRMdisk is the smallest entity Storage DRS can migrate. For example when placing a VM with 3 hard disks in a datastore cluster, Storage DRS creates 4 DRMdisks, one DRMdisk for the working directory and separate DRMdisks for each hard disk.

Extracting the swap file out of the working directory DRMdisk

When selecting an alternative location for swap files, vCenter will not place this swap file in the working directory of the virtual machine, in essence extracting it from – or placing it outside – the working directory DRMdisk entity. Therefore Storage DRS will not move the swap file during load balancing operations or maintenance mode operations as it can only move DRMdisks.
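The DRMdisk grouping can be modeled in a few lines. This is a hypothetical sketch: the `VirtualMachine` class, its `alt_swap_datastore` field and the file lists are illustrative and only cover the files needed to make the point. It shows that a VM with three hard disks yields four DRMdisks. It also shows that configuring an alternative swap location removes the `.vswp` file from the working directory DRMdisk, so Storage DRS has nothing that would carry it along.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class VirtualMachine:
    name: str
    hard_disks: List[str]
    alt_swap_datastore: Optional[str] = None  # hypothetical per-VM view of the host setting


def drmdisks(vm: VirtualMachine) -> List[List[str]]:
    """Return the DRMdisks of a VM, each as the list of files it contains."""
    working_dir = [f"{vm.name}.vmx", f"{vm.name}.nvram"]
    if vm.alt_swap_datastore is None:
        # Default: the swap file lives in the working directory DRMdisk.
        working_dir.append(f"{vm.name}.vswp")
    # One DRMdisk for the working directory plus one per hard disk.
    return [working_dir] + [[disk] for disk in vm.hard_disks]


def movable_files(vm: VirtualMachine) -> List[str]:
    # Storage DRS can only move DRMdisks, so only their files are movable.
    return [f for disk in drmdisks(vm) for f in disk]


vm = VirtualMachine("vm1", ["vm1.vmdk", "vm1_1.vmdk", "vm1_2.vmdk"])
print(len(drmdisks(vm)))                # 4
vm.alt_swap_datastore = "ssd-swap-01"   # hypothetical swap datastore
print("vm1.vswp" in movable_files(vm))  # False
```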

Alternative Swap file location a datastore inside a datastore cluster?

Storage DRS does not encapsulate a swap file in its own DRMdisk and therefore it is not recommended to use a datastore that is part of a datastore cluster as a DRS cluster swap file location. As Storage DRS cannot move these files, it can impact load-balancing operations.

The user interface actually reveals the incompatibility of a datastore cluster as a swapfile location because when you configure the alternate swap file location, the user interface only displays datastores and not a datastore cluster entity.

DRS responsibility

Storage DRS can operate with swap files placed on datastores external to the datastore cluster. It moves the DRMdisks of the virtual machines and leaves the swap file in its specified location. When the compute state is migrated between two hosts, it is the task of DRS to move the swap file if it is placed on a non-shared swap file datastore.

Partially connected datastore clusters – where can I find the warnings and how to solve it via the web client?

During my Storage DRS presentation at VMworld I talked about datastore cluster architecture and covered the impact of partially connected datastore clusters. In short – when a datastore in a datastore cluster is not connected to all hosts of the connected DRS cluster, the datastore cluster is considered partially connected. This situation can occur when not all hosts are configured identically, or when new ESXi hosts are added to the DRS cluster.

The problem

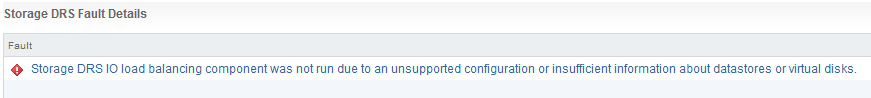

I/O load balancing does not support partially connected datastores in a datastore cluster. As a result, Storage DRS disables I/O load balancing for the entire datastore cluster, not only for that single partially connected datastore. This effectively degrades a complete feature set of your virtual infrastructure, so a homogeneous configuration throughout the cluster is imperative.
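Detecting the condition is a set difference per datastore. The sketch below is a hypothetical check, not the Storage DRS implementation. It reports, for each datastore, the DRS cluster hosts that are missing a connection. It treats any missing connection as disabling I/O load balancing for the whole datastore cluster, as described above. The new host `h13` is an invented example.

```python
from typing import Dict, Set, Tuple


def check_connectivity(cluster_hosts: Set[str],
                       connectivity: Dict[str, Set[str]]) -> Tuple[bool, Dict[str, Set[str]]]:
    """Return (I/O load balancing possible, datastore -> hosts missing it)."""
    missing = {ds: cluster_hosts - hosts
               for ds, hosts in connectivity.items()
               if cluster_hosts - hosts}
    # One partially connected datastore is enough to disable I/O load
    # balancing for the entire datastore cluster.
    return not missing, missing


hosts = {"h09", "h10", "h13"}  # h13: a newly added host (hypothetical)
connectivity = {"nfs-f-04": {"h09", "h10"}, "nfs-f-05": {"h09", "h10", "h13"}}
io_lb, missing = check_connectivity(hosts, connectivity)
print(io_lb)    # False
print(missing)  # {'nfs-f-04': {'h13'}}
```

The `missing` map doubles as a to-do list: each host listed there needs the datastore mounted before I/O load balancing can resume.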

Warning messages

An entry is listed in the Storage DRS Faults window. In the vSphere Web Client:

1. Go to Storage

2. Select the datastore cluster

3. Select Monitor

4. Select Storage DRS

5. Select Faults

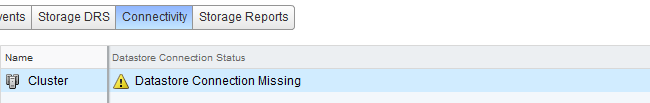

The connectivity menu option shows the Datastore Connection Status. For a partially connected datastore, the message “Datastore Connection Missing” is listed.

When clicking on the entry, the details are shown in the lower part of the view:

Returning to a fully connected state

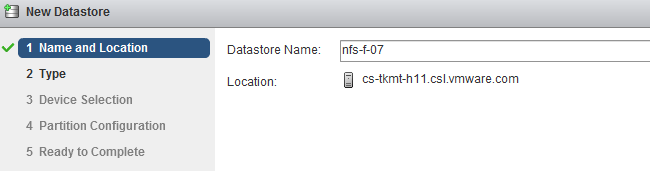

To solve the problem, you must connect or mount the datastores to the newly added hosts. In the web client this is considered a host operation; therefore, select the datacenter view and then the Hosts menu option.

1. Right-click on a newly added host

2. Select New Datastore

3. Provide the name of the existing datastore

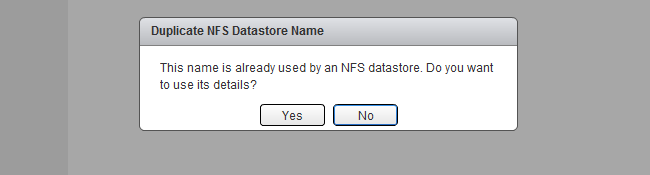

4. Click on Yes when the warning “Duplicate NFS Datastore Name” is displayed.

5. As the UI reuses the existing datastore information, click Next until you reach Finish.

6. Repeat steps for other new hosts.

After connecting all the new hosts to the datastore, check the connectivity view in the Monitor menu of the datastore cluster.
