Recently I had some discussions where I needed to clarify the behavior of Storage DRS when a user adds a new disk to a virtual machine that is already running in a datastore cluster. In particular, what happens if the datastore cluster is near its capacity?

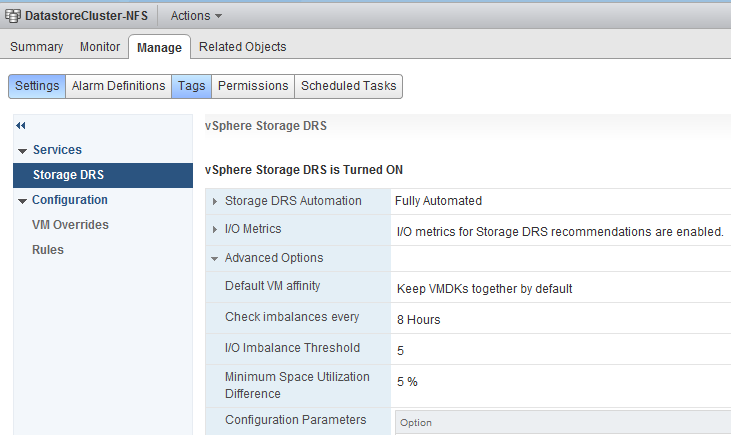

When adding a new disk, Storage DRS reviews the configured affinity rule of the virtual machine. By default, Storage DRS applies an affinity rule (Keep VMDKs together by default) to all new virtual machines. vSphere 5.1 allows you to change this default behavior: you can change the default affinity rule in the datastore cluster settings.

Adding a new disk to an existing virtual machine

The first thing to realize is that Storage DRS can never violate the affinity or anti-affinity rule of the virtual machine. For example, if the datastore cluster default affinity rule is set to “keep VMDKs together”, then all the files of the virtual machine are placed on the same datastore. Ergo, if a new disk is added to the virtual machine, that disk must be stored on the same datastore in order not to violate the affinity rule.

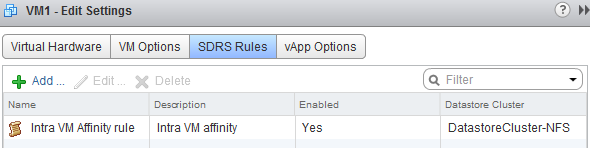

Let's use an example: VM1 is placed in the datastore cluster and is configured with the Intra-VM affinity rule (keep the files inside the VM together).

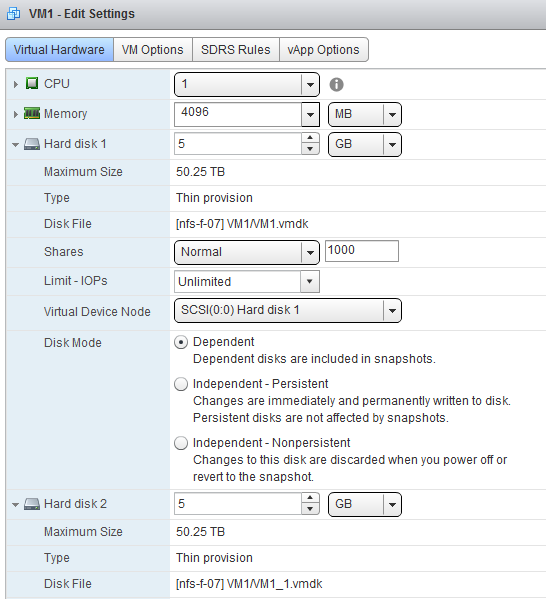

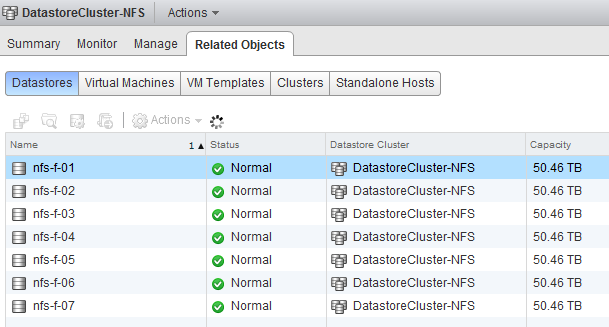

The virtual machine is configured with two hard disks, and both reside on the datastore [nfs-f-07] of the datastore cluster.

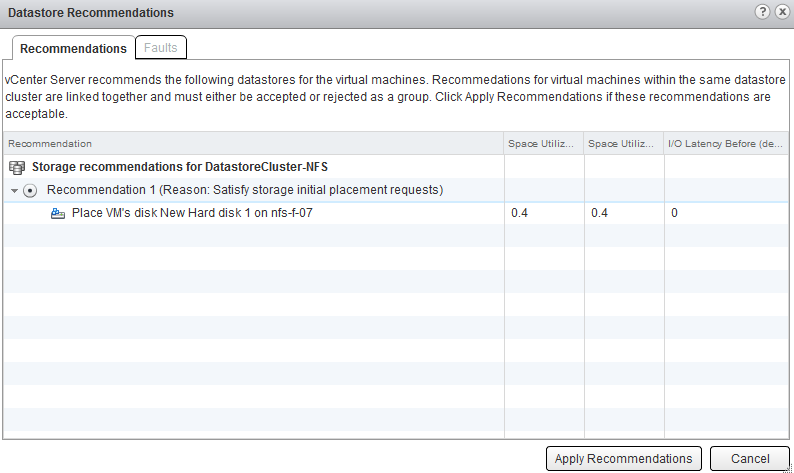

When adding another disk, Storage DRS provides me with a recommendation.

Although all the other datastores inside the datastore cluster are excellent candidates as well, Storage DRS is forced to place the VMDK on the same datastore together with the rest of the virtual machine files.
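To make the constraint concrete, here is a minimal sketch of how an affinity rule narrows the list of candidate datastores. It is a toy model, not the actual Storage DRS implementation: the `Datastore` class, the `candidate_datastores` function, the datastore names other than nfs-f-07, and all free-space figures are assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Datastore:
    name: str
    free_gb: float


def candidate_datastores(datastores, current_ds_name, keep_together):
    """Return the datastores on which a new VMDK of an existing VM may be placed.

    With the intra-VM affinity rule (keep_together=True) the only candidate
    is the datastore that already holds the VM's files.
    """
    if keep_together:
        return [ds for ds in datastores if ds.name == current_ds_name]
    return list(datastores)


# Hypothetical cluster: only nfs-f-07 comes from the example above.
cluster = [
    Datastore("nfs-f-05", 400),
    Datastore("nfs-f-06", 350),
    Datastore("nfs-f-07", 120),
]

names = [ds.name for ds in candidate_datastores(cluster, "nfs-f-07", keep_together=True)]
print(names)  # → ['nfs-f-07']
```

Even though the other datastores in this made-up cluster have more free space, the rule leaves nfs-f-07 as the only valid destination.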

Datastore cluster defragmentation

At some point you may find yourself in a situation where the datastores inside the datastore cluster are quite full: no single datastore has enough free space, but the datastore cluster as a whole has enough free space to host another disk of an existing virtual machine. In that situation, Storage DRS does not break the affinity rule but starts to “defragment” the datastore cluster. It moves virtual machines around to provide enough free space for the new VMDK. The article “Storage-DRS initial placement and datastore cluster defragmentation” provides more information about this mechanism.
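The idea of prerequisite moves can be sketched with a small greedy model. This is an illustration under stated assumptions, not the algorithm Storage DRS actually uses: the function name `prerequisite_moves`, the smallest-VM-first ordering, the "most free space" destination choice, and all names and sizes are hypothetical.

```python
def prerequisite_moves(free_gb, other_vms, target, new_disk_gb):
    """Toy greedy search for moves that free up space on `target`.

    free_gb:   dict of datastore name -> free space in GB
    other_vms: dict of datastore name -> {vm name: size in GB}, excluding
               the VM that is receiving the new disk
    Returns a list of (vm, source, destination) moves, or None when the
    target cannot be freed up enough.
    """
    free = dict(free_gb)  # work on a copy, leave the input untouched
    moves = []
    # Assumption: try the smallest VMs first to keep the moved data low.
    for vm, size in sorted(other_vms[target].items(), key=lambda kv: kv[1]):
        if free[target] >= new_disk_gb:
            break
        # Assumption: pick the other datastore with the most free space.
        dest = max((d for d in free if d != target), key=lambda d: free[d], default=None)
        if dest is None or free[dest] < size:
            continue
        free[dest] -= size
        free[target] += size
        moves.append((vm, target, dest))
    return moves if free[target] >= new_disk_gb else None


# Hypothetical cluster: the growing VM lives on ds1, which has only 20 GB free.
free = {"ds1": 20, "ds2": 60, "ds3": 50}
others = {"ds1": {"vm2": 30, "vm3": 40}, "ds2": {}, "ds3": {}}

print(prerequisite_moves(free, others, "ds1", 50))
# → [('vm2', 'ds1', 'ds2')]
```

In this made-up case the new 50 GB disk would fit on ds2 directly, but the affinity rule pins it to ds1, so the sketch moves vm2 away instead of splitting the virtual machine.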

Key takeaway

Therefore, in a datastore cluster you will never see Storage DRS splitting up a virtual machine if the VM is configured with an affinity rule, but you will see prerequisite moves: Storage DRS migrates other virtual machines out of the datastore to make room for the new VMDK.

Adding new disk to an existing virtual machine in a Storage DRS Datastore Cluster


Hi Frank, we are seeing the behaviour you describe in your ‘Key Takeaway’, but not every time. Sometimes Storage DRS fails to do the prerequisite moves to make space. It simply reports that there is not enough space (which there is), and the only option is to cancel adding the virtual disk. Any ideas? Thanks.

Hi Gary,

Interesting! I have a few questions regarding your environment.

Are you using thin provisioned LUNs backing the datastore?

What default affinity rule is applied on the datastore cluster?

I’ve just read your article on Update 2 SDRS fixes and it appears to be the answer for us. We are mid migration and right now it seems the mix of update 2/non update 2 hosts was causing the intermittent errors!!

We are using thin-provisioned LUNs. The default affinity rule to keep VMDKs together is applied.

If you have enabled VAAI/VASA, it could also be that the thin-provisioned LUNs are reporting a 75% fill rate. An alarm would have popped up in vCenter. If that alarm is triggered, Storage DRS does not consider the datastores backed by those LUNs as destinations, because they are above the 75% utilization threshold.