The expandable reservation setting of a resource pool appears to be shrouded in mystery. How does it work, what is it for, and what does it really expand? Expandable reservation allows a resource pool to obtain physical resources (CPU/memory) protected by a reservation from its parent in order to satisfy the reservations of its child objects. Let’s dig a little deeper into this.

Parent-child relation

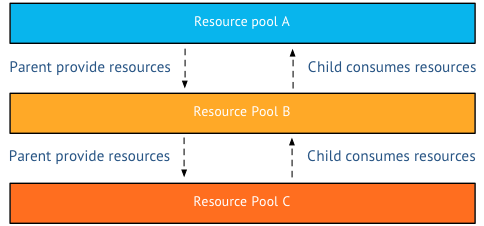

A resource pool provides resources to its child objects. A child object can be either a virtual machine or a resource pool. This is what is called the parent-child relationship. If resource pool A contains resource pool B, which in turn contains resource pool C, then C is the child of B. B is the parent of C but the child of A, and A is the parent of B. There is no terminology for the relation A-C, as A only provides resources to B; it does not care whether B provides any resources to C.

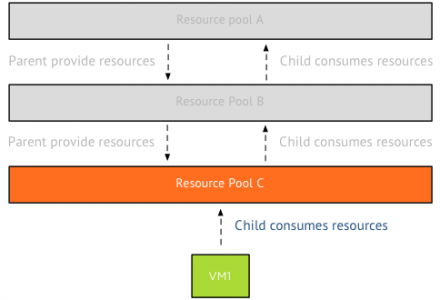

When a virtual machine is placed into a resource pool, the virtual machine becomes a child object of the resource pool. It is the responsibility of the resource pool to provide the resources the virtual machine requires. If a virtual machine is configured with a reservation, then it will request the physical resources from its parent resource pool.

Remember that a reservation guarantees that the resources protected by the reservation will not and cannot be reclaimed by the VMkernel, even during memory pressure. Therefore the reservation of the virtual machine is directed to its parent, and the parent must exclusively provide these resources to the virtual machine. It can only provide them from its own pool of protected resources. The resource pool can only distribute the resources it has obtained itself.

Protected or reserved resources?

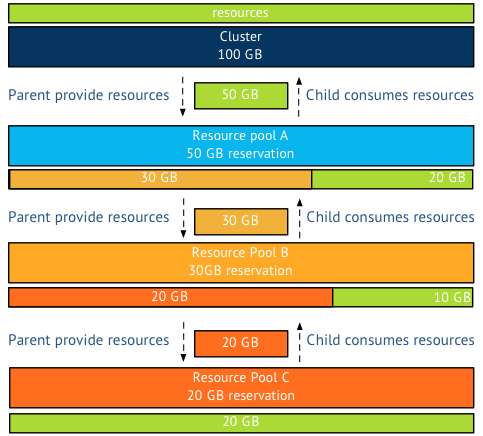

I’m deliberately calling a resource claimed by a reservation a protected resource, as the VMkernel cannot reclaim it. However, when a resource pool is configured with a reservation, it immediately claims this memory from its parent. This goes on all the way up to the cluster level. The cluster is the root resource pool, and all the resources provided by the ESXi hosts are owned by the root resource pool and protected by a reservation. Therefore the cluster, as the root resource pool, contains and manages the protected pool of resources.

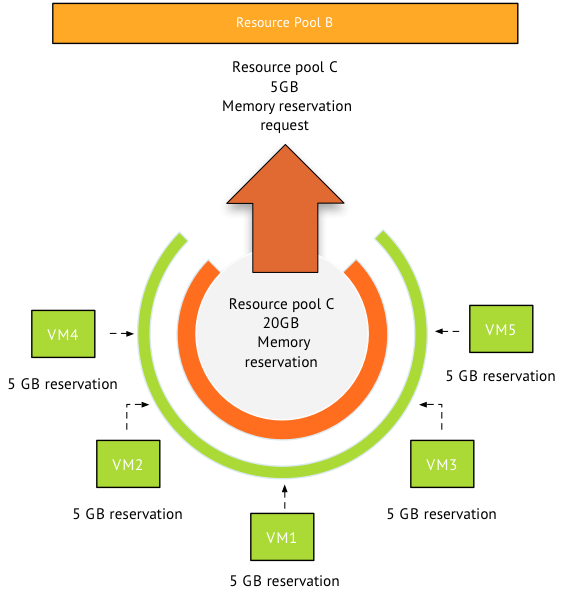

For example, the cluster has 100GB of resources, meaning that the root resource pool consists of 100GB of protected memory. Resource pool A is configured with a 50GB reservation, consuming this 50GB from the root resource pool. Resource pool B is configured with a 30GB reservation, immediately claiming 30GB of the resources protected by the reservation of resource pool A and leaving resource pool A with only 20GB of protected resources for itself. Resource pool C is configured with a 20GB memory reservation. Resource pool C claims this from its parent, resource pool B, which is left with 10GB of protected resources for itself.
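The arithmetic of this example can be sketched in a few lines of Python. This is only an illustration of the bookkeeping described above; the function name `remaining_after_children` is hypothetical and not part of any VMware tool or API.

```python
def remaining_after_children(reservation_gb, child_reservations_gb):
    """Protected memory (GB) a pool keeps for itself after its child pools claim theirs.

    Hypothetical helper: it only mirrors the example in the text.
    """
    claimed = sum(child_reservations_gb)
    if claimed > reservation_gb:
        raise ValueError("children claim more than the pool has protected")
    return reservation_gb - claimed


root_left = remaining_after_children(100, [50])   # root pool after A claims 50GB
pool_a_left = remaining_after_children(50, [30])  # A after B claims 30GB
pool_b_left = remaining_after_children(30, [20])  # B after C claims 20GB
print(root_left, pool_a_left, pool_b_left)  # 50 20 10
```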

But what happens if the resource pool runs out of protected resources? Or is not configured with a reservation at all? In other words, if the child objects in the resource pool are configured with reservations that exceed the reservation set on the resource pool, the resource pool needs to request protected resources from its parent. This can only be done if expandable reservation is enabled.

Please note that the resource pool requests protected resources; it will not accept resources that are not protected by a reservation.

Now in this scenario, the five virtual machines in resource pool C are each configured with a 5GB memory reservation, totaling 25GB. Resource pool C is configured with a 20GB memory reservation. Therefore resource pool C is required to request 5GB of protected memory resources from its parent, resource pool B, on behalf of the virtual machines.

If resource pool B does not have the protected resources itself, it can request them from its parent. This can only occur when resource pool B is configured with expandable reservation enabled. The last stop is the cluster itself. What can stop this river of requests? Two things: a request for protected resources is stopped by a resource limit or by a disabled expandable reservation. If a resource pool has expandable reservation disabled, it will try to satisfy the reservation itself; if it is unable to do so, it will deny the reservation request. If a resource pool is set with a limit, the resource pool is limited to that amount of physical resources.

For example, if the parent resource pool has a reservation and a limit of 20GB, a reservation on behalf of its child needs to be satisfied by the protected pool itself; otherwise the resource request is denied.

Now let’s use a more complex scenario. Resource pool B is configured with expandable reservation enabled, a 30GB reservation and a 35GB limit. Resource pool C is requesting an additional 10GB on top of the 20GB it has already been granted. Resource pool B is running two virtual machines with a total reservation of 10GB. This means the protected pool of resource pool B is servicing a 20GB resource request from resource pool C and 10GB for its own virtual machines. Its protected pool is depleted, and the additional 10GB request of resource pool C is denied, as this would raise the protected pool of resource pool B to a total of 40GB of memory, which exceeds the 35GB limit.
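The admission behavior described in this section can be modeled roughly as follows. This is a simplified sketch, not the actual VMkernel admission control: the `Pool` class and its field names are hypothetical, and it assumes a limit caps the total protected memory a pool may hand out, as in the scenario above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Pool:
    """Hypothetical model of a resource pool's protected memory (all values in GB)."""
    name: str
    reservation_gb: float              # protected memory the pool claimed up front
    expandable: bool = False           # expandable reservation enabled?
    limit_gb: Optional[float] = None   # None means no limit is configured
    parent: Optional["Pool"] = None
    used_gb: float = 0.0               # protected memory already granted to children
    borrowed_gb: float = 0.0           # extra protected memory obtained from the parent

    def request(self, amount_gb: float) -> bool:
        """Admit or deny a child's reservation request of amount_gb."""
        new_used = self.used_gb + amount_gb
        # A limit caps the total amount the pool may hand out.
        if self.limit_gb is not None and new_used > self.limit_gb:
            return False
        shortfall = new_used - (self.reservation_gb + self.borrowed_gb)
        if shortfall > 0:
            # Own protected pool is depleted: only an expandable pool may ask its parent.
            if not self.expandable or self.parent is None:
                return False
            if not self.parent.request(shortfall):
                return False
            self.borrowed_gb += shortfall
        self.used_gb = new_used
        return True


# Scenario from the text: A (50GB) has already granted 30GB to B.
pool_a = Pool("A", reservation_gb=50, used_gb=30)
# B: 30GB reservation, 35GB limit, 20GB granted to C plus 10GB to its own VMs.
pool_b = Pool("B", reservation_gb=30, expandable=True, limit_gb=35,
              parent=pool_a, used_gb=30)

print(pool_b.request(10))  # False: 40GB would exceed the 35GB limit
```

In the same model, a pool with a 20GB reservation and expandable reservation disabled admits four 5GB requests and denies the fifth, which matches the earlier scenario with the five virtual machines in resource pool C.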

Virtual machine memory overhead

Please remember that every virtual machine comes with a memory reservation, even if you did not configure one. To run the virtual machine, a small amount of memory is required by the VMkernel. This is called the virtual machine memory overhead. To be able to run a virtual machine inside a resource pool, either expandable reservation should be enabled or a memory reservation should be configured on the resource pool.

Adjusting the cost of vMotion – a word of caution

Yesterday I posted an article on how to change the cost of vMotion in order to change the default number of concurrent vMotions. As I mentioned in the article, I’m not a proponent of changing advanced settings.

Today Kris posted a very interesting question:

How about the scenario where one uses multi NIC vMotion against two 5Gbps virtual adapters? I know a cost of 4 will be set for the network by the VMkernel, however as the aggregate bandwidth becomes 10Gbps is it safe enough to raise the limit? Perhaps not to the full 8 for 10Gbps, but 6?

Please note that this article does not bash Kris. He provides a use case that I’ve heard a couple of times, making his comment an example use case. Although Kris’s scenario sounds like a very good use case to adjust the cost settings to circumvent the line-speed detection of the VMkernel to determine the max-cost of the network resource, it does not solve the other dynamic elements using line speed.

DRS MaxMovesPerHost

ESX 4.1 introduces the MaxMovesPerHost setting, allowing the host to dynamically set the limit on moves. The limit is based on how many moves DRS thinks can be completed in one DRS evaluation interval. DRS adapts to the frequency at which it is invoked (pollPeriodSec, default 300 seconds) and the average migration time observed from previous migrations. However, this limit is still bound by the detected line speed and the associated max cost. Although the proposed environment has 10Gbps of line speed available in total, the VMkernel will still set the max cost to allow 4 vMotions on the host, restricting the number of migrations DRS can initiate during a load balance operation.

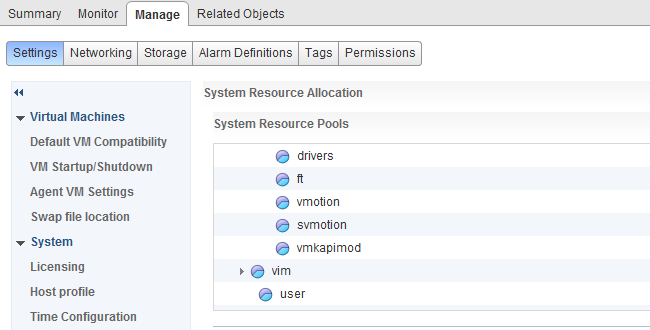

vMotion system resource pool CPU reservation

vMotion tries to move the used memory blocks as fast as possible and uses all the available bandwidth, depending on the available CPU speed and bandwidth. Depending on the detected line speed, vMotion reserves a certain amount of CPU at the start of a vMotion process by computing its desired host vMotion CPU reservation. In vSphere 5.1, vMotion reserves 10% of a CPU core for every 1GbE vMotion link it detects, with a minimum desired CPU reservation of 30%. This means that with a single 1GbE link vMotion reserves 30% of a core, and with 4 x 1GbE links vMotion reserves 40% of a core. A 10GbE link is special, as vMotion reserves 100% of a single core.
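As a rough illustration, the rule in the paragraph above can be written down as a small Python function. This is a sketch of the rule as stated in this article for vSphere 5.1, not of the actual VMkernel logic; the function name is hypothetical, and it deliberately covers only the 1GbE and 10GbE link speeds mentioned in that paragraph.

```python
def vmotion_cpu_reservation_pct(link_speeds_gbe):
    """Percentage of one CPU core reserved for vMotion, per the rule in the text.

    link_speeds_gbe: list of detected vMotion link speeds, each 1 or 10 (GbE).
    Hypothetical helper; other speeds are rejected because the paragraph
    it models does not describe them.
    """
    if not link_speeds_gbe:
        raise ValueError("at least one vMotion link is required")
    if any(speed not in (1, 10) for speed in link_speeds_gbe):
        raise ValueError("only 1GbE and 10GbE links are modeled here")
    if 10 in link_speeds_gbe:
        return 100  # a 10GbE link reserves a full core
    # 10% per 1GbE link, with a minimum desired reservation of 30%
    return max(30, 10 * len(link_speeds_gbe))


print(vmotion_cpu_reservation_pct([1]))           # 30
print(vmotion_cpu_reservation_pct([1, 1, 1, 1]))  # 40
print(vmotion_cpu_reservation_pct([10]))          # 100
```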

vMotion creates a (system) resource pool and sets the appropriate CPU reservation on the resource pool. It’s important to note that this is being done to the vMotion resource pool, which means that the reservation is shared across all vMotions happening on the host.

Using two 5Gb links results in a 40% CPU core reservation (the default 30% plus 10% for the extra link). However, this dynamic behavior might go unnoticed if you have enough spare CPU cycles in your source and destination hosts.

Word of caution

I hope these two examples show that there are multiple dynamic elements working together on various levels in your virtual infrastructure. Adjusting a setting might improve the performance of a specific use case, but to change the overall behavior, lots of settings have to be changed. Due to the lack of time and specific information, correlating various settings is impossible for many of us most of the time. Therefore I would like to repeat my recommendation: please do not adjust advanced settings unless VMware support asks you to.

(Alternative) VM swap file locations Q&A – part 2

After writing the article “(Alternative) VM swap file locations Q&A” I received a lot of questions about the destination of the swapped pages. Reading back my article, I realized I didn’t do a good job of clarifying that part of the process.

Which network is used for copying swapped pages?

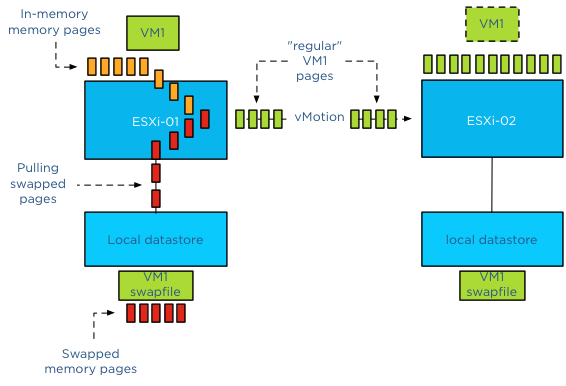

As mentioned in the previous post, the swap file itself is not copied over to the destination host, only the swapped pages themselves. Raphael Schitz (@hypervisor_fr) was the first to ask which network is used to copy over the swapped pages. The answer is the vMotion network.

The reason the vMotion network is used is that the source host running the active virtual machine pulls the swapped pages back into memory when migrating the memory pages to the destination host.

Are swapped pages on the source host swapped out on the destination host?

As the pages are copied out from the swap file to the destination host, swapped pages are copied into the stream of in-memory pages from the source host to the destination host. That means that the destination host is not aware which pages originate from the swap file and which pages come from memory; they are just memory pages that need to be stored and made available to the new virtual machine.

To describe the behavior in a different way, the source host pulls the swapped pages from disk before sending them over. Therefore the destination host sees a continuous stream of memory pages, unmarked and all equal, and stores them in memory.

What if the destination host is experiencing contention?

Well, it’s up to the destination host to decide which pages to swap out to disk. During a vMotion process, the destination VM starts out with a clean slate, meaning that the memory target is not determined by the source host but by the destination host. Memory targets are local memory scheduler metrics and thus not shared. The source host shares the percentage of active pages, but it’s the destination host’s memory scheduler that determines the appropriate swap target for the new virtual machine. It can push memory pages back out to its swap file as needed. The pages could be the same as the pages on the old host, but they can also be completely different pages.

What about compressed pages?

For every rule there is an exception and the exception is compressed pages. During a vMotion process the destination host will maintain the compressed pages by keeping them compressed. This behavior occurs even with an unshared swap migration.

Get notification of these blogs postings and more DRS and Storage DRS information by following me on Twitter: @frankdenneman

(Alternative) VM swap file locations Q&A – part 2

After writing the article “(Alternative) VM swap file locations Q&A” I received a lot of questions about the destination of the swapped pages and reading back my article I didn’t do a good job clarifying that part of the process.

Which network is used for copying swapped pages?

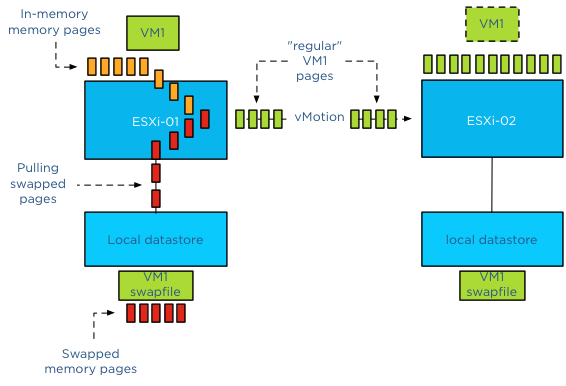

As mentioned in the previous post the swap file itself is not copied over to the destination host, but only the swapped pages itself. Raphael Schitz (@hypervisor_fr) was the first to ask, which network is used to copy over the swapped pages? The answer is vMotion network.

The reason why the vMotion network is used, is that the source host running the active virtual machine, pulls the swapped pages back in to memory when migrating the memory pages to the destination host.

Are swapped pages on the source host swapped out on the destination host?

As the pages are copied out from the swap file to the destination host, swapped pages are copied into the stream of the in-memory pages from the source host to the destination host. That means that the destination host is not aware which pages orginate from swap file and which pages come from in-memory, they are just memory pages that need to be stored and made available to the new virtual machine.

To describe the behavior in a different way, the source host pulls the swapped pages from disk before sending them over, therefor the destination host sees a continues stream of memory pages, unmarked, all equal and are therefor stored in memory by the destination host.

What if the destination host is experiencing contention?

Well it’s up to the destination host to decide which pages to swap out to disk. During a vMotion process, the destination VM starts out with a clean slate, meaning that the memory target is not determined by the source host but by the destination host. Memory targets are local memory schedule metrics and thus not shared. The source host shares the percentage of active pages but it’s the destination hosts’ memory scheduler that determines the appropriate swap target for the new virtual machine. It can possibly push out memory pages back to its swap file as needed. The pages could be the same as the pages on the old host, but they can also completely different pages.

What about compressed pages?

For every rule there is an exception and the exception is compressed pages. During a vMotion process the destination host will maintain the compressed pages by keeping them compressed. This behavior occurs even with an unshared swap migration.

Get notification of these blogs postings and more DRS and Storage DRS information by following me on Twitter: @frankdenneman

(Alternative) VM swap file locations Q&A

Lately I have received a couple of questions about swap file placement. As I mentioned in the article “Storage DRS and alternative swap file locations”, it is possible to configure the hosts in the DRS cluster to place the virtual machine swap files on an alternative datastore. Here are the questions I received and my answers:

Question 1: Will placing a swap file on a local datastore increase my vMotion time?

Yes. As the destination ESXi host cannot connect to the local datastore of the source host, the file has to be placed on a datastore that is available to the ESXi host running the incoming VM. Therefore the destination host creates a new swap file in its swap file destination. vMotion time will increase, as a new file needs to be created on the local datastore of the destination host and swapped memory pages potentially need to be copied.

Question 2: Is the swap file an empty file during creation or is it zeroed out?

When a swap file is created, it is an empty file equal in size to the virtual machine memory configuration. This file is empty and does not contain any zeros.

Please note that if the virtual machine is configured with a reservation, then the swap file will be an empty file with the size of (virtual machine memory configuration – VM memory reservation). For example, if a 4GB virtual machine is configured with a 1024MB memory reservation, the size of the swap file will be 3072MB.
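The swap file size calculation is simple enough to express as a one-line function. The sketch below only restates the formula above; the function name is hypothetical.

```python
def swap_file_size_mb(configured_memory_mb, reservation_mb=0):
    """Swap file size = configured memory minus memory reservation (both in MB)."""
    if not 0 <= reservation_mb <= configured_memory_mb:
        raise ValueError("reservation must be between 0 and the configured memory")
    return configured_memory_mb - reservation_mb


print(swap_file_size_mb(4096, 1024))  # 3072
print(swap_file_size_mb(4096))        # 4096, no reservation configured
```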

Question 3: What happens with the swap file placed on a non-shared datastore during vMotion?

During vMotion, the destination host creates a new swap file in its swap file destination. If the source swap file contains swapped out pages, only those pages are copied over to the destination host.

Question 4: What happens if I have an inconsistent ESXi host configuration of local swap file locations in a DRS cluster?

When selecting the option “Datastore specified by host”, an alternative swap file location has to be configured on each host separately. If one host is not configured with an alternative location, then the swap file will be stored in the working directory of the virtual machine. When that virtual machine is moved to another host configured with an alternative swap file location, the contents of the swap file are copied over to the specified location, regardless of the fact that the destination host can connect to the swap file in the working directory.

Question 5: What happens if my specified alternative swap file location is full and I want to power-on a virtual machine?

If the alternative datastore does not have enough space, the VMkernel tries to store the VM swap file in the working directory of the virtual machine. You need to ensure that enough free space is available in the working directory; otherwise the VM is not allowed to power on.

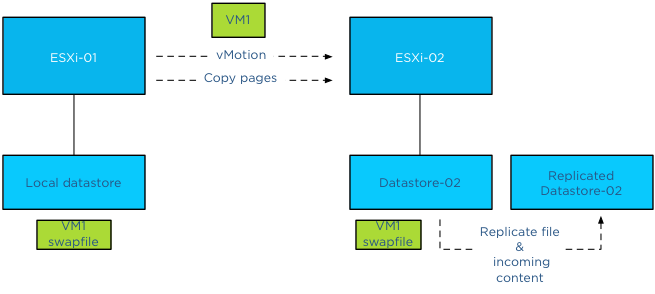
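The placement order described in questions 4 and 5 can be summarized in a short sketch. This is an illustration of the decision order only, not VMkernel code; the function and parameter names are hypothetical.

```python
def choose_swap_location(swap_size_mb, alt_free_mb, workdir_free_mb):
    """Return where the swap file would be placed, or None if power-on is refused.

    alt_free_mb: free space on the alternative datastore, or None when the host
                 has no alternative swap file location configured.
    workdir_free_mb: free space on the datastore holding the VM working directory.
    """
    if alt_free_mb is not None and alt_free_mb >= swap_size_mb:
        return "alternative datastore"
    if workdir_free_mb >= swap_size_mb:
        return "working directory"
    return None  # not enough space anywhere: the VM cannot be powered on


print(choose_swap_location(3072, alt_free_mb=10240, workdir_free_mb=500))
print(choose_swap_location(3072, alt_free_mb=1024, workdir_free_mb=8192))
print(choose_swap_location(3072, alt_free_mb=1024, workdir_free_mb=500))
```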

Question 6: Should I place my swap file on a replicated datastore?

It’s recommended to place the swap file on a datastore that has replication disabled. Replication of files increases vMotion time. When moving the contents of a swap file into a replicated datastore, the swap file and its contents need to be replicated to the replica datastore as well. If synchronous replication is used, each block/page copied from the source datastore to the destination datastore needs to wait until the destination datastore receives an acknowledgement from its replication partner datastore (the replica datastore).

Question 7: Should I place my swap file on a datastore with snapshots enabled?

To save storage space and design for the most efficient use of storage capacity, it is recommended not to place the swap files on a datastore with snapshots enabled. The VMkernel places pages in a swap file if there is memory pressure, caused either by an overcommitted state or by the virtual machine requiring more memory than its configured memory limit. It only retrieves memory from the swap file if it requires that particular page. The VMkernel will not transfer all the pages out of the swap file when the memory pressure on the host is resolved. It keeps unused swapped out pages in the swap file, as transferring unused pages does nothing more than create system overhead. This means that a swapped out page could stay there until the virtual machine is powered off. Snapshotting idle and unused pages could reduce the pool’s capacity available for snapshotting useful data.

Question 8: Should I place my swap file on a thin provisioned datastore (LUN)?

This is a tricky one and it all depends on the maturity of your management processes. As long as the thin provisioned datastore is adequately monitored for utilization and free space, and controls are in place that ensure sufficient free space is available to cope with bursts of memory use, then it could be a viable possibility.

The reason for the hesitation is the impact a thin provisioned datastore has on the continuity of the virtual machine.

Placement of swap files by the VMkernel is done at the logical level. The VMkernel determines if the swap file can be placed on the datastore based on its file size. That means that it checks the free space of a datastore as reported by the ESX host, not by the storage array. However, the datastore could exist in a heavily over-provisioned storage pool.

Once the swap file is created, the VMkernel assumes it can store pages in the entire swap file (see question 2 for the swap file calculation). As the swap file is just an empty file until the VMkernel places a page in it, the swap file itself takes up little space on the thin provisioned datastore. Now this can go on for a long time and nothing will happen. But what if the total reservation consumed, the memory overcommit level and workload spikes on the ESXi host layer are not correlated with the available space in the thin provisioned storage pool? Understand how much space the datastore could possibly obtain and calculate the maximum configured size of all existing swap files on the datastore to avoid an out-of-space condition.
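A quick way to reason about this is to add up the maximum size of every swap file on the datastore and compare it with the space the datastore can actually obtain from the storage pool. The sketch below assumes the swap file formula from question 2; the function names and the sample numbers are hypothetical.

```python
def max_swap_demand_mb(vms):
    """Worst-case space needed if every swap file fills up completely.

    vms: iterable of (configured_memory_mb, reservation_mb) tuples.
    """
    return sum(configured - reserved for configured, reserved in vms)


def thin_datastore_at_risk(vms, obtainable_space_mb):
    """True if fully used swap files would exceed the space the datastore can obtain."""
    return max_swap_demand_mb(vms) > obtainable_space_mb


# Made-up inventory: (configured memory, memory reservation) in MB
vms = [(4096, 1024), (8192, 0), (2048, 2048)]
print(max_swap_demand_mb(vms))             # 11264
print(thin_datastore_at_risk(vms, 10240))  # True
```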
