Lately I have received a couple of questions about swap file placement. As I mentioned in the article “Storage DRS and alternative swap file locations”, it is possible to configure the hosts in the DRS cluster to place the virtual machine swap files on an alternative datastore. Here are the questions I received and my answers:

Question 1: Will placing a swap file on a local datastore increase my vMotion time?

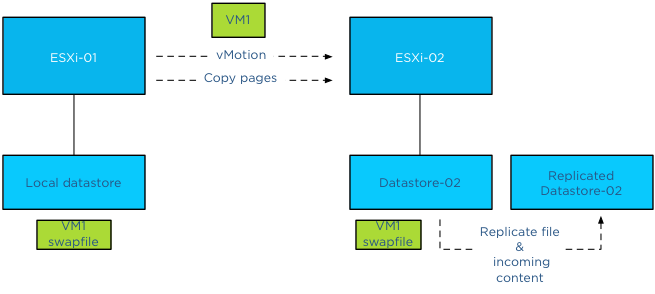

Yes. Because the destination ESXi host cannot connect to the source host's local datastore, the swap file has to be placed on a datastore that is available to the ESXi host running the incoming VM. Therefore, the destination host creates a new swap file in its own swap file location. vMotion time increases because a new file needs to be created on the local datastore of the destination host and swapped-out memory pages potentially need to be copied.

Question 2: Is the swap file an empty file during creation or is it zeroed out?

When a swap file is created, it is an empty file equal in size to the virtual machine memory configuration. The file is not zeroed out; it does not contain any zeros.

Please note that if the virtual machine is configured with a memory reservation, the swap file will be an empty file with the size of (virtual machine memory configuration – VM memory reservation). For example, if a 4 GB virtual machine is configured with a 1024 MB memory reservation, the size of the swap file will be 3072 MB.
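The sizing rule is plain arithmetic. The minimal Python sketch below encodes only what is stated above; `swap_file_size_mb` is a hypothetical helper for illustration, not a VMware API.

```python
def swap_file_size_mb(configured_memory_mb: int, reservation_mb: int = 0) -> int:
    """Swap file size: configured memory minus the memory reservation."""
    if not 0 <= reservation_mb <= configured_memory_mb:
        raise ValueError("reservation must be between 0 and the configured memory size")
    return configured_memory_mb - reservation_mb

# The 4 GB virtual machine with a 1024 MB reservation from the example above
print(swap_file_size_mb(4096, 1024))  # 3072
```

A fully reserved virtual machine therefore ends up with a zero-sized swap file, while a virtual machine without a reservation gets a swap file as large as its configured memory.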

Question 3: What happens with the swap file placed on a non-shared datastore during vMotion?

During vMotion, the destination host creates a new swap file in its swap file destination. If the source swap file contains swapped out pages, only those pages are copied over to the destination host.

Question 4: What happens if I have an inconsistent ESXi host configuration of local swap file locations in a DRS cluster?

When selecting the option “Datastore specified by host”, an alternative swap file location has to be configured on each host separately. If one host is not configured with an alternative location, the swap file is stored in the working directory of the virtual machine. When that virtual machine is moved to another host configured with an alternative swap file location, the contents of the swap file are copied over to the specified location, even though the destination host can connect to the swap file in the working directory.
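The placement behavior in this answer can be modelled as a small function. This is a simplified sketch under the assumption that a new swap file is created whenever the destination host's swap location differs from the current one; the host names, datastore names and helpers are hypothetical, and real vMotion handles more cases than this.

```python
from typing import Optional

# Hypothetical marker for "store the swap file with the VM" (its working directory)
WORKING_DIR = "vm-working-directory"

def swap_location(host_alt_datastore: Optional[str]) -> str:
    """Swap location a host uses under 'Datastore specified by host'.

    A host without an alternative location configured falls back to the
    virtual machine's working directory.
    """
    return host_alt_datastore or WORKING_DIR

def vmotion_moves_swap(src_alt: Optional[str], dst_alt: Optional[str]) -> bool:
    """Simplified model: the destination creates a new swap file (and swapped-out
    pages are copied) whenever its swap location differs from the current one,
    even if it could reach the existing file in the working directory."""
    return swap_location(src_alt) != swap_location(dst_alt)

# esx01 has no alternative location, esx02 uses its local datastore
print(vmotion_moves_swap(None, "esx02-local"))  # True
print(vmotion_moves_swap(None, None))           # False
```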

Question 5: What happens if my specified alternative swap file location is full and I want to power-on a virtual machine?

If the alternative datastore does not have enough space, the VMkernel tries to store the VM swap file in the working directory of the virtual machine. You need to ensure enough free space is available in the working directory; otherwise the VM cannot be powered on.
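The fallback order described above can be sketched as follows. The function and its inputs are hypothetical illustrations of the described behavior; the VMkernel does not expose its checks like this.

```python
from typing import Optional

def choose_swap_target(swap_size_mb: int,
                       alt_free_mb: Optional[int],
                       workdir_free_mb: int) -> Optional[str]:
    """Return where the swap file goes, or None if the power-on is refused.

    alt_free_mb is None when the host has no alternative location configured.
    """
    if alt_free_mb is not None and alt_free_mb >= swap_size_mb:
        return "alternative datastore"
    if workdir_free_mb >= swap_size_mb:
        return "working directory"
    return None  # not enough space anywhere: the VM cannot be powered on

print(choose_swap_target(3072, alt_free_mb=1000, workdir_free_mb=5000))  # working directory
```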

Question 6: Should I place my swap file on a replicated datastore?

It is recommended to place the swap file on a datastore that has replication disabled, because replication of files increases vMotion time. When the contents of a swap file are moved into a replicated datastore, the swap file and its contents need to be replicated to the replica datastore as well. If synchronous replication is used, each block/page copied from the source datastore to the destination datastore must wait until the destination datastore receives an acknowledgement from its replication partner (the replica datastore).

Question 7: Should I place my swap file on a datastore with snapshots enabled?

To save storage space and design for the most efficient use of storage capacity, it is recommended not to place swap files on a datastore with snapshots enabled. The VMkernel places pages in a swap file if there is memory pressure, either because the host is in an overcommitted state or because the virtual machine requires more memory than its configured memory limit. It only retrieves a page from the swap file when it requires that particular page. The VMkernel does not transfer all the pages out of the swap file once the memory pressure on the host is resolved. It keeps unused swapped-out pages in the swap file, as transferring unused pages creates nothing more than system overhead. This means that a swapped-out page can remain in the swap file until the virtual machine is powered off. Snapshotting these idle and unused pages consumes pool capacity that could otherwise be used for snapshotting useful data.

Question 8: Should I place my swap file on a thin provisioned datastore (LUN)?

This is a tricky one, and it all depends on the maturity of your management processes. As long as the thin provisioned datastore is adequately monitored for utilization and free space, and controls are in place that ensure sufficient free space is available to cope with bursts of memory use, then it could be a viable option.

The reason for the hesitation is the impact a thin provisioned datastore can have on the continuity of the virtual machine.

Placement of swap files by the VMkernel is done at the logical level. The VMkernel determines whether the swap file can be placed on the datastore based on its file size. That means that it checks the free space of the datastore as reported by the ESXi host, not by the storage array. However, the datastore could reside in a heavily over-provisioned data pool.

Once the swap file is created, the VMkernel assumes it can store pages in the entire swap file (see question 2 for the swap file size calculation). As the swap file is just an empty file until the VMkernel places a page in it, the swap file itself takes up very little space on the thin provisioned datastore. This can go on for a long time without anything happening. But what if the total reservations consumed, the memory overcommit level and workload spikes on the ESXi host layer are not correlated with the available space in the thin provisioned storage pool? Understand how much space the datastore could possibly obtain, and calculate the maximum configured size of all existing swap files on the datastore to avoid an out-of-space condition.
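To make that calculation concrete, here is a sketch of the worst-case check. It assumes you can obtain the physical free space of the thin provisioned pool from your storage array's own tooling; the `VM` class, helper names and headroom factor are illustrative assumptions. Each virtual machine contributes its swap file size from question 2 (configured memory minus reservation).

```python
from dataclasses import dataclass

@dataclass
class VM:
    name: str
    memory_mb: int           # configured memory
    reservation_mb: int = 0  # memory reservation

def max_swap_footprint_mb(vms: list[VM]) -> int:
    """Worst case: every swap file on the datastore is completely filled."""
    return sum(vm.memory_mb - vm.reservation_mb for vm in vms)

def swap_fits_in_pool(vms: list[VM], pool_free_mb: int, headroom: float = 0.0) -> bool:
    """Compare the worst-case swap footprint (plus a safety margin) with the
    physical free space reported by the storage array, not by the ESXi host."""
    return pool_free_mb >= max_swap_footprint_mb(vms) * (1 + headroom)

vms = [VM("app01", 4096, 1024), VM("db01", 16384, 8192), VM("web01", 2048)]
print(max_swap_footprint_mb(vms))     # 13312
print(swap_fits_in_pool(vms, 12000))  # False
```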

(Alternative) VM swap file locations Q&A – part 2

Get notified of these blog postings and more DRS and Storage DRS information by following me on Twitter: @frankdenneman

vSphere 5.1 DRS advanced option LimitVMsPerESXHost

During the Resource Management Group Discussion here at VMworld Barcelona, a customer asked me about limiting the number of VMs per host. vSphere 5.1 contains an advanced option on DRS clusters to do this. If the advanced option “LimitVMsPerESXHost” is set, DRS will not admit or migrate more VMs to a host than that number. For example, when LimitVMsPerESXHost is set to 40, each host allows up to 40 virtual machines.

No correction for existing violation

Please note that DRS will not correct any existing violation if the advanced option is set while virtual machines are active in the cluster. This means that if you set LimitVMsPerESXHost to 40 while 45 virtual machines are running on an ESXi host, DRS will not migrate virtual machines off that host. However, it does not allow any more virtual machines on the host: DRS will not allow any power-ons or migrations to the host, whether manual (by the administrator) or automatic (by DRS).
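The admission behavior described above, plus the HA exception covered in the next section, can be summarized in a hypothetical helper. It only models the documented behavior; it is not how DRS implements the check.

```python
from typing import Optional

def admits_vm(running_vms: int, limit: Optional[int], ha_failover: bool = False) -> bool:
    """Hypothetical model of the LimitVMsPerESXHost admission check.

    - limit None: the advanced option is not set.
    - Applies to power-ons and migrations, manual or automatic.
    - HA failover does not honor the DRS setting.
    - Nothing here evicts VMs from a host that is already above the limit.
    """
    if ha_failover or limit is None:
        return True
    return running_vms < limit

# A host already running 45 VMs with the limit set to 40:
print(admits_vm(45, 40))                    # False: no new power-ons or migrations
print(admits_vm(45, 40, ha_failover=True))  # True: HA ignores the setting
```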

High Availability

As this is a DRS cluster setting, HA will not honor it during a host failover operation. This means that HA can power on as many virtual machines on a host as it deems necessary. This avoids a denial of service in which virtual machines cannot be powered on because “LimitVMsPerESXHost” is set too conservatively.

Impact on load balancing

Please be aware that this setting can impact VM happiness, as it can restrict DRS in finding a balance with regard to CPU and memory distribution.

Use cases

This setting is primarily intended to contain the failure domain. A popular analogy to describe it is “limiting the number of eggs in one basket”. As virtual infrastructures are generally dynamic, try to find a setting that restricts the impact of a host failure without restricting the growth of the virtual machine population.

I’m really interested in feedback on this advanced setting, especially if you are considering implementing it, what your use case is, and whether you would like to see this setting developed further.


DRS and memory balancing in non-overcommitted clusters

First things first, I normally do not recommend changing advanced settings. Always try to tune system behavior by changing the settings provided by the user interface or try to understand system behavior and how it aligns with your design.

The “problem”

DRS load balancing recommendations could be sub-optimal in clusters where memory overcommitment is deliberately avoided.

Some customers prefer not to use memory overcommitment. Their clusters contain (just) enough memory capacity to ensure that the memory of all running virtual machines is backed by physical memory. Nowadays it is not uncommon to see virtual machines with a high amount of allocated (consumed) memory, and due to the use of large pages on hosts with recent CPU architectures, little to no memory is shared. A common scenario with this design is a usual host memory load of 80-85% consumed. In this situation, DRS recommendations may have a detrimental effect on performance, as DRS does not consider consumed memory but active memory.

DRS behavior

When analyzing the requirements of a virtual machine during load balancing operations, DRS calculates the memory demand of the virtual machine.

The main memory metric used by DRS to determine the memory demand is memory active. The active memory represents the working set of the virtual machine, which signifies the number of active pages in RAM. By using the working-set estimation, the memory scheduler determines which of the allocated memory pages are actively used by the virtual machine and which allocated pages are idle. To accommodate a sudden rapid increase of the working set, 25% of idle consumed memory is allowed. Memory demand also includes the virtual machine’s memory overhead.

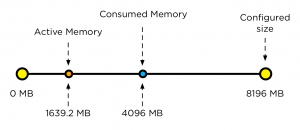

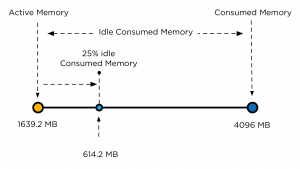

Let’s use an 8 GB (8192 MB) virtual machine as an example of how DRS calculates memory demand. The guest OS running in this virtual machine has touched 50% of its memory since it was booted, but only 20% of its memory is active. This means that the virtual machine has consumed 4096 MB and 1638.4 MB is active.

As mentioned, DRS includes a percentage of the idle consumed memory to accommodate a sudden increase in memory use. To calculate the idle consumed memory, the active memory (1638.4 MB) is subtracted from the consumed memory (4096 MB), resulting in 2457.6 MB. By default DRS includes 25% of the idle consumed memory, i.e. 614.4 MB.

The virtual machine has a memory overhead of 90 MB. The memory demand DRS uses in its load balancing calculation is therefore: 1638.4 MB + 614.4 MB + 90 MB = 2342.8 MB. This means that DRS will select a host that has 2342.8 MB available for this virtual machine and where the move improves the load balance of the cluster.
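The worked example can be reproduced with a short Python sketch. It assumes the simplified formula described in this article (active memory, plus a share of idle consumed memory, plus overhead) and is not DRS's actual implementation, which works on sampled statistics. The `idle_tax` parameter is modelled on the DRS IdleTax advanced option (default 75, i.e. 25% of idle consumed memory is included). Note that 20% of 8192 MB is 1638.4 MB, which gives a demand of 2342.8 MB.

```python
def drs_memory_demand_mb(consumed_mb: float, active_mb: float,
                         overhead_mb: float, idle_tax: int = 75) -> float:
    """Simplified DRS memory demand: active + (100 - IdleTax)% of idle consumed + overhead."""
    idle_consumed_mb = consumed_mb - active_mb
    idle_allowance_mb = idle_consumed_mb * (100 - idle_tax) / 100  # 25% by default
    return active_mb + idle_allowance_mb + overhead_mb

configured_mb = 8192                # 8 GB virtual machine
consumed_mb = configured_mb * 0.50  # touched since boot: 4096 MB
active_mb = configured_mb * 0.20    # working set: 1638.4 MB
print(round(drs_memory_demand_mb(consumed_mb, active_mb, overhead_mb=90), 1))  # 2342.8
```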

DRS and the cornerstone of virtualization: resource overcommitment

Resource sharing and overcommitment of resources are primary elements of virtualization. When designing a virtual infrastructure, it is a challenge to build the environment in such a way that it can handle virtual machine workloads while improving server utilization. Because not every workload is equal, resource allocation settings such as shares, reservations and limits can be applied to distinguish priority.

DRS is designed with this cornerstone in mind, and that sometimes makes DRS a hard act to follow. DRS is all about solving imbalance and providing enough resources to the virtual machines aligned to their demand. This means that DRS balances workload on demand and trusts its core value that overcommitment is allowed. It then relies on the host local scheduler to figure out the priority of the virtual machines. This behavior is sometimes not in line with the common perception of DRS.

A common perception is that DRS is about optimizing performance. This is partially true. As mentioned before, DRS looks at the demand of the VM and tries to match the activity of the virtual machines with the available resources in the cluster. Because it relies on resource allocation settings, it assumes that priority is defined for each virtual machine and that the host local schedulers can reclaim memory safely. For this reason, the DRS memory imbalance metric is tuned to focus on VM active memory to allow efficient sharing of host memory resources. This allows the cluster to run with less memory than the sum of all running virtual machine memory sizes, reclaiming idle consumed memory from lower-priority virtual machines for other virtual machines’ active workloads.

Unfortunately, DRS does not know when the environment is designed to avoid overcommitment. Based on its input, it can place a virtual machine on a host whose virtual machines have lots of idle consumed memory lying around, instigating memory reclamation. In most cases this reclamation is hardly noticeable due to the use of the balloon driver. However, when all hosts are highly utilized, ballooning might not be as responsive as required, forcing the kernel to compress and swap memory. This means that migrations for the sole purpose of balancing active memory are not useful in environments like these. If the target host memory is highly consumed, such migrations can cause a performance impact on the migrating virtual machine, as it waits to obtain memory, and on the other virtual machines on the target host, as they do processing to allow reclamation of their idle memory.

The solution? You might want to change the 25% idle consumed memory setting

The solution I recommend to start with is to lower the migration threshold by moving the slider to the left. This allows the DRS cluster to tolerate a higher imbalance and makes DRS more conservative when recommending migrations.

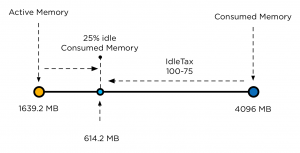

If this is not satisfactory, then I would suggest changing the DRS advanced option called IdleTax. Please note that this DRS advanced option is not the same setting as the memory kernel setting Mem.IdleTax.

The DRS IdleTax advanced option (default 75) controls how much consumed idle memory is added to active memory when estimating memory demand. The percentage of idle consumed memory that is added equals 100 - IdleTax; with the default value this is 100 - 75 = 25%.

This means that the smaller the value of IdleTax, the more consumed idle memory DRS adds to the active memory for load balancing.
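To see what lowering IdleTax does, the sketch below recomputes the demand of the 8 GB example virtual machine (4096 MB consumed, 1638.4 MB active, 90 MB overhead) for several values. It uses the simplified formula from this article, not DRS internals. IdleTax = 0 is shown only as a limiting case: the estimate then equals consumed memory plus overhead, which is what a non-overcommitted design implicitly assumes.

```python
def drs_memory_demand_mb(consumed_mb: float, active_mb: float,
                         overhead_mb: float, idle_tax: int = 75) -> float:
    """Simplified demand estimate: active + (100 - IdleTax)% of idle consumed + overhead."""
    idle_consumed_mb = consumed_mb - active_mb
    return active_mb + idle_consumed_mb * (100 - idle_tax) / 100 + overhead_mb

for idle_tax in (75, 50, 25, 0):
    demand = drs_memory_demand_mb(4096, 1638.4, 90, idle_tax)
    print(idle_tax, round(demand, 1))
```

This prints 2342.8 MB for the default of 75, 2957.2 MB for 50, 3571.6 MB for 25 and 4186.0 MB for 0, so each step down moves the estimate closer to the memory the virtual machine actually holds.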

Be aware that the value of IdleTax is a heuristic, tuned to facilitate memory overcommitment; tuning it to a lower value is appropriate for environments not using overcommitment. Note that the option is set per cluster, and would need to be changed for all DRS clusters as appropriate.

Again, try to use a lower migration threshold setting and monitor if this setting provides satisfying results before setting this advanced feature.

Considerations when modifying the individual VM automation level

Recently I received some questions about the behavior of DRS when the automation level of an individual virtual machine is modified. DRS allows customization of the automation level of individual virtual machines to override the DRS cluster automation level. The most common reason for modifying the automation level is to prevent DRS from moving a virtual machine automatically. Selecting an automation level other than the default cluster automation level or fully automated impacts (daily) operational procedures. It might impact cluster balance and/or resource availability if the operational procedures are not adjusted to align with the “new” behavior of DRS when dealing with non-default automation levels. Before continuing with the impact and caveats of non-default automation levels, let’s zoom in on their behavior.

Level of automation

There are five automation level modes:

• Fully Automated

• Partially Automated

• Manual

• Default

• Disabled

Each automation level behaves differently:

| Automation level | Initial placement | Load balancing |
| --- | --- | --- |
| Fully Automated | Automatic placement | Automatic execution of migration recommendations |
| Partially Automated | Automatic placement | Migration recommendations are displayed |
| Manual | Recommended host is displayed | Migration recommendations are displayed |
| Disabled | VM powered on on its registered host | No migration recommendations generated |

The default automation level is not listed in the table above as it aligns with the cluster automation level. When the automation level of the cluster is modified, the individual automation level is modified as well.
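The table and the Default rule can be captured as a lookup. The enum and helper below are hypothetical illustrations, not a vSphere API; they assume the cluster itself is set to fully automated, partially automated or manual.

```python
from enum import Enum

class Level(Enum):
    FULLY_AUTOMATED = "Fully Automated"
    PARTIALLY_AUTOMATED = "Partially Automated"
    MANUAL = "Manual"
    DISABLED = "Disabled"
    DEFAULT = "Default"

# (initial placement, load balancing) per the table above
BEHAVIOR = {
    Level.FULLY_AUTOMATED: ("automatic placement", "migrations executed automatically"),
    Level.PARTIALLY_AUTOMATED: ("automatic placement", "recommendations displayed"),
    Level.MANUAL: ("recommended host displayed", "recommendations displayed"),
    Level.DISABLED: ("powered on on registered host", "no recommendations generated"),
}

CLUSTER_LEVELS = {Level.FULLY_AUTOMATED, Level.PARTIALLY_AUTOMATED, Level.MANUAL}

def effective_level(vm_level: Level, cluster_level: Level) -> Level:
    """A VM set to Default follows the cluster automation level."""
    if cluster_level not in CLUSTER_LEVELS:
        raise ValueError("cluster level must be fully automated, partially automated or manual")
    return cluster_level if vm_level is Level.DEFAULT else vm_level

print(BEHAVIOR[effective_level(Level.DEFAULT, Level.MANUAL)])
```

Because a VM set to Default resolves to the cluster level at lookup time, changing the cluster level changes its behavior too, while VMs with an explicit override keep their own level.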

Disabled automation level

If the automation level of a virtual machine is set to disabled, DRS is disabled entirely for that virtual machine. DRS will not generate a migration recommendation or an initial placement recommendation, and the virtual machine is powered on on its registered host. A powered-on virtual machine with its automation level set to disabled still impacts the DRS load balancing calculation, as it consumes cluster resources. During the recommendation calculation, DRS ignores the virtual machines set to the disabled automation level and selects other virtual machines on that host. If DRS must choose between virtual machines set to an automatic automation level and virtual machines set to the manual automation level, DRS prefers the virtual machines set to automatic.

Manual automation level

When a virtual machine is configured with the manual automation level, DRS generates both initial placement and load balancing migration recommendations; however, the user needs to manually approve these recommendations.

Partially automated automation level

DRS automatically places a virtual machine set to partially automated; however, the migration recommendations it generates for that virtual machine require manual approval.

The impact of manual and partially automated automation levels on cluster load balance

When selecting any automation level other than disabled, DRS assumes that the user will manually apply the migration recommendations it generates. This means that DRS continues to include these virtual machines in the analysis of cluster balance and resource utilization. During the analysis DRS simulates virtual machine moves inside the cluster, and every virtual machine that is not disabled is included in the selection process of migration recommendations. If a particular move of a virtual machine offers the highest benefit at the lowest cost and risk, DRS generates a migration recommendation for this move. Because DRS is limited to a specific number of migrations, it might drop a recommendation for a virtual machine that provides nearly the same goodness. The problem with this scenario is that the recommended migration might be for a virtual machine configured with the manual automation level, while the virtual machine with near-equal goodness is configured with the default automation level. This would not matter if the user monitored each and every DRS invocation and reviewed the migration recommendations as they were issued, but that is unrealistic to expect, as DRS runs every 5 minutes.
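The following toy model illustrates how a manual virtual machine can take a limited recommendation slot. It is a deliberately simplified sketch: the goodness scores are made up, the ranking is assumed to be by goodness with a tie-break in favor of fully automated virtual machines, and the real DRS selection also weighs cost and risk.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    vm: str
    level: str       # "fully", "partially", "manual" or "disabled"
    goodness: float  # hypothetical score: how much the move improves balance

def select_moves(candidates: list[Candidate], max_moves: int) -> list[Candidate]:
    """Toy model: disabled VMs are excluded; the rest are ranked by goodness,
    preferring fully automated VMs only when goodness is tied."""
    eligible = [c for c in candidates if c.level != "disabled"]
    eligible.sort(key=lambda c: (c.goodness, c.level == "fully"), reverse=True)
    return eligible[:max_moves]

def executed_automatically(selected: list[Candidate]) -> list[str]:
    """Only fully automated recommendations run without an administrator."""
    return [c.vm for c in selected if c.level == "fully"]

candidates = [
    Candidate("vm-a", "manual", 0.91),
    Candidate("vm-b", "fully", 0.90),
    Candidate("vm-c", "disabled", 0.95),
]
picked = select_moves(candidates, max_moves=1)
print([c.vm for c in picked])          # ['vm-a']
print(executed_automatically(picked))  # []
```

With a single slot, the manual virtual machine wins by a hair, vm-b is dropped, and nothing happens until someone approves the recommendation for vm-a.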

I’ve seen a scenario where a group of virtual machines was configured with manual mode. It resulted in a host becoming a “trap” for these virtual machines during an overcommitted state. The user did not monitor the DRS tab in vCenter and missed the migration recommendations. This resulted in resource starvation for those virtual machines themselves but, even worse, it impacted multiple other virtual machines inside the cluster. Because DRS kept generating migration recommendations for the manual virtual machines, it dropped other suitable moves and could not achieve an optimal balance.

For more information about the maximum number of moves, please read this article. For more information about goodness values, please read this article.

Disabled versus partially automated and manual automation levels

Disabling DRS on a virtual machine has some negative impact on other operational processes and on resource availability, such as placing a host into maintenance mode or powering on a virtual machine after maintenance. Because DRS selects the registered host, the virtual machine might be powered on on a host with limited available resources while more suitable hosts are available. However, the disabled automation level avoids the scenario described in the previous paragraph.

The partially automated level automatically places the virtual machine on the most suitable host, while manual mode recommends placing the virtual machine on the most suitable host available. Partially automated offers the least operational overhead during placement but, just like the manual automation level, can introduce a lot of overhead during normal operations.

Risk versus reward

Selecting an automation level is largely a risk versus reward game. Setting the automation level to disabled might impact some operational procedures, but allows DRS to ignore those virtual machines when generating migration recommendations and to come up with alternative solutions that provide cluster balance as well. Setting the automation level to partially automated or manual offers better initial placement and a simpler maintenance mode process, but creates the risk of imbalance or resource starvation when the DRS tab in vCenter is left unmonitored.

Disabling MinGoodness and CostBenefit

Over the last couple of months I’ve seen recommendations popping up to change the MinGoodness and CostBenefit settings to zero on a DRS cluster (KB1017291). Usually, after a maintenance window in which hosts were placed in maintenance mode, the hosts remain unevenly loaded and DRS won’t migrate virtual machines to the less loaded hosts.

By disabling these adaptive algorithms, DRS considers every move and the virtual machines are distributed aggressively across the hosts. Although this sounds very appealing, the MinGoodness and CostBenefit calculations were created for a reason. Let’s explore the DRS algorithm and see why this setting should only be used temporarily and not as a permanent setting.

DRS load balance objectives

The primary objective of DRS is to provide virtual machines with their required resources. If a virtual machine is getting the resources it requests (its dynamic entitlement), there is no need to find a better spot. If a virtual machine does not get the resources specified in its dynamic entitlement, DRS will consider moving it, depending on additional factors.

This means that DRS allows situations in which the administrator feels the cluster is unbalanced, such as an uneven virtual machine count across the hosts in the cluster. I’ve seen situations where one host was running 80% of the load while the other hosts were running a couple of virtual machines each. This particular cluster consisted of big hosts, each containing 1 TB of memory, while the entire virtual machine memory footprint was no more than 800 GB. One host could easily run all virtual machines and provide the resources the virtual machines were requesting.

This particular scenario describes the biggest misunderstanding of DRS: DRS is not primarily designed to distribute virtual machines equally across the hosts in the cluster. It distributes the load across the resources as efficiently as possible to provide the best performance for the virtual machines. And this is the key to understanding why DRS does or does not generate migration recommendations: efficiency! Moving virtual machines around costs CPU cycles, memory resources and, to a smaller extent, datastore operations (stun/unstun of the virtual machines). In the most extreme case, load balancing itself can endanger the performance of virtual machines by consuming the resources they need in order to move virtual machines around. This is a worst-case scenario, but the main point is that the load balancing process costs resources that could otherwise be used by virtual machines providing their services, which is the primary reason the virtual infrastructure exists. To manage and contain the resource consumption of load balancing operations, the MinGoodness and CostBenefit calculations were created.

CostBenefit

DRS calculates the cost-benefit (and risk) of a move. Cost: how many resources does it take to move a virtual machine by vMotion? A virtual machine that is constantly updating its large memory footprint costs more CPU cycles and network traffic than a virtual machine with a medium memory footprint that has been idle for a while. Benefit: how many resources will the move free up on the source host, and what will the impact be on the normalized entitlement of the destination host? The normalized entitlement is the sum of the dynamic entitlements of all virtual machines running on that host divided by the capacity of the host. Risk: a prediction of how the workload might change on both the source and destination host, and whether the outcome of moving the candidate virtual machine remains positive when the workload changes.

MinGoodness

To understand which host the virtual machine must move to, DRS uses the normalized entitlement of the host as the key metric and will only consider hosts that have a lower normalized entitlement than the source host. MinGoodness helps DRS understand what effect the move has on the overall cluster imbalance.
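Normalized entitlement and the destination filter can be sketched as below. For simplicity the sketch assumes a single resource (CPU, in MHz) and made-up entitlement values; DRS itself considers both CPU and memory, and the helper names are hypothetical.

```python
def normalized_entitlement(vm_entitlements_mhz: list[float], capacity_mhz: float) -> float:
    """Sum of the dynamic entitlements of the VMs on a host divided by host capacity."""
    return sum(vm_entitlements_mhz) / capacity_mhz

ne_by_host = {
    "esx01": normalized_entitlement([4000, 6000, 8000], 24000),  # 0.75
    "esx02": normalized_entitlement([3000, 3000], 24000),        # 0.25
    "esx03": normalized_entitlement([9000, 9000], 24000),        # 0.75
}

def destination_candidates(source: str, ne: dict[str, float]) -> list[str]:
    """Only hosts with a lower normalized entitlement than the source qualify."""
    return [host for host, value in ne.items() if value < ne[source]]

print(destination_candidates("esx01", ne_by_host))  # ['esx02']
```

esx03 is excluded because its normalized entitlement equals that of the source, so moving load there would not reduce imbalance.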

DRS awards every move a CostBenefit and a MinGoodness rating, and these are linked together. DRS will only recommend a move with a negative CostBenefit rating if the move has a highly positive MinGoodness rating. Due to the metrics used, CostBenefit ratings are usually more conservative than MinGoodness ratings, so the cost or risk of a particular move can override the decision to move a virtual machine to a host with a lower normalized entitlement.

When MinGoodness and CostBenefit are set to zero, DRS calculates the cluster imbalance and recommends any move* that improves the balance of the normalized entitlement of the hosts within the cluster, without considering the resource cost involved. In oversized environments, where resource supply is abundant, setting these options temporarily should not create a problem. In environments where resource demand rivals resource supply, setting these options can create resource starvation.
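A toy filter shows why setting both options to zero changes the outcome. The thresholds (`min_goodness`, `high_goodness`) and scores are hypothetical and do not correspond to DRS's internal values; only the shape of the logic follows the description above: a non-negative cost/benefit score is required unless the goodness is highly positive, and with the filters disabled any balance-improving move qualifies, up to the move limit.

```python
from dataclasses import dataclass

@dataclass
class Move:
    vm: str
    goodness: float      # hypothetical: improvement in cluster balance (> 0 is better)
    cost_benefit: float  # hypothetical: benefit minus migration cost/risk

def recommend(moves: list[Move], max_moves: int, filters_enabled: bool = True,
              min_goodness: float = 0.05, high_goodness: float = 0.2) -> list[str]:
    if filters_enabled:
        # Require a minimum goodness, and a non-negative cost/benefit score
        # unless the move is highly beneficial for balance.
        ok = [m for m in moves
              if m.goodness >= min_goodness
              and (m.cost_benefit >= 0 or m.goodness >= high_goodness)]
    else:
        # MinGoodness = 0 and CostBenefit = 0: any balance-improving move qualifies.
        ok = [m for m in moves if m.goodness > 0]
    ok.sort(key=lambda m: m.goodness, reverse=True)
    return [m.vm for m in ok[:max_moves]]  # capped by the move limit

moves = [
    Move("vm-busy-large", 0.03, -0.4),
    Move("vm-idle", 0.10, 0.2),
    Move("vm-large", 0.25, -0.1),
    Move("vm-tiny", 0.01, 0.05),
]
print(recommend(moves, max_moves=4))                         # ['vm-large', 'vm-idle']
print(recommend(moves, max_moves=4, filters_enabled=False))  # all four, by goodness
```

With the filters disabled, the expensive move of the busy virtual machine and the nearly useless move of the tiny one are recommended as well, which is exactly the extra vMotion load the filters exist to avoid.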

*The number of recommendations is limited by the MaxMovesPerHost calculation. This article contains more information about MaxMovesPerHost.

Recommendation

My recommendation is to use this advanced option sparingly: only when host load is extremely unbalanced and DRS does not provide any migration recommendations, typically after the hosts in the cluster were placed in maintenance mode. Permanently activating this advanced option is similar to lobotomizing the DRS load balancing algorithm; it can do more harm in the long run, as you might see virtual machines in an almost constant state of vMotion.