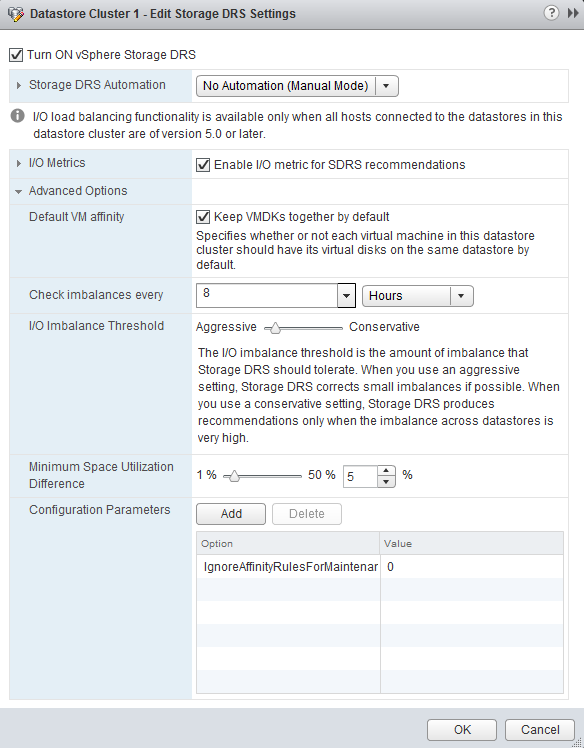

In vSphere 5.1 you can configure the default (anti) affinity rule of the datastore cluster via the user interface. Please note that this feature is only available via the web client. The vSphere client does not contain this option.

By default the Storage DRS applies an intra-VM vmdk affinity rule, forcing Storage DRS to place all the files and vmdk files of a virtual machine on a single datastore. By deselecting the option “Keep VMDKs together by default” the opposite becomes true and an Intra-VM anti-affinity rule is applied. This forces Storage DRS to place the VM files and each VDMK file on a separate datastore.

Please read the article: “Impact of intra-vm affinity rules on storage DRS” to understand the impact of both types of rules on load balancing.

Storage DRS datastore correlation detector

One of the cool new features of Storage DRS in vSphere 5.1 is the datastore correlation detector used by the SIOC injector. Storage arrays have many ways to configure datastores from among the available physical disk and controller resources in the array. Some arrays allow sharing of back-end disks and RAID groups across multiple datastores. When two datastores share backend resources, their performance characteristics are tied together: when one datastore experiences high latency, the other datastore will also experience similar high latency since IOs from both datastore are being serviced by the same disks. These datastores are considered “performance-related”. I/O load balancing operations in vSphere 5.1 avoid recommending migration of virtual machines between two performance-correlated datastores.

I/O load balancing algorithm

Storage DRS collects several virtual machine metrics to analyze the workload generated by the virtual machines within the datastore cluster. These metrics are aggregated in a workload model. To effectively distribute the different load of the virtual machines across the datastores, Storage DRS needs to understand the performance (latency) of each datastore. When a datastore violates its I/O load threshold, Storage DRS moves virtual machines out of the datastore. By linking workload models to device models, Storage DRS is able to select a datastore with a low I/O load when placing a virtual machine with a high I/O load during load balance operations.

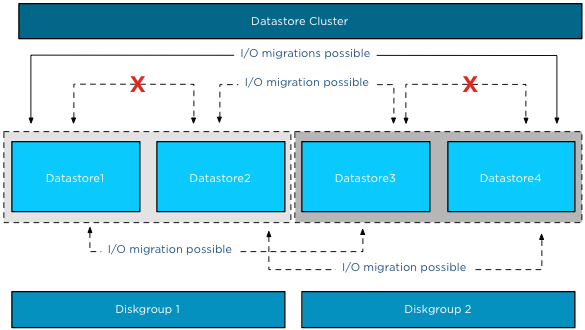

Performance related datastores

However if data is moved between datastores that are backed by the same disks, the move may not decrease the latency experienced on the source datastore as the same set of disks, spindles or RAID-groups service the destination datastore as well. I/O load balancing recommendations should avoid using two performance-correlated datastores, since moving a virtual machine from the source datastore to the destination datastore has no effect on the datastore latency. How does Storage DRS discover performance related datastores?

How does it work? The datastore correlation detector measures performance during isolation and when concurrent IOs are pushed to multiple datastores. The basic mechanism of correlation detector is rather straightforward: compare the overall latency when two datastores are being used alone in isolation and when there are concurrent IO streams on both of the datastores. If there is no performance correlation, the concurrent IO to the other datastore should have no effect. Contrariwise, if two datastores are performance correlated, then concurrent IO stream should amplify the average IO latency on both datastores. Please note that datastores will be checked for correlation on a regular basis. This allows Storage DRS to detect changes to the underlying storage configuration.

Example scenario

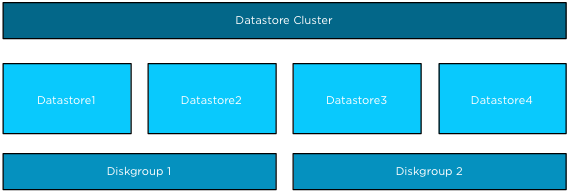

In this scenario Datastore1 and Datastore2 are backed by disk devices grouped in Diskgroup1, while Datastore3 and Datastore4 are backed by disk devices grouped in Diskgroup2. All four datastores belong to a single datastore cluster.

After SIOC has run the workload and device models on a datastore, SIOC picks a random datastore in the datastore cluster to check for correlations. If both datastores are idle, the datastore correlation detector uses the same workload to measure the average I/O latency in isolation and concurrent I/O mode.

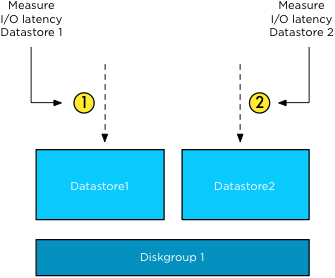

Isolation

The SIOC injector measures the average IO latency of Datastore1 in isolation. This means it measures the latency of the outstanding I/O of Datastore1 alone. Next, it measures the average IO latency of Datastore2 in isolation.

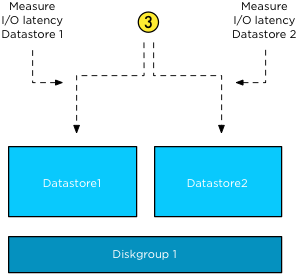

Concurrent I/Os

The first two steps are used to establish the baseline for each datastore. In the third step the SIOC injector sends concurrent I/O to both datastores simultaneously.

This results in the behavior that Storage DRS does not recommend any I/O load balancing operations between Datastore1 and 2 and Datastore3 and 4, but it can recommend for example to move virtual machines from Datastore1 to Datastore2 or from Datastore2 to Datastore3, etc. All moves are possible as long as the datastores are not correlated.

Enable Storage DRS on performance-correlated datastores?

When two datastores are marked as performance-correlated, Storage DRS does not generate IO load balancing recommendations between those two datastores. However Storage DRS can be used for initial placement and still generate recommendations to move virtual machines between two correlated datastores to address out of space situations or to correct rule violations. Please keep in mind that some arrays use a subset of disk out of a larger diskpool to back a single datastore. With these configurations, it appears that all disks in a diskpool back all the datastores but in reality they don’t. Therefor I recommend to set Storage DRS automation mode to manual and review the migration recommendations to understand if all datastores within the diskpool are performance-correlated.

VM templates and Storage DRS

Please note that Storage DRS cannot move VM templates via storage vMotion. This can impact load balancing operations or datastore maintenance mode operations. When initiating Datastore Maintenance mode, the following message is displayed:

As maintenance mode is commonly used for array migrations of datastore upgrade operations (VMFS-3 to VMFS-5), remember to convert the VM template to a virtual machine first before initiating maintenance mode.

Storage DRS enables SIOC on datastores only if I/O load balancing is enabled

Lately, I’ve received some comments why I don’t include SIOC in my articles when talking about space load balancing. Well, Storage DRS only enables SIOC on each datastore inside the datastore cluster if I/O load balancing is enabled. When you don’t enable I/O load balancing during the initial setup of the datastore cluster, SIOC is left disabled.

Keep in mind when I/O load balancing is enabled on the datastore cluster and you disable the I/O load balancing feature, SIOC remains enabled on all datastores within the cluster.

Limiting the number of Storage vMotions

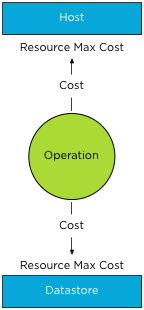

When enabling datastore maintenance mode, Storage DRS will move virtual machines out of the datastore as fast as it can. The number of virtual machines that can be migrated in or out of a datastore is 8. This is related to the concurrent migration limits of hosts, network and datastores. To manage and limit the number of concurrent migrations, either by vMotion or Storage vMotion, a cost and limit factor is applied. Although the term limit is used, a better description of limit is maximum cost.

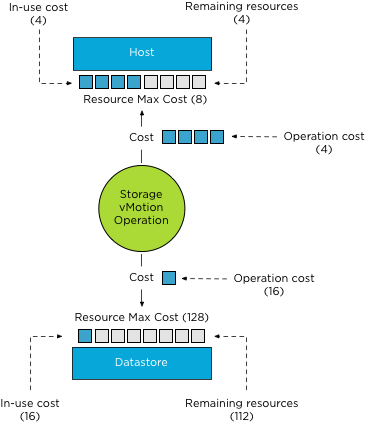

In order for a migration operation to be able to start, the cost cannot exceed the max cost (limit). A vMotion and Storage vMotion are considered operations. The ESXi host, network and datastore are considered resources. A resource has both a max cost and an in-use cost. When an operation is started, the in-use cost and the new operation cost cannot exceed the max cost.

The operation cost of a storage vMotion on a host is “4”, the max cost of a host is “8”. If one Storage vMotion operation is running, the in-use cost of the host resource is “4”, allowing one more Storage vMotion process to start without exceeding the host limit.

As a storage vMotion operation also hits the storage resource cost, the max cost and

in-use cost of the datastore needs to be factored in as well. The operation cost of a Storage vMotion for datastores is set to 16, the max cost of a datastore is 128. This means that 8 concurrent Storage vMotion operations can be executed on a datastore. These operations can be started on multiple hosts, not more than 2 storage vMotion from the same host due to the max cost of a Storage vMotion operation on the host level.

How to throttle the number of Storage vMotion operations?

To throttle the number of storage vMotion operations to reduce the IO hit on a datastore during maintenance mode, it preferable to reduce the max cost for provisioning operations to the datastore. Adjusting host costs is strongly discouraged. Host costs are defined as they are due to host resource limitation issues, adjusting host costs can impact other host functionality, unrelated to vMotion or Storage vMotion processes.

Adjusting the max cost per datastore can be done by editing the vpxd.cfg or via the advanced settings of the vCenter Server Settings in the administration view.

If done via the vpxd.cfg, the value vpxd.ResourceManager.MaxCostPerEsx41Ds is added as follows:

< config >

< vpxd >

< ResourceManager >

< MaxCostPerEsx41Ds > new value < /MaxCostPerEsx41Ds >

< /ResourceManager >

< /vpxd >

< /config >

As the max cost have not been increased since ESX 4.1, the value-name

Please remember to leave some room for vMotion when resizing the max cost of a datastore. The vMotion process has a datastore cost as well. During the stun/unstun of a virtual machine the vMotion process hits the datastore, the cost involved in this process is 1.

For example, Changing the

Please note that cost and max values are applied to each migration process, impact normal day to day DRS and Storage DRS load balancing operations as well as the manual vMotion and Storage vMotion operations occuring in the virtual infrastructure managed by the vCenter server.

As mentioned before adjusting the cost at the host side can be tricky as the costs of operation and limits are relative to each other and can even harm other host processes unrelated to migration processes. If you still have the urge to change the cost on the host, consider the impact on DRS! When increasing the cost of a Storage vMotion operation on the host, the available “slots” for vMotion operations are reduced. This might impact DRS load balancing efficiency when a storage vMotion process is active and should be avoided at all times.

Get notification of these blogs postings and more DRS and Storage DRS information by following me on Twitter: @frankdenneman