In its basic form Storage DRS can be used together with any array; however, there are a few combinations of storage array features and Storage DRS features that don’t mix easily. One of the most frequently asked questions is whether Storage DRS can work with array-based auto-tiering. The answer is yes: you can use the initial placement and out-of-space avoidance features that Storage DRS offers. However, it is not recommended to enable the I/O metric feature.

Modeling

The main goal of the I/O metric function, popularly called I/O load balancing, is to resolve imbalances in the performance delivered by the datastores in the datastore cluster. To avoid hotspots in the datastore cluster and decrease overall latency imbalance, Storage DRS I/O load balancing uses device modeling and virtual machine workload modeling. Device modeling helps Storage DRS understand the performance characteristics of the devices backing the datastores, while virtual machine workload modeling analyzes the virtual machine workloads running inside the datastore cluster. Both device and workload modeling help Storage DRS assess the improvement in I/O latency that a virtual machine migration will achieve.

Device modeling and the SIOC injector

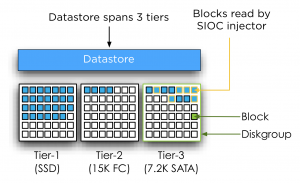

To understand and learn the performance of the devices backing the datastore, Storage DRS uses the Storage IO Control (SIOC) workload injector. To characterize the datastore, the SIOC injector opens and reads random blocks of the datastore. As the SIOC injector does not open every block backing the datastore, we cannot ensure that it opens an identical number of blocks on each performance tier. When multiple performance tiers of disk back the datastore, there is a possibility that the SIOC injector opens blocks located on disks of similar speed, either slow or fast, while the datastore is primarily backed by disks with a different performance level. Let’s use an example to clarify this further.

In the diagram pictured above, the SIOC injector opens random blocks and performs its tests. Unfortunately, the blocks it opens all reside on the same disks; it doesn’t open blocks on the other disks. Although most of the blocks backing the datastore are located on faster disks, Storage DRS device modeling will characterize this datastore as performing like 7.2K SATA disks. This inaccurate characterization of datastore performance might lead to an incorrect performance assessment, and Storage DRS might withhold a migration recommendation even though sufficient performance is available.
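The sampling problem can be illustrated with a small simulation. This is only a sketch: the tier latencies, the block distribution and the sample size are invented for illustration, and the real SIOC injector does not work on a Python list of blocks. It shows how an estimate based on a handful of random blocks can land far from the true average of a tiered datastore.

```python
import random

# Hypothetical per-tier read latencies in milliseconds (illustrative values only).
TIER_LATENCY_MS = {"ssd": 1.0, "fc_15k": 6.0, "sata_7k2": 12.0}


def true_mean_latency(block_tiers):
    """Average latency over every block backing the datastore."""
    return sum(TIER_LATENCY_MS[t] for t in block_tiers) / len(block_tiers)


def sampled_latency(block_tiers, sample_size, rng):
    """Average latency over a random subset of blocks (stand-in for the injector)."""
    sample = rng.sample(block_tiers, sample_size)
    return sum(TIER_LATENCY_MS[t] for t in sample) / sample_size


# A datastore that is mostly backed by fast disks, with a small SATA tail.
blocks = ["ssd"] * 700 + ["fc_15k"] * 200 + ["sata_7k2"] * 100

rng = random.Random(42)  # fixed seed so the run is repeatable
estimates = [sampled_latency(blocks, 5, rng) for _ in range(1000)]

print(f"true mean latency: {true_mean_latency(blocks):.2f} ms")
print(f"lowest estimate:   {min(estimates):.2f} ms")
print(f"highest estimate:  {max(estimates):.2f} ms")
```

The spread between the lowest and highest estimate is the point: the same datastore can look like an SSD device in one run and like a much slower device in another.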

Segment migration triggered by auto-tiering algorithms

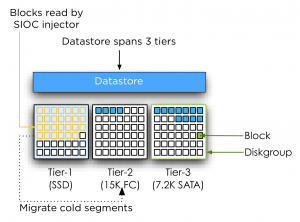

By using the SIOC injector, Storage DRS evaluates the performance of the disks. Auto-tiering solutions, however, migrate LUN segments (chunks) to different disk types based on the usage pattern. Hot segments (frequently accessed) typically move to faster disks while cold segments move to slower disks. Depending on the array type and vendor, different kinds of policies and thresholds apply to these migrations. By default Storage DRS is invoked every 8 hours and requires performance data covering more than 16 hours to generate I/O load balancing decisions. Multiple storage vendors offer auto-tiering solutions, each using different time cycles to collect and analyze workload data before moving LUN segments. Some auto-tiering solutions move chunks based on real-time workload, while other arrays move chunks after collecting performance data for 24 hours. This means that auto-tiering solutions alter the landscape in which the SIOC injector performs its tests. Let’s turn to another scenario for clarification.

In this scenario, the SIOC injector is primarily opening blocks located in the Tier-1 disk group belonging to the datastore. As the datastore isn’t using these segments that often (they are cold), the auto-tiering solution decides to migrate them to a lower tier. In this case the segments end up on 15K disks instead of SSD devices.

Storage DRS expects the behavior of the device to remain the same for at least 16 hours and bases its calculations on that assumption. Auto-tiering solutions might change the underlying structure of the datastore according to their own algorithms and timescales, which conflicts with the Storage DRS calculations.

The misalignment between Storage DRS invocations and auto-tiering cycles makes it unpredictable when LUN segments will be moved, potentially colliding with Storage DRS calculations and recommendations. This, together with the fact that auto-tiering is transparent to Storage DRS and that there is no communication between Storage DRS and the auto-tiering algorithms, forms the basis of the recommendation to disable the I/O metric on datastore clusters backed by devices participating in an auto-tiering solution. Always verify these recommendations with your storage vendor.
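To get a feel for how often the two cycles collide, here is a rough sketch. It only uses the 8-hour invocation interval and the 16-hour history requirement mentioned above; the function names, the 72-hour horizon and the idea of modeling relocations as fixed-interval events are assumptions made for illustration, not a description of any array.

```python
SDRS_INVOCATION_INTERVAL_H = 8   # default invocation period stated in the text
SDRS_HISTORY_WINDOW_H = 16       # performance history the text says is required


def relocations_in_window(window_end_h, relocation_interval_h):
    """Count relocation cycles inside the history window that ends at
    window_end_h (hours since an arbitrary start)."""
    window_start_h = window_end_h - SDRS_HISTORY_WINDOW_H
    count = 0
    t = relocation_interval_h
    while t <= window_end_h:
        if t > window_start_h:
            count += 1
        t += relocation_interval_h
    return count


def disturbed_invocations(relocation_interval_h, horizon_h=72):
    """Invocation times (in hours) whose history window saw at least one
    relocation cycle, i.e. where the device changed under the model."""
    times = range(SDRS_HISTORY_WINDOW_H, horizon_h + 1, SDRS_INVOCATION_INTERVAL_H)
    return [t for t in times if relocations_in_window(t, relocation_interval_h) > 0]


print(disturbed_invocations(24))  # array that relocates once every 24 hours
print(disturbed_invocations(1))   # array that relocates (almost) continuously
```

Even with a relocation cycle of only once per day, more than half of the invocations in this toy timeline are based on a window in which the backing disks changed.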

Additional information:

Duncan wrote an excellent article about the Storage IO Control workload injector, which can be found here. More info on device modeling and load balancing can be found in the article impact of load balancing on datastore cluster configuration.

Note: This article is describing Storage DRS behavior based on vSphere 5.

(Storage) DRS (anti-) affinity rule types and HA interoperability

Lately I have received many questions about the interoperability between HA and affinity rules of DRS and Storage DRS. I’ve created a table listing the (anti-) affinity rules available in a vSphere 5.0 environment.

| Technology | Type | Affinity | Anti-Affinity | Respected by VMware HA |
| --- | --- | --- | --- | --- |
| DRS | VM-VM | Keep virtual machines together | Separate virtual machines | No |
| DRS | VM-Host | Should run on hosts in group | Should not run on hosts in group | No |
| DRS | VM-Host | Must run on hosts in group | Must not run on hosts in group | Yes |
| SDRS | Intra-VM | VMDK affinity | VMDK anti-affinity | N/A |
| SDRS | VM-VM | Not available | VM Anti-Affinity | N/A |

As the table shows, HA will ignore most of the (anti-) affinity rules in its placement operations after a host failure except the “Virtual Machine to Host – Must rules”. Every type of rule is part of the DRS ecosystem and exists in the vCenter database only. A restart of a virtual machine performed by HA is a host-level operation and HA does not consult the vCenter database before powering-on a virtual machine.

Virtual machine compatibility list

HA respects the “must-rules” because of DRS’s interaction with the host-local “compatlist” file. This file contains a compatibility info matrix for every HA-protected virtual machine and lists all the hosts with which the virtual machine is compatible. This means that HA will only restart a virtual machine on hosts listed in the compatlist file.

DRS Virtual machine to host rule

A “virtual machine to hosts” rule requires the creation of a Host DRS Group. This cluster host group is usually a subset of the hosts that are members of the HA and DRS cluster. Because of the intended use case for must-rules, such as honoring ISV licensing models, the cluster host group associated with a must-rule is pushed down directly into the compatlist.

Note

Please be aware that the compatibility list file is used by all types of power-on operations and load-balancing operations. When a virtual machine is powered on, whether manually (by an admin) or by HA, the compatibility list is checked. When DRS performs a load-balancing operation or maintenance mode operation, it checks the compatibility list. This means that no type of operation can override must-type affinity rules. For more information about when to use must and should rules, please read this article: Should or Must VM-Host affinity rules.
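The effect of a must-rule on an HA restart can be sketched as a simple set lookup. The data structures and function names below are hypothetical and only model the must-rule aspect described above; they do not reflect the actual format of the compatlist file or any other compatibility factor it may contain.

```python
def build_compat_list(vms, cluster_hosts, must_run_on):
    """Map every VM to the set of hosts it is allowed to run on.

    must_run_on maps a VM name to the host group of its must-rule;
    a VM without a must-rule is compatible with every cluster host.
    """
    compat = {}
    for vm in vms:
        allowed = must_run_on.get(vm, cluster_hosts)
        compat[vm] = set(allowed) & set(cluster_hosts)
    return compat


def restart_candidates(vm, failed_host, compat_list):
    """Hosts HA may pick for a restart: compatible hosts minus the failed one."""
    return sorted(compat_list[vm] - {failed_host})


hosts = ["esx01", "esx02", "esx03", "esx04"]
compat = build_compat_list(
    vms=["oracle-db", "web-01"],
    cluster_hosts=hosts,
    must_run_on={"oracle-db": ["esx01", "esx02"]},  # e.g. licensed hosts only
)

print(restart_candidates("oracle-db", "esx01", compat))
print(restart_candidates("web-01", "esx01", compat))
```

Because the restriction lives in the lookup table itself, every operation that consults the table is bound by it, which matches the observation that no operation can override a must-rule.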

Constraint violations

After HA powers on a virtual machine, the placement might violate a VM-VM or VM-Host should (anti-) affinity rule. DRS will correct this constraint violation during its next invocation and restore “peace” to the cluster.

Storage DRS (anti-) affinity rules

When HA restarts a virtual machine, it will not move the virtual machine files. Therefore the creation of Storage DRS (anti-) affinity rules does not affect virtual machine placement after a host failure.

Storage DRS initial placement and datastore cluster defragmentation

Recently an interesting question was raised about what happens if enough free space is available in the datastore cluster as a whole, but no single datastore has enough space during placement of a virtual machine. This scenario is often referred to as a fragmented datastore cluster.

The short answer is that if not enough space is available on any given datastore, Storage DRS starts to consider migrating existing virtual machines off a datastore to free up space. This article zooms in on the process of generating such an initial placement recommendation.

Rules and boundaries within a datastore cluster

Storage DRS will not violate the configured space utilization and IO latency thresholds of the datastore cluster. This means that Storage DRS will place virtual machines that consume space up to the configured space utilization threshold. For example, setting the space utilization threshold to 80% on a 1000GB datastore allows Storage DRS to place virtual machines that consume up to 800GB of space. Be aware of this when monitoring the free space available on the datastores in the cluster.

When creating or moving a virtual machine in the datastore cluster, the first thing to consider is the affinity rules. By default virtual machine files are kept together in the working directory of the virtual machine. If the virtual machine needs to be migrated, all the files inside the virtual machine’s working directory are moved. This article features the use of the default affinity rule; however, if the default affinity rule is disabled, Storage DRS will move the working directory and virtual disks separately, allowing Storage DRS to distribute the virtual disk files on a more granular level.
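The threshold arithmetic is simple enough to express in a few lines. This is a minimal sketch with made-up function names, not a Storage DRS API; it only restates the percentage calculation from the example above.

```python
def placement_headroom_gb(capacity_gb, used_gb, threshold_pct):
    """Space that can still be placed before the utilization threshold is hit."""
    return max(0.0, capacity_gb * threshold_pct / 100 - used_gb)


def fits(capacity_gb, used_gb, vm_size_gb, threshold_pct=80):
    """True if the VM can be placed without crossing the threshold."""
    return vm_size_gb <= placement_headroom_gb(capacity_gb, used_gb, threshold_pct)


# An empty 1000GB datastore with an 80% threshold accepts up to 800GB.
print(placement_headroom_gb(1000, 0, 80))

# With 600GB already consumed, only 200GB of headroom is left,
# even though 400GB of raw free space is reported.
print(fits(1000, 600, 150))
print(fits(1000, 600, 250))
```

The last two calls show why raw free space is a misleading number to monitor: the 250GB virtual machine is rejected although the datastore reports 400GB free.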

Prerequisite migrations

During initial placement, if no datastore with enough space is available in the datastore cluster, Storage DRS starts by searching for alternative locations for the existing virtual machines on the datastores and attempts to place them on other datastores one by one. As a result Storage DRS may generate sets of migration recommendations for existing virtual machines that allow placement of the new virtual machine. These migrations are called prerequisite migrations; combined with the placement operation, they form a recommendation set.

Depth of recursion

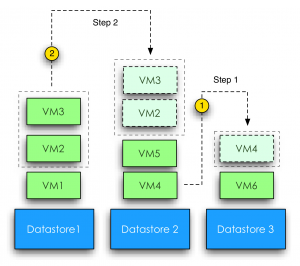

Storage DRS uses a recursive algorithm to search for alternative placement combinations. To keep Storage DRS from trying an extremely high number of combinations of virtual machine migrations, the “depth of recursion” is limited to 2 steps. What defines a step and what counts towards a step? A step is best defined as a set of migrations out of a datastore in preparation for (or to make room for) another migration into that same datastore. A step can contain one VMDK, but can also contain multiple virtual machines with multiple virtual disks attached. In some cases, room must first be created on the target datastore by moving a virtual machine out to another datastore, which results in an extra step. The following diagram visualizes the process.

Storage DRS has calculated that a new virtual machine can be placed on Datastore 1 if VM2 and VM3 are migrated to Datastore 2. However, placing these two virtual machines on Datastore 2 would violate its space utilization threshold, so room must be created there first. VM4 is therefore moved out of Datastore 2 to create space. This results in Step 1, moving VM4 out to Datastore 3, followed by Step 2, moving VM2 and VM3 to Datastore 2, and finally the placement of the new virtual machine on Datastore 1.

Storage DRS stops its search if no combination of moves within 2 steps satisfies the storage requirement of an initial placement. An advanced setting can be used to change the number of steps used by the search. As always, changing the defaults is strongly discouraged, as many hours of testing have been invested in finding the setting that offers good performance while minimizing the impact of the operation. If you have a strong case for changing the number of steps, set the advanced configuration option “MaxRecursionDepth”. The default value is 1; the maximum value is 5. Because the algorithm starts counting at 0, the default value of 1 allows 2 steps.
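Since the off-by-one between the setting and the number of steps is easy to get wrong, here is the mapping as a tiny sketch. The helper name is made up, and the lower bound of 0 is an assumption derived from the statement that counting starts at 0.

```python
DEFAULT_MAX_RECURSION_DEPTH = 1  # default stated in the text
HIGHEST_MAX_RECURSION_DEPTH = 5  # maximum stated in the text


def allowed_steps(max_recursion_depth=DEFAULT_MAX_RECURSION_DEPTH):
    """Number of prerequisite-migration steps a MaxRecursionDepth value allows."""
    # Assumption: 0 is the lowest valid value because counting starts at 0.
    if not 0 <= max_recursion_depth <= HIGHEST_MAX_RECURSION_DEPTH:
        raise ValueError("MaxRecursionDepth must be between 0 and 5")
    return max_recursion_depth + 1


print(allowed_steps())   # default setting
print(allowed_steps(5))  # highest setting
```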

Goodness value

Storage DRS cycles through all the datastores in the datastore cluster and initiates a search for space on each datastore. A search generates a set of prerequisite migrations if it can provide space that allows the virtual machine placement within the depth of recursion. Storage DRS evaluates the generated sets and awards each set a goodness value. The set with the lowest cost (i.e. the fewest migrations) is the preferred migration recommendation and is shown at the top of the list. Let’s explore this a bit more by using a scenario with 3 datastores.

Scenario

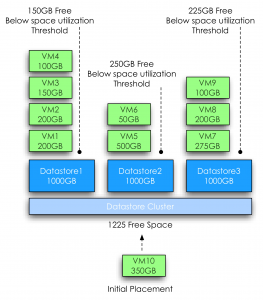

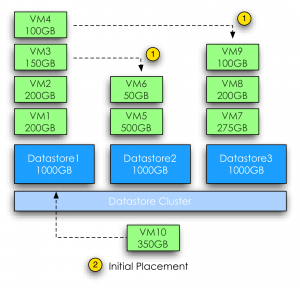

The datastore cluster contains 3 datastores; each datastore has a size of 1000GB and contains multiple virtual machines of various sizes. The space consumed on the datastores ranges from 550GB to 650GB, while the space utilization threshold is set to 80%. At this point the administrator creates a virtual machine that requests 350GB of space.

Although the datastore cluster itself contains 1225GB of free space, Storage DRS will not go forward and place the virtual machine on any of the three datastores, because placing the virtual machine will violate the space utilization threshold of the datastores.
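The numbers in this scenario can be checked with a few lines of arithmetic. The per-datastore consumption values below are hypothetical; they were picked to fall in the stated 550GB to 650GB range and to add up to the stated 1225GB of free space.

```python
CAPACITY_GB = 1000
THRESHOLD_PCT = 80
NEW_VM_GB = 350

# Hypothetical consumption per datastore, chosen to match the stated totals.
used_gb = {"Datastore 1": 575, "Datastore 2": 550, "Datastore 3": 650}

# Raw free space across the whole datastore cluster.
cluster_free_gb = sum(CAPACITY_GB - used for used in used_gb.values())

# Space that can still be placed on each datastore below the 80% threshold.
headroom_gb = {
    name: CAPACITY_GB * THRESHOLD_PCT // 100 - used
    for name, used in used_gb.items()
}

# Datastores that could take the new VM without any prerequisite migration.
placeable = [name for name, room in headroom_gb.items() if room >= NEW_VM_GB]

print(cluster_free_gb)
print(headroom_gb)
print(placeable)
```

The cluster has more than three times the requested space in total, yet the list of datastores that can accept the virtual machine directly is empty, which is what triggers the search for prerequisite migrations.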

Search process

As each ESXi host provides information about the overall datastore utilization and the VMDK statistics, Storage DRS has a clear overview of the most up-to-date situation and uses these statistics as input for its search. In the first step it simulates all the migrations necessary to fit VM10 on Datastore 1. The prerequisite migration process with the fewest migrations to fit the virtual machine on Datastore 1 looks as follows:

Step 1: VM3 from Datastore 1 to Datastore 2

Step 1: VM4 from Datastore 1 to Datastore 3

Place new virtual machine on Datastore 1

Although VM3 and VM4 are each moved out to a different datastore, both migrations are counted as a one step prerequisite migration as both virtual machines are migrated OUT of Datastore 1.

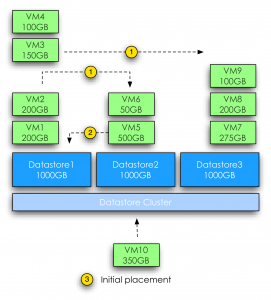

Next Storage DRS evaluates Datastore 2. Due to the size of VM5, Storage DRS is unable to migrate VM5 out of Datastore 2, because doing so would immediately violate the utilization threshold of the selected destination datastore. One of the coolest parts of the algorithm is that it considers inbound migrations as valid moves. In this scenario, migrating virtual machines into Datastore 2 frees up space on another datastore, providing enough free space there to place VM5, which in turn frees up space on Datastore 2 and allows Storage DRS to place VM10 on Datastore 2.

The prerequisite migration process with the fewest migrations to fit the virtual machine on Datastore 2 looks as follows:

Step 1: VM2 from Datastore 1 to Datastore 2

Step 1: VM3 from Datastore 1 to Datastore 3

Step 2: VM5 from Datastore 2 to Datastore 1

Place new virtual machine on Datastore 2

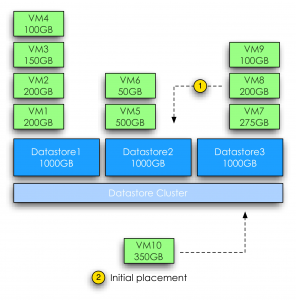

Datastore 3 generates a single prerequisite migration. Migrating VM8 from Datastore 3 to Datastore 2 frees up enough space to allow placement of VM10. Selecting VM9 would not free up enough space, and migrating VM7 generates more cost than migrating VM8. By default Storage DRS attempts to migrate the virtual machine or virtual machine disk whose size is closest to the required space.

The prerequisite migration process with the fewest migrations to fit the virtual machine on Datastore 3 looks as follows:

Step 1: VM8 from Datastore 3 to Datastore 2

Place new virtual machine on Datastore 3
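The choice of VM8 over VM7 and VM9 can be mimicked with a simple selection rule. This is a sketch of the "closest to the required space" idea under invented VM sizes and an invented amount of space to free; it is not the actual Storage DRS selection logic.

```python
def pick_migration_candidate(vm_sizes_gb, space_to_free_gb):
    """Pick the smallest VM that frees enough space on its own, i.e. the
    candidate whose size is closest to the requirement without falling short."""
    big_enough = {
        vm: size for vm, size in vm_sizes_gb.items() if size >= space_to_free_gb
    }
    if not big_enough:
        return None  # a single migration cannot free enough space
    return min(big_enough, key=big_enough.get)


# Hypothetical sizes of the virtual machines on Datastore 3.
datastore3_vms = {"VM7": 300, "VM8": 250, "VM9": 100}

# Hypothetical shortfall: space that must be freed before VM10 fits.
print(pick_migration_candidate(datastore3_vms, space_to_free_gb=200))
```

With these numbers VM9 is too small to help, VM7 would work but moves more data than needed, and VM8 is selected.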

After analyzing the cost and benefit of the three search results, Storage DRS assigns the highest goodness value to the migration set of Datastore 3. Although each search result provides enough free space after the moves, the recommendation set of Datastore 3 results in the lowest number of moves and migrates the least amount of data. All three results are shown; the recommended set is placed at the top.
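The ordering of the three recommendation sets can be reproduced with a simple sort. The migrations are taken from the lists above, but the VM sizes are invented, and sorting by number of moves and then by data moved is only a stand-in for the real goodness calculation, which is not described here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RecommendationSet:
    target: str        # datastore that receives the new virtual machine
    migrations: tuple  # entries of (vm, source, destination, size_gb)

    @property
    def moves(self):
        return len(self.migrations)

    @property
    def data_moved_gb(self):
        return sum(size for _, _, _, size in self.migrations)


def rank(recommendation_sets):
    """Cheapest set first: fewest moves, then least data moved."""
    return sorted(recommendation_sets, key=lambda s: (s.moves, s.data_moved_gb))


# VM sizes are hypothetical; sources and destinations follow the text.
sets = [
    RecommendationSet("Datastore 1", (
        ("VM3", "Datastore 1", "Datastore 2", 75),
        ("VM4", "Datastore 1", "Datastore 3", 50),
    )),
    RecommendationSet("Datastore 2", (
        ("VM2", "Datastore 1", "Datastore 2", 100),
        ("VM3", "Datastore 1", "Datastore 3", 75),
        ("VM5", "Datastore 2", "Datastore 1", 400),
    )),
    RecommendationSet("Datastore 3", (
        ("VM8", "Datastore 3", "Datastore 2", 250),
    )),
]

for s in rank(sets):
    print(s.target, s.moves, s.data_moved_gb)
```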

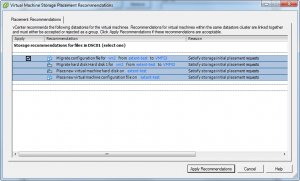

An example placement recommendation screen is displayed above. Note that you can only apply the complete recommendation set. Applying the recommendation triggers the prerequisite migrations before the initial placement of the virtual machine occurs.

Storage DRS and Multi-extents datastores

Somebody asked me if VMFS3 multi-extents datastores are supported by Storage DRS. Although they are supported and fully operational in Storage DRS, one must ask if this construct of large datastores should be used in a datastore cluster.

Resource aggregation and flexibility

Storage DRS Datastore clusters offer flexibility in adding and removing datastores dynamically and allow the administrator to focus on macro management by reducing the number of entities to be managed.

By using a datastore cluster, micromanagement of single datastores, such as the tedious task of virtual machine placement, is a thing of the past. The administrator no longer needs to find a datastore that provides adequate space while still ensuring that placement of the virtual machine will not result in an I/O bottleneck, let alone monitor the current workload next to the ever-expanding workload; application lifecycles are changing drastically and virtual machine sprawl is still one of the top concerns of the modern administrator. Keeping track of and managing such an environment is very challenging. By allowing Storage DRS to manage (initial) placement of virtual machines, the administrator only needs to monitor the overall available space and IO performance of the datastore cluster itself.

If the cluster requires more space or more IO performance, the administrator can dynamically add more datastores to the datastore cluster and allow Storage DRS to find an optimal distribution of the current workload. The option “Run Storage now” in the datastore cluster view allows the administrator to trigger a Storage DRS invocation immediately.

Using Storage DRS, and particularly space load balancing, can reduce the need for multi-extent datastores as well. By allowing Storage DRS to monitor space utilization, the free space used as a safety buffer can be greatly reduced. Each ESXi host reports the virtual machine space utilization and the datastore utilization; Storage DRS triggers an invocation if the configured space utilization threshold is violated. A common practice is to assign a big chunk of space as a safety buffer to avoid an out-of-space situation on a datastore, which might lead to downtime of the active virtual machines. I’ve seen organizations requiring 30% free space on datastores. By reducing slack space, a higher consolidation ratio can be achieved (if IO performance allows this), or LUN sizes can be reduced. The space reclaimed by reducing LUN sizes can be used to provision additional datastores to the datastore cluster. More datastores benefit Storage DRS by offering more load balancing options, and more datastores increase the number of queues, which benefits IO management at the ESXi level and SIOC at the cluster level. Essentially this configuration is the complete opposite of VMFS extents. However, if larger datastores are necessary, vSphere 5 offers VMFS5.

VMFS5

VMFS5 allows datastores of up to 64 terabytes of contiguous space. ESXi 5.0 allows a VMDK size of up to 2 terabytes, providing sufficient space for most virtual machine configurations. If the virtual machine requires more than 32 virtual machine disks of 2 terabytes, it’s recommended to disable the default affinity rule (keep all disks together) and allow Storage DRS to distribute the virtual machine disk files across all datastores inside the datastore cluster. This granularity allows Storage DRS to find a suitable datastore for each virtual disk that aligns with the performance requirements of that specific virtual disk.

Impact of load balancing on datastore cluster configuration

This article is a part of the series on architecture and design on datastore clusters. This article zooms in on why it’s recommended to use similar type disks in a datastore cluster.

In-tier balancing solution

SDRS can be considered an “in-tier” balancing solution, suggesting that a datastore cluster should be populated with datastores that provide similar performance, continuity, capacity or service levels. Although it’s not a technical requirement to have similarly configured datastores, using heterogeneous configurations in a datastore cluster can lead to unexpected results. Understanding the main goal of SDRS and its load balancing process can assist you in architecting your datastore cluster.

SDRS load balancing goal

The main focus of SDRS is to correct imbalance from both a space utilization and a latency perspective at the datastore level. SDRS determines the imbalance level (space or latency) of the datastore cluster and migrates one or more virtual machine disks to resolve the imbalance.

To select an appropriate migration candidate (virtual machine), SDRS relies on device and workload modeling to understand the impact of a workload on the latency of the datastore. It uses virtual machine statistics and datastore utilization to understand the impact of virtual machine placement on the space utilization of a datastore.

Modeling

Let’s take a closer look at modeling. SDRS captures device performance to create a performance model; by using the SIOC injector and a reference workload it understands and learns the performance of each device. This way SDRS gets a clear picture of the datastores inside the datastore cluster. Workload modeling is used by SDRS to understand and learn the virtual machine workloads inside the datastore cluster. The workload modeling process creates a workload metric of each virtual disk and analyzes the impact of the data points on latency.

SDRS combines and correlates the outcome of device modeling, workload modeling and space utilization into a unified recommendation. This means that when SDRS decides to migrate a specific VMDK, it considers the workload metric of the virtual disk and analyzes the impact of that specific workload on the latency of the destination datastore. If both the IO metric and space utilization functions are enabled on the datastore cluster, SDRS combines the outcome of device modeling, workload modeling and space utilization and weighs them according to the violated threshold. Interestingly enough, even when you disable IO load balancing, SDRS attempts to take overall IO statistics into account when finding a suitable datastore.

Impact of load balancing construct on datastore cluster configuration

Although SDRS analyzes devices and each virtual machine’s workload, it is key to understand that the main priority of SDRS is to correct the threshold violation of a datastore. Although it tries to find the most suitable datastore for a specific workload, modeling is still used as a metric to understand and achieve the goal of getting the best overall performance out of the datastore cluster.

In other words, modeling is used for balancing the load on the datastores and not to respect specific wishes of a virtual machine disk. In one way you can argue that SDRS load balancing has somewhat of a socialistic nature. Benefit for the society (datastores inside a datastore cluster) outweighs the individual need (single virtual machine performance). Let’s look at an example to better understand this concept.

Example scenario

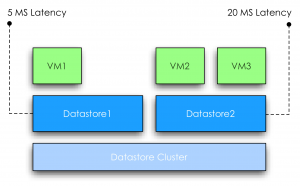

VM1 is running on datastore1. SDRS determined that its normalized load* is 5ms latency. VM2 and VM3 are running on datastore2. SDRS considers datastore2 to have a normalized load of 20ms latency, violating the default threshold of 15ms.

Normalized load: SDRS aggregates the device modeling and workload modeling into a metric called normalized load.

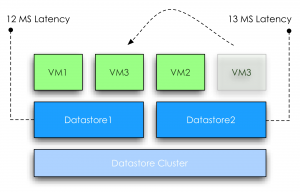

SDRS moves VM3 to datastore1; at this point the overall latency of datastore2 is reduced to 13ms. However, due to moving VM3 to datastore1, the latency of datastore1 increases from 5ms to 12ms. This increase in latency will impact the workload of VM1; however, the “society” benefits from the move because afterwards no datastore violates the latency threshold of the SDRS cluster anymore.

In this scenario the overall IOPS will be higher, which aligns with the goal of SDRS utilizing overall capacity and performance.

Note: As this subject is complex enough, I used a very simple example in which the latency “moved” with the VM. In real life this is not necessarily the case: when a virtual machine is moved, the latency on the destination will not necessarily go up by the same amount that it went down on the source.
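The before and after state of this example can be written down as a threshold check. The latency figures come from the example; the function name and the dictionary layout are made up for this sketch.

```python
LATENCY_THRESHOLD_MS = 15  # default threshold used in the example


def violating(normalized_load_ms, threshold_ms=LATENCY_THRESHOLD_MS):
    """Datastores whose normalized load exceeds the latency threshold."""
    return sorted(
        name for name, load in normalized_load_ms.items() if load > threshold_ms
    )


before = {"datastore1": 5, "datastore2": 20}   # VM1 | VM2 + VM3
after = {"datastore1": 12, "datastore2": 13}   # VM1 + VM3 | VM2

print(violating(before))
print(violating(after))

# The price paid by VM1: extra latency on its datastore after the move.
print(after["datastore1"] - before["datastore1"])
```

The cluster ends up without any threshold violation, while the datastore hosting VM1 is 7ms slower than before, which is exactly the trade-off the example describes.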

Load Balancing in a Heterogeneous configuration

What if the datastore cluster contains a mix of datastores that are backed by different types of disks? For a moment, let’s focus on the performance impact of a heterogeneous configuration.

As mentioned before, device and workload modeling help SDRS find the most suitable datastore for a specific workload. However, when combining different types of disk, for example SSD, FC and SATA, it is not uncommon to see the fastest datastore fill up first.

If one of the smaller SSD datastores runs out of space, SDRS is required to solve the space utilization threshold violation and will migrate a workload from a faster datastore to a slower datastore, prioritizing space utilization over IO utilization. Although future invocations of the SDRS algorithm might solve the problem by moving VMDKs around to find a more optimal balance, no priority or guarantee can be assigned to a specific virtual disk to avoid a potential decrease in the performance of that VMDK.

At this point most of you will wonder whether VASA and storage profiles can be used in such a configuration to associate specific profiles with virtual machines and keep these VMs compliant with specific datastores. SDRS does not incorporate storage profile compliance in its load balancing algorithms, and unfortunately not every storage vendor offers VASA providers for its arrays. Some excellent articles about VASA and Profile Driven Storage can be found at Yellow-Bricks.com and blogs.vmware.com/vSphere/storage

VASA: http://blogs.vmware.com/vsphere/2011/08/vsphere-50-storage-features-part-10-vasa-vsphere-storage-apis-storage-awareness.html

Profile driven storage: http://www.yellow-bricks.com/2011/07/13/vsphere-5-0-profile-driven-storage-what-is-it-good-for/

To guarantee specific performance to virtual machines, it is recommended to use similar types of disks to back the datastores of a datastore cluster. This configuration offers a stable and predictable service level to the virtual infrastructure. If multiple types of disks are available, it is recommended to create multiple datastore clusters, each containing a group of identical disk types.

Previous articles in the SDRS short series Architecture and design of Datastore clusters:

Part1: Architecture and design of datastore clusters

Part2: Partially connected datastore clusters