I’m looking forward to next week’s VMware Explore conference in Barcelona. It’s going to be a busy week: hopefully, I will catch up with many old friends, make new ones, and talk about Gen AI all week. I’m presenting the sessions listed below and meeting with customers to discuss VMware Private AI Foundation. If you’re interested and see me walking by, come over and have a chat.

Hopefully, I will see you at one of the following sessions:

Monday, Nov 6:

Executive Summit

By invitation only

Time: 11:00 AM – 1:00 PM CET

For VMware Certified Instructors only: VCI Forum Keynote.

Time: 2:15 PM – 3:00 PM CET

Location: Las Arenas II (2), Hotel Porta Fira, Pl. d’Europa, 45, 08908 L’Hospitalet de Llobregat, Barcelona, Spain (15-minute walk from the conference, 3 minutes by taxi)

Meet the Experts Sessions

Machine Learning Accelerator Deep Dive [CEIM1199BCN]

Time: 4:30 PM – 5:00 PM CET

Location: Hall 8.0, Meet the Experts, Table 4

Tuesday, Nov 7:

Meet the Experts Sessions

Machine Learning Accelerator Deep Dive [CEIM1199BCN]

Time: 11:30 AM – 12:00 PM CET

Location: Hall 8.0, Meet the Experts, Table 1

Wednesday, Nov 8:

AI and ML Accelerator Deep Dive [CEIB1197BCN]

Time: 9:00 AM – 9:45 AM CET

Location: Hall 8.0, Room 31

CTEX: Building an LLM Deployment Architecture: Five Lessons Learned

Speakers: Shawn Kelly & Frank Denneman

Time: 4:00 PM – 5:00 PM CET

Location: Room CC5 501

Register here: https://lnkd.in/eiTbZusU

Recommended AI Sessions:

Monday, Nov 6:

‘Til the Last Drop of GPU: Run Large Language Models Efficiently [VIT2101BCN] (Tutorial)

Speaker: Agustin Malanco – Triple VCDX

Time: 11:00 AM – 12:30 PM CET

Location: Hall 8.0, Room 18

Tuesday, Nov 7:

Using VMware Private AI for Large Language Models and Generative AI on VMware Cloud Foundation and VMware vSphere [CEIB2050BCN]

Speakers: Justin Murray, Shawn Kelly

Time: 10:30 AM – 11:15 AM CET

Location: Hall 8.0, Room 12

Empowering Business Growth with Generative AI [VIB2368BCN]

Speakers: Robbie Jerrom, Serge Palaric, Shobhit Bhutani

Time: 2:15 PM – 3:00 PM CET

Location: Hall 8.0, Room 14

Why do I need AI in my Data Center? Does this help me become a differentiator?

Speaker: Gareth Edwards

Time: 3:30 PM – 4:40 PM CET

Location: CTEX: Room CC5 501

Meet the Experts:

ML/AI and Large Language Models – Implications for VMware Infrastructure [CEIM2282BCN]

Expert: Justin Murray

Time: 12:30 PM – 1:00 PM CET

Location: Hall 8.0, Meet the Experts, Table 2

Wednesday, Nov 8:

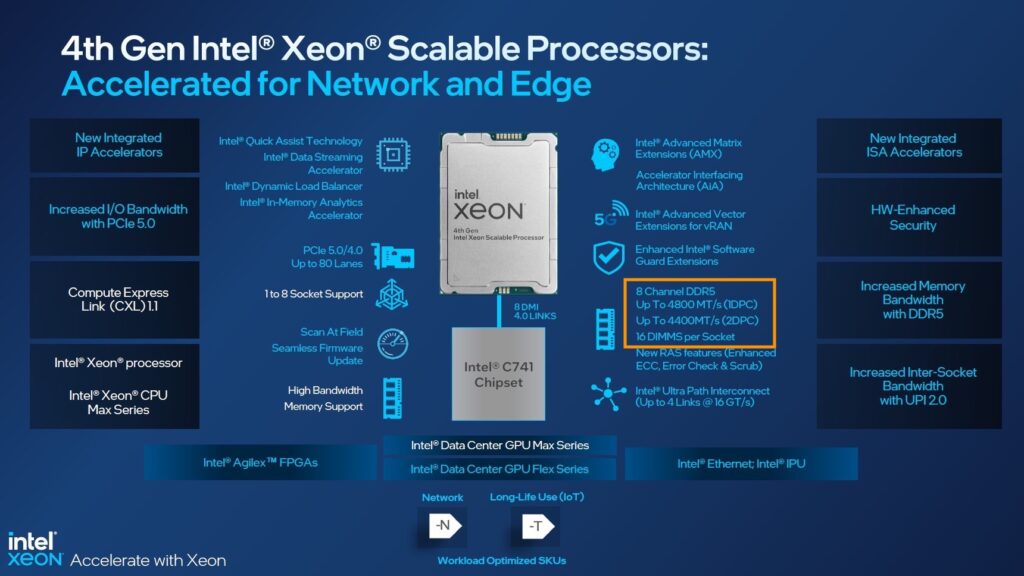

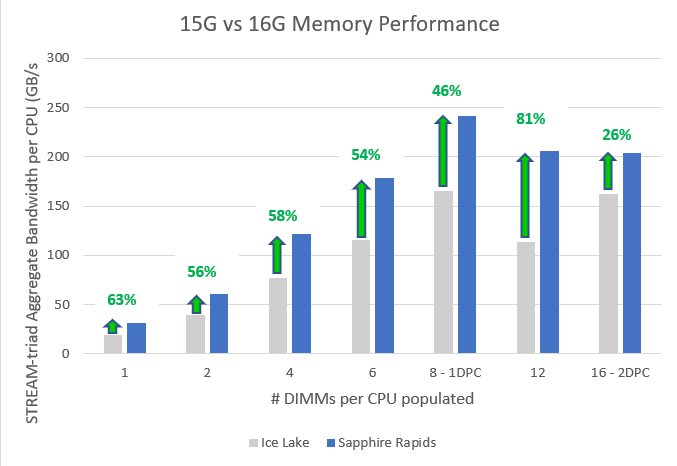

AI Without GPUs: Run AI/ML Workloads on Intel AMX CPUs with vSphere 8 and Tanzu [MAPB2367BCN]

Speakers: Chris J Gully, Earl Ruby

Time: 12:45 PM – 1:30 PM CET

Location: Hall 8.0, Room 33

Meet the Experts:

ML/AI and Large Language Models – Implications for VMware Infrastructure [CEIM2282BCN]

Expert: Justin Murray

Time: 4:30 PM – 5:00 PM CET

Location: Hall 8.0, Meet the Experts, Table 4