Designing a vMotion network can be quite a challenge. You want to give vMotion as much bandwidth as possible, but not at the expense of other network traffic streams. Network I/O Control (NetIOC) provides bandwidth management tools to shape your vMotion network.

NetIOC provides a QoS mechanism that allows vMotion to use as much bandwidth as possible until contention occurs. The moment a physical NIC is saturated, NetIOC distributes network bandwidth according to the relative share values of the network resource pools. In the article “A primer of Network I/O Control” I explain the various resource management constructs of Network I/O Control. How does NetIOC work with a Multi-NIC vMotion network?

Multi-NIC vMotion network on a distributed switch

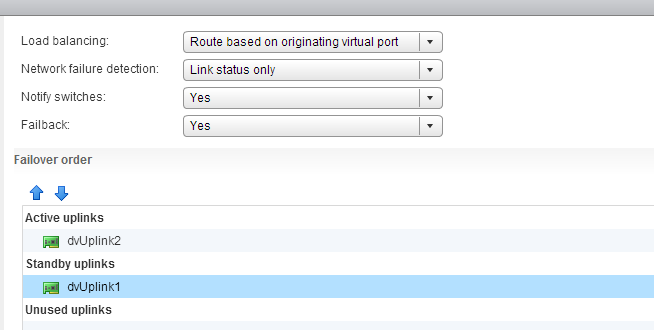

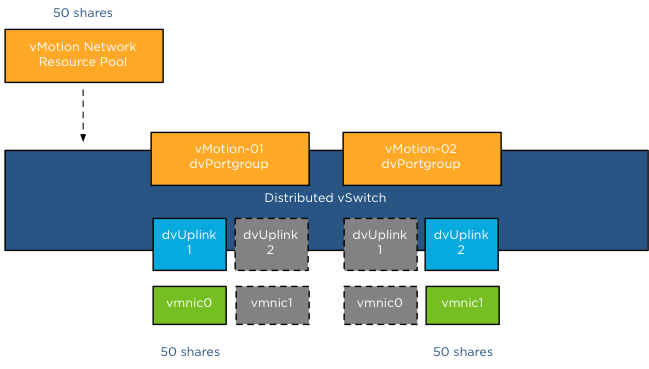

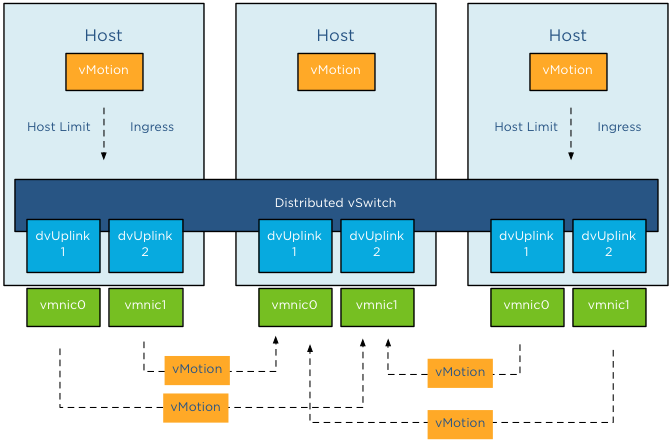

NetIOC is only supported on a distributed switch; therefore you need to create multiple vMotion portgroups on your distributed switch. To have a supported vMotion network, both distributed portgroups need to be configured with an alternate failover order. In my lab I’ve named the two dvPortgroups vMotion-01 and vMotion-02. dvPortgroup vMotion-01 is configured with a failover order where dvUplink1 is active and dvUplink2 is standby. vMotion-02 is configured with dvUplink2 as active and dvUplink1 as standby.

If you wonder why I’m configuring the redundant uplink as standby, please review the article “Multi-NIC vMotion – failover order configuration”. The reason why “Route Based on originating virtual port” is chosen is described in the initial article of this series, “Designing your vMotion network”.

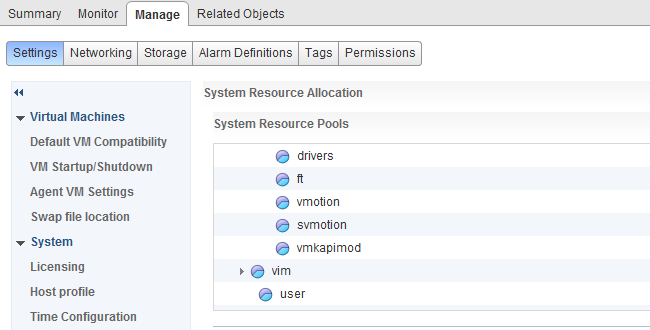

vMotion network resource pool

Each host in my lab is connected to the distributed switch with two dvUplinks. Each vMotion-enabled VMkernel adapter is connected to one uplink, and vMotion is able to leverage both physical adapters to send out vMotion traffic. When NetIOC is enabled, 7 predefined system network resource pools become active. One of them is the vMotion system network resource pool: vMotion traffic binds to it, and if contention occurs, the pool competes for bandwidth with the other active streams. It’s important to realize that shares apply at the physical adapter layer. Therefore, as vMotion is able to use both uplinks, it “receives” 50 shares per physical adapter.

As mentioned in the NetIOC primer article, shares only take effect when contention exists, and a network resource pool’s shares only count while it is transmitting. Consequently, if vMotion is not active, its shares are not counted when an adapter is congested. Also, when vMotion uses a single link, only 50 shares are active. Although each vMotion dvPortgroup is configured with 2 dvUplinks, one dvUplink is configured as standby. While the active NIC is operating normally, the dvPortgroup cannot use the standby link. This results in the use of only 1 link per dvPortgroup, and therefore only 50 shares become active on that link during congestion.

Example Scenario 1: vmnic0 saturated

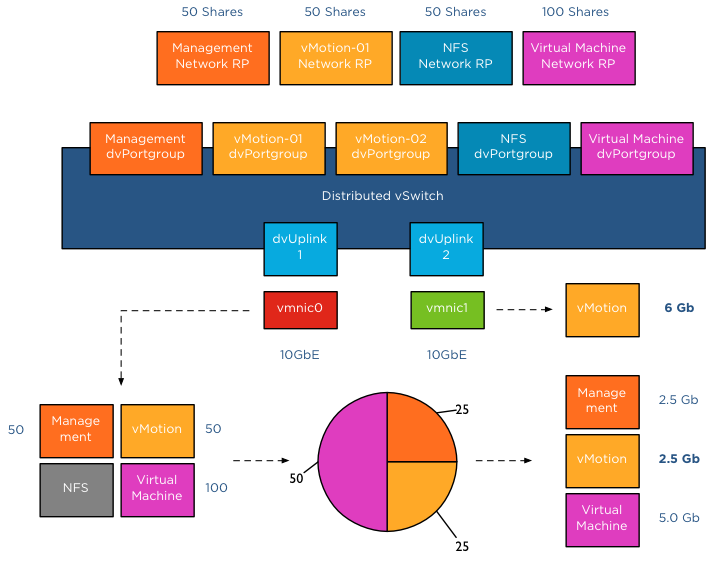

The distributed switch is configured with 5 dvPortgroups: Management, vMotion-01, vMotion-02, NFS, and a virtual machine portgroup. Each network resource pool is configured with the default physical adapter shares. In this scenario vMotion is load-balancing traffic across both dvPortgroups; however, the VMkernel decides to also send management and virtual machine traffic through dvUplink1, saturating vmnic0.

In this scenario, bandwidth is distributed according to relative share values. As NFS traffic isn’t transmitting across dvUplink1, its shares are not active. With the default shares, vMotion (50), management (50), and virtual machine traffic (100) compete for vmnic0, so vMotion owns 50 of the 200 active shares and its available bandwidth on this 10Gb adapter is reduced to 2.5Gb as long as this situation persists. vMotion is also using dvUplink2; as a result, traffic flows through vmnic1 as well. Fortunately vmnic1 is not saturated, and vMotion can use as much bandwidth as it can allocate. Restricted by the CPU speed of the host, vMotion is able to use 6Gb of bandwidth on vmnic1 while having an additional bandwidth allocation of 2.5Gb on vmnic0. In total, vMotion uses 8.5Gb at that moment.
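The arithmetic of this scenario can be sketched in a few lines of Python. This is a simplified model, assuming 10Gb uplinks and the default NetIOC shares (management 50, vMotion 50, virtual machine traffic 100), and treating NetIOC as a pure proportional split among the pools that are transmitting on a saturated adapter. `share_allocation` is a hypothetical helper, not a VMware API.

```python
def share_allocation(link_gbps, active_shares):
    """Divide a saturated link among the pools currently transmitting on it,
    in proportion to their share values (idle pools are simply left out)."""
    total = sum(active_shares.values())
    return {pool: link_gbps * shares / total for pool, shares in active_shares.items()}


# vmnic0 is saturated by management, vMotion, and virtual machine traffic.
# NFS is not transmitting on this uplink, so its shares do not count.
vmnic0 = share_allocation(10, {"management": 50, "vmotion": 50, "vm": 100})
vmotion_vmnic0 = vmnic0["vmotion"]

# vmnic1 is not saturated; the 6Gb CPU-bound figure is taken from the text.
vmotion_vmnic1 = 6.0

print(vmotion_vmnic0)                   # 2.5
print(vmotion_vmnic0 + vmotion_vmnic1)  # 8.5
```

Leaving idle pools out of the dictionary mirrors the rule that shares only count while a pool is transmitting.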

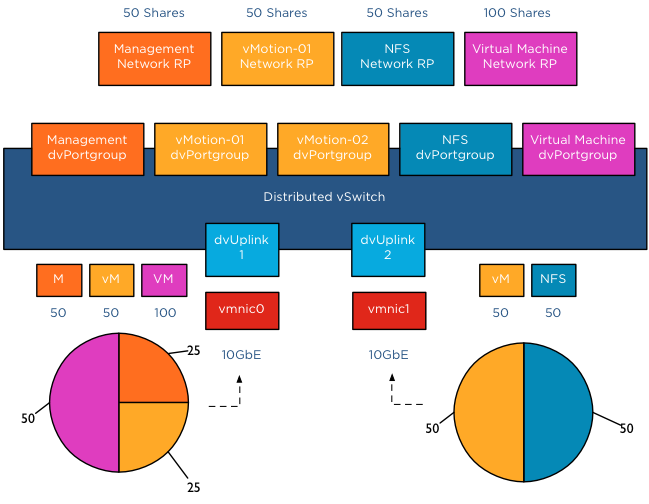

Example Scenario 2: Both NICs saturated

The moment both NICs are saturated, the bandwidth available to vMotion is calculated on a per-NIC basis. vMotion traffic wanting to use vmnic0 is assigned bandwidth relative to its share value, as in example scenario 1. vMotion traffic wanting to use vmnic1 is assigned bandwidth relative to its share value compared to the other active traffic streams; in this case, NFS and vMotion are sending traffic to dvUplink2.

The distributed switch receives traffic from NFS and vMotion destined for vmnic1; as a result, NetIOC assigns half of the bandwidth each to vMotion and NFS, as each owns 50 of the 100 active shares. In this case vMotion can use 2.5Gb on vmnic0 and 5Gb on vmnic1, allowing vMotion to use 7.5Gb of the total available 20Gb.
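Extending the same simplified proportional model to both saturated uplinks gives the 7.5Gb figure. This sketch assumes two 10Gb vmnics and the default shares (management 50, vMotion 50, NFS 50, virtual machine traffic 100); `share_allocation` and `active_per_nic` are illustrative names, not part of any VMware interface.

```python
def share_allocation(link_gbps, active_shares):
    """Proportional split of a saturated link among the transmitting pools."""
    total = sum(active_shares.values())
    return {pool: link_gbps * shares / total for pool, shares in active_shares.items()}


LINK_GBPS = 10  # assumed 10Gb per vmnic (20Gb in total)

# Pools transmitting on each saturated uplink, with default shares assumed.
active_per_nic = {
    "vmnic0": {"management": 50, "vmotion": 50, "vm": 100},
    "vmnic1": {"nfs": 50, "vmotion": 50},
}

# Shares are evaluated per physical adapter, so each NIC is computed separately.
vmotion_per_nic = {
    nic: share_allocation(LINK_GBPS, pools)["vmotion"]
    for nic, pools in active_per_nic.items()
}
vmotion_total = sum(vmotion_per_nic.values())

print(vmotion_per_nic)  # {'vmnic0': 2.5, 'vmnic1': 5.0}
print(vmotion_total)    # 7.5
```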

Host Limits

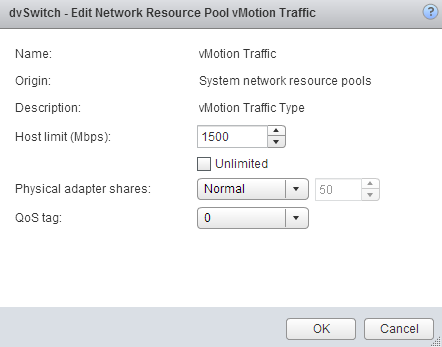

vSphere 5.1 introduced a big adjustment to host limits: a host limit now applies to each individual uplink. This means that when setting a host limit of 3000Mbps on the network resource pool for vMotion, vMotion is limited to transmitting a maximum of 3Gbps per uplink. In a Multi-NIC vMotion configuration with 2 NICs, the maximum traffic vMotion can issue to the vmnics is therefore 6Gbps. If you decide to set a limit on a particular network resource pool, divide the intended total by the number of active NICs: to limit vMotion to 3Gbps in a 2-NIC Multi-NIC configuration, set the host limit on the network resource pool to 1500Mbps.
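Because the limit is enforced per uplink, the configured value and the effective aggregate differ by a factor of the number of active NICs. The two helper functions below are hypothetical names used only to illustrate that multiplication and division.

```python
def aggregate_ceiling_mbps(host_limit_mbps, active_uplinks):
    """Maximum vMotion transmit rate when the host limit is enforced per uplink."""
    return host_limit_mbps * active_uplinks


def host_limit_for_target(target_total_mbps, active_uplinks):
    """Per-uplink host limit needed to cap the aggregate at target_total_mbps."""
    return target_total_mbps / active_uplinks


print(aggregate_ceiling_mbps(3000, 2))  # 6000
print(host_limit_for_target(3000, 2))   # 1500.0
```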

Unlike shares, limits are always active! Host limits are always enforced on the physical adapter, regardless of the utilization rate of the adapter. This means that setting a host limit on a network resource pool prevents the traffic from using more bandwidth, even if that bandwidth is available. Saturating an adapter is not wrong by definition; it means you are utilizing the infrastructure. Taking precautions by limiting certain network streams unconditionally might hurt you more than your assumed gain. Before limiting a network stream, my recommendation is always to measure traffic patterns over a longer period of time.

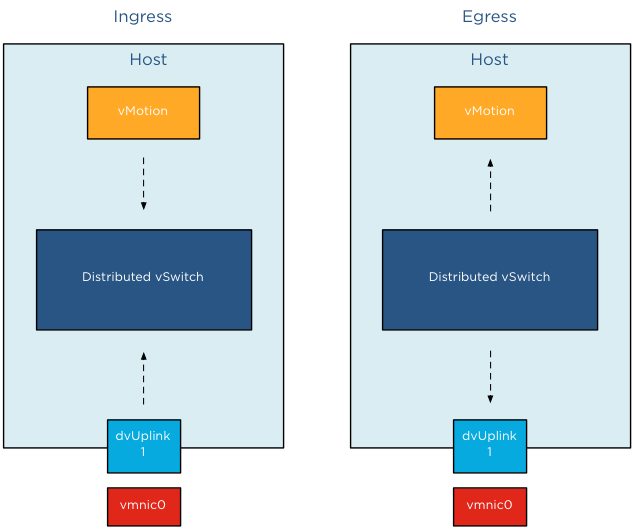

Ingress only

NetIOC shares and limits are only applied to ingress traffic. In ESX, ingress and egress are defined with respect to the distributed switch. Ingress traffic is traffic that flows from the VMkernel, or from the vNIC of a running VM, towards the distributed switch. Egress traffic is traffic that flows from the distributed switch to the physical NIC or to the vNIC.

It is important to know that NetIOC only controls ingress traffic initiated from within the ESX host. This means that if you set a limit on the vMotion network resource pool, it only affects traffic sent from inside the host towards the uplinks. If you have a cluster with a small number of hosts, multiple vMotion operations can be inbound to a single host at the same time. In that scenario, NetIOC cannot prevent the uplinks from being saturated by incoming vMotion traffic.

To avoid this situation, traffic shaping needs to be configured on the dvPortgroups. The upcoming article Designing your vMotion network – vMotion dvPortgroups traffic shaping explores this feature in depth.

Part 1 – Designing your vMotion network

Part 2 – Multi-NIC vMotion failover order configuration

Part 4 – Choose link aggregation over Multi-NIC vMotion?

Part 5 – 3 reasons why I use a distributed switch for vMotion networks

Adjusting the cost of vMotion – a word of caution

Yesterday I posted an article on how to change the cost of vMotion in order to change the default number of concurrent vMotions. As I mentioned in that article, I’m not a proponent of changing advanced settings.

Today Kris posted a very interesting question:

How about the scenario where one uses multi-NIC vMotion (for example, against two 5Gbps virtual adapters)? I know a cost of 4 will be set for the network by the VMkernel, however as the aggregate bandwidth becomes 10Gbps is it safe enough to raise the limit? Perhaps not to the full 8 for 10Gbps, but 6?

Please note that this article does not bash Kris. He describes a use case that I’ve heard a couple of times, which makes his comment a good example. Although Kris’s scenario sounds like a very good use case for adjusting the cost settings to circumvent the VMkernel’s line-speed detection, which determines the max cost of the network resource, it does not address the other dynamic elements that rely on line speed.

DRS MaxMovesPerHost

ESX 4.1 introduced the MaxMovesPerHost setting, allowing the host to set the limit on moves dynamically. The limit is based on how many moves DRS thinks can be completed in one DRS evaluation interval. DRS adapts to the frequency at which it is invoked (pollPeriodSec, default 300 seconds) and the average migration time observed in previous migrations. However, this limit is still bound by the detected line speed and the associated max cost. Although the proposed environment has 10Gb of total bandwidth available, each adapter reports a 5Gb line speed, so the VMkernel still sets the max cost to allow 4 concurrent vMotions on the host. This restricts the number of migrations DRS can initiate during a load-balancing operation.
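To make the interaction concrete, here is a hypothetical simplification in Python, not DRS’s actual algorithm: estimate how many migrations fit in one DRS interval from the average observed migration time, then cap that by the number of concurrent vMotions the network max cost permits. The function name, the 30-second average migration time, and treating the max cost as a direct cap are all assumptions for illustration.

```python
import math


def max_moves_per_host(poll_period_sec, avg_migration_sec, network_max_cost,
                       network_cost_per_vmotion=1):
    """Hypothetical simplification of the adaptive MaxMovesPerHost limit:
    the number of migrations that fit in one DRS interval, capped by the
    number of concurrent vMotions the network max cost allows."""
    fits_in_interval = math.floor(poll_period_sec / avg_migration_sec)
    cost_cap = network_max_cost // network_cost_per_vmotion
    return min(fits_in_interval, cost_cap)


# Two 5Gb adapters: the detected line speed is below 10Gb, so the max cost is 4,
# even though 10Gb is available in aggregate. A 30s average migration is assumed.
print(max_moves_per_host(300, 30, network_max_cost=4))  # 4
```

Even though ten migrations would fit in the 300-second interval in this model, the line-speed-derived cap of 4 is what binds.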

vMotion system resource pool CPU reservation

vMotion tries to move the used memory blocks as fast as possible, using all the available bandwidth, limited by the available CPU speed. Depending on the detected line speed, vMotion reserves a certain amount of CPU at the start of a vMotion process: it computes its desired host vMotion CPU reservation. For every 1GbE vMotion link it detects, vMotion in vSphere 5.1 reserves 10% of a CPU core, with a minimum desired CPU reservation of 30%. This means that if you use a single 1GbE link, vMotion reserves 30% of a core; if you use 4 x 1GbE connections, vMotion reserves 40% of a core. A 10GbE link is special, as vMotion reserves 100% of a single core.

vMotion creates a (system) resource pool and sets the appropriate CPU reservation on the resource pool. It’s important to note that this is being done to the vMotion resource pool, which means that the reservation is shared across all vMotions happening on the host.

Using two 5Gb links results in a 40% CPU core reservation (the default 30% plus 10% for the extra link). However, this dynamic behavior might go unnoticed if you have enough spare CPU cycles in your source and destination hosts.

Word of caution

I hope these two examples show that multiple dynamic elements work together on various levels in your virtual infrastructure. Adjusting a setting might improve the performance of a specific use case, but changing the overall behavior requires changing lots of settings. Due to lack of time and specific information, correlating the various settings is impossible for most of us most of the time. Therefore I would like to repeat my recommendation: please do not adjust advanced settings unless VMware support asks you to.

Limiting the number of concurrent vMotions

After explaining how to limit the number of concurrent Storage vMotion operations, I received multiple questions on how to limit the number of concurrent vMotion operations. This article covers the cost and max cost constructs and shows you how to calculate the correct config key values to limit the number of concurrent vMotion operations.

Please note

I usually do not write about configuration keys that change default behavior, simply because I feel that most defaults are sufficient and should only be changed as a last resort, when all other avenues are exhausted. I would like to mention that this is an unsupported configuration. Support will ask you to remove these settings before troubleshooting your environment!

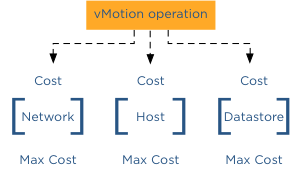

Cost

To manage and limit the number of concurrent migrations, either by vMotion or Storage vMotion, a cost and maximum cost (max cost) factor is applied. Think of the maximum cost as a limit. A resource has a max cost, and an operation is assigned a cost. vMotion and Storage vMotion are considered operations, and the ESXi host, network, and datastore are considered resources.

For a migration operation to be able to start, its cost cannot exceed the available max cost. A resource has both a max cost and an in-use cost. When an operation is started, the resource records an in-use cost and allows additional operations until the maximum cost is reached: the in-use cost of the active operations plus the cost of the new operation cannot exceed the max cost.
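The admission rule can be modelled as a small Python class. This is an illustrative sketch only: `Resource`, `can_admit`, and `admit` are hypothetical names, and the actual vCenter implementation is not described in this article. The example uses the host max cost of 8 and the vMotion host cost of 1 from the tables that follow.

```python
from dataclasses import dataclass


@dataclass
class Resource:
    """A host, network, or datastore resource with a max cost and an in-use cost."""
    name: str
    max_cost: int
    in_use: int = 0

    def can_admit(self, cost: int) -> bool:
        # A new operation may start only if in-use + new cost stays within max cost.
        return self.in_use + cost <= self.max_cost

    def admit(self, cost: int) -> bool:
        """Record the operation's cost as in-use if it fits; report whether it started."""
        if not self.can_admit(cost):
            return False
        self.in_use += cost
        return True


host = Resource("host", max_cost=8)
started = sum(host.admit(1) for _ in range(10))  # try to start 10 vMotions (cost 1)
print(started)  # 8
```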

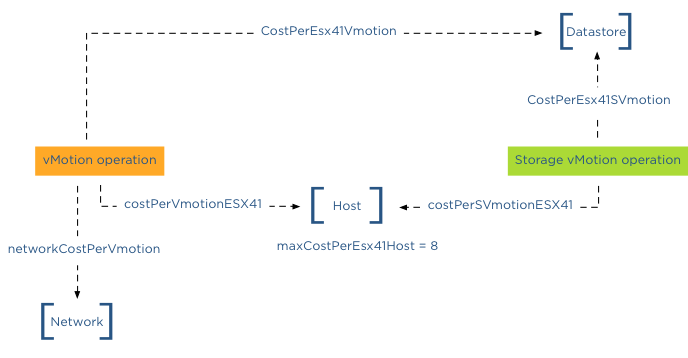

As mentioned, there are three resources: host, network, and datastore. A vMotion operation interacts with the host, network, and datastore resources, while Storage vMotion interacts with the host and datastore resources. This means that changing a host- or datastore-related cost can impact both vMotion and Storage vMotion. Let’s look at the individual costs and max costs before looking into which config key to change.

Host

| Operation | Config Key | Cost |
|---|---|---|
| vMotion Cost | costPerVmotionESX41 | 1 |
| Storage vMotion Cost | costPerSVmotionESX41 | 4 |
| Maximum Cost | maxCostPerEsx41Host | 8 |

Network

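| Operation | Config Key | Cost |
|---|---|---|
| vMotion Cost | networkCostPerVmotion | 1 |
| Storage vMotion Cost | networkCostPerSVmotion | 0 |
| Maximum Cost (line speed below 1Gb) | maxCostPerNic | 2 |
| Maximum Cost (1Gb to below 10Gb line speed) | maxCostPer1GNic | 4 |
| Maximum Cost (10Gb line speed) | maxCostPer10GNic | 8 |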

Datastore

| Operation | Config Key | Cost |
|---|---|---|
| vMotion Cost | CostPerEsx41Vmotion | 1 |
| Storage vMotion Cost | CostPerEsx41SVmotion | 16 |
| Maximum Cost | maxCostPerEsx41Ds | 128 |

Please note that although the config key names refer to ESX 4.1, these values have not changed since 4.1. Therefore these advanced settings apply to ESXi 5.0 and ESXi 5.1 as well.

Default concurrent vMotion limit

To limit vMotion, we must identify which costs and max costs are involved:

Datastore Cost: As we know, a vMotion transfers the memory from the source ESX host to the destination ESX host, sends over the pages stored in a non-shared page file if one exists, and finally transfers ownership to the new VMX file. For the new host to run the virtual machine, a new VMX file is created on the datastore; therefore, a vMotion process also incurs a cost on the datastore resource. Although it generates overhead, the impact is very low. Therefore, the vMotion cost on the datastore is 1.

Datastore Max Cost: The maximum cost of a datastore is 128; therefore, a maximum of 128 concurrent vMotion operations can be active on a single datastore.

Network Cost: The cost for a vMotion on the network resource is 1.

Network Max Cost: This config key is very interesting, as it is set dynamically. Its value depends on the line speed detected by the VMkernel. If the VMkernel detects a line speed of at least 1Gb but below 10Gb, the max cost value is set to 4. If the VMkernel detects 10Gb, the max cost value is set to 8. Please note that the VMkernel sets the max cost to 8 ONLY if it detects a 10Gb line speed. It does not matter whether you use 10GbE cards; it’s the line speed that counts. Please read the articles “The impact of QoS network traffic on VM performance” and “Adaptive MaxMovesPerHost” if you apply QoS on your converged network and wonder what impact this might have on vMotion performance and DRS load balancing.
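The line-speed thresholds from the network table can be written as a small lookup. This Python sketch assumes that speeds above 10Gb are treated like 10Gb, which the article does not cover; `network_max_cost` is a hypothetical name, and the comments map each branch to the config key in the table.

```python
def network_max_cost(line_speed_gbps):
    """Max cost the VMkernel sets on the vMotion network resource, based on
    the detected line speed (not on the type of NIC installed)."""
    if line_speed_gbps >= 10:
        return 8  # maxCostPer10GNic (speeds above 10Gb assumed to map here too)
    if line_speed_gbps >= 1:
        return 4  # maxCostPer1GNic
    return 2      # maxCostPerNic, below the supported 1Gb minimum


# A 10GbE card whose line speed is shaped to 5Gb still gets a max cost of 4.
for speed in (0.1, 1, 5, 10):
    print(speed, network_max_cost(speed))
```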

If the VMkernel detects a line speed below 1Gb, it sets the max cost to 2, resulting in a maximum of 2 concurrent vMotions with the default network vMotion cost. Please note that the supported minimum required bandwidth is 1Gb! This setting for line speeds below 1Gb is included for “just-in-case” scenarios where the vMotion network is temporarily misconfigured. It should not be used to justify a line speed below 1Gb when designing the virtual infrastructure!

Host Cost: The cost for a vMotion on the host resource is 1.

Host Max Cost: The host max cost for all vMotion operations is 8.

In-use cost

If the vMotion network is configured with a 1Gb line speed, the max cost of the network allows 4 concurrent vMotions, while the host max cost allows 8 concurrent vMotions. The most conservative max cost wins, as a resource does not allow its in-use cost to exceed its max cost. In the datastore cost section, I explained that the datastore allows 128 concurrent vMotions. More common, however, is to see multiple Storage vMotion operations active on a datastore due to Storage DRS datastore maintenance mode. If you are vMotioning a virtual machine that resides on the datastore to another host while you put that datastore into datastore maintenance mode, Storage DRS cannot initiate 8 Storage vMotions, because 8 Storage vMotions (8 x 16 = 128) plus the in-use cost of the vMotion (1) exceed the datastore max cost of 128. “Only” 7 concurrent Storage vMotions can be initiated while the vMotion is active. The in-use cost of the datastore is (7 x 16) + 1 (vMotion) = 113. Although it has 15 “points” left, it cannot start another Storage vMotion.
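The datastore arithmetic can be checked with a short loop that keeps admitting Storage vMotions while the in-use cost stays within the max cost. The constant names below are illustrative; their values come from the datastore table.

```python
DS_MAX_COST = 128      # maxCostPerEsx41Ds
VMOTION_DS_COST = 1    # CostPerEsx41Vmotion
SVMOTION_DS_COST = 16  # CostPerEsx41SVmotion

in_use = VMOTION_DS_COST  # one vMotion is already active on the datastore
svmotions = 0

# Admit Storage vMotions while in-use cost + new cost stays within the max cost.
while in_use + SVMOTION_DS_COST <= DS_MAX_COST:
    in_use += SVMOTION_DS_COST
    svmotions += 1

print(svmotions, in_use, DS_MAX_COST - in_use)  # 7 113 15
```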

Let’s assume the vMotion network is configured with a 10Gb line speed. This means that the host allows 8 concurrent vMotions. But if a Storage vMotion is already active, the in-use cost of the host is 4; therefore, the host can only allow 1 additional Storage vMotion or 4 concurrent vMotions.
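The same rule explains the 10Gb host example: take the remaining capacity on each resource the operation touches, and the most conservative one wins. `remaining_ops` is a hypothetical helper; the datastore is left out here on the assumption that its 128 max cost is not the bottleneck.

```python
def remaining_ops(max_cost, in_use, cost):
    """How many more operations of the given cost fit on one resource."""
    return (max_cost - in_use) // cost


# 10Gb vMotion network with one Storage vMotion already active on the host.
host_vmotions = remaining_ops(8, 4, 1)     # maxCostPerEsx41Host, in-use 4, costPerVmotionESX41
network_vmotions = remaining_ops(8, 0, 1)  # maxCostPer10GNic, networkCostPerVmotion
host_svmotions = remaining_ops(8, 4, 4)    # costPerSVmotionESX41

# The most conservative resource wins (datastore omitted; 128 is not the bottleneck here).
print(min(host_vmotions, network_vmotions))  # 4
print(host_svmotions)                        # 1
```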

networkCostPerVmotion

As both Storage vMotion and vMotion use the host resource max cost, it is “recommended” to adjust the config key “networkCostPerVmotion” instead. Setting this config key to 2 allows 2 concurrent vMotions per host on a 1Gb vMotion network, or 4 concurrent vMotions per host on a 10Gb vMotion network. The networkCostPerVmotion value can be adjusted by editing the vpxd.cfg file or via the advanced settings of the vCenter Server Settings in the administration view.
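A quick way to see the effect of raising networkCostPerVmotion is to divide each max cost by the corresponding cost and take the minimum. `vmotions_allowed` is an illustrative name; host values are the defaults from the host table, and the datastore limit is ignored as it is rarely the constraint for vMotion.

```python
def vmotions_allowed(network_max_cost, network_cost_per_vmotion,
                     host_max_cost=8, host_cost_per_vmotion=1):
    """Concurrent vMotions per host: the more conservative of the network
    and host limits (datastore limit of 128 ignored as it is rarely reached)."""
    return min(network_max_cost // network_cost_per_vmotion,
               host_max_cost // host_cost_per_vmotion)


print(vmotions_allowed(4, 1))  # 1Gb network, default cost -> 4
print(vmotions_allowed(4, 2))  # 1Gb network, networkCostPerVmotion=2 -> 2
print(vmotions_allowed(8, 2))  # 10Gb network, networkCostPerVmotion=2 -> 4
```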

If done via the vpxd.cfg, the value vpxd.ResourceManager.networkCostPerVmotion is added as follows:

```xml
<config>
  <vpxd>
    <ResourceManager>
      <!-- replace "new value" with the desired cost, for example 2 -->
      <networkCostPerVmotion>new value</networkCostPerVmotion>
    </ResourceManager>
  </vpxd>
</config>
```

Word of caution

Please note that cost and max cost values are applied to every migration process within vCenter! Therefore, modifying costs impacts normal day-to-day DRS and Storage DRS load-balancing operations, as well as the manual vMotion and Storage vMotion operations occurring in the virtual infrastructure managed by the vCenter Server. Adjusting the cost on the host side can be tricky, as operation costs and limits are relative to each other, and changes can even harm other host processes unrelated to migration.

Manual storage vMotion migrations into a datastore cluster

Frequently I receive questions about the impact of a manual migration into a datastore cluster, especially about the impact on the VM disk file layout. Will Storage DRS take the initial disk layout into account, or will it be changed? The short answer is that the virtual machine disk layout will be changed according to the default affinity rule configured on the datastore cluster. This article describes several scenarios of migrating “distributed” and “centralized” disk layout configurations into datastore clusters configured with different affinity rules.

Test scenario architecture

For the test scenarios I’ve built two virtual machines, VM1-centralized and VM2-distributed. Both virtual machines have an identical configuration; only the datastore location is different. VM1-centralized has a “centralized” configuration, storing all VMDKs on a single datastore, while VM2-distributed has a “distributed” configuration, storing each VMDK on a separate datastore.

| Hard disk | Size | VM1-centralized datastore | VM2-distributed datastore |
|---|---|---|---|
| Working directory | 8GB | FD-X4 | FD-X4 |
| Hard disk 1 | 60GB | FD-X4 | FD-X4 |
| Hard disk 2 | 30GB | FD-X4 | FD-X5 |
| Hard disk 3 | 10GB | FD-X4 | FD-X6 |

Two datastore clusters exist in the virtual infrastructure:

| Datastore cluster | Default affinity rule | VMDK rule applied on VM |
|---|---|---|
| Tier-1 VMs and VMDKs | Do not keep VMDKs together | Intra-VM anti-affinity rule |
| Tier-2 VMs and VMDKs | Keep VMDKs together | Intra-VM affinity rule |

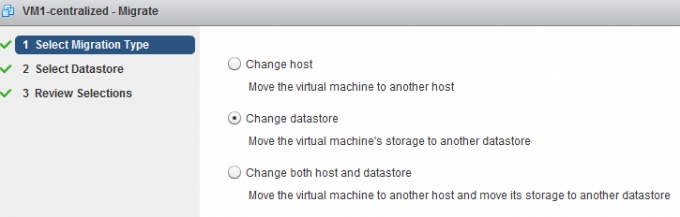

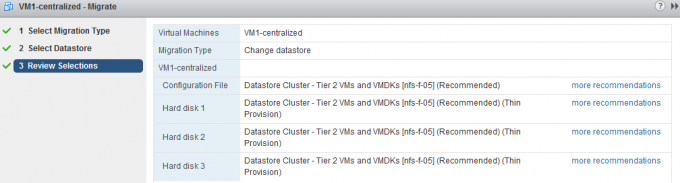

Test 1: VM1-centralized to Datastore Cluster Tier-2 VMs and VMDKs

Since the virtual machine is stored on a single datastore, it makes sense to start off by migrating the virtual machine to the datastore cluster that applies a VMDK affinity rule, keeping the virtual machine disk files together on a single datastore in the datastore cluster. Right-click the virtual machine and select “Migrate…” from the menu. The first step is to select the migration type; select Change datastore.

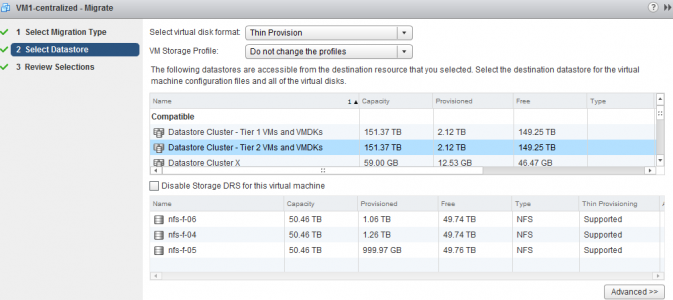

The second step is to select the destination datastore. As we are planning to migrate the virtual machine to a datastore cluster, it is necessary to select the datastore cluster object.

After clicking next, the user interface displays the Review Selection screen; notice that the datastore cluster applied the default cluster affinity rule.

Storage DRS has evaluated the current load of the datastore cluster and the configuration of the virtual machine, and concludes that datastore nfs-f-05 is the best fit, taking into account the virtual machine, the existing virtual machines in the datastore cluster, and the load-balance state of the cluster. Clicking “more recommendations” presents other destination datastores.

Test result: Intra-VM affinity rule applied and all virtual machine disk files are stored on a single datastore

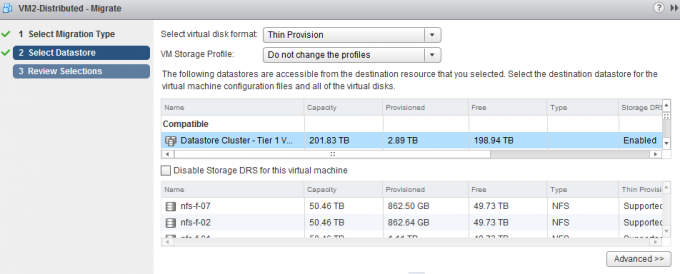

Selecting the Datastore cluster object

The user interface provides two options: select the datastore cluster object, or select a datastore that is part of the datastore cluster. The latter option requires you to explicitly disable Storage DRS for this virtual machine. By selecting the datastore cluster, you fully leverage the strength of Storage DRS. Storage DRS initiates its algorithms and evaluates the current state of the datastore cluster. It reviews the configuration of the new virtual machine and is aware of the I/O load as well as the space utilization of each datastore. Storage DRS weighs both metrics and gives more weight to whichever of space or I/O load has the higher utilization.

Disable Storage DRS for this virtual machine

By default it’s not possible to select a specific datastore that is part of a datastore cluster during the second step, “Select Datastore”. To do that, you must activate (tick the option box) “Disable Storage DRS for this virtual machine”. Doing so makes the datastores in the lower part of the screen available for selection. However, this means that the virtual machine is excluded from all Storage DRS load-balancing operations. This affects not only the virtual machine itself but also other Storage DRS operations, such as maintenance mode and datastore cluster defragmentation. As Storage DRS is not allowed to move the virtual machine, it cannot migrate it to find an optimal load-balance state when Storage DRS needs to make room for an incoming virtual machine. For more information about cluster defragmentation, read the article: Storage DRS initial placement and datastore cluster defragmentation.

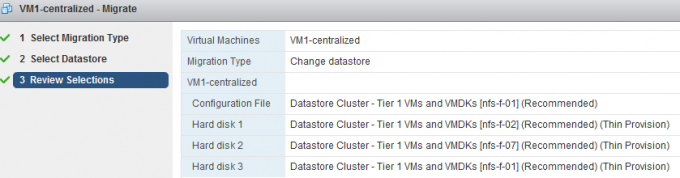

Test 2: VM1-centralized to Datastore Cluster Tier-1 VMs and VMDKs

Migrating a virtual machine stored on a single datastore to a datastore cluster with anti-affinity rules enabled results in a distribution of the virtual machine disk files:

Test result: Intra-VM anti-affinity rule applied and the virtual machine disk files are placed on separate datastores.

Working directory and default anti-affinity rules

Please note that in the previous scenario the configuration file (working directory) is placed on the same datastore as Hard disk 3. Storage DRS does not forcefully attempt to place the working directory on a different datastore; it weighs the load-balance state of the cluster more heavily than separating the working directory from the virtual machine’s VMDK files.

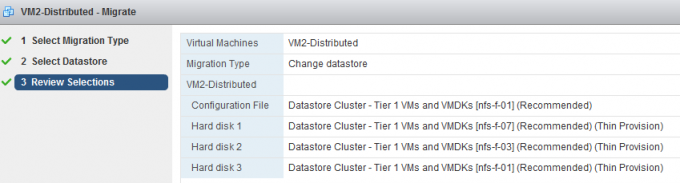

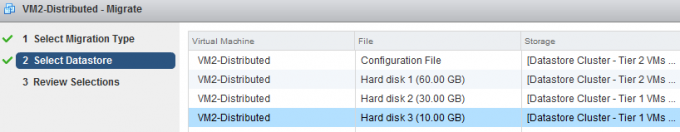

Test 3: VM2-distributed to Datastore Cluster Tier-1 VMs and VMDKs

Following the example of VM1, I started off by migrating VM2-distributed to Tier-1, as that datastore cluster is configured to mimic the initial state of the virtual machine, which is to distribute the virtual machine across as many datastores as possible. After selecting datastore cluster Tier-1 VMs and VMDKs, Storage DRS provided the following recommendation:

Test result: Intra-VM anti-affinity rule applied on VM and the virtual machine disk files are stored on separate datastores.

A nice tidbit: as every virtual disk file is migrated between two distinct datastores, this scenario leverages the parallel disk migration functionality introduced in vSphere 5.1.

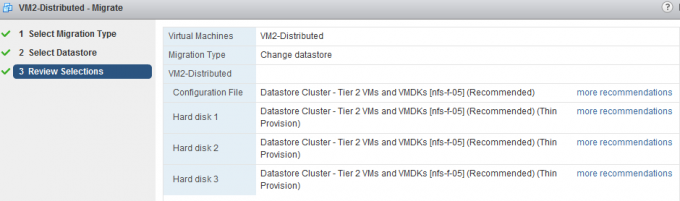

Test 4: VM2-distributed to Datastore Cluster Tier-2 VMs and VMDKs

What happens if you migrate a distributed virtual machine to a datastore cluster configured with a default affinity rule? After selecting datastore cluster Tier-2 VMs and VMDKs, Storage DRS provided the following recommendation:

Test result: Intra-VM affinity rule applied on VM and the virtual machine files are placed on a single datastore.

Test 5: VM2-distributed to Multiple Datastore clusters

A common use case is to distribute a virtual machine across multiple tiers of storage to provide performance while taking economics into account. This test simulates placing the working directory and guest OS disk (Hard disk 1) on datastore cluster Tier 2 and the database and logging hard disks (Hard disk 2 and Hard disk 3) on datastore cluster Tier 1.

To configure the virtual machine to use multiple datastores, click the Advanced button during the second step of the migration:

This screen shows the current configuration; selecting the current datastore of a hard disk opens a browse menu:

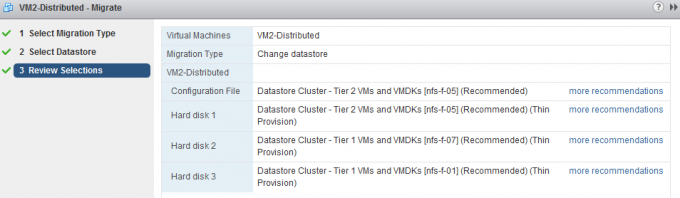

Select the appropriate datastore cluster for each hard disk and click on next to receive the destination datastore recommendation from Storage DRS.

The working directory of the VM and Hard disk 1 are stored on datastore cluster Tier 2 and Hard disk 2 and Hard disk 3 are stored in datastore cluster Tier 1.

As datastore cluster Tier 2 is configured to keep the virtual machine files together, both the working directory (designated as Configuration file in the UI) and Hard disk 1 are placed on datastore nfs-f-05. A default anti-affinity rule is applied to all new virtual machines in datastore cluster Tier 1; therefore Storage DRS recommends placing Hard disk 2 on nfs-f-07 and Hard disk 3 on datastore nfs-f-01.

Test result: Intra-VM anti-affinity rule applied on VM. The files stored in Tier-2 are placed on a single datastore, while the virtual machine disk files stored in the Tier-1 datastore are located on different datastores.

| Initial VM configuration | Cluster default affinity rule | Result | Configured on |
|---|---|---|---|
| Centralized | Affinity rule | Centralized | Entire VM |
| Centralized | Anti-affinity rule | Distributed | Entire VM |
| Distributed | Anti-affinity rule | Distributed | Entire VM |
| Distributed | Affinity rule | Centralized | Entire VM |
| Distributed | Affinity rule | Centralized | Working directory + Hard disk 1 |
| Distributed | Anti-affinity rule | Distributed | Hard disk 2 and Hard disk 3 |

All types of migrations via the UI lead to successful integration with the datastore cluster. Every migration results in the application of the correct affinity or anti-affinity rule, as set by the default affinity rule of the cluster.

Storage DRS and Storage vMotion bugs solved in vSphere 5.0 Update 2.

Today Update 2 for vSphere ESXi 5.0 and vCenter Server 5.0 was released. I would like to highlight two bugs that have been fixed in this update: one for Storage DRS and one for Storage vMotion.

Storage DRS

vSphere ESXi 5.0 Update 2 was released today, and it contains a fix that should be interesting to customers running Storage DRS on vSphere 5.0. The release notes describe the following bug:

Adding a new hard disk to a virtual machine that resides on a Storage DRS enabled datastore cluster might result in Insufficient Disk Space error

When you add a virtual disk to a virtual machine that resides on a Storage DRS enabled datastore and if the size of the virtual disk is greater than the free space available in the datastore, SDRS might migrate another virtual machine out of the datastore to allow sufficient free space for adding the virtual disk. Storage vMotion operation completes but the subsequent addition of virtual disk to the virtual machine might fail and an error message similar to the following might be displayed:

Insufficient Disk Space

In essence, Storage DRS made room for the incoming virtual disk but failed to place it. This update fixes a bug in the datastore cluster defragmentation process. For more information about datastore cluster defragmentation, read the article: Storage DRS initial placement and datastore cluster defragmentation.

Storage vMotion

vCenter Server 5.0 Update 2 contains a fix that allows you to rename your virtual machine files with a Storage vMotion.

vSphere 5 Storage vMotion is unable to rename virtual machine files on completing migration

In vCenter Server, when you rename a virtual machine in the vSphere Client, the vmdk disks are not renamed following a successful Storage vMotion task. When you perform a Storage vMotion of the virtual machine to have its folder and associated files renamed to match the new name, the virtual machine folder name changes, but the virtual machine file names do not change.

Duncan and I knew how many customers were relying on this feature for operational processes and pushed hard to get it back in. We are very pleased to announce it’s back in vSphere 5.0; unfortunately, this fix is not available in 5.1 yet!

For more info about the fixes in the updates please review the release notes:

ESXi 5.0 : https://www.vmware.com/support/vsphere5/doc/vsp_esxi50_u2_rel_notes.html

vCenter 5.0: https://www.vmware.com/support/vsphere5/doc/vsp_vc50_u2_rel_notes.html