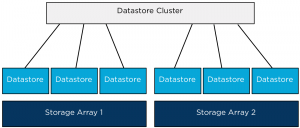

Combining datastores located on different storage arrays into a single datastore cluster is a supported configuration. Such a configuration could be used during a storage array data migration project in which virtual machines must move from one array to another; using datastore maintenance mode can help speed up and automate this project. Recently I published an article about this method on the VMware vSphere blog. But what if multiple arrays are available to the vSphere infrastructure and you want to aggregate the storage of these arrays to provide a permanent configuration? What are the considerations of such a configuration, and what are the caveats?

Key areas to focus on are homogeneity of configurations of the arrays and datastores.

When combining datastores from multiple arrays it’s highly recommended to use datastores that are hosted on similar types of arrays. Using similar types of arrays guarantees comparable performance and redundancy features. Although RAID levels are standardized by SNIA, the implementation of RAID levels by different vendors may vary from the actual RAID specifications. An implementation used by a particular vendor may affect the read and write performance and the degree of data redundancy compared to the implementation of the same RAID level by another vendor.

Would VASA (vSphere Storage APIs – Storage Awareness) and Storage profiles be any help in this configuration? VASA enables vCenter to display the capabilities of the LUN/datastore. This information could be leveraged to create a datastore cluster by selecting the datastores that have similar storage capability details. However, which capabilities are surfaced by VASA is left to the individual storage array vendors. The detail and description could be similar while the performance or redundancy features of the datastores differ.

Would it be harmful, or will Storage DRS stop working, when aggregating datastores with different performance levels? Storage DRS will still work and will load balance virtual machines across the datastores in the datastore cluster. However, Storage DRS load balancing is focused on distributing the virtual machines in such a way that the configured thresholds are not violated and on getting the best overall performance out of the datastore cluster. When datastores that provide different performance levels are mixed, the performance of a virtual machine might not be consistent if it is migrated between datastores belonging to different arrays. The article “Impact of load balancing on datastore cluster configuration” explains how Storage DRS selects the virtual machines to distribute across the available datastores in the cluster.

Another caveat to consider is that VAAI hardware offloading is not possible when virtual machines are migrated between datastores of different arrays. Storage vMotion will be managed by one of the datamovers in the vSphere stack. As Storage DRS does not identify “locality” of datastores, it does not incorporate the overhead caused by migrating virtual machines between datastores of different arrays.

When could datastores of multiple arrays be aggregated into a single datastore cluster when designing an environment that provides a stable and continuous level of performance, redundancy and low overhead? The datastores and arrays should have the following configuration:

• Identical Vendor.

• Identical firmware/code.

• Identical number of spindles backing diskgroup/aggregate.

• Identical RAID level.

• Same Replication configuration.

• All datastores connected to all hosts in the compute cluster.

• Equal-sized datastores.

• Equal external workload (ideally none at all).
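The checklist above can be turned into a quick sanity check before building a datastore cluster. The snippet below is only a sketch under my own assumptions: the `DatastoreProfile` fields and the sample values are hypothetical, the data would have to be collected by hand or from your array tooling, and host connectivity and external workload are left out because they cannot be expressed as a simple attribute.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DatastoreProfile:
    """Hypothetical description of a datastore and the array backing it."""
    name: str
    vendor: str
    firmware: str
    spindles: int
    raid_level: str
    replicated: bool
    capacity_gb: int


# Attributes that should be identical for every datastore in the cluster.
COMPARED = ("vendor", "firmware", "spindles", "raid_level",
            "replicated", "capacity_gb")


def find_mismatches(datastores):
    """Return {attribute: set of distinct values} for attributes that differ."""
    mismatches = {}
    for attr in COMPARED:
        values = {getattr(ds, attr) for ds in datastores}
        if len(values) > 1:
            mismatches[attr] = values
    return mismatches


candidates = [
    DatastoreProfile("ds-a1", "VendorA", "5.2", 16, "RAID5", True, 2048),
    DatastoreProfile("ds-a2", "VendorA", "5.2", 16, "RAID5", True, 2048),
    DatastoreProfile("ds-b1", "VendorB", "7.0", 12, "RAID5", True, 2048),
]

# Every attribute listed in the result breaks the homogeneity requirement.
for attr, values in find_mismatches(candidates).items():
    print(f"{attr} differs: {sorted(map(str, values))}")
```

An empty result means the candidates match on every compared attribute; it does not prove that the arrays actually perform the same.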

Personally I would rather create multiple datastore clusters and group the datastores belonging to a single storage array into one datastore cluster. This reduces the complexity of the design (connectivity), avoids having multiple storage-level entities to manage (firmware levels, replication schedules), and leverages VAAI, which helps to reduce the load on the storage subsystem.

If you feel like I missed something, I would love to hear reasons or recommendations why you should aggregate datastores from multiple storage arrays.

More articles in the architecting and designing datastore clusters series:

Part1: Architecture and design of datastore clusters.

Part2: Partially connected datastore clusters.

Part3: Impact of load balancing on datastore cluster configuration.

Part4: Storage DRS and Multi-extents datastores.

Part5: Connecting multiple DRS clusters to a single Storage DRS datastore cluster.

Connecting multiple DRS clusters to a single Storage DRS datastore cluster.

Recently I received the question whether you can connect multiple compute (HA and DRS) clusters to a single Storage DRS datastore cluster, and specifically how this setup might impact Storage IO Control functionality. Let’s cover sharing a datastore cluster by multiple compute clusters first before diving into the details of the SIOC mechanism.

Sharing datastore clusters

Sharing datastore clusters across multiple compute clusters is a supported configuration. During virtual machine placement the administrator selects which compute cluster the virtual machine will run in, and DRS selects the host that can provide the most resources to that virtual machine. A migration recommendation generated by Storage DRS does not move the virtual machine at host level; consequently, a virtual machine cannot move from one compute cluster to another compute cluster by any operation initiated by Storage DRS.

Maximums

Please remember that the maximum supported number of hosts connected to a datastore is 64. Keep this in mind when sizing the compute cluster or connecting multiple compute clusters to the datastore cluster. As the maximum number of datastores inside a datastore cluster is 32, I think that the number of hosts connected is the first limit you hit in such a design, as the total supported number of paths is 1024 and a host can connect to up to 255 LUNs.
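To make the sizing arithmetic concrete, here is a minimal sketch that checks a proposed design against the maximums quoted above. The function name and its parameters are my own, and I am assuming one LUN per datastore and reading the 1024-path figure as a per-host limit; verify the numbers against the configuration maximums of your vSphere version.

```python
# Limits as quoted in this article (vSphere 5).
MAX_HOSTS_PER_DATASTORE = 64
MAX_DATASTORES_PER_CLUSTER = 32
MAX_PATHS_PER_HOST = 1024   # assumption: interpreted as a per-host limit
MAX_LUNS_PER_HOST = 255


def check_design(hosts_per_compute_cluster, datastores, paths_per_lun,
                 other_luns_per_host=0):
    """Return a list of violated limits for a shared datastore cluster design.

    Assumes each datastore is backed by exactly one LUN and that every host
    in every compute cluster is connected to every datastore.
    """
    violations = []
    total_hosts = sum(hosts_per_compute_cluster)
    if total_hosts > MAX_HOSTS_PER_DATASTORE:
        violations.append(f"{total_hosts} hosts per datastore exceeds "
                          f"{MAX_HOSTS_PER_DATASTORE}")
    if datastores > MAX_DATASTORES_PER_CLUSTER:
        violations.append(f"{datastores} datastores per datastore cluster "
                          f"exceeds {MAX_DATASTORES_PER_CLUSTER}")
    luns = datastores + other_luns_per_host
    if luns > MAX_LUNS_PER_HOST:
        violations.append(f"{luns} LUNs per host exceeds {MAX_LUNS_PER_HOST}")
    paths = luns * paths_per_lun
    if paths > MAX_PATHS_PER_HOST:
        violations.append(f"{paths} paths per host exceeds "
                          f"{MAX_PATHS_PER_HOST}")
    return violations


# Three compute clusters of 32, 32 and 8 hosts sharing a full datastore
# cluster of 32 datastores with 4 paths per LUN.
print(check_design([32, 32, 8], datastores=32, paths_per_lun=4))
```

In this example only the host count is violated: 32 LUNs with 4 paths each consume 128 paths per host, well below the path and LUN limits, which is why the number of connected hosts tends to be the first ceiling.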

The VAAI-factor

If the datastores are formatted with VMFS, it’s recommended to enable VAAI on the storage array if supported. One of the important VAAI primitives is hardware-assisted locking, also called Atomic Test and Set (ATS).

ATS replaces the need for hosts to place a SCSI-2 disk lock on the LUN while updating the metadata or growing a file. A SCSI-2 disk lock command locks out other hosts from doing I/O to the entire LUN, while ATS modifies the metadata or any other sector on the disk without the use of a SCSI-2 disk lock. This locking was the focus of many best practices around the connectivity of datastores: to reduce the amount of locking, the best practice was to reduce the number of hosts attached. By using newly formatted VMFS5 volumes in combination with a VAAI-enabled storage array, SCSI-2 disk lock commands are a thing of the past. Upgraded VMFS5 volumes or VMFS3 volumes will fall back to using SCSI-2 disk locks if the ATS command fails. For more information about VAAI and ATS, please read KB article 1021976.
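The difference between the two locking styles can be illustrated with a toy model. This is a conceptual sketch only: the `Lun` class and its methods are hypothetical, it runs single-threaded, and it does not represent the actual SCSI commands or the VMFS on-disk lock format.

```python
class Lun:
    """Toy LUN supporting a whole-LUN reservation and a per-sector test-and-set."""

    def __init__(self):
        self.sectors = {}        # sector number -> stored value
        self.reserved_by = None  # host currently holding the whole-LUN lock

    def reserve(self, host):
        """SCSI-2 style: lock the entire LUN for one host."""
        if self.reserved_by not in (None, host):
            return False
        self.reserved_by = host
        return True

    def release(self, host):
        if self.reserved_by == host:
            self.reserved_by = None

    def write(self, host, sector, value):
        """Regular I/O is rejected while another host holds the reservation."""
        if self.reserved_by not in (None, host):
            return False
        self.sectors[sector] = value
        return True

    def test_and_set(self, sector, expected, new):
        """ATS style: update one sector only if it still holds the expected value."""
        if self.sectors.get(sector) != expected:
            return False
        self.sectors[sector] = new
        return True


lun = Lun()

# Whole-LUN lock: esx02 cannot do any I/O while esx01 updates metadata.
lun.reserve("esx01")
blocked = lun.write("esx02", sector=10, value="vm data")
lun.release("esx01")

# Test-and-set: esx01 claims the lock record in sector 0, and esx02 can
# still write elsewhere; only a competing claim on the same record fails.
claimed = lun.test_and_set(0, expected=None, new="owner=esx01")
parallel_io = lun.write("esx02", sector=10, value="vm data")
competing = lun.test_and_set(0, expected=None, new="owner=esx02")
print(blocked, claimed, parallel_io, competing)
```

The point of the model is the scope of the lock: the reservation stalls every other host on the LUN, whereas the test-and-set only makes a second host lose when it contends for the very same record.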

Note: If your array doesn’t support VAAI, be aware that SCSI-2 disk lock commands can impact scaling of the architecture.

Storage DRS IO Load balancing and Storage IO Control

When enabling the I/O metric on the datastore cluster, Storage DRS automatically enables Storage IO Control (SIOC) on all datastores in the cluster. Storage DRS uses the I/O injector from SIOC to determine the capabilities of a datastore; in addition, enabling SIOC provides a method to fairly distribute I/O resources during times of contention.

SIOC uses virtual disk shares to distribute storage resources fairly, and these shares are applied at a datastore-wide level. The virtual disk shares of a virtual machine running on that datastore are relative to the virtual disk shares of the other virtual machines using that same datastore. To be more specific, SIOC is a host-level module and aggregates the per-host views into a single datastore view in terms of observed latency.

If the observed latency exceeds the SIOC latency threshold, each host sets its own I/O queue length based on the total virtual disk shares of the virtual machines on that host using the datastore. As SIOC and its shares are datastore focused, the cluster membership of the host has no impact on detecting the latency threshold violation and managing the I/O stream to the datastore.
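A simplified way to picture this is a proportional split of queue slots by shares. The sketch below is my own simplification, not the actual SIOC algorithm: the `datastore_queue_slots` budget, the host names and the share values are hypothetical.

```python
def host_queue_depths(shares_by_host, datastore_queue_slots):
    """Split a datastore-wide queue budget across hosts in proportion to shares.

    shares_by_host maps a host name to the sum of the virtual disk shares of
    its virtual machines on this datastore. Compute cluster membership is
    deliberately not an input.
    """
    total = sum(shares_by_host.values())
    if total <= 0:
        raise ValueError("at least one host must hold shares on the datastore")
    return {
        host: max(1, datastore_queue_slots * shares // total)
        for host, shares in shares_by_host.items()
    }


# Hosts from two different compute clusters using the same datastore.
shares = {
    "clusterA-esx01": 2000,   # e.g. two VMs with 1000 shares each
    "clusterA-esx02": 1000,
    "clusterB-esx01": 1000,
}
print(host_queue_depths(shares, datastore_queue_slots=64))
# {'clusterA-esx01': 32, 'clusterA-esx02': 16, 'clusterB-esx01': 16}
```

The host in cluster B receives exactly the same slice as the host in cluster A that holds the same number of shares, which mirrors the statement that cluster membership plays no role.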

Previous articles in the SDRS short series Architecture and design of Datastore clusters:

Part1: Architecture and design of datastore clusters.

Part2: Partially connected datastore clusters.

Part3: Impact of load balancing on datastore cluster configuration.

Part4: Storage DRS and Multi-extents datastores.

I/O Analyzer v1.1

I/O Analyzer v1.1 is now live on the Flings site:

http://labs.vmware.com/flings/io-analyzer

I/O Analyzer is a virtual appliance tool for measuring storage performance. This version of I/O Analyzer adds the ability to run trace replay – a function which allows a user to replay an I/O trace that was captured elsewhere (with vscsistats) on the target test system. This version also has cool data visualization charts, both for the characteristics of an imported trace, and performance results on the test system.

This is really cool stuff, go check it out.

Impact of Intra VM affinity rules on Storage DRS

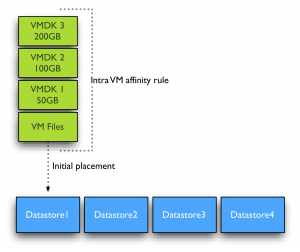

By default Storage DRS applies an Intra-VM affinity rule to all new virtual machines in the datastore cluster. The Intra-VM affinity rule keeps the virtual machine files, such as VMX file, log files, vSwap and VMDK files together on one datastore.

Keeping all files together on one datastore allows ease of troubleshooting. However, Storage DRS load balancing algorithms may benefit from distributing the virtual machine across datastores. Let’s zoom in on how Storage DRS handles a virtual machine with multiple disks when the Intra-VM affinity rule is removed from the virtual machine.

DrmDisk

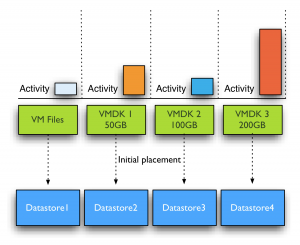

Storage DRS uses the construct “DrmDisk” as the smallest entity it can migrate. A DrmDisk represents a consumer of datastore resources. This means that Storage DRS creates a DrmDisk for each VMDK belonging to the virtual machine. The interesting part is the collection of system files and the swap file belonging to the virtual machine. Storage DRS creates a single DrmDisk for all the system files. If an alternate swapfile location is specified, the vSwap file is represented as a separate DrmDisk and Storage DRS will be disabled on the swap DrmDisk. More info about alternate swapfile locations can be found here. For example, for a virtual machine with three VMDKs and no alternate swapfile location configured, Storage DRS creates four DrmDisks:

• A separate DrmDisk for each Virtual Machine Disk File

• A DrmDisk for system files (VMX, Swap, logs, etc)
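The mapping from virtual machine files to DrmDisks can be sketched as a small function. This is purely an illustration of the rules described above; the dictionary layout and the names are my own and do not reflect internal Storage DRS data structures.

```python
def build_drmdisks(vmdks, alternate_swap_location=False):
    """Return the DrmDisks Storage DRS would track for one virtual machine.

    One DrmDisk per VMDK, one for the system files, and a separate,
    non-managed DrmDisk for the swap file when an alternate swapfile
    location is configured.
    """
    disks = [{"name": vmdk, "kind": "vmdk", "sdrs_managed": True}
             for vmdk in vmdks]
    system_contents = "VMX, logs" if alternate_swap_location else "VMX, swap, logs"
    disks.append({"name": f"system files ({system_contents})",
                  "kind": "system", "sdrs_managed": True})
    if alternate_swap_location:
        # Storage DRS is disabled on the swap DrmDisk.
        disks.append({"name": "vSwap", "kind": "swap", "sdrs_managed": False})
    return disks


default_vm = build_drmdisks(["VMDK1", "VMDK2", "VMDK3"])
relocated_swap_vm = build_drmdisks(["VMDK1", "VMDK2", "VMDK3"],
                                   alternate_swap_location=True)
print(len(default_vm), len(relocated_swap_vm))  # 4 5
```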

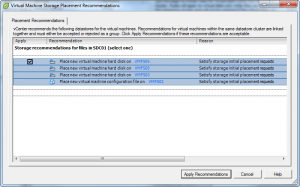

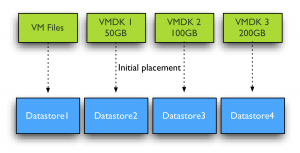

Initial placement recommendation will look similar to this screenshot when the Intra-VM affinity rule is disabled. Notice the separate recommendation for the “virtual machine configuration file”? This is the DrmDisk containing the system files.

Initial placement and Space load balancing

Initial placement and Space load balancing benefit tremendously from this increased granularity. Instead of searching for a suitable datastore that can fit the virtual machine as a whole, Storage DRS is able to look for an appropriate datastore for each DrmDisk separately. Recently I wrote an article about datastore cluster fragmentation and the ability of Storage DRS to issue prerequisite migrations. You can imagine that, due to the increased granularity, datastore cluster fragmentation is less likely to happen, and if prerequisite migrations are required, the number of migrations is expected to be a lot lower.

IO load balancing

Similar to initial placement and space load balancing, I/O load balancing benefits from the deeper level of detail. It can find a better fit for each workload generated by the VMDK files. The system file DrmDisk will not be migrated very often, as it is small in size and rarely generates a lot of I/O. Storage DRS analyzes the workload and generates a workload model for each DrmDisk; it then decides on which datastore it needs to place the DrmDisk to keep the load balanced within the datastore cluster while offering enough performance for each DrmDisk. You can imagine this becomes a lot harder when Storage DRS is required to keep all the VMDK files together. Usually the datastore chosen is the datastore that provides the best performance for the most demanding workload AND is able to store all the virtual machine disk files and system files. Now let’s dig into this a little deeper. For example, the virtual machine used in the previous example has two DrmDisks generating heavy workloads, while the DrmDisks containing the system files and VMDK2 are “cold”.

If Intra-VM affinity rules are used, space balancing is required to find a datastore that has 350+ GB free without exceeding the space utilization threshold. If I/O load balancing is enabled, this datastore also needs to provide enough performance to keep the latency below the I/O latency threshold (by default 15ms) after placing the four DrmDisks. You can imagine it’s a lot less complicated when space and I/O load balancing are allowed to place each DrmDisk on a datastore that suits its needs.
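The space side of this argument is easy to demonstrate. The sketch below compares placing the virtual machine as a whole with placing each DrmDisk separately. The individual DrmDisk sizes (which add up to the 350 GB mentioned above) and the free space per datastore are hypothetical, the greedy strategy is my own choice rather than the Storage DRS algorithm, and I/O load is ignored completely.

```python
def place_together(disks, free_gb):
    """Intra-VM affinity: one datastore must hold every DrmDisk."""
    needed = sum(disks.values())
    fits = [ds for ds, free in free_gb.items() if free >= needed]
    return max(fits, key=free_gb.get) if fits else None


def place_separately(disks, free_gb):
    """No affinity: place the largest DrmDisk first, each on the datastore
    with the most usable free space that can still hold it."""
    remaining = dict(free_gb)
    placement = {}
    for name, size in sorted(disks.items(), key=lambda kv: kv[1], reverse=True):
        fits = [ds for ds, free in remaining.items() if free >= size]
        if not fits:
            return None
        target = max(fits, key=remaining.get)
        remaining[target] -= size
        placement[name] = target
    return placement


# Sizes in GB; free_gb is the space usable before the threshold is crossed.
drmdisks = {"VMDK1": 200, "VMDK2": 100, "VMDK3": 40, "system files": 10}
free_gb = {"datastore1": 150, "datastore2": 220, "datastore3": 120}

print(place_together(drmdisks, free_gb))    # None: no datastore has 350 GB
print(place_separately(drmdisks, free_gb))
```

With these numbers the datastore cluster has 490 GB of usable space in total, yet the virtual machine cannot be placed as a whole, while every DrmDisk finds a home when placed individually.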

How to change default datastore cluster behavior?

As mentioned before, a datastore cluster defaults to applying an Intra-VM affinity rule to each new virtual machine. Recently Duncan published an article on how to change the affinity rules on active virtual machines. Unfortunately there is no user interface option available that can disable this behavior, so I turned to my good friend and colleague Alan Renouf, and he created some nice PowerCLI code to solve this problem:

As I’m not a PowerCLI user at all, I’m relaying Alan’s instructions:

First you need to run the below code to put the function into memory:

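    function Set-DatastoreClusterDefaultIntraVmAffinity {
        [CmdletBinding()]
        param(
            # Datastore cluster name (string) or an object returned by Get-DatastoreCluster
            [Parameter(Position = 0, Mandatory = $true, ValueFromPipeline = $true)]
            [PSObject]$DSC,

            # Specify -Enabled to turn the default Intra-VM affinity rule on; omit it to turn the rule off
            [Switch]$Enabled
        )
        process {
            $SRMan = Get-View StorageResourceManager
            # Resolve the input to the view object of the datastore cluster
            if ($DSC.GetType().Name -eq "string") {
                $DSC = Get-DatastoreCluster -Name $DSC | Get-View
            }
            elseif ($DSC.GetType().Name -eq "DatastoreClusterImpl") {
                $DSC = Get-DatastoreCluster -Name $DSC.Name | Get-View
            }
            # Build a spec that only changes the default Intra-VM affinity setting
            $spec = New-Object VMware.Vim.StorageDrsConfigSpec
            $spec.podConfigSpec = New-Object VMware.Vim.StorageDrsPodConfigSpec
            $spec.podConfigSpec.DefaultIntraVmAffinity = $Enabled
            # Apply the spec to the datastore cluster ($true = modify the existing configuration)
            $SRMan.ConfigureStorageDrsForPod($DSC.MoRef, $spec, $true)
        }
    }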

Once this has been run you can use the function:

    Get-DatastoreCluster "Shared Datastores" | Set-DatastoreClusterDefaultIntraVmAffinity

“Shared Datastores” is the name of the datastore cluster; change it to the name of your own datastore cluster. Because the -Enabled switch is omitted, the function disables the default rule. If you have multiple datastore clusters and want to disable the rule for all of them at once, omit the name of the datastore cluster altogether.

If ease of troubleshooting is not your first concern, then it might be beneficial to the performance of Storage DRS to disable the default Intra-VM affinity rule on the virtual machines in the datastore cluster. However, I’m interested in reasons why you wouldn’t want to disable the default affinity rule besides the troubleshooting effort.

Note:

Unfortunately I’m unaware why VMware decided to use the Intra-VM affinity rule as the default, and I do not know if a future release of vSphere will provide a UI setting to change the affinity rule behavior of the datastore cluster. Please leave a comment if you would like this option included in a new version of vSphere. All I can do is relay this to the appropriate product manager.

Storage DRS I/O load balancing and Array-based Auto-Tiering

In its basic form Storage DRS can be used together with any array; however, there are a few combinations of storage array features and Storage DRS features that don’t mix easily. One of the most frequently asked questions is whether Storage DRS can work with array-based auto-tiering. The answer is yes: you can use the initial placement and out-of-space avoidance features that Storage DRS offers. However, it is not recommended to enable the I/O metric feature.

Modeling

The main goal of the I/O metric function, popularly called I/O load balancing, is to resolve the imbalance of performance delivered by the datastores in the datastore cluster. To avoid hotspots in the datastore cluster and decrease overall latency imbalance, Storage DRS I/O load balancing uses device modeling and virtual machine workload modeling. Device modeling helps Storage DRS to understand the performance characteristics of the devices backing the datastores, while virtual machine workload modeling analyzes the virtual machine workloads running inside the datastore cluster. Both device and workload modeling assist Storage DRS in assessing the improvement of I/O latency that will be achieved after a virtual machine migration.

Device modeling and the SIOC injector

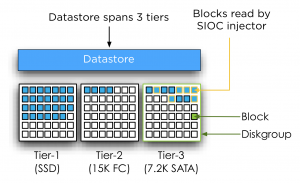

To understand and learn the performance of the devices backing the datastore, Storage DRS uses the Storage IO Control (SIOC) workload injector. To characterize the datastore, the SIOC injector opens and reads random blocks of the datastore. As the SIOC injector does not open every block backing the datastore, we cannot ensure that it opens an identical number of blocks on each performance tier to characterize the disk. When multiple performance tiers of disk back the datastore, there is a possibility that the SIOC injector opens blocks located on disks of similar speed, either slow or fast, while the datastore is primarily backed by disks with a different performance level. Let’s use an example to clarify this further.

In the diagram pictured above, SIOC opens random blocks and performs its tests. Unfortunately it doesn’t open blocks on the other disks. Although most of the blocks backing the datastore are located on faster performing disks, Storage DRS device modeling will characterize this disk with performance similar to 7.2K SATA disks. This inaccurate characterization of datastore performance might lead to an incorrect performance assessment and can lead to Storage DRS withholding a migration recommendation while there is sufficient performance available.
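A small simulation shows how much a limited random sample can misjudge a multi-tier datastore. It is a sketch under my own assumptions: the tier sizes and latencies are hypothetical, and the real SIOC injector is only known here to read random blocks, so the sampling below is a stand-in rather than its actual behavior.

```python
import random


def true_mean_latency(tiers):
    """Latency averaged over every block backing the datastore."""
    total_blocks = sum(blocks for blocks, _ in tiers)
    return sum(blocks * latency for blocks, latency in tiers) / total_blocks


def sampled_mean_latency(tiers, samples, rng):
    """Latency estimated from a handful of randomly chosen blocks."""
    latencies = [latency for _, latency in tiers]
    weights = [blocks for blocks, _ in tiers]
    picks = rng.choices(latencies, weights=weights, k=samples)
    return sum(picks) / samples


# (number of blocks, latency in ms): 80% of the blocks sit on fast disks.
tiers = [(800, 1.0), (200, 12.0)]
rng = random.Random(42)  # fixed seed so the run is repeatable

estimates = [sampled_mean_latency(tiers, samples=5, rng=rng)
             for _ in range(1000)]
print(f"true mean latency: {true_mean_latency(tiers):.1f} ms")
print(f"estimates range from {min(estimates):.1f} to {max(estimates):.1f} ms")
```

Depending on which blocks happen to be read, the same datastore can look like a pure fast-tier device or like something considerably slower than it really is, which is exactly the mischaracterization described above.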

Segment migration triggered by auto-tiering algorithms

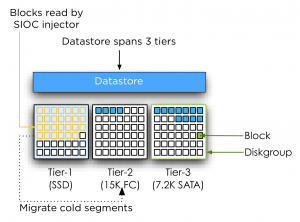

By using the SIOC injector, Storage DRS evaluates the performance of the disks. However, auto-tiering solutions migrate LUN segments (chunks) to different disk types based on the usage pattern. Hot segments (frequently accessed) typically move to faster disks, while cold segments move to slower disks. Depending on the array type and vendor, there are different kinds of policies and thresholds for these migrations. By default Storage DRS is invoked every 8 hours and requires performance data over more than 16 hours to generate I/O load balancing decisions. Multiple storage vendors offer auto-tiering solutions, each using different time cycles to collect and analyze workload before moving LUN segments. Some auto-tiering solutions move chunks based on real-time workload, while other arrays move chunks after collecting performance data for 24 hours. This means that auto-tiering solutions alter the landscape in which the SIOC injector performs its test. Let’s turn to another scenario for clarification.

In this scenario, SIOC is primarily opening blocks located in the Tier-1 diskgroup belonging to the datastore. As the datastore isn’t using these segments that often (cold) the auto tiering solution decides to migrate these segments to a lower tier. In this case the segments are migrated to 15K disks instead of SSD devices.

Storage DRS expects the behavior of the device to remain the same for at least 16 hours and bases its calculations on that assumption. Auto-tiering solutions might change the underlying structure of the datastore based on their own algorithms and timescales, which conflicts with the calculations of Storage DRS.

The misalignment of Storage DRS invocation and auto-tiering algorithm cycles makes it unpredictable when LUN segments may be moved, potentially colliding with the Storage DRS calculations and recommendations. Together with the fact that auto-tiering algorithms are transparent (invisible) to Storage DRS and that there is no communication between Storage DRS and auto-tiering algorithms, this forms the basis of the recommendation to disable the I/O metric on datastore clusters backed by devices participating in an auto-tiering solution. Always verify these recommendations with your storage vendor.

Additional information:

Duncan wrote an excellent article about the Storage IO Control workload injector, which can be found here. More info on device modeling and load balancing can be found in the article impact of load balancing on datastore cluster configuration.

Note: This article is describing Storage DRS behavior based on vSphere 5.