I regularly come across NFS-based environments where the decision is made to store the virtual machine swap files on local VMFS datastores. In certain situations, host-local swap can affect DRS load balancing and HA failover. So when designing an environment that uses host-local swap, a few areas need attention to guarantee HA and DRS functionality.

VM swap file

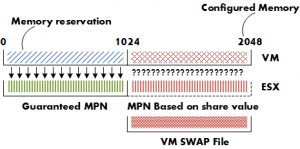

Let’s start with some basics. By default, a VM swap file is created when a virtual machine starts. The formula to calculate the swap file size is: configured memory - memory reservation = swap file size. For example, a virtual machine configured with 2GB of memory and a 1GB memory reservation will have a 1GB swap file.
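The sizing rule above can be written as a small helper. This is a minimal sketch; the function name is hypothetical and all sizes are in GB. It also covers the case of a full reservation, which leaves nothing to back with swap.

```python
def swap_file_size_gb(configured_gb: float, reservation_gb: float = 0) -> float:
    """Per-VM swap file size: configured memory minus memory reservation."""
    # A reservation can range from nothing up to the full configured memory.
    if reservation_gb < 0 or reservation_gb > configured_gb:
        raise ValueError("reservation must be between 0 and the configured memory")
    return configured_gb - reservation_gb


print(swap_file_size_gb(2, 1))  # 1 -> the 2GB VM with a 1GB reservation
print(swap_file_size_gb(4))     # 4 -> no reservation: swap equals configured memory
```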

A reservation guarantees that the specified amount of virtual machine memory is always backed by ESX machine memory. Swap space must be reserved on the ESX host for any virtual machine memory that is not guaranteed to be backed by ESX machine memory. For more information on memory management of the ESX host, please see the article on the impact of memory reservation.

During startup of the virtual machine, the VMkernel pre-allocates the swap file blocks to ensure that all pages can be swapped out safely. A VM swap file is a static file and will not grow or shrink no matter how much memory is paged. If there is not enough disk space to create the swap file, host admission control will not allow the VM to be powered on.

Note: If the local VMFS datastore does not have enough space, the VMkernel tries to store the VM swap file in the working directory of the virtual machine. You need to ensure enough free space is available in the working directory, otherwise the VM is still not allowed to be powered on. And that is without even considering that you did not want the VM swap file on shared storage in the first place.
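The power-on behaviour described in the two paragraphs above can be modelled as a simple decision. This is a simplified sketch, not the VMkernel's actual logic. The function name and location labels are hypothetical, and it assumes the only space that matters is room for the full pre-allocated swap file (log files and other per-VM files are ignored).

```python
from typing import Optional


def swap_file_location(swap_gb: float, local_free_gb: float,
                       workdir_free_gb: float) -> Optional[str]:
    """Return where the swap file would be created, or None if power-on is refused."""
    # Preferred location with host-local swap: the host's local VMFS datastore.
    if local_free_gb >= swap_gb:
        return "local VMFS"
    # Fallback: the VM's working directory, which usually lives on shared storage.
    if workdir_free_gb >= swap_gb:
        return "working directory"
    # Neither location can hold the pre-allocated file: admission control refuses.
    return None


print(swap_file_location(4, local_free_gb=10, workdir_free_gb=0))  # local VMFS
print(swap_file_location(4, local_free_gb=2, workdir_free_gb=50))  # working directory
print(swap_file_location(4, local_free_gb=2, workdir_free_gb=1))   # None
```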

This rule also applies when migrating a VM configured with a host-local swap file, because the swap file must be created on the local VMFS volume of the destination host. Besides creating a new swap file, the swapped-out pages must be copied to the destination host. It’s not uncommon for a VM to have pages swapped out, even if there is no memory pressure at that moment. ESX does not proactively return swapped pages to machine memory. Swapped pages stay swapped: the VM must actively access a page in the swap file for it to be transferred back to machine memory, and this only occurs if the ESX host is not under memory pressure (more than 6% free physical memory).

Copying host-local swap pages between the source and destination host is a disk-to-disk copy process. This is one of the reasons why VMotion takes longer when host-local swap is used.

Real-life scenario

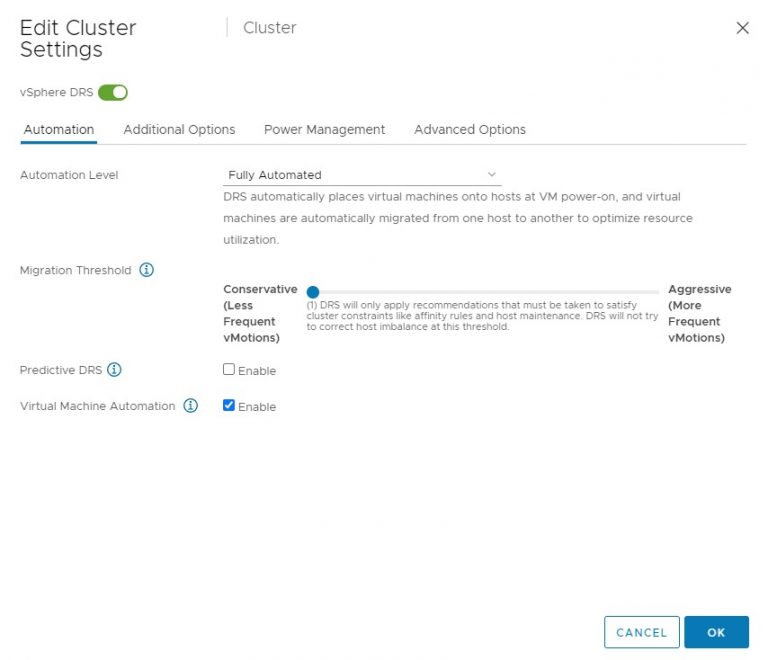

A customer of mine was not aware of this behavior and had ignored multiple warnings about full local VMFS datastores on some of their ESX hosts. All the virtual machines were up and running and all seemed well. Some ESX servers had low resource utilization and only a few active VMs, while other hosts were highly utilized. DRS was active on all the clusters, set to fully automated with the default (3 stars) migration threshold. It looked like we had a major DRS problem.

DRS

If DRS decides to rebalance the cluster, it migrates virtual machines to less utilized hosts. During the VMotion process, the VMkernel tries to create a new swap file on the destination host. In my scenario, the lightly utilized hosts had no free space left on their local VMFS datastores, so DRS could not VMotion any virtual machine to them. However, DRS still monitored the host CPU active and host memory active metrics of those hosts to calculate the load standard deviation it uses for its cluster-balancing recommendations. (More info about the DRS algorithm can be found on the DRS deepdive page.) The lack of disk space on the local VMFS datastores reduced the effectiveness of DRS and limited its options to balance the cluster.
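The constraint that limited DRS in this scenario can be sketched as a destination filter. This is a hypothetical illustration, not part of the DRS algorithm. The `Host` type, host names and free-space figures are made up. The filter ignores host utilization on purpose, to show that a lightly loaded host with a full local datastore never qualifies as a target.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    local_vmfs_free_gb: float  # free space on the host's local swap datastore


def vmotion_candidates(hosts: list[Host], swap_gb: float) -> list[str]:
    # A destination is only usable if a new swap file of swap_gb fits on its
    # local VMFS datastore, regardless of how lightly loaded that host is.
    return [h.name for h in hosts if h.local_vmfs_free_gb >= swap_gb]


cluster = [Host("esx01", 0), Host("esx02", 12), Host("esx03", 3)]
print(vmotion_candidates(cluster, swap_gb=4))  # ['esx02']
```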

High availability failover

The same applies when an HA isolation response occurs: if not enough space is available to create the virtual machine swap files, no virtual machines are started on the host. If a host fails, its virtual machines will only be restarted on hosts with enough free space on their local VMFS datastores. Virtual machines might not power on at all if not enough free disk space is available.
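To see how free local space, rather than CPU or memory, can decide which VMs come back after a failure, here is a hypothetical greedy placement. This is not how HA actually selects hosts. It assumes each VM needs its full swap file size on the chosen host and simply spreads VMs to the host with the most space left. Host names and sizes are illustrative.

```python
def place_restarts(swap_sizes_gb: list[float],
                   host_free_gb: dict[str, float]) -> tuple[dict[str, list[int]], list[int]]:
    free = dict(host_free_gb)                # remaining local VMFS space per host
    placement = {host: [] for host in free}  # host -> indexes of VMs restarted there
    unplaced = []                            # VMs that cannot be powered on anywhere
    for vm, swap in enumerate(swap_sizes_gb):
        fits = [host for host in free if free[host] >= swap]
        if not fits:
            unplaced.append(vm)
            continue
        # Spreading heuristic: pick the host with the most free space left.
        target = max(fits, key=lambda host: free[host])
        placement[target].append(vm)
        free[target] -= swap
    return placement, unplaced


placement, unplaced = place_restarts([4, 4, 4], {"esx02": 8, "esx03": 2})
print(placement)  # {'esx02': [0, 1], 'esx03': []}
print(unplaced)   # [2]
```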

Failover capacity planning

When using the host-local swap setting to store the VM swap files, consider the following factors:

• Number of ESX hosts inside the cluster.

• HA configured host failover capacity.

• Number of active virtual machines inside the cluster.

• Consolidation ratio (VMs per host).

• Average swap file size.

• Free disk space on the local VMFS datastores.

| Parameter | Value |
|---|---|
| Number of hosts inside cluster | 6 |
| HA configured host failover capacity | 1 |
| Active virtual machines | 162 |
| Average consolidation ratio | 27:1 |
| Average memory reservation | 0GB |
| Average swap file size | 4GB |

For the sake of simplicity, let’s assume that DRS balanced the cluster load and that all (identical) virtual machines are spread evenly across every host.

If a host fails, its 27 VMs will be restarted on the remaining 5 hosts inside the cluster, an average of 5.4 virtual machines per host. As it is impossible to start 0.4 of a VM, some ESX hosts will start 6 virtual machines, while other hosts will start 5.

With an average swap file size of 4GB, each host needs at least 24GB (6 × 4GB) of free space on its local VMFS datastore to start the VMs. Besides the 24GB, enough free space needs to be available for DRS to move multiple VMs around to rebalance the load across the cluster.

If the design of the virtual infrastructure incorporates site failover as well, enough free disk space must be reserved on all the ESX hosts to power on all the affected virtual machines from the failed site.
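The failover arithmetic above can be captured in one helper. This is a sketch assuming identical VMs spread evenly, as in the example. The function name and the optional `drs_headroom_gb` parameter (extra space for DRS moves) are hypothetical, and how much headroom to add is a design decision the article leaves open.

```python
import math


def free_swap_space_per_host_gb(vms_to_restart: int, surviving_hosts: int,
                                avg_swap_gb: float, drs_headroom_gb: float = 0) -> float:
    if surviving_hosts <= 0:
        raise ValueError("at least one surviving host is required")
    # VMs cannot be split, so the busiest surviving host restarts ceil(n / hosts) VMs.
    vms_per_host = math.ceil(vms_to_restart / surviving_hosts)
    return vms_per_host * avg_swap_gb + drs_headroom_gb


print(free_swap_space_per_host_gb(27, 5, 4))  # 24 -> the single host failure above
print(free_swap_space_per_host_gb(81, 3, 4))  # 108 -> hypothetical site failure
```

The second call assumes a hypothetical two-site layout of the same cluster, with 3 hosts and 81 VMs per site, where one whole site fails. The required local free space per host jumps from 24GB to 108GB, which is why site failover has to be part of the sizing.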

Closing remarks

Using host-local swap can be a valid option for some environments, but the factors mentioned above must be calculated carefully to ensure sustained HA and DRS functionality.

Great article, it’s all about understanding the impact of your decisions…

One little side note, the formula to calculate the swap file size is: memory limit – memory reservation. Usually the configured memory is the same as the limit, but the limit can be lower.

Sorry, I’m wrong.

An excellent article, it certainly is food for thought.

Eric,

Thanks for the reply, but the correct calculation is configured memory - reservation.

See page 31 of the vSphere resource management guide:

You must reserve swap space for any unreserved virtual machine memory (the difference between the reservation and the configured memory size) on per-virtual machine swap files.

I understand your point, as a limit reduces the amount of memory allowed to be backed by machine memory, but you cannot configure a virtual machine with a limit lower than its reservation. A limit has no effect on the size of the swap file. It only restricts the VM’s ability to use machine memory.

For example, a machine configured with 2GB of memory and a 1GB memory reservation ends up with a 1GB swap file. The minimum limit of that VM is 1GB. With the limit set to that minimum, all pages above 1GB are not allowed to be backed by physical memory and are paged to the swap file by default.

When no reservation is set, the swap file will be equal to the configured memory of the virtual machine.

Ah just spotted your correction.

But your question was a good exercise!

Nice article Frank! Can you think of any reasons why people want to store swap on local VMFS (besides there being no shared storage)?

Most of the time it’s not that shared storage is unavailable, but rather to reduce the I/O load on the shared storage environment.

VMware used to recommend placement of swap files on local VMFS datastores when NFS was used as shared storage.

vConsult also made a good point on Twitter: when your shared storage is replicated, you don’t want to replicate the swap files to the disaster recovery site. That’s one reason to place the swap files on a non-replicated LUN or local VMFS.

Frank, you’re right, it’s not the limit. I actually tested it in my lab. There’s still an error in the Fast-Track Guide, which states (p. 435):

“The size of the VMKernel swap file is determined by the difference between how much memory the virtual machine can use (the virtual machine’s maximum configured memory or its memory limit) and how much RAM is reserved for it (its reservation).”

That’s when I decided to test it.

If you don’t want to replicate your swap file why not store the swap file on a separate shared VMFS datastore?

And why even care about replication? If you’re not overcommitting, the .vswp file is more or less static and should not increase replication traffic after the initial replica.

Great article Frank. I don’t have any experience with local swap files, but this is something that could be easily overlooked.

I fully agree with Duncan. When you do a proper sizing of your environment you hardly get any ESX swapping, so replication overhead is minimized.

When you care about storage replication, consider placing the guest’s page/swap file on a separate datastore that is not replicated. The guest’s page/swap file is utilized more than the ESX swap.

Oh and before your host hits swapping it will try ballooning first, which utilizes the guest’s swap/page even more.

But every time you power off or power on a virtual machine the replication will be triggered again. Best practice: Locate the swap file on shared storage but not on replicated storage.

Great Article !

I wrote a PowerCLI one-liner to display the VMs with their average swapped memory and the amount of memory used by memory control (ballooning). I was surprised to see some VMs with swapped memory even when the hosts looked fine. This article helps to explain that, thanks Frank!

What amount of data in a swap file would you consider not worth worrying about?

One-liner for those interested:

```powershell
# List powered-on VMs with their swapped (SwapKB) and ballooned (MemBalloonKB) memory, from the latest realtime sample
Get-VM | Where-Object { $_.PowerState -eq "PoweredOn" } |
    Select-Object Name, Host,
        @{N="SwapKB"; E={ (Get-Stat -Entity $_ -Stat mem.swapped.average -Realtime -MaxSamples 1 -ErrorAction SilentlyContinue).Value }},
        @{N="MemBalloonKB"; E={ (Get-Stat -Entity $_ -Stat mem.vmmemctl.average -Realtime -MaxSamples 1 -ErrorAction SilentlyContinue).Value }} |
    Out-GridView
```

Good stuff that I’ll keep in mind when I’m thinking about putting swap files locally.

That’s true Eric, but will that really saturate your link? If that’s the case, you will need to do the math.

Question is though, will you also replicate your Windows swap?

Eric,

How often is a vm powered down in a production environment?

Guest OS reboots don’t count.

Although powering a VM off and on will trigger replication, it will not necessarily replicate the complete swap file. Replication is active at the block level of the backend storage. Deleting the swap file only causes a small change in the allocation table. No disk scrubbing is done.

The same holds true for creating the swapfile. The swap file isn’t eagerzeroed, but zeroed out on first write AFAIK. Therefore the impact on replication is minimal.

I’m no storage specialist though.

Interesting topic.

Arnim,

Not entirely true, the VMkernel does not zero-out the swap file, it will reserve only the blocks.

For the case Frank was talking about, we actually looked into not replicating the Windows swap, but decided that would make the setup too complex. Even with sufficient bandwidth, you do not want to replicate data that is useless on the other side, especially when replication carries a performance penalty.

Frank,

I mentioned that the swap file is NOT eagerzeroed. But aren’t the reserved blocks zeroed out before they are written to?

Oh apologies, I misunderstood your comment.

I think a block is zeroed out before writing, will come back on this.

That’s also true Arnim. The vswp file isn’t zeroed out up front; it’s only zeroed when a write occurs.

I agree with Arnim on this one. In our production environment, the VMs are almost never shut down and booted again. Instead, the OS inside them is rebooted. However, now that I think of it, there is an option in Windows to clear the pagefile during boot. That could certainly impact performance when rebooting 3000 VMs... I would disable that setting. Great article Frank, it’s going to be a classic!

I know a large NFS shop that places all VMkernel swap on a no-snap volume, while the VMs are typically placed on snap volumes for backup purposes. The logic? No need to snap VMkernel swap wasting Tier 1 disk space. Furthermore, VMkernel swap doesn’t need to roll off to snap vault for the same reasons.

This discussion got me thinking and I provisioned a new thin VMFS from my LeftHand array and parked a new VM out there with 4 GB RAM. I put it into a resource pool that is capped at 1 GB and fired up MemTest x86. This was so I could ensure that the swap file was being used — simply starting the VM did allocate a file on the VMFS, but the array didn’t grab any more storage for the volume.

Once I started swapping, the array allocated a couple GB and I took a snapshot and powered off the VM. When I brought it back up, no additional space was allocated for the VSWP file until I again started using it… then the array grabbed another couple GB.

I guess the moral of the story is that the act of allocating the swap file does not seem to allocate much disk space, but that may depend on how your array handles provisioning. If it looks at changed blocks, you should be fine.

Still not sure if hosting swap files on separate non-replicated storage is such a wise idea.

If you have a setup in which you have two sites, then you must take into account the possibility of a site failure. In that case, VMs should be started up on the working site, and therefore, you also need space for those VM swapfiles. Because of this situation, I think it’s better to store swap on replicated storage.

For the same reason, it’s better not to store swap files on local storage, because each ESX host needs more local storage to accommodate a site failure.

Please, shoot me on this! xD

PS: I found some more interesting information on local swap (a little outdated, but I think it still applies): http://www.vmware.com/files/pdf/new_storage_features_3_5_v6.pdf#5


Windows pagefile: Bouke, Windows has an option to clear the pagefile at shutdown, but it’s disabled by default (I’m not aware of any setting that clears the pagefile at bootup). So this probably isn’t a concern in practice.

Replication traffic: there seems to be a soft consensus here that a VM startup reserves disk blocks but no block-level replication is triggered until there’s an actual write — which may well never occur since memory ballooning always precedes use of the VSWP file. It’s essential to verify this on your particular array, though.

Array storage: yeah, it does seem awfully expensive to “waste” Tier 1 storage on mere swap files. The idea Jason mentioned of using non-replicated NAS/NFS storage has a certain appeal but if the storage is too much cheaper, it may not be as well engineered (for example, against single-points-of-failure) as the Tier 1 stuff. Though presumably the NFS shops Frank is referring to have already done their homework.

Host-local may have slight performance benefits vs. SAN, but one could argue that if the host is so loaded that you’re in swapping territory, the location of your VSWP files is probably the least of your worries.

There’s no perfect solution, just tradeoffs, but personally I like the idea of using non-replicated, but well-engineered, NFS/NAS for swap files — you get the benefits of central storage (no performance hit when doing VMotion) at the cost of having to install a NAS in each site (production, D/R). That is, use Tier 1 (snapshots, replication) storage for VMs and Tier 2 (fast and no single-points-of-failure, but no snapshots or replication) for swap.

Richard, why waste often valuable WAN bandwidth replicating state that won’t be used at the other site? Sure you need to have a target for the swap at the other side, but it doesn’t need to be replicated from the first site.


What I do with swap is create thin-provisioned NFS volumes that are the size of my aggregate (NetApp). This way I get the benefit of fast shared storage for swap, and the storage array only stores what swap actually needs. By oversubscribing to the size of the aggregate, I am protected in that I’ll always have enough capacity available for swap. Of course, I need to monitor the available space in my aggregate, but that’s not a problem.

So, thin provision swap on non-replicated, non snapshotted volumes is what I like to do.

Excellent info and banter, thanks Frank!

We’ve been running VMware since 2.5 and I didn’t like it when VMware forced us to use SAN storage in 3.0 (since changed, I realize). In my environment SAN storage is expensive, and the amount taken if all my swap files were moved to it would be in the TBs. All my ESX servers have a pair of mirrored 146 GB drives (a few have 300s). On our current servers (soon to change) the most RAM I have is 128 GB. If you subtract the ESX layer, that leaves enough space on the local drive for a VMFS partition to hold a lot of swap space. I load our ESX servers so that we can take an entire site down and still run all of production with little to no overcommit on RAM. I haven’t yet seen a reason to move all that useless space to the SAN, mirrored or not.

Now, as we start to evaluate moving to ESXi, and thus removing the need for local storage, things change, but at least in our environment to date I can’t justify spending the extra $$ on SAN storage.

As has been said, it goes back to knowing your environment, your needs and ramifications of your design decisions. Like most everything in this world one size does not fit all.

Very nice article Frank, really helpful.

Great Article, Cheers.

Frank, thanks for the article.

Another thing I’d like to clarify is what happens to the in-RAM VM memory during vMotion when the target host is under memory pressure. Does the source host swap VM memory, or does the target host try to free memory before placing the vMotioned VM?