AMD’s current flagship model is the 12-core Opteron 6100, code name Magny-Cours. Its architecture is quite interesting, to say the least. Instead of developing one CPU with 12 cores, the Magny-Cours is actually two 6-core “Bulldozer” CPUs combined into one package. This means that an AMD 6100 processor is actually seen by ESX as this:

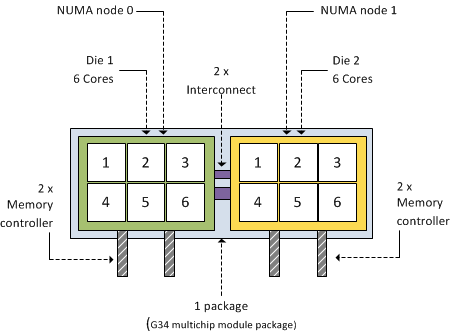

As mentioned before, each 6100 Opteron package contains 2 dies. Each CPU (die) within the package contains 6 cores and has its own local memory controllers. Even though many server architectures group DIMM modules per socket, due to the use of the local memory controllers each CPU will connect to a separate memory area, therefore creating different memory latencies within the package.

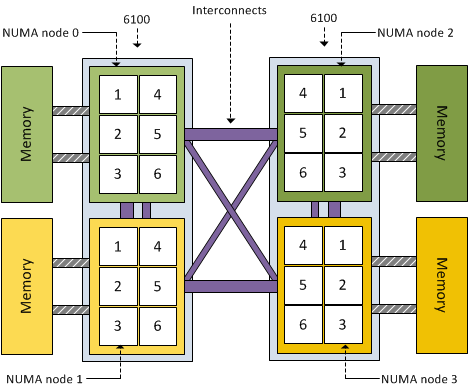

Because memory latency differs within the package, each CPU is seen as a separate NUMA node. That means a dual-processor AMD 6100 system is treated by ESX as a four-NUMA-node system.
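The node count follows directly from the package layout described above. A minimal sketch of that arithmetic, where the `Topology` class and its field names are purely illustrative and not part of any VMware or AMD tooling:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Topology:
    """Hypothetical description of a host; names are illustrative only."""
    sockets: int           # physical processor packages in the server
    dies_per_package: int  # each die has its own local memory controllers
    cores_per_die: int

    @property
    def numa_nodes(self) -> int:
        # Every die is a separate NUMA node because it owns its local memory.
        return self.sockets * self.dies_per_package

    @property
    def total_cores(self) -> int:
        return self.numa_nodes * self.cores_per_die


# Dual-socket Opteron 6100 (Magny-Cours): 2 packages x 2 dies x 6 cores.
dual_6100 = Topology(sockets=2, dies_per_package=2, cores_per_die=6)
print(dual_6100.numa_nodes, dual_6100.total_cores)  # prints "4 24"
```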

Impact on virtual machines

Because the AMD 6100 is actually two 6-core NUMA nodes, creating a virtual machine configured with more than 6 vCPUs will result in a wide-VM. In a wide-VM the vCPUs are split across multiple NUMA clients. When the virtual machine is powered on, the CPU scheduler determines the number of NUMA clients that need to be created so that each client can reside within a NUMA node. Each NUMA client contains as many vCPUs as will fit inside a NUMA node. That means that an 8 vCPU virtual machine is split into two NUMA clients: the first NUMA client contains 6 vCPUs and the second NUMA client contains 2 vCPUs. The article “ESX 4.1 NUMA scheduling” contains more info about wide-VMs.
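The split described above can be sketched in a few lines. This is only an illustration of the rule "fill each NUMA client up to the node size"; the function name `split_into_numa_clients` is made up for this example and this is not the VMkernel's actual code:

```python
from typing import List


def split_into_numa_clients(vcpus: int, cores_per_node: int) -> List[int]:
    """Return the vCPU count of each NUMA client for a VM.

    Illustrative only: each client is filled up to the node size,
    and any remainder goes into one last, smaller client.
    """
    if vcpus < 1 or cores_per_node < 1:
        raise ValueError("vcpus and cores_per_node must be positive")
    full_clients, remainder = divmod(vcpus, cores_per_node)
    clients = [cores_per_node] * full_clients
    if remainder:
        clients.append(remainder)
    return clients


# 8 vCPUs on a 6-core NUMA node: one client of 6 and one of 2.
print(split_into_numa_clients(8, 6))  # prints "[6, 2]"
# 6 vCPUs or fewer fit in a single node, so the VM is not a wide-VM.
print(split_into_numa_clients(6, 6))  # prints "[6]"
```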

Distribution of NUMA clients across the architecture

ESX 4.1 uses a round-robin algorithm during initial placement and will often pick nodes within the same package. However, this is not guaranteed, and during load balancing the VMkernel could migrate a NUMA client to a NUMA node outside the current package.
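To see why round-robin placement often, but not always, keeps a wide-VM inside one package, consider this toy model. It assumes nodes are numbered consecutively per package (nodes 0 and 1 in package 0, nodes 2 and 3 in package 1) and that placement simply continues from a rotating start position. Both are assumptions made for illustration; the function names are hypothetical and the real VMkernel logic is not shown here:

```python
from typing import List


def place_round_robin(client_count: int, node_count: int, start: int) -> List[int]:
    """Assign each NUMA client to the next node in round-robin order."""
    return [(start + i) % node_count for i in range(client_count)]


def packages_used(nodes: List[int], dies_per_package: int) -> List[int]:
    """Map node numbers to package numbers (assumed consecutive numbering)."""
    return [node // dies_per_package for node in nodes]


# Dual-socket 6100: 4 NUMA nodes, 2 dies per package, a wide-VM with 2 clients.
same_package = place_round_robin(client_count=2, node_count=4, start=0)
print(same_package, packages_used(same_package, 2))  # prints "[0, 1] [0, 0]"

# If the rotation happens to start at node 1, the clients straddle two packages.
split_package = place_round_robin(client_count=2, node_count=4, start=1)
print(split_package, packages_used(split_package, 2))  # prints "[1, 2] [0, 1]"
```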

Although the new AMD architecture in a two-processor system ensures a 1-hop environment due to the existing interconnects, the latency from one CPU to the memory of another CPU within the same package is lower than the latency to memory attached to a CPU outside the package. If more than two processors are used, a 2-hop system is created, with different inter-node latencies due to the varying distance between the processors in the system.

Magny-Cours and virtual machine vCPU count

The new architecture should perform well, at least better than the older Opteron series, due to the increased bandwidth of the HyperTransport interconnect and the availability of multiple interconnects to reduce the number of hops between NUMA nodes. By using wide-VM structures, ESX reduces the number of hops and tries to keep as much memory local as possible. But, if possible, the administrator should try to keep the virtual machine vCPU count at or below the core count per NUMA node. In the 6100 Magny-Cours case that means a maximum of 6 vCPUs per virtual machine.

Great Article! Good information to know when making sizing recommendations.

I do have one question though. Since 5600 Intel CPU’s only have 8 cores in a dual socket machine, and these have 24 cores in a dual socket machine, would opteron CPU’s be better for higher density virtual desktop hosts? where you would normally have more 1 vCPU vm’s but more of them ?

At the risk of being a little pedantic, the Magny-Cours is not the “Bulldozer” architecture. Magny-Cours uses the same basic architecture as the AMD Phenom II. Bulldozer is a next-gen architecture that won’t debut until the 2nd half of 2011.

Is the Xeon 7500 multiple NUMA nodes or are the SMI links equidistant from all cores in the package?

Frank,

Great stuff! This is the article I was waiting for, we talked about this on VMWorld 😉

I am a bit disappointed about this finding though, because this means there are only 2 memory channels per NUMA node with slower cores, compared to Intel Westmere (EP architecture) where I have 3 memory channels per NUMA node (and 4 channels on the EX architecture with 8 cores) with the possibility of faster CPUs (X56xx).

So seems like the choice of CPU boils down to more but “slower” cores per server for AMD (24 cores with 4 NUMA nodes) against optionally (depending on CPU model) faster cores & 3 mem channels/NUMA node for Intel Westmere (12 cores with 2 NUMA nodes).

Wondering if there are any benchmarks comparing single AMD (Phenom II) cores against Intel Westmere cores, so we can have an idea on the true performance difference of each core type…

OMG, how glad am I to stumble across a great website. I have an AMD 6128 for learning VMware; thanks for this great article, this will be a TREAT to read. Definitely bookmarking your site 🙂

Oh what the heck, because you wrote this interesting article.

I just placed an order from Amazon to support you!

Keep up the cool easy to read articles!

Finally read this article, quite interesting. What’s the best recommendation?

I have the 6128 (8 core); I’m guessing it’s 2 x quad-core dies per package.

Would it perform better if I ran each VM with 4 vCPUs?

Since it’d be fewer hops.

Next Question:

How are the NUMA nodes counted? I have the ASUS server motherboard with 8 x 4GB per socket, so I have a total of 64GB memory.

My question is, does each socket have 2 NUMA nodes attached to it?

If so, does that mean I have a dual-socket system with four NUMA nodes?

Thanks for your time!

Does ESX see the 12-core processor as a single CPU for licensing purposes? Behind the scenes it looks like two six-core CPUs, so how many licenses do you need to buy per socket?