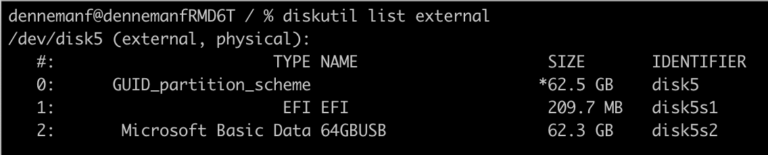

I’ve seen this image pop up quite a bit in my Twitter timeline this week, and it’s a very recognizable situation. Most of us have been in such a conversation; I know I was when I was an enterprise architect. Most of the tweets wish for this situation to change in 2015, and I totally agree. However, in my opinion it’s not the “app owner” who gives the wrong answer; it’s the wrong question to begin with. When I order bread at the bakery, the baker asks what kind of bread I want, not how he needs to operate and fine-tune his machinery in order to give me the product I want. Why do we think our industry, our service offering, is different?

In reality, can you expect app owners to truly understand the I/O characteristics of their applications? Maybe they read all the vendor documentation, maybe they followed a couple of courses on how to configure and operate the application, or maybe they even have a few certifications under their belt. But there are no classes or courses on the dynamics of the workload you are running. The application stack is merely a framework for delivering a service to the business you are serving.

The dynamics of a workload are very complex, especially in a virtualized datacenter. Typically, enterprise applications do not generate a consistent workload pattern. These patterns differ between servicing users and interacting with infrastructure services. During their life cycle, applications are updated and code and queries improve, which changes application behaviour. Pete Koehler wrote down his experience in his article “Using a new tool to discover old problems”.

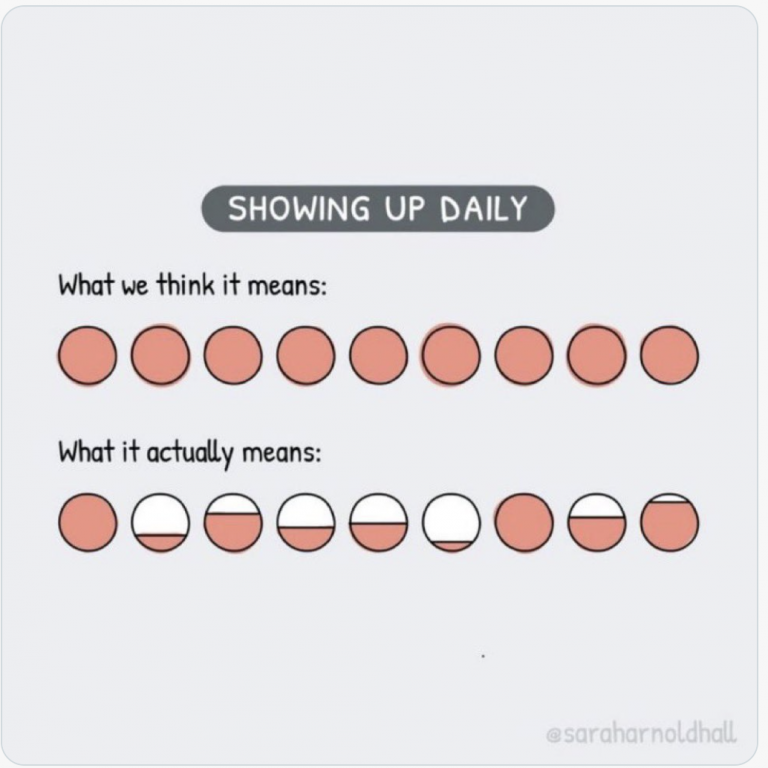

Besides generating a variety of workload patterns, an application is subject to change during its lifecycle. Interaction and demand change, impacting the underlying infrastructure differently over time. Typically an application experiences a lot of interaction during the test/dev/acceptance process before going into production. After the introduction period, demand is low but increasing. During the maturity of the application, demand peaks. At some point the application is replaced and phased out. During this phase workload demand tapers off, but the application still requires a particular level of service. During all these phases, the infrastructure needs to provide the service the organization demands. And this is just an isolated case of one particular application.

Typically the virtual datacenter infrastructure is shared. A virtual machine containing an application lands on a datastore on a storage array, and that datastore typically stores multiple virtual machines. The datastore is backed by a LUN, which is backed by multiple physical devices. Access to the devices goes through shared controllers, and the list continues all the way up the stack to the hypervisor. Maybe the application owner understands what type of I/O the application generally produces, but the underlying stack will impact the performance. Can you ensure the application gets the performance it requires? Do you know if the infrastructure is capable of delivering the service the application requires? And what about the impact of the application on the infrastructure? How will introducing this application impact the currently active applications? Will it impact their service levels?

Therefore I believe that two things need to change: behavior and tooling. IT needs to switch from asking technical questions to asking functional questions. It’s better to understand the application’s role and place in the business process. Typically this provides insight into the availability, concurrency and response requirements of the application. The second thing that needs to change, and this is what I hope 2015 will bring, is better tooling that provides insight into workload characteristics: tooling that provides better analysis of application demand and its impact on infrastructure. At this stage, most tooling is ineffective in providing proper information. Virtualized datacenters need tooling that provides a better view into the theatre of consumers and producers, and a more holistic view of the application workload characteristics while being able to monitor resource usage. Having such tools allows IT departments to operationalize and manage their environments much better, ensuring proper service levels while understanding the capability of the environment. Looking at the current developments in the IT industry, it is incredibly difficult to predict what type of workloads (and especially in what form/platform) will hit the enterprise IT landscape in the next two years. Understanding what your environment truly delivers is a necessity when discussing future workloads.
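To make “workload characteristics” a bit more concrete, here is a minimal sketch of the kind of summary such tooling might produce from a trace of I/O requests. Everything in it is an assumption for illustration: the `IORecord` fields, the `summarize` function and the sample values are hypothetical and do not come from any particular product.

```python
import math
from collections import Counter
from dataclasses import dataclass


@dataclass
class IORecord:
    """One observed I/O request (hypothetical trace format)."""
    op: str            # "read" or "write"
    size_kb: int       # block size of the request in KB
    latency_ms: float  # observed completion time in milliseconds


def summarize(records):
    """Reduce a list of IORecord entries to a few workload characteristics."""
    if not records:
        raise ValueError("need at least one I/O record")

    total = len(records)
    reads = sum(1 for r in records if r.op == "read")
    sizes = Counter(r.size_kb for r in records)
    latencies = sorted(r.latency_ms for r in records)

    # Nearest-rank 95th percentile: the smallest value with at least
    # 95% of the samples at or below it.
    p95_index = max(0, math.ceil(0.95 * total) - 1)

    return {
        "read_ratio": reads / total,
        "block_size_mix": {size: count / total
                           for size, count in sorted(sizes.items())},
        "p95_latency_ms": latencies[p95_index],
    }


# Made-up sample: three reads and one large write.
sample = [
    IORecord("read", 4, 1.0),
    IORecord("read", 4, 2.0),
    IORecord("write", 64, 10.0),
    IORecord("read", 8, 3.0),
]
summary = summarize(sample)
```

A summary like this, collected per application over time, is something the app owner never has to produce by hand, which is the point: the infrastructure observes the workload instead of asking for it.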

I think this is a necessary step for datacenter advancements. Once you know what’s going on, once you’ve got proper tooling to provide better insights, you can feed this data into advanced algorithms that distribute the load across the infrastructure, giving each application the performance it requires while optimizing resource utilization, all with the correct priority aligned to business needs. This goes beyond today’s offerings such as DRS, Storage DRS and SIOC in vSphere datacenters and Mesos in container landscapes.

My wish for 2015 – better tooling to provide better insight
