My background is Fibre Channel, and at the beginning of 2009 I implemented a large iSCSI environment. The “other” storage protocol supported by VMware, NFS, is rather unknown to me. To be honest, I really tried to keep away from it as much as possible, thinking it was not a proper enterprise-worthy solution. That changed this month when I was asked to perform a design review of an environment that relies completely on NFS storage. This customer decided to use IP-Hash as the load-balancing policy for their NFS vSwitch, but what impact does this have on the NFS environment?

First of all, unlike the default port-based load-balancing policy, IP-Hash has some technical requirements.

The physical switch must be configured with static link aggregation (a.k.a. static EtherChannel on Cisco switches); dynamic LACP is not supported by VMware. KB article 1004048 focuses on the configuration of link aggregation between the ESX server and the physical switches.

Another technical requirement is that all uplinks in the vSwitch need to be active, as the physical switch is not aware of the status of an uplink inside the vSwitch. David Marotta (VMware) explained the theory in such a way that I cannot forget this setting after hearing the story:

Because the pSwitch thinks it can send traffic to a particular VM down any of the pNICs. So all pNICs should be Active. Otherwise traffic to VM-A could be sent by the pSwitch to pNIC-2, and if the vSwitch thinks pNIC-2 is not Active for the port group used by VM-A, the vSwitch will drop it. Then VM-A will never get the packet. And it will sit in a corner, lonely and depressed, wondering why nobody calls it anymore.

Etherchannels and standby uplinks can introduce “MAC flaps”. Duncan Epping (@DuncanYB) has written an excellent article on this as well: “Active / Standby etherchannels?”

Besides the technical requirements of IP-Hash, it will not do perfect load balancing out of the box. Remembering Ken Cline’s (@clinek) series “The Great vSwitch Debate”, I knew you must dive into the algorithm used by IP-Hash load balancing and pick specific IP addresses to make IP-Hash load balancing work.

But how does this IP-Hash algorithm work? As Ken Cline so eloquently stated:

Take an exclusive OR of the Least Significant Byte (LSB) of the source and destination IP addresses and then compute the modulo over the number of pNICs.
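Ken’s description can be written down as a small Python sketch. This is only an illustration of the quoted sentence, not VMware’s actual implementation; the function name `uplink_lsb` is made up for this example, and the addresses are the fictitious ones used later in this post.

```python
import ipaddress


def uplink_lsb(src: str, dst: str, num_uplinks: int) -> int:
    """XOR the last octet of both addresses, then take the modulo over the uplink count."""
    src_lsb = int(ipaddress.IPv4Address(src)) & 0xFF  # least significant byte of the source
    dst_lsb = int(ipaddress.IPv4Address(dst)) & 0xFF  # least significant byte of the destination
    return (src_lsb ^ dst_lsb) % num_uplinks


# VMkernel address and first NAS address from the example in this post, 2 uplinks
print(uplink_lsb("145.10.44.10", "145.10.44.80", 2))
```

With 2 uplinks the modulo only looks at the lowest bit, so it makes no difference whether you XOR just the last octet or the complete 32-bit addresses.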

OK, right! So how can we calculate it so we know if the environment is balanced? Instead of following the algorithm stated by Ken, I’ve used the method described in KB article 1007371.

Waiver

The IP addresses used in this example are fictitious and are not based on real-life addresses; if they are in use by some company, it’s purely coincidental.

Step 1: Convert IP address to a HEX value

Use an IP hex converter tool to convert the IP addresses to hex. An online hex converter tool can be found at http://www.kloth.net/services/iplocate.php

In this example I use a vSwitch with 2 uplinks. When using IP-Hash, the first uplink has a binary representation of 0 and the second uplink has a binary representation of 1.

The IP-Hash is calculated on the source IP address (the VMkernel NFS IP address) and the destination IP address (the NFS array IP address).

In this example the VMknic has the IP address 145.10.44.10.

The first IP address of the NAS is 145.10.44.80 and the second address is 145.10.44.90.

HEX: ESX VMkernel: 145.10.44.10 = 910A2C0A

HEX: NFS address 1: 145.10.44.80 = 910A2C50

HEX: NFS address 2: 145.10.44.90 = 910A2C5A
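If you prefer not to use an online converter, the same conversion can be done with a few lines of Python. This is a minimal sketch using only the standard `ipaddress` module; the helper name `ip_to_hex` is my own.

```python
import ipaddress


def ip_to_hex(address: str) -> str:
    """Return the IPv4 address as an 8-digit uppercase hex string."""
    return f"{int(ipaddress.IPv4Address(address)):08X}"


for label, address in [
    ("ESX VMkernel", "145.10.44.10"),
    ("NFS address 1", "145.10.44.80"),
    ("NFS address 2", "145.10.44.90"),
]:
    print(f"HEX: {label}: {address} = {ip_to_hex(address)}")
```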

Step 2: Calculate the binary representation of the hash

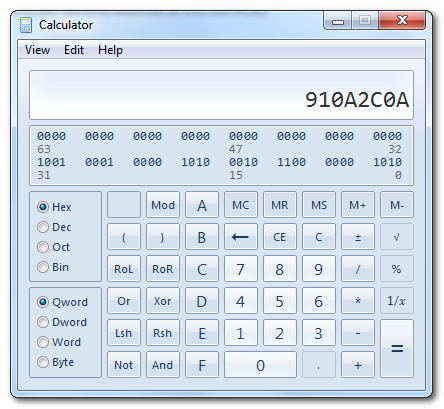

Now let’s calculate the hash that determines which uplink is used. I use Windows for my desktop, so I use calc.exe for this example.

Open calc.exe and select Programmer, select the options Hex and Qword, and paste the hex value of the VMkernel NIC (910A2C0A).

Now press Xor, enter the (first) NFS IP address in hex format (910A2C50) and click the = button. The result is 5A. Press Mod (modulo) and enter the number of uplinks (2) as the value. Click the = button to calculate the modulo.

The result of this calculation is the number 0 (zero). This means that IP-Hash chooses the first uplink, because the hash and the uplink both have a binary representation of 0.

Now let’s calculate the second hash. In short:

HEX value “VMkernel NIC” Xor HEX value “NFS address 2”:

910A2C0A Xor 910A2C5A = 50; 50 MOD 2 = 0

The result of this calculation is also 0 (zero). This means that the VMkernel does not balance the traffic and will send it all across one uplink.

One IP address of the NFS array needs to be changed to ensure that the VMkernel will balance outbound traffic. For this example, NFS address 2 (145.10.44.90) is changed to 145.10.44.81; the hex value of this address is 910A2C51.

Now let’s calculate the hash again.

910A2C0A Xor 910A2C51 = 5B; 5B MOD 2 = 1

The result of this calculation is 1 (one). The VMkernel chooses the second uplink because it has the same binary representation as the hash, thereby balancing outbound NFS traffic across the two uplinks.
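The calc.exe steps above can also be scripted, which is handy when you need to check a whole address plan. The sketch below follows the same method (XOR of the complete 32-bit addresses, then modulo the number of uplinks); the names `ip_hash_uplink` and `covers_all_uplinks` are made up for this illustration and are not part of any VMware tool.

```python
import ipaddress


def ip_hash_uplink(src: str, dst: str, num_uplinks: int) -> int:
    """XOR the two 32-bit addresses and take the modulo over the uplink count."""
    xor = int(ipaddress.IPv4Address(src)) ^ int(ipaddress.IPv4Address(dst))
    return xor % num_uplinks


def covers_all_uplinks(src: str, targets: list[str], num_uplinks: int) -> bool:
    """True if the targets together hash to every uplink index at least once."""
    used = {ip_hash_uplink(src, target, num_uplinks) for target in targets}
    return used == set(range(num_uplinks))


VMKERNEL = "145.10.44.10"

for nas in ("145.10.44.80", "145.10.44.90", "145.10.44.81"):
    print(f"{VMKERNEL} -> {nas}: uplink {ip_hash_uplink(VMKERNEL, nas, 2)}")

# Original address pair versus the adjusted pair
print(covers_all_uplinks(VMKERNEL, ["145.10.44.80", "145.10.44.90"], 2))
print(covers_all_uplinks(VMKERNEL, ["145.10.44.80", "145.10.44.81"], 2))
```

The original pair (.80 and .90) ends up on a single uplink, while the adjusted pair (.80 and .81) uses both.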

Using IP-Hash to load-balance is an excellent choice, but you do need to fulfill certain technical requirements to get it supported by VMware, and plan your IP address scheme accordingly to get the most out of this load-balancing policy.

One last thing: because I knew little about NFS, I turned to my primary source of storage-related VMware articles, Chad Sakac (@sakacc). Together with Vaughn Stewart (@vstewed) of NetApp, he has written an excellent article about the use of NFS with VMware: A “Multivendor Post” to help our mutual NFS customers using VMware. Please read it if you haven’t already. Again, it’s truly excellent!

How funny! I was preparing to write a piece on how VMware used the load balancing algorithms in the vSwitch to pick an uplink, but it looks like you’ve beat me to the punch! Good article, and helps reinforce the information I presented in some of my articles on using multiple NICs with IP-based storage:

http://blog.scottlowe.org/2008/10/08/more-on-vmware-esx-nic-utilization/

http://blog.scottlowe.org/2008/07/16/understanding-nic-utilization-in-vmware-esx/

Keep up the good work, Frank!

Thanks for the compliment Scott,

And thanks for posting the links, both articles are excellent and are a must-read!

You can also refer to the best practices guide released by NetApp for VMware deployments with the NFS protocol.

Hi Craig,

I haven’t had the chance to read the NetApp documentation, could you post a link to the document?

On Cisco switches you can use the “test” command to determine which link a particular src/dst will use. The syntax looks like:

test etherchannel load-balance interface port-channel number {ip | l4port | mac} [source_ip_add | source_mac_add | source_l4_port] [dest_ip_add | dest_mac_add | dest_l4_port]

Complicated solutions to a non-problem?

Long ago, when EtherChannel and LACP were crafted, we had a very different network environment: multiple protocols, ranging from well-behaved protocols like IP to quick-and-dirty stuff that made all sorts of assumptions about the network. As a consequence, aggregating Ethernet links needed to ensure that the sequence of network frames was maintained.

Parallel links can and will change the order of packets when a long packet is sent over link A and a subsequent short packet is sent over link B. The short packet arrives first and now leads the long packet.
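A quick back-of-the-envelope calculation shows the effect. The numbers are assumptions chosen for illustration only: two 1 Gbit/s links, a 1500-byte frame on link A, a 64-byte frame on link B sent one microsecond later, and only serialization delay is counted (no queuing or propagation delay).

```python
def arrival_time_us(start_us: float, size_bytes: int, link_mbps: float) -> float:
    """Time at which the last bit of a frame has been put on the link, in microseconds."""
    # bits divided by Mbit/s gives microseconds
    return start_us + size_bytes * 8 / link_mbps


LINK_MBPS = 1000.0  # assumed 1 Gbit/s per link

long_frame = arrival_time_us(start_us=0.0, size_bytes=1500, link_mbps=LINK_MBPS)  # link A
short_frame = arrival_time_us(start_us=1.0, size_bytes=64, link_mbps=LINK_MBPS)   # link B

print(f"long frame done at {long_frame:.3f} us, short frame done at {short_frame:.3f} us")
print("reordered:", short_frame < long_frame)
```

Although the short frame is sent later, it is fully on the wire well before the long one, so the receiver sees the two frames in reverse order.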

Today, and especially in a dedicated storage network, we exclusively use IP, a protocol that incorporates fragmentation and reassembly at the IP-layer and has no problem dealing with out-of-order packets. iSCSI is wrapped in TCP, which has the same property: segments may arrive out of order without penalty.

The various algorithms used in load balancing exist solely to ensure per-stream packet sequence, hence the use of source and destination (either MAC or IP) addresses. I doubt that this is a requirement in TCP/IP storage networks. As a consequence, load balancing parallel links can simply be based on shortest-queue-first, which is always optimal and deals nicely with broken links.