The behavior of thin-provisioned VMDKs in a datastore cluster is quite interesting. Storage DRS supports thin-provisioned disks and is aware of both the configured size and the actual data usage of the virtual disk. When determining placement of a virtual machine, Storage DRS verifies the disk usage of the files stored on the datastore. To avoid being caught out by sudden data growth in the existing thin disks, Storage DRS adds buffer space to each thin disk. This buffer zone is determined by the advanced setting “PercentIdleMBinSpaceDemand”. This setting controls how conservative Storage DRS is with determining the available space on the datastore for load balancing and initial placement operations of virtual machines.

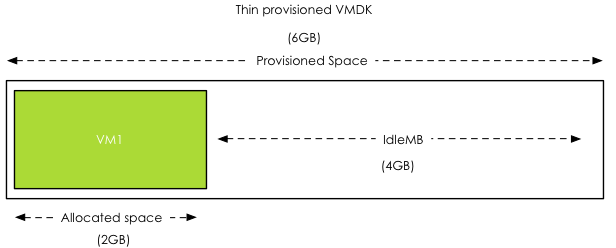

IdleMB

The main element of the advanced option “PercentIdleMBinSpaceDemand” is the amount of IdleMB a thin-provisioned VMDK contains. When a thin disk is configured, the user determines the maximum size of the disk. This configured size is referred to as “provisioned space”. When a thin disk is in use, it contains a certain amount of data. The size of the actual data inside the thin disk is referred to as “allocated space”. The space between the allocated space and the provisioned space is identified as the IdleMB. Let’s use this in an example. VM1 has a single VMDK on Datastore1. The total configured size of the VMDK is 6GB. VM1 has written 2GB to the VMDK, which means the amount of IdleMB is 4GB.
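The IdleMB definition above can be written as a one-line calculation. This is only a sketch: the function name `idle_space` is made up for illustration, and the values are kept in GB to match the example rather than in MB.

```python
def idle_space(provisioned_gb, allocated_gb):
    """Return the idle space of a thin disk: provisioned minus allocated."""
    if allocated_gb > provisioned_gb:
        raise ValueError("allocated space cannot exceed provisioned space")
    return provisioned_gb - allocated_gb


# VM1 from the example: 6GB provisioned, 2GB written.
print(idle_space(6, 2))  # 4
```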

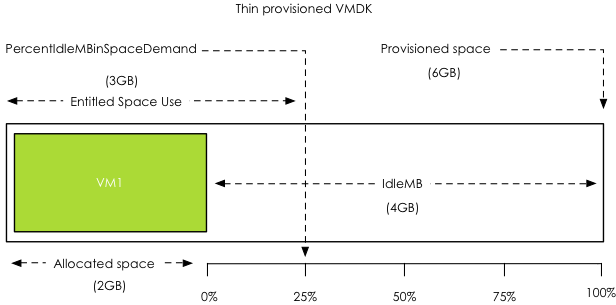

PercentIdleMBinSpaceDemand

The PercentIdleMBinSpaceDemand setting defines the percentage of IdleMB that is added to the allocated space of a VMDK during the free space calculation of the datastore. The default value is 25%. In the previous example, PercentIdleMBinSpaceDemand is applied to the 4GB of idle space: 25% of 4GB = 1GB.

Entitled Space Use

Storage DRS adds the result of the PercentIdleMBinSpaceDemand calculation to the allocated space to determine the “entitled space use”. In this example the entitled space use is 2GB + 1GB = 3GB.
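Put together, the entitled space use is the allocated space plus a percentage of the idle space. The sketch below mirrors that arithmetic; the function and parameter names are illustrative assumptions and not part of any vSphere API.

```python
def entitled_space_use(provisioned_gb, allocated_gb, percent_idle=25):
    """Allocated space plus percent_idle percent of the idle space (all in GB)."""
    if not 0 <= percent_idle <= 100:
        raise ValueError("percent_idle must be between 0 and 100")
    idle_gb = provisioned_gb - allocated_gb
    return allocated_gb + idle_gb * percent_idle / 100


# VM1: 2GB allocated + 25% of 4GB idle.
print(entitled_space_use(6, 2))  # 3.0
```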

Calculation during placement

The size of Datastore1 is 10GB. VM1’s entitled space use is 3GB, which means that Storage DRS determines that Datastore1 has 7GB of available free space.
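The same calculation extends to a datastore holding several thin disks: subtract the sum of the entitled space use from the capacity. The sketch below assumes a simple `ThinDisk` record and a `datastore_free_space` helper, both hypothetical names used only to illustrate the arithmetic described above.

```python
from dataclasses import dataclass


@dataclass
class ThinDisk:
    name: str
    provisioned_gb: float
    allocated_gb: float


def datastore_free_space(capacity_gb, disks, percent_idle=25):
    """Capacity minus the summed entitled space use of every disk (in GB)."""
    entitled = sum(
        d.allocated_gb + (d.provisioned_gb - d.allocated_gb) * percent_idle / 100
        for d in disks
    )
    return capacity_gb - entitled


# Datastore1 (10GB) holding VM1's single 6GB thin disk with 2GB written.
print(datastore_free_space(10, [ThinDisk("VM1.vmdk", 6, 2)]))  # 7.0
```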

Changing the PercentIdleMBinSpaceDemand default setting

Any value from 0% to 100% is valid. This setting is applied at the datastore cluster level. There can be multiple reasons to change the default percentage. By using 0%, Storage DRS will only use the allocated space, allowing high consolidation. This might be useful in environments with static or extremely slow data growth.

There are multiple use cases for setting the percentage to 100%, effectively disabling over-commitment on VMDK level. Setting the value to 100% forces Storage DRS to use the full size of the VMDK in its space usage calculations. Many customers are comfortable managing over-commitment of capacity only at storage array layer. This change allows the customer to use thin disks on thin provisioned datastores.
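To see how the two extremes compare with the default, the following sketch evaluates the article's example (a 6GB thin disk with 2GB written on a 10GB datastore) at 0%, 25% and 100%. The helper name `free_space_by_setting` is an assumption made for illustration.

```python
def free_space_by_setting(capacity_gb, provisioned_gb, allocated_gb,
                          settings=(0, 25, 100)):
    """Map each PercentIdleMBinSpaceDemand value to the resulting free space in GB."""
    idle_gb = provisioned_gb - allocated_gb
    return {
        pct: capacity_gb - (allocated_gb + idle_gb * pct / 100)
        for pct in settings
    }


for pct, free_gb in free_space_by_setting(10, 6, 2).items():
    print(f"{pct:>3}% -> {free_gb} GB free")
```

At 0% only the 2GB of written data counts against the datastore, while at 100% the full 6GB of provisioned space does, which is what removes over-commitment at the VMDK level from the calculation.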

Use case 1: NFS datastores

One example is the use of NFS datastores. The default behavior of vSphere is to create thin disks when the virtual machine is placed on an NFS datastore. This forces the customer to accept a risk of over-commitment on VMDK level. By setting it to 100%, Storage DRS will use the provisioned space during free space calculations instead of the allocated space.

Use case 2: Safeguard to protect against unintentional use of thin disks

This setting can also be used as a safeguard against unintentional use of thin disks. Many customers have multiple teams managing the virtual infrastructure: one team manages the architecture, while another team is responsible for provisioning the virtual machines. The architecture team does not want over-commitment on VMDK level, but depends on the provisioning team to follow guidelines and only use thick disks. By setting “PercentIdleMBinSpaceDemand” to 100%, the architecture team is assured that Storage DRS calculates datastore free space based on provisioned space, simulating “only-thick disks” behavior.

Use case 3: Reducing Storage vMotion overhead while avoiding over-commitment

By setting the percentage to 100%, no over-commitment will be allowed on the datastore, yet the efficiency advantage of using thin disks remains. Storage DRS uses the allocated space to calculate the risk and the cost of a migration recommendation when a datastore exceeds its I/O or space utilization threshold. This allows Storage DRS to select the VMDK that generates the lowest amount of overhead. vSphere only needs to move the used data blocks instead of all the zeroed-out blocks, reducing CPU cycles. Overhead on the storage network is reduced as well, as only used blocks need to traverse the storage network.
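The point about migration overhead can be illustrated with a toy comparison. This is not the Storage DRS cost/benefit model, which weighs more factors than size alone; it only shows that the amount of data to copy follows the allocated space, not the provisioned space. The disk names and sizes are hypothetical.

```python
# Hypothetical candidates: (name, provisioned GB, allocated GB).
candidates = [
    ("vm-a.vmdk", 40, 35),
    ("vm-b.vmdk", 100, 12),
    ("vm-c.vmdk", 20, 18),
]


def cheapest_to_move(disks):
    """Pick the disk with the least allocated data, i.e. the least data to copy."""
    return min(disks, key=lambda disk: disk[2])


name, provisioned_gb, allocated_gb = cheapest_to_move(candidates)
print(f"{name}: copy {allocated_gb} GB instead of {provisioned_gb} GB")
```

Here the largest disk by provisioned size is the cheapest one to move, because only its 12GB of written blocks would have to cross the storage network.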

Get notifications of these blog posts and more DRS and Storage DRS information by following me on Twitter: @frankdenneman

Avoiding VMDK level over-commitment while using Thin disks and Storage DRS

Great article Frank! One quick question.

“There are multiple use cases for setting the percentage to 100%, effectively disabling over-commitment on VMDK level. Setting the value to 100% forces Storage DRS to use the full size of the VMDK in its space usage calculations. ”

When this happens, I am assuming this will only help in calculating where to place the file. The vmdk will still remain thin and not use the full allocated space. Right?

Will this also potentially stop manual placement of VMs that would force a datastore into an over-committed state (with the 100% setting)?

Hi Bilal,

When you set the value to 100%, Storage DRS uses the provisioned space when determining the free space of the datastore. Using the example in the article of the VM with 6GB of provisioned space, Datastore1 (10GB) will report 10GB – 6GB = 4GB of free space. When load balancing occurs, only VMDKs smaller than 4GB can be placed on the datastore.

Preferably, Storage DRS will try to place only files no larger than 2GB, staying within the 80% space utilization threshold (6GB + 2GB = 8GB = 80% of 10GB).

The VMDK will remain thin and Storage DRS will use the allocated space for its cost/benefit analysis.

Yes, it can stop placement by load balancing operations, as the datastore cannot be overcommitted anymore. It is not a soft rule. Please remember that the initial placement engine uses provisioned space to place a new VM in the datastore cluster.
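The arithmetic in this reply can be sketched as follows. The 80% space utilization threshold is the figure quoted in the reply; the function name and parameters are illustrative assumptions.

```python
def headroom_below_threshold(capacity_gb, entitled_gb, threshold_percent=80):
    """Space that can still be added before the utilization threshold is reached."""
    return capacity_gb * threshold_percent / 100 - entitled_gb


# With the setting at 100%, VM1 counts for its full 6GB of provisioned space.
print(headroom_below_threshold(10, 6))  # 2.0
print(10 - 6)                           # 4 (total free space reported)
```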

Thanks Frank for the detailed response. Much appreciated.

Hi Frank, does this also work on vSphere 5.0 ?

I’ve tested this in my lab on some datastore clusters by setting “PercentIdleMBinSpaceDemand” to 100% in SDRS Automation –> Advanced Options but the setting seems to have no effect…

I tried creating a VM with thin disk of 200GB on a datastore cluster where none of the datastores had 200GB free space, yet it allows me to create the VM anyway…

Peter,

This setting is to calculate the free space of the datastore. It has no effect on placement of new virtual machines.

When you provision a VM into a datastore cluster, Storage DRS will always use the provisioned space, regardless of disk type (thick or thin). When you provision that VM to the datastore, please check the recommendations, I think it defragments the datastore cluster for you. For more information about datastore cluster defragmentation, please read the following article: http://frankdenneman.nl/2012/01/storage-drs-initial-placement-and-datastore-cluster-defragmentation/.

I’m curious what you mean with free space. Free space as in total free space of the datastore, or free space below the space utilization threshold?

If there is not enough space to be found after the defrag, Storage DRS will deny the initial placement of the virtual machine.

Hi Frank, I’m also interested to know if this setting is usable starting from 5.0 or 5.1? Thanks.

Hi, Frank, I needed to let this sink in a bit 😉

I meant “Free space as in total free space of the datastore”.

You are right that for initial placement if no datastore has enough space it will defragment the cluster as long as the total amount of free space in the datastore cluster exceeds the requested space for the VM.

I’m a bit confused though as you did mention in the end of your first paragraph of this article, this parameter was also used for initial placement:

“This setting controls how conservative Storage DRS is with determining the available space on the datastore for load balancing and initial placement operations of virtual machines.”

Anyway, I tested the setting on the latest build of vSphere 5.0 and this setting had no effect at all for initial placements…

So either this is indeed not used at all for initial placements (neither in vSphere 5 nor 5.1) or it simply is not working in vSphere 5 alone.

As I am waiting for my SRM SRA’s to become available for vSphere 5.1, I haven’t had the opportunity to upgrade my lab, so have not yet been able to do these tests on 5.1.

Hello Frank,

I have the same question as Peter. I’m running vSphere 5.0 and configured a datastore cluster and enabled SDRS. I want to use the solution offered in this article for use-case 2. I’ve put PercentIdleMBinSpaceDemand in the Advanced Options of SDRS Automation and set the value to 100. However SDRS doesn’t offer any recommendations.

I have a datastore cluster with 4 datastores, each 475GB. One of these has a provisioned space of 690GB and a free space of 290GB.

Using the setting you suggested doesn’t make SDRS move VMs to different datastores that are not overprovisioned.

Is this feature not supported in vSphere 5.0.0 or have I done something wrong in the configuration?

Thanks Frank, this 8-year-old article explained why our sDRS was doing migrations which did not make sense: moving VMs from a datastore with more free space to one with less. Now testing PercentIdleMBinSpaceDemand set to 0.

Hello Frank,

Thank you for this article. I found it because I was searching for a way to avoid overallocation on a datastore cluster when using thin-provisioned VMDKs.

After some hours of headaches and 2 GSS cases, it seems this parameter doesn’t work at all, neither for initial placement nor for space balancing across datastores, at least for vCenter 5.5 through 6.7 latest (U3h at the time of writing).

I don’t know if you have access to GSS case, the SR number was 20129757906

I know it’s an old article, but I never found an article stating that it doesn’t work at all, so I’m posting in case other people try to implement it.