Yesterday I had an interesting conversation with a colleague about affinity rules and whether DRS reviews the complete state of the cluster and all affinity rules when placing a virtual machine. The following scenario was used to illustrate the question:

The following affinity rules are defined:

1. VM1 and VM2 must stay on the same host

2. VM3 and VM4 must stay on the same host

3. VM1 and VM3 must NOT stay on the same host

If VM1 and VM3 are deployed first, everything will be fine, because VM1 and VM3 will be placed on two different hosts, and VM2 and VM4 will be placed accordingly.

However, if VM1 is deployed first, and then VM4, there isn’t an explicit rule that says these two need to be on separate hosts; this is only implied by the dependencies between the three rules above. Would DRS be intelligent enough to recognize this? Or will it place VM1 and VM4 on the same host, so that by the time VM3 needs to be placed there is a clear deadlock?

The situation where it’s not logical to place VM4 and VM1 on the same host can be deemed an implicit anti-affinity rule. It’s not a real rule, but if all virtual machines are operational, VM4 should not be on the same host as VM1. DRS doesn’t react to these implicit rules. Here’s why:

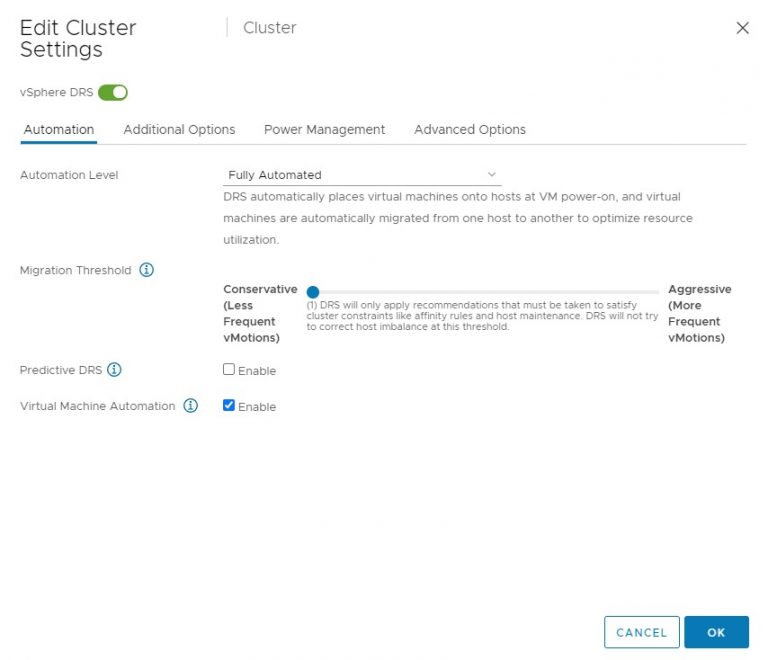

When provisioning a virtual machine, DRS first sorts the available hosts by utilization. Then it goes through a series of checks, such as the compatibility between the virtual machine and the host. Does the host have a connection to the datastore? Is the vNetwork available on the host? Finally, it checks whether placing the virtual machine violates any constraints. A constraint could be a VM-VM affinity/anti-affinity rule or a VM-Host affinity/anti-affinity rule.

In the scenario where VM1 is running, DRS is free to place VM4 on the same host, as this does not violate any affinity rule. When DRS then wants to place VM3, it determines that placing VM3 on the host where VM4 is running violates the anti-affinity rule between VM1 and VM3. Therefore it will migrate VM4 the moment VM3 is deployed.
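To make the walk-through concrete, here is a minimal Python sketch of the greedy placement described above. It is a toy model, not the actual DRS algorithm: the `Host` class, the `place` and `violates` functions, the host names `esx01` and `esx02`, the filler virtual machines `VM8` and `VM9`, and the use of the VM count as "utilization" are all assumptions for illustration. Compatibility checks and the corrective migration of VM4 are left out; a `None` result simply marks the point where a migration would be needed.

```python
from dataclasses import dataclass, field

# The three rules from the scenario.
AFFINITY = [("VM1", "VM2"), ("VM3", "VM4")]   # must share a host
ANTI_AFFINITY = [("VM1", "VM3")]              # must not share a host


@dataclass
class Host:
    name: str
    vms: set = field(default_factory=set)

    @property
    def utilization(self):
        # Toy metric (assumption): number of running VMs stands in for load.
        return len(self.vms)


def partners(vm, rules):
    """Return the VMs that share a rule with `vm`."""
    out = set()
    for a, b in rules:
        if a == vm:
            out.add(b)
        elif b == vm:
            out.add(a)
    return out


def violates(vm, host, hosts):
    """True if placing `vm` on `host` breaks a rule with a VM running right now."""
    # Anti-affinity: a partner already runs on this host.
    if partners(vm, ANTI_AFFINITY) & host.vms:
        return True
    # Affinity: a partner already runs on a different host.
    for other in hosts:
        if other is not host and partners(vm, AFFINITY) & other.vms:
            return True
    return False


def place(vm, hosts):
    """Pick the least-utilized host that violates no rule in the current state."""
    for host in sorted(hosts, key=lambda h: h.utilization):
        if not violates(vm, host, hosts):
            host.vms.add(vm)
            return host.name
    return None  # no valid host without first moving another VM


# esx02 already carries two unrelated VMs, so esx01 is the less utilized host.
hosts = [Host("esx01"), Host("esx02", {"VM8", "VM9"})]
print(place("VM1", hosts))  # esx01
print(place("VM4", hosts))  # esx01: no rule links VM4 to a running VM yet
print(place("VM3", hosts))  # None: VM3 must join VM4 but must avoid VM1
```

Note that the sketch only ever looks at virtual machines that are running at the moment of placement, which is exactly why VM4 lands next to VM1.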

During placement DRS only checks the current affinity rules and determines if placement violates any of them. If not, then the host with the most connections and the lowest utilization is selected. DRS cannot be aware of any future power-on operations; there is no vCrystal bowl. The next power-on operation might be 1 minute away or might be 4 days away. By allowing DRS to select the best possible placement, the virtual machine is provided an operating environment that has the most resources available at that time. If DRS took all the possible placement configurations into account, it could either end up in gridlock or place the virtual machine on a more heavily utilized host for a long time in order to prevent a vMotion operation of another virtual machine to satisfy the affinity rule. All that time the virtual machine could be performing better if it were placed on a less utilized host. In the long run, dealing with constraints the moment they occur is far more economical.

Similar behavior occurs when creating a rule. DRS will not display a warning when you create a collection of rules that conflict once all virtual machines are turned on. As DRS is unaware of the intentions of the user, it cannot throw a warning. Maybe the virtual machines will not be powered on in the current cluster state. Or maybe this rule set is in preparation for new hosts that will be added to the cluster shortly. Also understand that if a host is in maintenance mode, this host is considered to be external to the cluster. It does not count as a valid destination and its resources are not used in the equation. However, we as users still see the host as part of the cluster. If those rule sets are created while a host is in maintenance mode, then according to the previous logic DRS must throw an error, while the user assumes the rules are correct as the cluster provides enough placement options. As clusters can grow and shrink dynamically, DRS deals with violations only when the rules become active, and that is during power-on operations (DRS placement).
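Since DRS stays silent about such rule sets, the implicit anti-affinity pairs from the scenario have to be worked out by the administrator. The Python sketch below shows one way to do that by hand. It is not something DRS does and it does not use any VMware API; the function names `affinity_groups` and `implied_separations` are made up for this example. The idea: virtual machines linked by keep-together rules form a group, and an anti-affinity rule between two members of different groups separates every member of one group from every member of the other.

```python
from itertools import product


def affinity_groups(vms, affinity):
    """Merge VMs linked by keep-together rules into groups (simple union-find)."""
    parent = {vm: vm for vm in vms}

    def find(vm):
        # Walk up to the group representative, shortening the path as we go.
        while parent[vm] != vm:
            parent[vm] = parent[parent[vm]]
            vm = parent[vm]
        return vm

    for a, b in affinity:
        parent[find(a)] = find(b)

    groups = {}
    for vm in vms:
        groups.setdefault(find(vm), set()).add(vm)
    return list(groups.values())


def implied_separations(vms, affinity, anti_affinity):
    """Pairs that must sit on different hosts but have no explicit rule saying so."""
    group_of = {vm: g for g in affinity_groups(vms, affinity) for vm in g}
    implied = set()
    for a, b in anti_affinity:
        # Every member of a's group is separated from every member of b's group.
        for x, y in product(group_of[a], group_of[b]):
            if x != y:
                implied.add(frozenset((x, y)))
    explicit = {frozenset(pair) for pair in anti_affinity}
    return implied - explicit


vms = ["VM1", "VM2", "VM3", "VM4"]
affinity = [("VM1", "VM2"), ("VM3", "VM4")]
anti_affinity = [("VM1", "VM3")]

for pair in sorted(sorted(p) for p in implied_separations(vms, affinity, anti_affinity)):
    print(pair)
# ['VM1', 'VM4']
# ['VM2', 'VM3']
# ['VM2', 'VM4']
```

For the three rules in the scenario this lists VM1/VM4 as one of the pairs that no rule mentions explicitly, which is the pair the original question was about.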