Today I was discussing with Duncan some great books to read. Disconnecting fully from work is difficult for me, so typically, the books I read are tech-related. I have read some brilliant books that I want to share with you, but mostly I want to hear your recommendations for other excellent tech novels.

Cyberwarfare

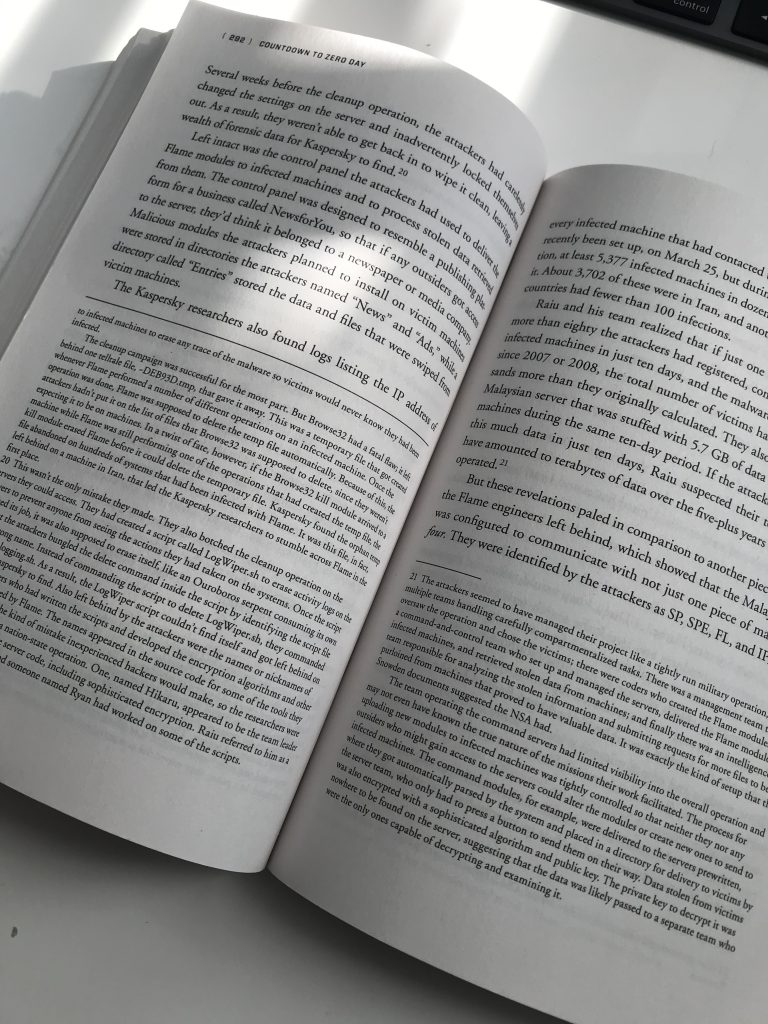

Cyberwarfare intrigues me, so any book covering Operation Olympic Games or Stuxnet interests me. One of the best books on this topic is “Countdown to Zero Day” by Kim Zetter. The book is filled with footnotes and references to corresponding research.

David Sanger, the NYT reporter who broke the Olympic Games story, wrote another brilliant piece on the future of cyberwarfare, “The Perfect Weapon: War, Sabotage, and Fear in the Cyber Age”. This book is the basis of an upcoming HBO documentary.

“No Place to Hide” tells the story of the encounters that Glenn Greenwald (a journalist at The Guardian) had with Snowden right after Snowden walked away with highly classified material. Greenwald also explores some of the NSA technology uncovered by the Snowden leak.

Tech History

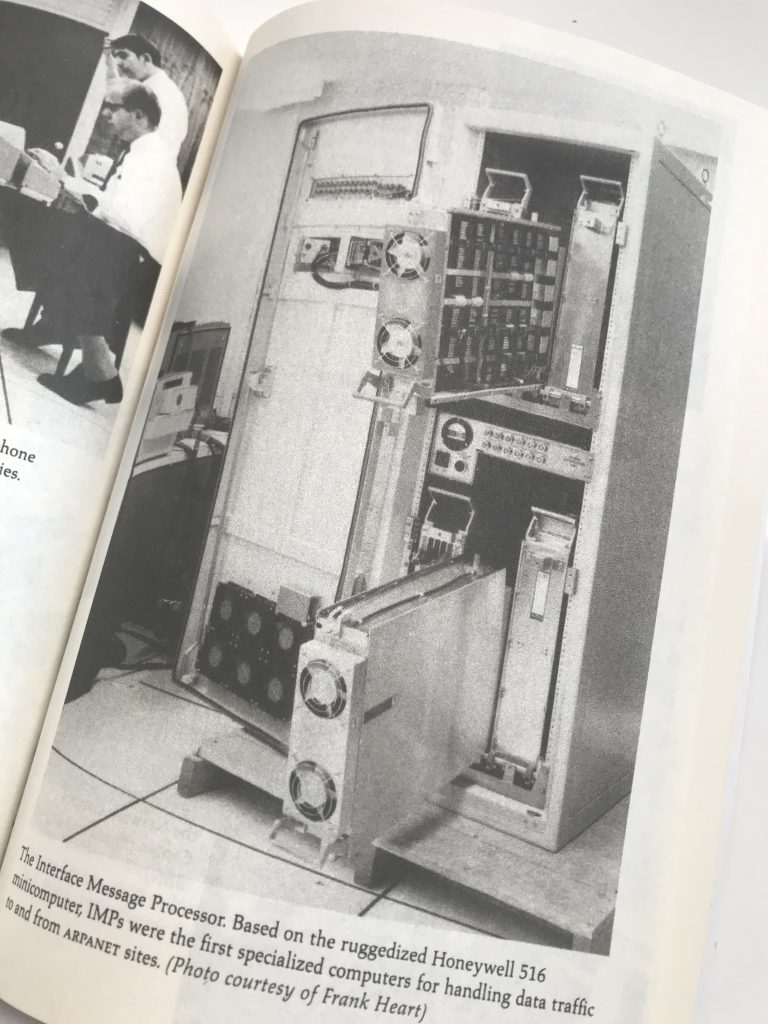

If you are interested in the inception of the internet, then “Where Wizards Stay Up Late” should be on your bookshelf or a part of your digital library. It describes how the computer moved from being a giant calculator to a communication device.

“Command and Control” explores the systems used to manage the American nuclear arsenal. It tells stories about near misses. If you think you’re behind on patching and updating your systems, do yourself a favor and read this book 😉

Technothriller

Old-timers know Mark Russinovich from the advanced system utility toolset called Sysinternals; the new generation knows him as CTO of Microsoft Azure. It turns out that Mark is a gifted author as well. He published three tech novels that are highly entertaining to read: “Zero Day”, “Rogue Code” and “Trojan Horse”.

“Little Brother” by Cory Doctorow (thanks to Mark Brookfield for recommending this) tells an entertaining story of how a young hacker takes on the Department of Homeland Security.

Hacking

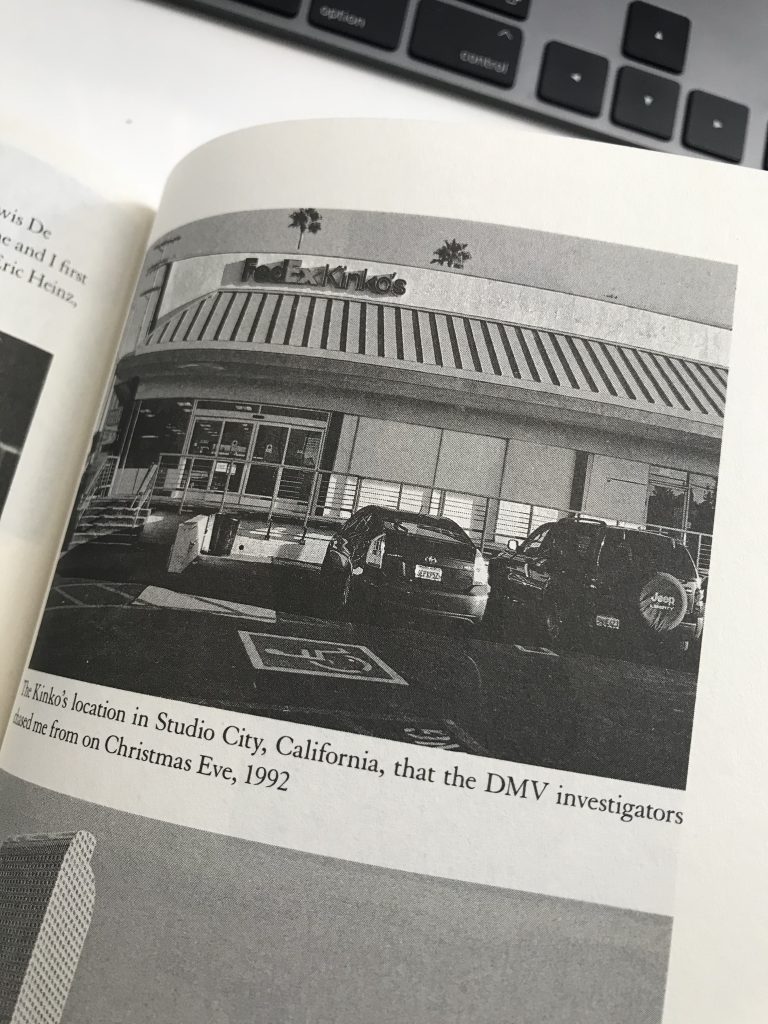

“Ghost in the Wires” reads like a technothriller, but tells the story of the hunt for Kevin Mitnick. A must-have.

“Kingpin: How One Hacker Took Over the Billion-Dollar Cybercrime Underground” by Kevin Poulsen, who is often referred to in Ghost in the Wires, tells the story of Max Butler, one of the most notorious hackers focusing on credit card fraud. An exciting and quick read.

What’s in your top 5?