Resource management within virtual infrastructures relies on distributed algorithms, and as a result I have become more and more interested in the application of computer algorithms in other areas. Today I found an English version of the multiple-award-winning Dutch documentary, which I can finally share with my non-Dutch-speaking friends. The documentary reviews the flash crash on the U.S. stock market on May 6th, 2010. In particular it explores the application of black box trading (algo-trading) and how algorithms shaped not only the architecture of the trading institutions but also city architecture and human terraforming.

Money & Speed: Inside the Black Box (Marije Meerman, VPRO)

Sit back and be amazed.

Once you have watched it, view the TED presentation by Kevin Slavin: How algorithms shape our world. Kevin Slavin zooms in on some of the algorithms applied in our lives and how they affect us and our surroundings.

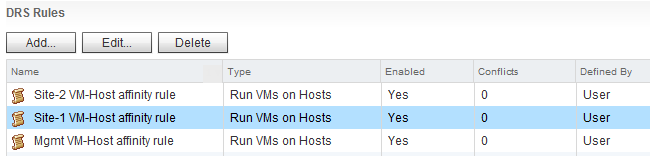

Overlapping DRS VM-Host affinity rule in a vSphere Stretched Cluster

A question on the VMware community forum triggered me to validate how DRS applies VM to Host group rules. The scenario describes a stretched HA cluster with overlapping DRS group rules, which allow particular VMs to run on the hosts in a single site and on a subset of hosts across both sites. How does DRS handle overlapping groups?

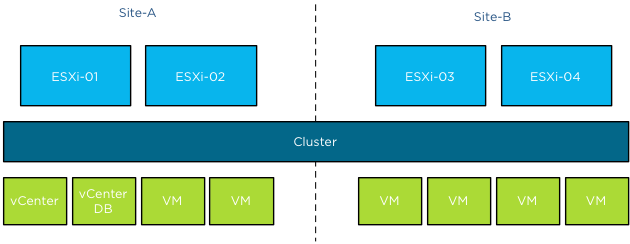

The architecture

In this scenario the stretched cluster contains four hosts; ESXi-01 and ESXi-02 are located in Site A, ESXi-03 and ESXi-04 are located in Site B.

The hosts in the cluster run a collection of virtual machines; two management virtual machines, vCenter and vCenterDB, are part of this collection. The storage housing the virtual machines is available to all hosts. Storage architecture is not the focus of this article; for more information about storage configurations and stretched vSphere clusters, please read the white paper: VMware vSphere Metro Storage Cluster Case Study

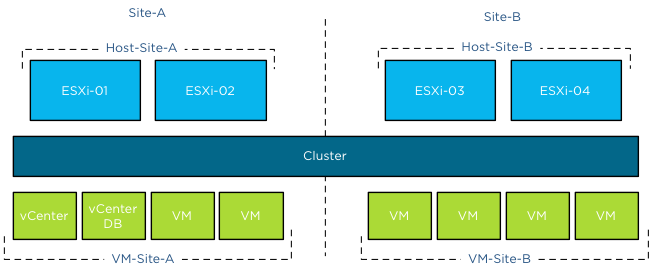

Site DRS VM-Host groups

ESXi-01 and ESXi-02 are grouped in Host DRS group Host-Site-A, ESXi-03 and ESXi-04 are grouped in Host DRS group Host-Site-B. All virtual machines running in Site-A are grouped in a VM DRS group VM-Site-A, all virtual machines running in Site-B are grouped in a VM DRS group VM-Site-B. All (Site) rules are configured as preferential rules (Should run on).

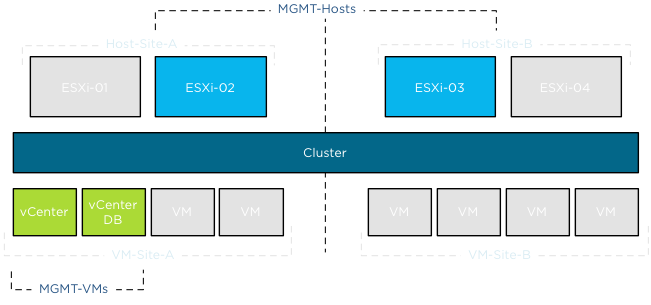

Management DRS VM-Host group

In the scenario described on the community forum, the virtual machines vCenter and vCenterDB are placed in an additional VM DRS group; MGMT-VMs.

This group should run on a select set of hosts from both sites. To simulate similar behavior, the Host DRS group configured in my environment contains ESXi-02 of Site-A and ESXi-03 of Site-B. The group is named MGMT-Hosts.

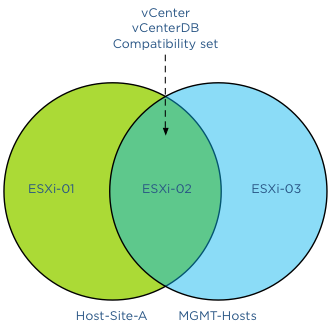

Overlapping rule-set

Because the management virtual machines are also part of the VM-Site-A VM group, an overlap of compatible hosts exists. Please note that DRS allows virtual machines to be a member of multiple VM groups. When reviewing the active affinity rules in a DRS cluster, DRS extracts a subset of compatible hosts for each virtual machine and uses this subset for placement and load balancing decisions. A Venn diagram shows the compatible hosts for the VMs vCenter and vCenterDB, and specifically the host(s) listed in the compatibility set.

This means that under normal conditions DRS will choose to run the virtual machines on ESXi-02, as it satisfies both rules. By normal conditions I mean that there is no excessive load on any of the hosts and that all hosts are configured identically, without any hardware failures. Back to the scenario: what if there is a host failure or ESXi-02 is placed into maintenance mode?
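The overlap can be illustrated with plain set intersection. This is only a minimal sketch of the reasoning above, not how DRS is implemented; the dictionaries and the function name `hosts_satisfying_all_rules` are hypothetical, and the VM groups list only the two management VMs for brevity.

```python
# Hypothetical model of the lab's DRS groups; names mirror the article.
host_groups = {
    "Host-Site-A": {"ESXi-01", "ESXi-02"},
    "Host-Site-B": {"ESXi-03", "ESXi-04"},
    "MGMT-Hosts": {"ESXi-02", "ESXi-03"},
}
# Only the two management VMs are listed here (assumption for brevity).
vm_groups = {
    "VM-Site-A": {"vCenter", "vCenterDB"},
    "MGMT-VMs": {"vCenter", "vCenterDB"},
}
# Each "should run on" rule links one VM group to one host group.
rules = [("VM-Site-A", "Host-Site-A"), ("MGMT-VMs", "MGMT-Hosts")]


def hosts_satisfying_all_rules(vm):
    """Intersect the host groups of every rule that applies to the VM."""
    applicable = [host_groups[hg] for vg, hg in rules if vm in vm_groups[vg]]
    return set.intersection(*applicable) if applicable else set()


print(hosts_satisfying_all_rules("vCenter"))  # {'ESXi-02'}
```

ESXi-02 is the only host that appears in both Host-Site-A and MGMT-Hosts, which is why it is the preferred placement while it is available.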

Maintenance mode

Now what happens if ESXi-02 is placed into maintenance mode? As previously mentioned, DRS determines the set of compatible hosts. As ESXi-02 is placed into maintenance mode, DRS marks this host as a source host for migration. This way DRS knows which virtual machines to select for migration and excludes ESXi-02 as a valid destination for migrations. DRS must select one of the other hosts listed in the Host DRS groups. As ESXi-02 is not a valid destination, DRS needs to select either ESXi-01 or ESXi-03. Which host will it select? This depends on the creation date of the DRS rules; in other words, the newer DRS VM-Host rule is selected first. This is important to understand when applying overlapping VM-Host affinity rules in your environment. The last rule you create is applied first, overruling all other existing rules. In my lab, I created the MGMT rule last, so it has the youngest timestamp.

There is no option to show the timestamp in the user interface of either the web client or the vSphere client. I have provided this feedback to the engineering team. Hopefully they can include it in a future release.

I placed ESXi-02 into maintenance mode and DRS migrated the virtual machines to ESXi-03, as regulated by the MGMT VM-Host affinity rule. After placing ESXi-03 into maintenance mode as well, the virtual machines were moved to ESXi-01, as there were no compatible hosts available to satisfy the MGMT affinity rule. As ESXi-01 is listed in the Host-Site-A group of the Site-A affinity rule, DRS had no choice other than moving them to ESXi-01. After resetting the lab and destroying all the rules, I created the same set of host groups, VM groups and affinity rules, but this time I created the Site-A affinity rule last. As a result, DRS moved the virtual machines to host ESXi-01 after ESXi-02 was placed into maintenance mode, as DRS respected the Site-A affinity rule.
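The lab observations can be captured in a small model. This is a sketch of the behavior observed in the lab, not the actual DRS algorithm: the function `pick_host`, the integer creation order standing in for the rule timestamp, and the alphabetical tie-break between equally valid hosts are all assumptions made for illustration.

```python
def pick_host(rules, unavailable):
    """Return (rule_name, host) for a VM covered by overlapping rules.

    rules: list of (creation_order, rule_name, host_set); a higher
    creation_order means the rule was created later (assumption).
    unavailable: hosts in maintenance mode or failed.
    """
    if not rules:
        return None, None
    usable = [(order, name, hosts - unavailable) for order, name, hosts in rules]
    # Prefer a host that satisfies every rule, if one is still available.
    common = set.intersection(*(hosts for _, _, hosts in usable))
    if common:
        return "all rules", min(common)
    # Otherwise walk the rules from newest to oldest.
    for _, name, hosts in sorted(usable, key=lambda r: r[0], reverse=True):
        if hosts:
            return name, min(hosts)  # tie-break is arbitrary in this sketch
    return None, None


site_a = {"ESXi-01", "ESXi-02"}
mgmt = {"ESXi-02", "ESXi-03"}

mgmt_created_last = [(1, "Site-A", site_a), (2, "MGMT", mgmt)]
print(pick_host(mgmt_created_last, {"ESXi-02"}))             # ('MGMT', 'ESXi-03')
print(pick_host(mgmt_created_last, {"ESXi-02", "ESXi-03"}))  # ('Site-A', 'ESXi-01')

site_a_created_last = [(2, "Site-A", site_a), (1, "MGMT", mgmt)]
print(pick_host(site_a_created_last, {"ESXi-02"}))           # ('Site-A', 'ESXi-01')
```

Swapping the creation order of the two rules is the only difference between the two rule lists, and it is enough to change the destination from ESXi-03 to ESXi-01.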

Alarms

As DRS supports non-contradicting overlapping affinity rules, no alarm was generated. Even during the scenario where both ESXi-02 and ESXi-03 were placed into maintenance mode, no alarm was triggered. I expected to see an alarm indicating that an affinity rule was violated; however, after digging through some code and contacting engineering, this appears to be behavior by design. In the current release, the alarm is only triggered when mandatory rules (Must run on) are violated.

HA behavior

All rules are configured as preferential rules (Should run on), and HA is not aware of DRS constructs. When a mandatory rule (Must run on) is created, the hosts listed in the rule are registered in the compatibility list of the virtual machine itself. Only those hosts registered in the compatibility list are viable destinations. During startup, HA checks the compatibility list and attempts to start the virtual machine on any of the hosts listed. As the virtual machines are part of a preferential affinity rule, all hosts are listed in the compatibility list and therefore HA could place them on a host outside the DRS Host group.
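The difference between the two rule types can be sketched as follows. This is a simplified illustration of the paragraph above and not HA's real placement logic; `ALL_HOSTS` and `ha_restart_candidates` are hypothetical names.

```python
ALL_HOSTS = {"ESXi-01", "ESXi-02", "ESXi-03", "ESXi-04"}


def ha_restart_candidates(rule_hosts, mandatory, failed=frozenset()):
    """Hosts HA may consider when restarting a VM (simplified model).

    A mandatory (Must run on) rule narrows the VM's compatibility list to
    the hosts in the rule; a preferential (Should run on) rule leaves all
    cluster hosts in the list.
    """
    compatibility_list = set(rule_hosts) if mandatory else set(ALL_HOSTS)
    return compatibility_list - set(failed)


mgmt_hosts = {"ESXi-02", "ESXi-03"}
# Preferential rule: HA may restart the VM on any surviving host.
print(sorted(ha_restart_candidates(mgmt_hosts, mandatory=False, failed={"ESXi-02"})))
# Mandatory rule: only the surviving hosts of the rule qualify.
print(sorted(ha_restart_candidates(mgmt_hosts, mandatory=True, failed={"ESXi-02"})))
```

With the preferential rule the first call returns ESXi-01, ESXi-03 and ESXi-04, while the mandatory variant leaves only ESXi-03.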

Conclusion

If you want certain virtual machines to gravitate to specific hosts or a specific site, please take into account the way DRS sequences the active affinity rules: the most recently created VM-Host rule is applied first.

vSphere 5.1 Clustering deepdive Cyber Monday deal

We have long been fascinated by the whole Black Friday and Cyber Monday craze in the USA. Unfortunately we do not celebrate Thanksgiving in the Netherlands and none of the shops participate in anything similar to Black Friday.

Similar to last year, we thought it would be a great idea to participate in some form, and what better way than to offer our vSphere 5.1 Clustering Technical Deepdive book for a price you cannot resist? We just changed the price of the vSphere 5.1 Clustering Technical Deepdive to $17.95. Amazon Deutschland is offering the book for 16.00 EURO, while Amazon UK is selling the book for 11.01 Pounds sterling.

The book has some amazing reviews. Here is one we would like to share with you:

The book contains information critical to VMware administrators. Clustering is a critical technology, and the book covers the underlying concepts as well as the practical issues surrounding implementation. The information contained within will be important far past vSphere 5.1; the principles will apply for decades, and even the details of implementation are unlikely to change dramatically over the next few generations of the product. As with any good “deep dive,” the fundamental concepts discussed will ultimately help you in any clustering situation, even with non-VMware products.

From a reading comprehension standpoint, the book is easy to grok. The information flows quickly and you can read the entire work cover to cover with relative ease. This is a must have for any systems administrator.

What better way to recover from the madness of Black Friday than to sit back and relax while reading this amazing piece of work? This is most definitely the deal of the year for all virtualization fanatics!

Duncan and Frank

Want to have a vSphere 5.1 clustering deepdive book for free?

CloudPhysics is giving away some vSphere 5.1 clustering deepdive books. Do the following if you want to receive a copy:

Action required

- Email info@cloudphysics.com with a subject of “Book”. No message is needed.

- Register at http://www.cloudphysics.com/ by clicking “SIGN UP”.

- Install the CloudPhysics Observer vApp to activate your dashboard.

Eligibility rules

- You are a new CloudPhysics user.

- You fully install the CloudPhysics ‘Observer’ vApp in your vSphere environment.

The first 150 users get a free book, but what’s even better, the CloudPhysics service gives you great insights into your current environment. For more info read the following blog posts:

CloudPhysics in a nutshell and VM reservations and limits card – a closer look

CloudPhysics VM Reservation & Limits card – a closer look

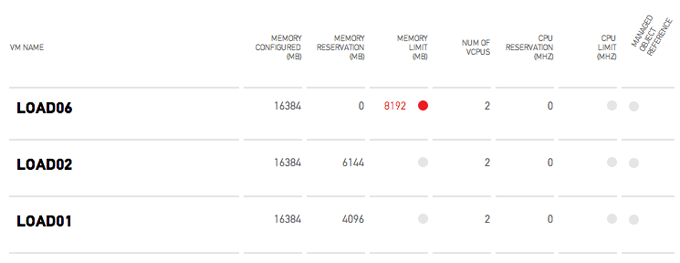

The VM Reservation and Limits card was released yesterday. CloudPhysics decided to create this card based on the popularity of this topic in the contest. So what does this card do? Let’s have a closer look.

This card provides you with an easy overview of all the virtual machines configured with any reservation or limit for CPU and memory. Reservations are a great tool to guarantee the virtual machine continuous access to physical resources. When running business critical applications, reservations can provide a constant performance baseline that helps you meet your SLA. However, reservations can impact your environment, as VM reservations reduce the resources available to other virtual machines in your virtual infrastructure. They can lower your consolidation ratio (see: The Admission Control Family) and they can even impact other vSphere features such as vSphere High Availability. The CloudPhysics HA Simulation card can help you understand the impact of reservations on HA.

Besides reservations, virtual machine limits are displayed. A limit restricts the virtual machine's use of physical resources. A limit can be helpful to test the application at various levels of resource availability. However, virtual machine limits are not visible to the Guest OS; therefore it cannot scale and size its own memory management (or even worse, the application memory management) to reflect the availability of physical memory. For more information about memory limits, please read this post by Duncan: Memory limits. As the VMkernel is forced to provide alternative memory resources, limits can lead to increased use of VM swap files. This can lead to performance problems for the application, but can also impact other virtual machines and subsystems used in the virtual infrastructure. The following article zooms in on one of the many problems of relying on swap files: Impact of host local VM swap on HA and DRS.

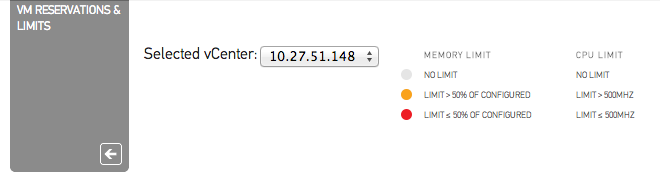

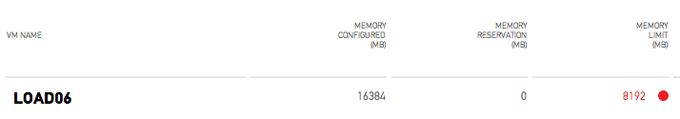

Color indicators

As virtual machine level limits can impact the performance of the entire virtual infrastructure, the CloudPhysics engineers decided to add an additional indicator to help you easily detect limits. When a virtual machine is configured with a memory limit that is still greater than 50% of its configured size, an amber dot is displayed next to the configured limit size. If the limit is smaller than or equal to 50% of its configured size, then a red dot is displayed next to the limit size. CPU limits are treated similarly: an amber dot is displayed when the limit of a virtual machine is set but is more than 500MHz, while a red dot indicates that the virtual machine is configured with a CPU limit of 500MHz or less.

For example: Virtual Machine Load06 is configured with 16GB of memory. A limit is set to 8GB (8192MB), which is equal to 50% of the configured size. Therefore the VM Reservation and Limits card displays the configured limit in red and presents an additional red dot.
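The thresholds described above can be written down as a short sketch. The function names are hypothetical and this is not CloudPhysics code; it only restates the rules from the text, with `None` standing for "no limit configured".

```python
def memory_limit_indicator(configured_mb, limit_mb=None):
    """Return 'red', 'amber', or None (no limit set) for a memory limit."""
    if limit_mb is None:
        return None
    # Red when the limit is 50% or less of the configured size.
    return "red" if limit_mb * 2 <= configured_mb else "amber"


def cpu_limit_indicator(limit_mhz=None):
    """Return 'red', 'amber', or None (no limit set) for a CPU limit."""
    if limit_mhz is None:
        return None
    # Red when the limit is 500MHz or less.
    return "red" if limit_mhz <= 500 else "amber"


# Load06: 16GB configured, limit of 8GB (8192MB) is exactly 50%.
print(memory_limit_indicator(16 * 1024, 8192))  # red
print(cpu_limit_indicator(1000))                # amber
```

Because the memory rule uses "smaller than or equal to", a limit of exactly half the configured size, as in the Load06 example, falls on the red side of the threshold.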

Flow of information

The indicators are also a natural divider between the memory resource controls and the CPU controls. As memory resource control impacts the virtual infrastructure more than the CPU resource controls, the card displays the memory resource controls at the left side of the screen.

We are very interested in hearing feedback about this card, so please leave a comment.

Get notifications of these blog postings and more DRS and Storage DRS information by following me on Twitter: @frankdenneman