This is just a small heads-up post for all the VCDX candidates.

Almost every VCDX application I read mentions the fact that they needed to increase the Disk TimeOutValue (HKEY_LOCAL_MACHINE/System/CurrentControlSet/Services/Disk) by to 60 seconds on Windows machines.

The truth is that the VMware Tools installation (ESX version 3.0.2 and up) will change this registry value automatically. You might want to check your operational procedures documentation and update this!

VMware KB 1014

Removing orphaned Nexus DVS

During the test of the Cisco Nexus 1000V the customer deleted the VSM first without removing the DVS using commands from within the VSM, ending up with an orphaned DVS. One can directly delete the DVS from the DB, but there are bunch of rows in multiple tables that need to be deleted. This is risky and may render DB in some inconsistent state if an error is made while deleting any rows. Luckily there is a more elegant way to remove an orphaned DVS without hacking and possibly breaking the vCenter DB.

A little background first:

When installing the Cisco Nexus 1000V VSM, the VSM uses an extension-key for identification. During the configuration process the VSM spawns a DVS and will configure it with the same extension-key. Due to the matching extension keys (extension session) the VSM owns the DVS essentially.

And only the VSM with the same extension-key as the DVS can delete the DVS.

So to be able to delete a DVS, a VSM must exist registered with the same extension key.

If you deleted the VSM and are stuck with an orphaned DVS, the first thing to do is to install and configure a new VSM. Use a different switch name than the first (deleted) VSM. The new VSM will spawn a new DVS matching the switch name configured within the VSM.

The first step is to remove the new spawned DVS and do this the proper way using commands from within the VSM virtual machine.

[Read more…] about Removing orphaned Nexus DVS

DRS Resource Distribution Chart

A customer of mine wanted more information about the new DRS Resource Distribution Chart in vCenter 4.0, so I thought after writing the text for the customer, why not share this? The DRS Resource Distribution Chart was overhauled in vCenter 4.0 and is quite an improvement over the resource distribution chart featured in vCenter 2.5. Not only does it use a better format, the new charts produce more in-depth information.

[Read more…] about DRS Resource Distribution Chart

Resource pools and avoiding HA slot sizing

Virtual machines configured with large amounts of memory (16GB+) are not uncommon these days. Most of the time these “heavy hitters” run mission critical applications so it’s not unusual setting memory reservations to guarantee the availability of memory resources. If such a virtual machine is placed in a HA cluster, these significant memory reservations can lead to a very conservative consolidation ratio, due to the impact on HA slot size calculation. (For more information about slot size calculation, please review the HA deep dive page on yellow-bricks.com.)

There are options to avoid creation of large slot sizes. Such as not setting reservations, disabling strict admission control, using vSphere new admission control policy “percentage of cluster resources reserved” or creating a custom slot size by altering the advanced settings das.vmMemoryMinMB.

But what if you are still using ESX 3.5, must guarantee memory resources for that specific VM, do not want to disable strict admission control or don’t like tinkering with the custom slot size setting? Maybe using the resource pool workaround can be an option.

Resource pool workaround

During a conversation with my colleague Craig Risinger, author of the very interesting article “The resource pool priority pie paradox”, we discussed the lack of relation between resource pools reservation settings and High Availability. As Craig so eloquently put it:

“RP reservations will not muck around with HA slot sizes”

High Availability ignores resource pools reservation settings when calculating the slot size, so if a single VM is placed in a resource pools with memory reservation configured, it will have the same effect on resource allocation as per VM memory reservation, but does not affect the HA slot size.

By creating a resource pool with a substantial memory setting you can avoid decreasing the consolidation ratio of the cluster and still guarantee the virtual machine its resources. Publishing this article does not automatically mean that I’m advocating using this workaround on a regular basis. I recommend implementing this workaround very sparingly as creating a RP for each VM creates a lot of administrative overhead and makes the host and cluster view a very unpleasant environment to work in.

A possible scenario to use this workaround can be when implementing MS Exchange 2010 mailbox servers. These mailbox servers are notorious for demanding a huge amount of memory and listed by many organizations as mission critical servers.

To emphasize it once more, this is not a best practice! But it might be useful in certain scenarios to avoid large slots and therefore low consolidation ratios.

Impact of host local VM swap on HA and DRS

On a regular basis I come across NFS based environments where the decision is made to store the virtual machine swap files on local VMFS datastores. Using host-local swap can affect DRS load balancing and HA failover in certain situations. So when designing an environment using host-local swap, some areas must be focused on to guarantee HA and DRS functionality.

VM swap file

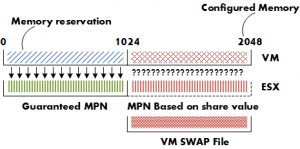

Lets start with some basics, by default a VM swap file is created when a virtual machine starts, the formula to calculate the swap file size is: configured memory – memory reservation = swap file. For example a virtual machine configured with 2GB and a 1GB memory reservation will have a 1GB swap file.

Reservations will guarantee that the specified amount of virtual machine memory is (always) backed by ESX machine memory. Swap space must be reserved on the ESX host for the virtual machine memory that is not guaranteed to be backed by ESX machine memory. For more information on memory management of the ESX host, please the article on the impact of memory reservation.

During start up of the virtual machine, the VMkernel will pre-allocate the swap file blocks to ensure that all pages can be swapped out safely. A VM swap file is a static file and will not grow or shrink not matter how much memory is paged. If there is not enough disk space to create the swap file, the host admission control will not allow the VM to be powered up.

Note: If the local VMFS does not have enough space, the VMkernel tries to store the VM swap file in the working directory of the virtual machine. You need to ensure enough free space is available in the working directory otherwise the VM is still not allowed to be powered up. Let alone ignoring the fact that you initially didn’t want the VM swap stored on the shared storage in the first place.

This rule also applies when migrating a VM configured with a host-local VM swap file as the swap file needs to be created on the local VMFS volume of the destination host. Besides creating a new swap file, the swapped out pages must be copied out to the destination host. It’s not uncommon that a VM has pages swapped out, even if there is not memory pressure at that moment. ESX does not proactively return swapped pages back into machine memory. Swapped pages always stays swapped, the VM needs to actively access the page in the swap file to be transferred back to machine memory but this only occurs if the ESX host is not under memory pressure (more than 6% free physical memory).

Copying host-swap local pages between source- and destination host is a disk-to-disk copy process, this is one of the reasons why VMotion takes longer when host-local swap is used.

Real-life scenario

A customer of mine was not aware of this behavior and had discarded the multiple warnings of full local VMFS datastores on some of their ESX hosts. All the virtual machines were up and running and all seemed well. Certain ESX servers seemed to be low on resource utilization and had a few active VMs, while other hosts were highly utilized. DRS was active on all the clusters, fully automated and a default (3 stars) migration threshold. It looked like we had a major DRS problem.

DRS

If DRS decide to rebalance the cluster, it will migrate virtual machines to low utilized hosts. VMkernel tries to create a new swap file on the destination host during the VMotion process. In my scenario the host did not contain any free space in the VMFS datastore and DRS could not VMotion any virtual machine to that host because the lack of free space. But the host CPU active and host memory active metrics were still monitored by DRS to calculate the load standard deviation used for its recommendations to balance the cluster. (More info about the DRS algorithm can be found on the DRS deepdive page). The lack of disk space on the local VMFS datastores influenced the effectiveness of DRS and limited the options for DRS to balance the cluster.

High availability failover

The same applies when a HA isolation response occurs, when not enough space is available to create the virtual machine swap files, no virtual machines are started on the host. If a host fails, the virtual machines will only power-up on host containing enough free space on their local VMFS datastores. It might be possible that virtual machines will not power-up at-all if not enough free disk space is available.

Failover capacity planning

When using host local swap setting to store the VM swap files, the following factors must be considered.

• Amount of ESX hosts inside cluster.

• HA configured host failover capacity.

• Amount of active virtual machines inside cluster.

• Consolidation ratio (VM per host).

• Average swap file size.

• Free disk space local VMFS datastores.

| Number of hosts inside cluster: | 6 |

| HA configured host failover capacity: | 1 |

| Active virtual machines: | 162 |

| Average consolidation ratio: | 27:1 |

| Average memory reservation: | 0GB |

| Average swap file size: | 4GB |

For the sake of simplicity, let’s assume that DRS balanced the cluster load and that all (identical) virtual machines are spread evenly across every host.

In case of a host failure, 27 VMs will be restarted on the remaining 5 hosts inside the cluster, HA will start 5.4 virtual machines per host, as it is impossible to start 0.4 VM, some ESX hosts will start 6 virtual machines, while other hosts will start 5 VM’s.

The average swap file size is 4GB, this requires at least 24 GB of free space to be available on the local VMFS datastores to start the VM’s. Besides the 24GB, enough free space needs to be available to for DRS to move multiple VMs around to rebalance the load across the cluster.

If the design of the virtual infrastructure incorporates site failover as well, enough free disk space on all the ESX hosts must be reserved to power-up all the affected virtual machines from the failed site.

Closing remarks

Using host local swap can be a valid option for some environments, but additional calculation of the factors mentioned above is necessary to ensure sustained HA and DRS functionality.