vSwitch configuration and load-balancing policy selection are major parts of a virtual infrastructure design. Selecting a load-balancing policy can have an impact on the performance of the virtual machine and can introduce additional requirements at the physical network layer. Not only do I spend lots of time discussing the various options during design sessions, it is also an often-discussed topic during the VCDX defense panels.

More and more companies seem to use IP-hash as their load-balancing policy. The main argument seems to be increased bandwidth and better redundancy. Even when the distributed vSwitch is used, most organizations still choose IP-hash over the new load-balancing policy “Route based on physical NIC load”. This article compares both load-balancing policies and lists the characteristics, requirements and constraints of each.

IP-Hash

The main reason for selecting IP-Hash seems to be increased bandwidth, as you aggregate multiple uplinks. Unfortunately, adding more uplinks does not proportionally increase the available bandwidth for the virtual machines.

How IP-Hash works

Based on the source and destination IP address of each connection, the VMkernel distributes the load across the available NICs in the vSwitch. The calculation of outbound NIC selection is described in KB article 1007371. To calculate the IP-hash yourself, convert both the source and destination IP addresses to a hex value, XOR the two values, and compute the modulo over the number of available uplinks in the team. For example:

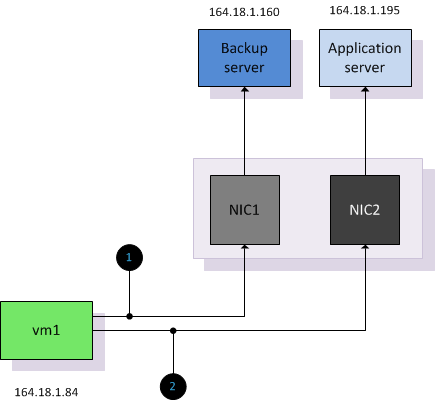

Virtual Machine 1 opens two connections: one to a backup server and one to an application server.

| Virtual Machine | IP-Address | Hex Value |
| --- | --- | --- |
| VM1 | 164.18.1.84 | A4120154 |
| Backup Server | 164.18.1.160 | A41201A0 |
| Application Server | 164.18.1.195 | A41201C3 |

The vSwitch is configured with two uplinks.

Connection 1: VM1 > Backup Server (A4120154 Xor A41201A0 = F4) % 2 = 0

Connection 2: VM1 > Application Server (A4120154 Xor A41201C3 = 97) % 2 = 1
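The two calculations above can be reproduced with a few lines of Python. This is only a sketch of the XOR-then-modulo method as described in this article, not the VMkernel's actual code; the function name `ip_hash_uplink` is made up for illustration.

```python
import ipaddress

def ip_hash_uplink(src: str, dst: str, uplinks: int) -> int:
    """Uplink index per the method in this article: XOR both addresses, then modulo."""
    src_int = int(ipaddress.IPv4Address(src))
    dst_int = int(ipaddress.IPv4Address(dst))
    return (src_int ^ dst_int) % uplinks

vm1 = "164.18.1.84"
targets = [("Backup Server", "164.18.1.160"), ("Application Server", "164.18.1.195")]

for name, dst in targets:
    xor = int(ipaddress.IPv4Address(vm1)) ^ int(ipaddress.IPv4Address(dst))
    print(f"VM1 > {name}: XOR = {xor:X}, uplink = {ip_hash_uplink(vm1, dst, 2)}")
```

Because the result depends only on the two addresses and the number of uplinks, the same pair of endpoints always lands on the same uplink.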

IP-Hash treats each connection between a source and destination IP address as a unique route, and the vSwitch distributes these connections across the available uplinks. However, the pNIC-to-vNIC affiliation is made on a per-flow basis, and a flow can’t overflow to another uplink. This means that a single connection is still limited to the speed of a single physical NIC. A real-world use case for IP-hash would be a backup server that requires a lot of bandwidth across multiple connections. Other than that, there are very few workloads that require bandwidth that can’t be satisfied by a single adapter.

Complexity – In order for IP-hash to function correctly, additional configuration at the network layer is required:

EtherChannel: IP-hash needs to be configured on the vSwitch if EtherChannel technology is used at the physical switch layer. With EtherChannel, the switch will load balance connections over multiple ports in the EtherChannel. Without IP-hash, the VMkernel only expects to receive traffic for a specific MAC address on a single vNIC. As a result, some sessions go through to the virtual machine while other sessions are dropped. When IP-hash is selected, the VMkernel will accept inbound MAC addresses on both active NICs.

EtherChannel configuration: As vSphere does not support dynamic link aggregation (LACP), none of the members can be set up to auto-negotiate membership and therefore physical switches have to be configured with static EtherChannel.

Switch configuration: vSphere supports EtherChannel from one switch to the vSwitch. This switch can be a single switch or a stack of individual switches that act as one, but vSphere does not support EtherChannel from two separate, non-stacked switches when the EtherChannel connects to the same vSwitch.

Additional overhead – For each connection, the VMkernel needs to select the appropriate uplink. If a virtual machine is running a front-end application and communicates 95% of its time with the backend database, the IP-Hash calculation is almost pointless. The VMkernel needs to perform the math for every connection, and 95% of the connections will use the same uplink because the algorithm will always result in the same hash.

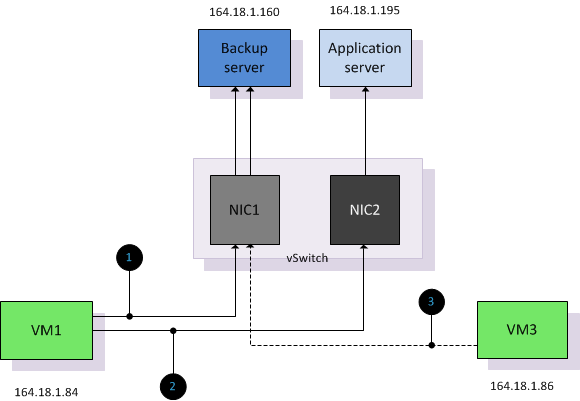

Utilization-unaware – It is possible that a second virtual machine is assigned to use the same uplink as the virtual machine that is already saturating the link. Let’s use the first example and introduce a new virtual machine VM3. Due to the backup window, VM3 connects to the backup server.

| Virtual Machine | IP-Address | Hex Value |
| --- | --- | --- |
| VM3 | 164.18.1.86 | A4120156 |

Connection 3: VM3 > Backup Server (A4120156 Xor A41201A0 = F6) % 2 = 0
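Grouping all three connections by their computed uplink makes the collision visible. The sketch below reuses the XOR-then-modulo method described in this article; the helper name and the `placement` structure are illustrative assumptions. Note that nothing in the calculation looks at how busy an uplink already is.

```python
import ipaddress
from collections import defaultdict

def ip_hash_uplink(src: str, dst: str, uplinks: int) -> int:
    """Uplink index per the method in this article: XOR both addresses, then modulo."""
    return (int(ipaddress.IPv4Address(src)) ^ int(ipaddress.IPv4Address(dst))) % uplinks

# (source name, source IP, destination name, destination IP), taken from the tables above
flows = [
    ("VM1", "164.18.1.84", "Backup Server", "164.18.1.160"),
    ("VM1", "164.18.1.84", "Application Server", "164.18.1.195"),
    ("VM3", "164.18.1.86", "Backup Server", "164.18.1.160"),
]

# Collect the connections that end up on each of the two uplinks
placement = defaultdict(list)
for src_name, src_ip, dst_name, dst_ip in flows:
    uplink = ip_hash_uplink(src_ip, dst_ip, 2)
    placement[uplink].append(f"{src_name} > {dst_name}")

for uplink in sorted(placement):
    print(f"uplink {uplink}: {placement[uplink]}")
```

Both backup connections share uplink 0, regardless of how much traffic each of them generates.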

Because the IP-hash load-balancing policy is unaware of utilization, it will not rebalance if the uplink is saturated or if virtual machines are added or removed due to power-on operations or (DRS) migrations. DRS is unaware of network utilization and does not initiate a rebalance if a virtual machine cannot send or receive packets due to physical NIC saturation. In a worst-case scenario, DRS can migrate the virtual machines that use the other NICs to other ESX servers while leaving behind all the virtual machines that are saturating a NIC. Admittedly it’s a little bit of a stretch, but being aware of this behavior allows you to see the true beauty of the Load-Based Teaming policy.

Possible Denial of Service – Due to the pNIC-to-vNIC affiliation per connection, a misbehaving virtual machine generating many connections can cause some sort of denial of service on all uplinks of the vSwitch. If this virtual machine were connected to a vSwitch using “Port-ID” or “Route based on physical NIC load”, only one uplink would be affected.

Network failover detection Beacon Probing – Beacon probing does not work correctly if EtherChannel is used. ESX broadcasts beacon packets out of all uplinks in a team. The physical switch is expected to forward all packets to other ports. In EtherChannel mode, the physical switch will not send the packets back because the team is considered one link. No beacon packets will be received, which can interrupt network connections. Cisco switches will report flapping errors. See KB article 1012819.

Route based on physical NIC Load

VMware vSphere 4.1 introduced a new load-balancing policy available on distributed vSwitches. Route based on physical NIC load, also known as Load Based Teaming (LBT), takes the virtual machine network I/O load into account and tries to avoid congestion by dynamically reassigning and balancing the virtual switch port to physical NIC mappings.

How LBT works

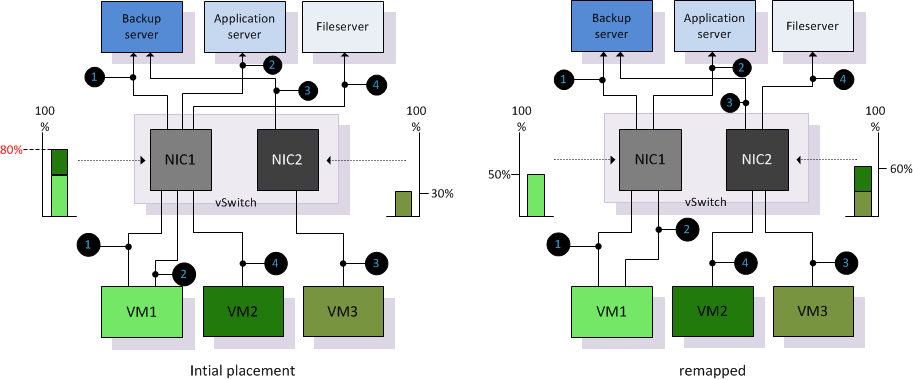

Load Based Teaming maps vNICs to pNICs and remaps the vNIC-to-pNIC affiliation if the load exceeds specific thresholds on an uplink.

LBT uses the same initial port assignment as the “originating port id” load balancing policy, resulting in the first vNIC being affiliated to the first pNIC, the second vNIC to the second pNIC, etc. After initial placement, LBT examines both ingress and egress load of each uplink in the team and will adjust the vNIC to pNIC mapping if an uplink is congested. The NIC team load balancer flags a congestion condition if an uplink experiences a mean utilization of 75% or more over a 30-second period.
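The congestion condition can be sketched as a simple check over a sliding window. Only the 75% threshold and the 30-second period come from the text above; the one-sample-per-second granularity and the function name `is_congested` are assumptions for illustration, since the actual sampling is not described here.

```python
from statistics import mean

CONGESTION_THRESHOLD = 0.75  # mean utilization of 75%, from the text
WINDOW_SECONDS = 30          # evaluation period, from the text

def is_congested(samples: list[float]) -> bool:
    """Check one uplink for congestion.

    samples: utilization fractions (0.0 to 1.0), assumed to be one per second,
    oldest first. Only the most recent full window is evaluated.
    """
    window = samples[-WINDOW_SECONDS:]
    if len(window) < WINDOW_SECONDS:
        return False  # not enough history yet to judge a full period
    return mean(window) >= CONGESTION_THRESHOLD

steady_high = [0.80] * 30                # sustained load above the threshold
short_burst = [0.30] * 20 + [1.00] * 10  # 10-second spike, mean stays well below 75%

print(is_congested(steady_high))
print(is_congested(short_burst))
```

Averaging over the whole period means a short spike at line rate does not trigger a remap on its own; only sustained load does.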

Complexity – LBT requires standard Access or Trunk ports. LBT does not support EtherChannels. Because LBT moves flows among the available uplinks of the vSwitch, it may cause packet reordering. Even though the reshuffling process is not done often (worst-case scenario every 30 seconds), it is recommended to enable PortFast or TrunkFast on the switch ports.

Additional overhead – The VMkernel examines the congestion condition after each time window. This calculation creates a minor overhead compared to using the static load-balancing policy “originating port-id”.

Utilization aware – vNIC to pNIC mappings will be adjusted if the VMkernel detects congestion on an uplink. In the previous example both VM1 and VM3 shared the same connection due to the IP-hash calculation. Both connections can share the same physical NIC as long as the utilization stays below the threshold. It is likely that both vNICs are mapped to separate physical NICs.

In the next example, a third virtual machine is powered up and is mapped to NIC1. Utilization of NIC1 exceeds the mean utilization threshold of 75% over a period of more than 30 seconds. After identifying congestion, LBT remaps VM2 to NIC2 to decrease the utilization of NIC1.
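A remap of this kind can be sketched as follows. The loads and the starting mapping are hypothetical numbers chosen for illustration, and the selection rule (move the lightest vNIC from the congested uplink to the least-utilized one) is an assumption, not the documented LBT algorithm. Only the 75% congestion threshold is taken from this article.

```python
THRESHOLD = 0.75  # congestion threshold as a fraction of uplink capacity

def utilization(mapping: dict, loads: dict, uplink: str) -> float:
    """Sum the load of every vNIC currently mapped to the given uplink."""
    return sum(loads[vnic] for vnic, nic in mapping.items() if nic == uplink)

def rebalance(mapping: dict, loads: dict, uplinks: list) -> dict:
    """One evaluation pass: relieve each congested uplink by moving one vNIC."""
    new_map = dict(mapping)
    for uplink in uplinks:
        if utilization(new_map, loads, uplink) < THRESHOLD:
            continue  # this uplink is not congested
        # Assumed rule: target the least-utilized uplink in the team
        target = min(uplinks, key=lambda nic: utilization(new_map, loads, nic))
        if target == uplink:
            continue  # nowhere better to move to
        # Assumed rule: try the lightest vNIC first
        candidates = sorted(
            (vnic for vnic, nic in new_map.items() if nic == uplink),
            key=lambda vnic: loads[vnic],
        )
        for vnic in candidates:
            # Only move if the target would not become congested itself
            if utilization(new_map, loads, target) + loads[vnic] < THRESHOLD:
                new_map[vnic] = target
                break
    return new_map

# Hypothetical mean loads over the last period, as a fraction of one uplink
loads = {"VM1": 0.45, "VM2": 0.20, "VM3": 0.20}
mapping = {"VM1": "NIC1", "VM2": "NIC1", "VM3": "NIC1"}

result = rebalance(mapping, loads, ["NIC1", "NIC2"])
print(result)
```

With these numbers NIC1 starts at roughly 85% utilization, and moving VM2 to NIC2 brings it back under the threshold.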

Although LBT is not integrated in DRS, it can be viewed as a complementary technology next to DRS. When DRS migrates virtual machines onto a host, it is possible that congestion is introduced on a particular physical NIC. Because the vNIC-to-pNIC mapping is based on actual load, LBT actively tries to avoid congestion at the physical NIC level and attempts to reallocate virtual machines. By remapping vNICs to pNICs, it attempts to make as much bandwidth as possible available to the virtual machine, which ultimately benefits the overall performance of the virtual machine.

Recommendations

When using distributed virtual switches, it is recommended to use Load-Based Teaming instead of IP-hash. LBT has no additional requirements on the physical network layer, reduces complexity and is able to adjust to fluctuating workloads. Due to the remapping of vNICs to pNICs based on actual load, LBT attempts to allocate as much bandwidth as possible, where IP-hash simply distributes connections across the available physical NICs.

Get notifications of these blog postings and more DRS and Storage DRS information by following me on Twitter: @frankdenneman

Great read! Thank you!

Another excellent article with the usual great summary for those time challenged readers (me). The only problem is holding all the detail in my head – it hurts…

Great Article Frank, after little downtime ;),

I wish to add that LBT is available only for vDS, and I think this is the reason many people use IP-Hash on vSS.

Very good article, Frank!

Do you also recommend using LBT for iSCSI & NFS storage networks?

Lars

A great feature! I’m implementing this on my dvSwitches.

Now if only an Alarm could be configured for a dvSwitch to trigger an email or warning event to notify you if one of the uplinks to the dvSwitch goes down.

Yes you will see an Event in the Tasks & Event list identifying “Lost uplink redundancy on DVPorts” but it will not trigger an Alarm.

No, I’m not joking. The current Alarm’s “Network connectivity lost”, “Network uplink redundancy lost” and “Network uplink redundancy degraded” do NOT trigger for dvSwitches. Only vSwitches.

Also, if you try to create a custom Alarm, and you attempt to set the Alarm Type Monitor: to “vNetwork Distributed Switch”, and try to add a trigger, you will NOT find an appropriate trigger for “dvPort down”. You will find triggers for “dvPort link was up” and “dvPort link was down” but neither of these will trigger when one of your dvSwitch uplinks is removed/failed.

I have opened a case with VMware regarding this, as it is sad that their advanced dvSwitches do not include the ability to monitor and Alert in the event of a network redundancy failure, link down, etc.

So if a Cisco switch is not in a etherchannel or lacp teamed link won’t it send the traffic to just one link towards the ESX host ?

And if it send it down on port #1 but it comes back from port #4 won’t there be errors in the Cisco switch ?

Excellent article, Frank !! As Lars asked above, do you recommend using LBT for NFS storage networks?

Good Post as usual,

I read it when it came but now I have an opportunity to implement it so… I am back

When using NFS with IP hash we can breakdown our NFS mounts by using alias on the filer to spread the load.

“LBT uses the same initial port assignment as the “originating port id””

The kernel interface used for NFS is connected to a single port right? If I understand properly, this will never load balance NFS traffic.

Does this make sense?

Thanks

G.

Great article Frank, helped me today to solve the IP-hash issue 🙂

Cheers!

Interesting article about this new alternative!

Can you explain why you recommend to enable PortFast/TrunkFast when using LBT? The only thing that needs to happen when a VM port is shifted from one switch port to another is to have the arp entry shifted, no? The secondary port is already online so the PortFast setting should not make any difference?

BR,

Stijn

PortFast won’t be needed IMO, it is only useful when there’s a link up/down event. What I read here is that this LBT technology could cause MAC flip-flopping (as IP-hash does if you connect the pNICs to two different access switches, which is the case most of the time when you have no virtual port-channel between these two switches in place). So I see a potential for continuing issues there, since sysadmins and network guys rarely converse with one another ;-(

I agree with the above, the Portfast will not be needed for this reason, since Spanning Tree only cares about the link being up/down, and not for specific MAC addresses showing up on different physical ports.

Great article anyway!

excellent article.

Guys Portfast is your friend. When doing maintenance, upgrades or updates that include reboots, keeps the peace between us and the networking team….