Disclaimer: This article contains references to the words master and slave. I recognize these as exclusionary words. The words are used in this article for consistency because they are currently the words that appear in the software, in the UI, and in the log files. When the software is updated to remove the words, this article will be updated to match.

Please note that this article was written in 2009. I do not know whether Lefthand has changed its solution since then. Please check with your HP representative for updates!

I recently had the opportunity to deliver a virtual infrastructure that uses the HP Lefthand SAN solution. Setting up a Lefthand SAN is not that difficult, but there are some factors to take into consideration when planning and designing a Lefthand SAN properly. These are my lessons learned.

Lefthand, not the traditional Head-Shelf configuration

HP Lefthand SANs are based on iSCSI and are formed by storage nodes. In traditional storage architectures, a controller manages arrays of disk drives. A Lefthand SAN is composed of storage modules. A Network Storage Module 2120 G2 (NSM node) is basically an HP DL185 server with 12 SAS or SATA drives running SAN/iQ software.

This architecture enables the aggregation of multiple storage nodes to create a storage cluster and this solves one of the toughest design questions when sizing a SAN. Instead of estimating growth and buying a storage array to “grow into”, you can add storage nodes to the cluster when needed. This concludes the sales pitch.

But this technique of aggregating separate NSM nodes into a cluster raises some questions, such as:

- Where will the blocks of a single LUN be stored; all on one node, or across nodes?

- How are LUNs managed?

- How is datapath load managed?

- What is the impact of the failure of an NSM node?

Block placement and Replication level

The placement of blocks of a LUN depends on the configured replication level. Lefthand calls the replication level the Network RAID level. Network RAID stripes and mirrors multiple copies of data across a cluster of storage nodes. Up to four levels of synchronous replication can be configured at LUN level:

- None

- 2-way

- 3-way

- 4-way

Blocks will be stored on storage nodes according to the replication level. If a LUN is created with the default replication level of 2-way, two authoritative blocks are written at the same time to two different nodes. If a 3-way replication level is configured, blocks are stored on 3 nodes. 4-way = 4 nodes. (Replication cannot exceed the number of nodes in the cluster)

SAN IQ will always start to write the next block to the second node containing the previous block. A picture is worth a thousand words.
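Since the picture is hard to put into words, here is a rough Python sketch of the placement rule. It is only an illustration of the behavior described above, not SAN/iQ code: the function name `place_blocks` and the node names are made up, and it assumes the copies of a block land on consecutive nodes in cluster order and that placement wraps around from the last node to the first.

```python
def place_blocks(nodes, replication_level, block_count):
    """Return, for each block, the list of nodes that hold a copy of it.

    Assumption for illustration: copies go to consecutive nodes in cluster
    order, and each new block starts on the second node of the previous block.
    """
    if not 1 <= replication_level <= len(nodes):
        raise ValueError("replication level cannot exceed the number of nodes")
    placement = []
    for block in range(block_count):
        start = block % len(nodes)
        copies = [nodes[(start + i) % len(nodes)] for i in range(replication_level)]
        placement.append(copies)
    return placement


# Four nodes with 2-way replication: every block shares one node with the previous block.
cluster = ["node1", "node2", "node3", "node4"]
for number, copies in enumerate(place_blocks(cluster, 2, 4), start=1):
    print(f"block {number}: {', '.join(copies)}")
```

Under these assumptions block 1 lands on node1 and node2, block 2 on node2 and node3, and so on, which is why the order of the nodes in the cluster matters so much.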

Node order

The order in which blocks are written to the LUN is determined not by node hostname but by the order in which the nodes are added to the cluster. The order of the placement of the nodes is extremely important if the SAN will span two locations. More information on this design issue later.

Virtual IP and VIP Load Balancing

When setting up a Lefthand cluster, a Virtual IP (VIP) needs to be configured. A VIP is required for iSCSI load balancing and fault tolerance. One NSM node will act as the VIP for the cluster. If this node fails, the VIP function will automatically fail over to another node in the cluster.

The VIP functions as the iSCSI portal: ESX servers use the VIP for discovery and to log in to the volumes. ESX servers can connect to volumes in two ways: using the VIP, or using the VIP with the load balancing option (VIPLB) enabled. When VIPLB is enabled on LUNs, the SAN/iQ software balances connections across the different nodes of the cluster.

Configure the ESX iSCSI initiator with the VIP as the destination address. The VIP will supply the ESX servers with a target address for each LUN. VIPLB will transfer initial communication to the gateway connection of the LUN. Running the vmkiscsi-util command shows the VIP as the portal and another IP address as the target address of the LUN:

[root@esxhost00 vmfs]# vmkiscsi-util -i -t 58 -l

***************************************************************************

Cisco iSCSI Driver Version … 3.6.3 (27-Jun-2005 )

***************************************************************************

TARGET NAME : iqn.2003-10.com.lefthandnetworks:lefthandcluster:1542:volume3

TARGET ALIAS :

HOST NO : 0

BUS NO : 0

TARGET ID : 58

TARGET ADDRESS : 148.11.18.60:3260

SESSION STATUS : ESTABLISHED AT Fri Sep 11 14:51:13 2009

NUMBER OF PORTALS : 1

PORTAL ADDRESS 1 : 148.11.18.9:3260,1

SESSION ID : ISID 00023d000001 TSIH 3b1

Gateway Connection

This target address is what Lefthand calls a gateway connection. The gateway connection is described in the Lefthand SAN User Manual (page 561) as follows:

Use iSCSI load balancing to improve iSCSI performance and scalability by distributing iSCSI sessions for different volumes evenly across storage nodes in a cluster. ISCSI load balancing uses iSCSI Login-Redirect. Only initiators that support Login-Redirect should be used. When using VIP and load balancing, one iSCSI session acts as the gateway session. All I/O goes through this iSCSI session. You can determine which iSCSI session is the gateway by selecting the cluster, then clicking the iSCSI Sessions tab. The Gateway Connection column displays the IP address of the storage node hosting the load balancing iSCSI session.

SAN/iQ will designate a node to act as the gateway connection for the LUN, and the VIP will send the IP address of this node as the target address to all the ESX hosts. This means every host that uses the LUN will connect to that specific node, and this storage node will handle all IO for this LUN.

This leads to the question: how will the gateway connection (GC) handle IO for blocks that are not stored locally on that node? All nodes are aware of which block is stored on which node. When a requested block is stored on another node, the GC node fetches it from one of the nodes where it is stored and sends the result back to the ESX host.
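The read path through the gateway can be sketched as follows. This is a simplified model under my own assumptions, not SAN/iQ internals: `serve_read` and the `block_locations` map are hypothetical, and picking the first node that holds a copy is just a placeholder, because I do not know how the gateway chooses between replicas.

```python
def serve_read(gateway, block_locations, block):
    """Return (source_node, extra_hops) for a read that arrives at the gateway node.

    block_locations maps a block number to the list of nodes holding a copy.
    """
    holders = block_locations[block]
    if gateway in holders:
        # The gateway has a local copy and can answer directly.
        return gateway, 0
    # Assumption: take the first holder; the real selection logic is unknown to me.
    return holders[0], 1


block_locations = {1: ["node1", "node2"], 2: ["node3", "node4"]}
print(serve_read("node1", block_locations, 1))  # served from the gateway itself
print(serve_read("node1", block_locations, 2))  # fetched from a peer, then relayed to the host
```

The extra hop is the price of having a single gateway per LUN: the ESX host only ever talks to one node, and that node does the fetching.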

Gateway Connection failover

Most clusters will host more LUNs than they have nodes. This means that each node will host the gateway connection role for multiple LUNs. If a node fails, its GC roles will be transferred to the other nodes in the cluster. But when the NSM node comes back online, the VIP will not fail back the GC roles. This creates an imbalance in the cluster, which needs to be solved as quickly as possible. This can be done by issuing the RebalanceVIP command for the volume from the CLI.

Ken Cline asked me the question:

How do I know when I need to use this command? Is there a status indicator to tell me?

Well actually there isn’t and that is exactly the problem!

After a node failure, you need to be aware of this behavior and you will have to rebalance a volume yourself by running the RebalanceVIP command. The Lefthand CMC does not offer this option or some sort of alert.
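Because there is no alert, one way to spot the imbalance is to note the gateway node of every volume (the Gateway Connection column on the iSCSI Sessions tab) and count them per node. The sketch below assumes you have collected that list by hand; the function names, the volume names, the node names and the tolerance of one connection are all my own choices and not part of any Lefthand tool.

```python
from collections import Counter


def gateway_distribution(cluster_nodes, gateway_by_volume):
    """Count how many volumes use each node as their gateway connection."""
    counts = Counter({node: 0 for node in cluster_nodes})  # keep idle nodes visible
    counts.update(gateway_by_volume.values())
    return dict(counts)


def is_unbalanced(distribution, tolerance=1):
    """Flag the cluster when the busiest and idlest node differ by more than tolerance."""
    return max(distribution.values()) - min(distribution.values()) > tolerance


# Hypothetical situation after nsm3 failed and came back: it holds no gateway roles.
nodes = ["nsm1", "nsm2", "nsm3"]
gateways = {"volume1": "nsm1", "volume2": "nsm1", "volume3": "nsm2", "volume4": "nsm2"}
distribution = gateway_distribution(nodes, gateways)
print(distribution, "unbalanced:", is_unbalanced(distribution))
```

Any volume whose gateway sits on an overloaded node is then a candidate for the RebalanceVIP command.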

Network Interface Bonds

How about the available bandwidth? Lefthand nodes come standard with two 1 Gb NICs. The two NICs can be placed in a bond. An NSM node has three NIC bond configurations:

- Active – Passive

- Link Aggregation (802.3ad)

- Adaptive Load Balancing

The most interesting is Adaptive Load Balancing (ALB). ALB combines the benefits of the increased bandwidth of 802.3ad with the network redundancy of Active-Passive. Both NICs are made active and they can be connected to different switches; no additional configuration at the physical switch level is needed.

When an ALB bond is configured, it creates an interface. This interface balances traffic through both NICs. But how will this work with the iSCSI protocol? According to RFC 3720 (http://www.ietf.org/rfc/rfc3720.txt), iSCSI uses command connection allegiance:

For any iSCSI request issued over a TCP connection, the corresponding response and/or other related PDU(s) MUST be sent over the same connection. We call this “connection allegiance”.

This means that the NSM node must use the same MAC address to send the IO back. How will this affect the bandwidth? As stated in the iSCSI SAN Configuration Guide: “ESX Server‐based iSCSI initiators establish only one connection to each target.”

It looks like ESX will communicate with the gateway connection of the LUN through only one NIC. I asked Calvin Zito (http://twitter.com/HPstorageGuy) to educate me on ALB and how it handles connection allegiance.

When you create a bond on an NSM, the bond becomes the ‘interface’ and the MAC address of one of the NICs becomes the MAC address for the bond. The individual NICs become ‘slaves’ at that point. I/O will be sent to and from the ‘interface’ which is the bond and the bonding logic figures out how to manage the 2 slaves behind the scenes. So with ALB, for transmitting packets, it will use both NICs or slaves, but they will be associated with the MAC of the bond interface, not the slave device.

The bond uses the same IP and MAC address of the first onboard NIC. This means the node will use both interfaces to transmit data, but only one to receive.

Chad Sakac (EMC), Andy Banta (VMware) and various other folks have written a multivendor post explaining how ESX and vSphere handle iSCSI traffic. A must-read!

http://virtualgeek.typepad.com/virtual_geek/2009/01/a-multivendor-post-to-help-our-mutual-iscsi-customers-using-vmware.html#more

Design issues

When designing a Lefthand SAN, these points are worth considering;

Network RAID level Write performance

When 2-way replication is selected, blocks are written to two nodes simultaneously. If a LUN is configured with 3-way replication, blocks must be replicated to three nodes. Acknowledgements are given when blocks are written in cache on all the participating nodes. When selecting the replication level, keep in mind that higher protection levels lead to lower write performance.

Raid Levels NSM

Network RAID offers protection against storage node failure, but it does not protect against disk failure within a storage node. Disk RAID levels need to be configured at storage node level, unlike most traditional arrays, where the RAID level can be configured per LUN. It is possible to mix storage nodes with different RAID configurations within a cluster, but this can lead to a lower usable capacity.

For example, suppose the cluster consists of 12 TB nodes running RAID 10. Each node will provide 6 TB of usable storage. When adding two 12 TB nodes running RAID 5, each provides 10 TB of usable storage. However, because of how the cluster uses capacity, the NSM nodes running RAID 5 will still be limited to 6 TB per storage node: the cluster operates at the smallest usable per-storage-node capacity.
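The arithmetic behind this example is easy to check with a few lines of Python. This is only a back-of-the-envelope sketch: the function names are mine, and I assume a starting cluster of two RAID 10 nodes, since the number of existing nodes does not matter for the rule itself.

```python
def cluster_usable_tb(node_usable_tb):
    """Usable cluster capacity when every node is capped at the smallest node."""
    return min(node_usable_tb) * len(node_usable_tb)


def stranded_tb(node_usable_tb):
    """Capacity that exists on the larger nodes but cannot be used by the cluster."""
    return sum(node_usable_tb) - cluster_usable_tb(node_usable_tb)


# Two RAID 10 nodes (6 TB usable each) plus two RAID 5 nodes (10 TB usable each).
nodes = [6, 6, 10, 10]
print("usable:", cluster_usable_tb(nodes), "TB")
print("stranded:", stranded_tb(nodes), "TB")
```

In this mixed cluster 8 TB of the RAID 5 capacity is left unused, which is a good reason to keep the RAID configuration identical across nodes.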

The RAID level of the storage node must be set before it can join a cluster. Check the RAID level of the cluster nodes before configuring the new node, because you cannot change the RAID configuration without deleting data.

Combining Replication Levels with RAID levels

RAID levels ensure data redundancy inside the storage node, while replication levels ensure data redundancy at the storage node level. Both higher RAID levels and higher replication levels offer greater data redundancy, but they have an impact on capacity and performance. RAID 5 with 2-way replication seems to be the sweet spot for most implementations, but when highly available data protection is needed, Lefthand recommends 3-way replication with RAID 5, ensuring triple mirroring with 3 parity blocks available.
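To get a feel for the capacity side of that trade-off, the usable capacity after RAID can simply be divided by the number of copies the replication level keeps. The sketch below is an approximation of my own: the function name is hypothetical, and it ignores snapshots, metadata and any other overhead.

```python
def net_capacity_tb(node_usable_tb, node_count, copies):
    """Approximate capacity left for volumes after replication.

    node_usable_tb: usable capacity per node after disk RAID
    copies: 1 for no replication, 2 for 2-way, 3 for 3-way, 4 for 4-way
    """
    if not 1 <= copies <= node_count:
        raise ValueError("replication cannot exceed the number of nodes")
    return node_usable_tb * node_count / copies


# Four RAID 5 nodes with 10 TB usable each.
for copies in (1, 2, 3, 4):
    print(f"{copies} copies: {net_capacity_tb(10, 4, copies):.1f} TB")
```

Going from 2-way to 3-way replication on these four nodes costs roughly a third of the net capacity, on top of the write performance penalty.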

I would not suggest RAID 0 with replication, because rebuilding a RAID set will always be quicker than copying an entire storage node over the network.

Node placement

As mentioned previously, the order in which blocks are written to the LUN is determined by the order in which the nodes are added to the cluster. When using 2-way replication, blocks are written to two consecutive nodes. When designing a cluster, the order of the placement of the nodes is extremely important if the SAN will be placed in two separate racks or, even better, span two locations.

Because the 2-way replication writes blocks on two consecutive nodes, adding the storage nodes to the cluster in alternating order will ensure that data is written to each rack or site.
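A quick way to sanity-check a planned node order is to verify that every pair of consecutive nodes sits in different sites. The sketch below uses made-up node names and helper functions, and it assumes that the last node in the list pairs up with the first one again.

```python
from itertools import zip_longest


def interleave(site_a_nodes, site_b_nodes):
    """Build a cluster order that alternates between the two sites."""
    order = []
    for a, b in zip_longest(site_a_nodes, site_b_nodes):
        order.extend(node for node in (a, b) if node is not None)
    return order


def every_pair_spans_sites(order, site_of):
    """True if each node and its successor (wrapping around) are in different sites."""
    return all(
        site_of[order[i]] != site_of[order[(i + 1) % len(order)]]
        for i in range(len(order))
    )


site_of = {"a1": "A", "a2": "A", "b1": "B", "b2": "B"}
good = interleave(["a1", "a2"], ["b1", "b2"])
bad = ["a1", "a2", "b1", "b2"]
print(good, every_pair_spans_sites(good, site_of))
print(bad, every_pair_spans_sites(bad, site_of))
```

The alternating order passes the check, while adding all nodes of site A first leaves some blocks with both copies in the same site.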

When nodes are added in the incorrect order or if a node is replaced, the general settings tab of the cluster properties allows you to “promote” or “demote” a storage node in the logical order. This list determines the write order of the nodes.

Management Group and Managers

In addition to setting up data replication, it is important to set up managers. Managers play an important role in controlling data flow and access to clusters. Managers run inside a management group. Several storage nodes must be designated to run the manager service. Because managers use a voting algorithm, a majority of managers needs to be active for the group to function. This majority is called a quorum. If quorum is lost, access to data is lost. Be aware that access to data is lost, not the data itself.

An odd number of managers is recommended, as a (low) even number of managers can end up in a state where no majority can be determined. The maximum number of managers is 5.
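The majority rule explains why an even number of managers buys nothing. The sketch below is plain majority arithmetic, not Lefthand code, and the function names are mine.

```python
def quorum(managers):
    """Smallest number of managers that forms a majority."""
    return managers // 2 + 1


def tolerated_failures(managers):
    """How many managers can be lost while a majority is still running."""
    return managers - quorum(managers)


for managers in range(1, 6):  # the maximum number of managers is 5
    print(f"{managers} managers: quorum {quorum(managers)}, "
          f"can lose {tolerated_failures(managers)}")
```

Two managers tolerate no more failures than one, and four tolerate no more than three, so the extra manager only adds another vote that can go missing.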

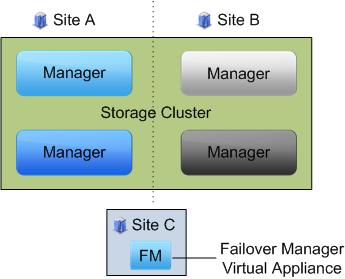

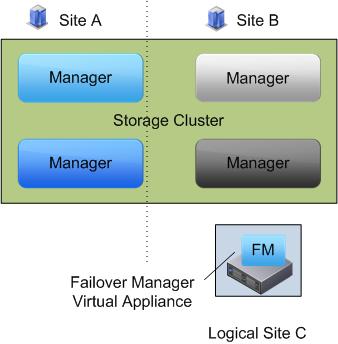

Failover manager

A failover manager is a special edition of a manager. Instead of running on a storage node, the failover manager runs as a virtual appliance. A failover manager's only function is maintaining quorum. When designing a SAN spanning two sites, running a failover manager is recommended. The optimum placement of the failover manager is a third site: place an equal number of managers in both sites and run the failover manager at an independent site.

If a third site is not available, run the failover manager locally on a server, creating a logically separate site.
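The effect of the failover manager on a two-site design can be checked with the same majority arithmetic. This is a simplified model of my own: it assumes an equal number of managers per site and a failover manager that stays reachable from the surviving site.

```python
def survives_site_loss(managers_per_site, failover_manager):
    """True if the surviving site still holds a majority after one site is lost."""
    total = 2 * managers_per_site + (1 if failover_manager else 0)
    remaining = total - managers_per_site  # surviving site plus the failover manager, if any
    return remaining >= total // 2 + 1


print("2 + 2 managers, no failover manager:", survives_site_loss(2, False))
print("2 + 2 managers plus failover manager:", survives_site_loss(2, True))
```

Without the failover manager the surviving site holds exactly half of the votes and loses quorum. With it, the surviving site and the failover manager together form a majority.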

Volume names

And the last design issue: volume names cannot be changed. The volume name is the only setting that can’t be edited after creation. Plan your naming convention carefully, otherwise you will end up recreating volumes and restoring data. If someone at HP is reading this, please change this behavior!

Get notifications of these blog postings and more DRS and Storage DRS information by following me on Twitter: @frankdenneman

We have been using LH since 07 and it is the most redundant SAN I have seen. We have lost a whole node to power issues and no one could tell the difference. Even during the rebuild the speed is wonderful. I would suggest looking at this for anyone in the market. Be sure to unplug the head units on other peoples SAN and see what happens…

“So with ALB, for transmitting packets, it will use both NICs or slaves, but they will be associated with the MAC of the bond interface, not the slave device.”

“The bond uses the same IP and MAC address of the first onboard NIC. This means the node will use both interfaces to transmit data, but only one to receive.”

Doesn’t this mean that the same MAC address will be presented to the network switch on two different ports? Wouldn’t that cause CAM table pollution, which would have a serious negative impact on performance?

Ken, Good question.

The most trouble I had figuring out this SAN type, was the internal working of the ALB. Lefthand\HP haven’t documented this technology very extensively. That’s why I asked Calvin to confirm my suspicion.

I haven’t heard the network tribe complain about problems in the switches; I can ask them if you’d like. About performance, we approached near line speeds several times. With vSphere and round robin, the performance was quite impressive.

Frank, Ken,

I think Adaptive Load Balancing doesn’t work at L2 layer but at L3 layer.

A packet sent from the SAN will have the MAC address of one of the NICs in the “bond”, but whatever the source MAC address is (L2), the source IP (L3) will be the IP of the “bond”.

Packets sent to the SAN will go to the “bond” interface because the ARP entry is linked to the MAC address of the “bond”.

HP Teaming in Transmit Load Balancing with Fault Tolerance mode works the same way, see page 30 Figure 4-5 of http://h20000.www2.hp.com/bc/docs/support/SupportManual/c01415139/c01415139.pdf

What are you seeing from a performance standpoint? We are looking at the LH solution with the optional 10Gbps NICs connected into our CISCO Nexus 10Gbps switching platform. One would assume that iSCSI over 10Gbps should perform fairly well… but most of the reviews I am seeing are targeted at 1Gbps links or a bonded “2Gbps” link….

Because of how it scales over the nodes in the cluster, performance is always an aggregate, the sum of all NICs in the cluster. With two NICs per unit and 5 nodes, you get 10Gb of true network capacity while using only 1Gb ports. It is more common to have a requirement for more than one or two Gb on the application server, and this is where you might want to consider using 10Gb for that particular server. Then you need 10Gb ports in the SAN to connect that server, but the storage cluster will still aggregate across all the nodes, so 1Gb uplinks are still fine at the storage layer.

If all of my active Windows server NICs go into one switch and all of my MPIO passive NICs go into another, will I be limited to using only one of the ALB bonded NICs? Or will the switch route the data through the ISL connection?

Don,

I do not understand your question. Would it be possible to rephrase it?

First of all, if you use Windows systems with a Lefthand SAN, use the MPIO driver written by Lefthand, called the DSM (Device Specific Module).

The DSM does not use a gateway connection, it will send IO directly to the node containing the block.

Can anyone comment on SQL performance on a Lefthand? I’m looking at a SAN solution in a test lab for 200GB to 500GB SQL DBs and VMs. Because we’re an HP shop, the vendor is pitching the Starter SAN for my lab environment. Need some more info regarding SAS or SATA setups. I’ve read that there will be a bottleneck in SQL because the SQL VM is essentially using one NIC. http://serverfault.com/questions/4478/how-does-the-lefthand-san-perform-in-a-production-environment

I have measured the Lefthand SAN; the performance (especially random IO) is very good:

2 nodes with 8 disks each in RAID 5, 2-way replication, IOMETER, 4k blocks, random, 100% read or write:

read: 7700 IO/s

write: 1700 IO/s

4 nodes with 12 disks each in RAID 5, 2-way replication, IOMETER, 4k blocks, random, 100% read or write:

read: 14460 IO/s

write: 3140 IO/s

About the architecture needed for quorum: with two datacenters and only two nodes in each, what will happen if the network link between the two datacenters goes down: no majority => no quorum => lost access to data?

@HGU – with no quorum you will have to manually log into one of the nodes to make it active. The quorum is for auto-failover. (At least that is what it sounded like when I talked to the LH salesperson)

Sorry to relight an old thread, but i was doing some math of IOPS per disk using Rainer’s numbers and it seems like there are less IOPS per disk with the larger 12 bay chassis. Do we know if both of these are the same type of drive or is this an expected finding?

Excellent article!

Are you available for consulting (for example, I am trying to decide between LH and SvSAN)? Please respond via my email.

Thanks.

So do you have to run the rebalanceVip command on all nodes and volumes in the cluster? Or can you just run the commands on one node for all volumes?

The explanation of adaptive load balancing in this article is incorrect and misleading. The bond NIC modifies its answers to arp queries and arp responses on the fly making traffic with one L3 address appear to have multiple L2 addresses depending on which host you ask. This is how it can work without switch support.

Of course, it’s a little more complicated than that, and this is extremely well documented. A high level but more in depth overview can be read in the bonding driver documentation located in the linux kernel source tree at “Documentation/networking/bonding.txt”. Look under the documentation for the “mode” option under chapter 2. The mode in question is “balance-alb”.

If you want to know the gory details you can always read the code located at “drivers/net/bonding/bond_alb.c”.

What, you didn’t think lefthand re-implemented this from scratch, did you? 🙂

Michael,

Excellent stuff! Thanks.

I’ve talked a lot with the Lefthand engineers and they explained it to me like the article.

I’m gonna look at it and will rewrite the article.

Hi – first thanks for a great article – we are thinking of implementing LH and have a couple of questions I hope can be clarified.

In a single cluster two site implementation the nodes are separated by a wan link – how do I ensure that a lun created on the cluster is served locally to the server connected to it? I am guessing this would be done by LH knowing that the mac address of the initiator is in the arp table of the switch the node is connected to and that therefore that node should be used for the connection.

And

When data is replicated across a WAN in a single-cluster, two-site implementation, are only the changed disk blocks replicated, or is an entire file replicated?

Thanks

Lefthand does have an interesting design, one that should be possible to build yourself by using regular servers with some disk and some open source software. The design isn’t that complicated after all, just basically a network raid. A lot of this stuff is probably using open source software already in the lefthand products, as evident by the obviously linux based bonding..

It’s time for a solid open source SAN!

Hi Frank,

I was kinda curious if you have found out how ALB really works.

Thanks!

Hi Frank

Interesting article, thanks. We have had a multisite LH installation since July 2010 and I have a question, pretty much the same as Rod Lee above. At the moment our ESX hosts are accessing some LUNs across our intersite fibre link because the gateway IPs are hosted on the other site. Luckily it’s a pretty quick link, and to date I have used the rebalanceVIP command to try and shift the gateway IP between sites. This is pretty unreliable and can cause micro outages to the LUN in question. Is this really the way it should be working or is there another way around this?

I notice vSphere 4.1 is going to support a proper DSM but unfortunately Data Protector doesn’t support that yet.

Thanks

Russ..

Great article 🙂

We’re looking at a basic two-site, single-subnet multi-site cluster.

The current thing I’m trying to figure out is what if the iSCSI link fails, but the host(s) and primary LAN are still up?

HA doesn’t seem to cater for the storage disappearing from a host but the hosts still being able to see one another?

I think so too

Frank-

I wanted to say “thank you” this article helped bring me up to speed very quickly. I am currently looking at additionally changing a Lefthands raid level after implementation into a cluster. This should be interesting…I find this an extremely interesting feature.

Cheers,

Jeffrey

Hi Frank,

Did you ever check Ken Cline’s question?

I simulated his question and I can see this behavior on our iSCSI switches.

We made a support ticket with HP but so far we have no solution, yet …

regards

I am new to Lefthand and need some clarity about when the failover manager is needed. If I have a 2-node VSA, will the failover occur automatically in the event of a failed node?

Frank,

What Version of SanIQ does your article reference to?

Greets

Oliver

I’ve got 2 nodes, where the volumes are in network raid 0 (none)

We could probably try to get another 2 for a network raid 10, however my logic tells me that converting from raid 0 to raid 10 is, well, not possible.

Do you know if this is possible in LH by only changing the properties in the volume?

Thank you sir Denneman…awesome.

Great article to get a jumpstart in the design / configuration of a Lefthand storage

Absolutely amazing article. I have recently implemented a dual node, single site environment and all this information has been reassuring and very helpful.

Hello, I know this is quite an old post but maybe someone will see this.

If one is using the new HP P4000 LeftHand Storage VSA that was recently announced with three ESXi hosts each running one VSA would the recommended local RAID be 10?? and for the replication be 2-way or 3-way??

How does one figure out what would become the usable storage vs. the storage used for striping etc.??

I know that RAID 10 is 50% of total disk storage??

Where’s a calculator that explains??

Thank you, Tom

Quick question: with a network raid-10 volume, is it possible to access the same data on the volume in two different sites (primary and secondary)?

detail: when we copy data to the network raid-10 volume in the primary site, the data does not appear on the secondary site (where the same volume is mounted to a drive letter as well)

Thank you in advance

Jan Willemsen

whos awesome? … you’re awesome!