vSphere introduced the HA admission control policy “Percentage of Cluster Resources Reserved”. This policy allows the user to specify a percentage of the total amount of available resources that will stay reserved to accommodate host failures. When using vSphere 4.1, this policy is the de facto recommended admission control policy, as it avoids the conservative slot calculation method.

Reserved failover capacity

The HA Deepdive page explains in detail how the “percentage resources reserved” policy works. To summarize, the CPU or memory capacity of the cluster is calculated as follows: The available capacity is the sum of all ESX hosts inside the cluster minus the virtualization overhead, multiplied by (1-percentage value).

For instance, a cluster consists of 8 ESX hosts, each containing 70GB of available RAM. The percentage of cluster resources reserved is set to 20%. This leads to a cluster memory capacity of 448GB: (8 × 70GB) × (1 - 20%) = 560GB × 0.8. The remaining 112GB is reserved as failover capacity. Although the example zooms in on memory, the configured percentage applies to both CPU and memory resources.
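The arithmetic of this example can be sketched in a few lines of Python. This is only an illustration of the formula quoted above, not how HA is implemented; the function name `cluster_capacity` and its parameters are made up for this sketch, and the per-host figures are assumed to already exclude virtualization overhead.

```python
def cluster_capacity(host_capacities_gb, reserved_pct):
    """Return (available, reserved) capacity under a percentage-based policy.

    host_capacities_gb: per-host capacity, assumed to be net of overhead.
    reserved_pct: whole-number percentage reserved for failover.
    """
    total = sum(host_capacities_gb)
    reserved = total * reserved_pct / 100
    return total - reserved, reserved


# The 8-host example: 70GB per host, 20% reserved.
available, reserved = cluster_capacity([70] * 8, 20)
print(f"available: {available:.0f}GB, reserved: {reserved:.0f}GB")
```

The same function applies to CPU if the list holds per-host GHz instead of GB, since the percentage covers both resources.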

Once a percentage is specified, that percentage of resources will be unavailable for active virtual machines; it therefore makes sense to set the percentage as low as possible. There are multiple approaches for defining a percentage suitable for your needs. One approach, the host-level approach, is to use a percentage that corresponds with the contribution of one host or a multiple of that. Another is the aggressive approach, which sets a percentage that is less than the contribution of one host. Which approach should be used?

Host-level

In the previous example, 20% of the resources were reserved in an 8-host cluster. This configuration reserves more resources than a single host contributes to the cluster. High Availability’s main objective is to provide automatic recovery for virtual machines after a physical server failure. For this reason, it is recommended to reserve resources equal to the contribution of a single host or a multiple of that.

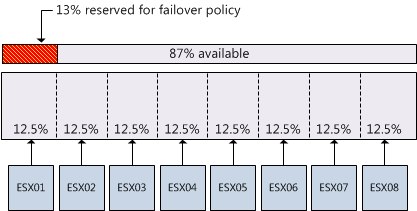

When using the per-host level of granularity in an 8-host cluster (homogeneously configured hosts), the resource contribution per host to the cluster is 12.5%. However, the percentage used must be an integer (whole number). Using a conservative approach, it is better to round up to guarantee that the full capacity of one host is protected. In this example, the conservative approach leads to a percentage of 13%.
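A minimal sketch of the host-level calculation, assuming homogeneously configured hosts as in the example. The helper `host_level_percentage` and its `hosts_to_protect` parameter are hypothetical names used only for illustration; `Fraction` is used so the rounding up is not affected by floating-point error.

```python
import math
from fractions import Fraction


def host_level_percentage(num_hosts, hosts_to_protect=1):
    """Smallest whole percentage covering the given number of equal-sized hosts."""
    contribution = Fraction(100 * hosts_to_protect, num_hosts)
    # Round up so the full capacity of the protected hosts is reserved.
    return min(100, math.ceil(contribution))


print(host_level_percentage(8))   # one host out of eight contributes 12.5%
print(host_level_percentage(12))  # one host out of twelve contributes 8.33%
```

For hosts of unequal size this sketch does not apply as written; the contribution of the largest host would be the more conservative starting point.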

Aggressive approach

I have seen recommendations about setting the percentage to a value that is less than the contribution of one host to the cluster. This approach reduces the amount of resources reserved for accommodating host failures and results in higher consolidation ratios. One might argue that this approach can work as most hosts are not fully loaded, however it eliminates the guarantee that after a failure all impacted virtual machines will be recovered.

As datacenters are dynamic, operational procedures must be in place to avoid or reduce the impact of a self-inflicted denial of service. Virtual machine restart priorities must be monitored closely to guarantee that mission-critical virtual machines will be restarted before virtual machines with a lower operational priority. If reservations are set at the virtual machine level, it is necessary to recalculate the failover capacity percentage when virtual machines are added or removed, to allow the virtual machine to power on and still preserve the aggressive setting.

Expanding the cluster

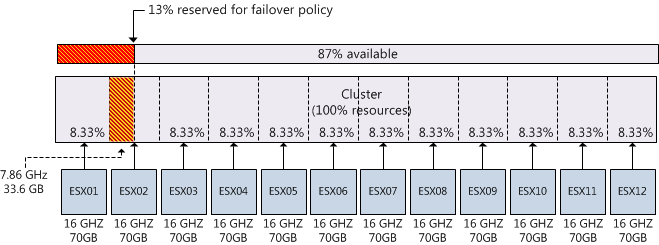

Although the percentage is dynamic and calculates capacity at the cluster level, the contribution per host decreases when the cluster is expanded. If you continue using the same percentage setting after adding hosts to the cluster, the amount of resources reserved for failover might no longer correspond with the contribution per host, and as a result valuable resources are wasted. For example, adding four hosts to an 8-host cluster while continuing to use the previously configured admission control policy value of 13% results in a failover capacity that is equivalent to roughly 1.5 hosts. The diagram depicts a scenario where an 8-host cluster is expanded to 12 hosts, each with 8 2GHz cores and 70GB of memory. The cluster was originally configured with admission control set to 13%, which in the expanded cluster equals 109.2GB and 24.96GHz. If the requirement is to be able to recover from one host failure, 9% would be enough (one host contributes 8.33%, rounded up), so the remaining 4% of the cluster, 7.68GHz and 33.6GB, is “wasted”.
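The figures in this scenario can be reproduced with a short sketch. It rests on one assumption about how the “wasted” amount is derived: it is measured against the rounded-up host-level percentage for the expanded cluster (9% for 12 hosts), not against the raw capacity of one host. The function names are hypothetical.

```python
def reserved(total, pct):
    """Capacity reserved by a whole-number percentage of the cluster total."""
    return total * pct / 100


def expansion_waste(num_hosts, per_host, configured_pct):
    """Return (reserved, wasted) capacity for a cluster of equal-sized hosts.

    Assumption: the needed percentage is one host's contribution rounded up
    to a whole number, and the waste is the difference from configured_pct.
    """
    total = num_hosts * per_host
    needed_pct = -(-100 // num_hosts)  # ceiling division
    return reserved(total, configured_pct), reserved(total, configured_pct - needed_pct)


# 12 hosts after expansion, 70GB and 8 x 2GHz per host, policy left at 13%.
mem_reserved, mem_wasted = expansion_waste(12, 70, 13)
cpu_reserved, cpu_wasted = expansion_waste(12, 8 * 2, 13)
print(f"memory: {mem_reserved:.1f}GB reserved, {mem_wasted:.1f}GB wasted")
print(f"CPU:    {cpu_reserved:.2f}GHz reserved, {cpu_wasted:.2f}GHz wasted")
```

Under that assumption the sketch yields the 109.2GB and 24.96GHz reserved, and the 33.6GB and 7.68GHz wasted, quoted in the text.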

Maximum percentage

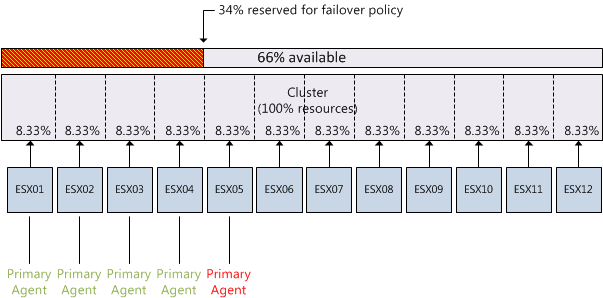

High availability relies on one primary node to function as the failover coordinator to restart virtual machines after a host failure. If all five primary nodes of an HA cluster fail, automatic recovery of virtual machines is impossible. Although it is possible to set a failover spare capacity percentage of 100%, using a percentage that exceeds the contribution of four hosts is impractical as there is a chance that all primary nodes fail.

Although the configuration of primary agents and the configuration of the failover capacity percentage are unrelated, they do impact each other. As cluster design focuses on host placement and relies on host-level hardware redundancy to reduce the risk of losing all five primary nodes, admission control can play a crucial part by not allowing more virtual machines to be powered on while recovering from a maximum of four host failures.

This means that the maximum allowed percentage needs to be calculated by multiplying the contribution per host by four. For example, the recommended maximum configured failover capacity of a 12-host cluster is 34% (4 × 8.33%, rounded up). This allows the cluster to reserve enough resources for a four-host failure without over-allocating resources that could be used for virtual machines.
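As a sketch of this rule, again assuming equal-sized hosts: the recommended ceiling is the contribution of four hosts (one fewer than the five primary nodes), rounded up to a whole percentage. The name `max_failover_percentage` and the `primary_nodes` parameter are illustrative, not part of any vSphere API.

```python
def max_failover_percentage(num_hosts, primary_nodes=5):
    """Recommended upper bound for the failover percentage, equal-sized hosts."""
    # At most (primary_nodes - 1) host failures can be recovered from,
    # because at least one primary node must survive to coordinate restarts.
    tolerable_failures = min(primary_nodes - 1, num_hosts)
    # Ceiling division keeps the result a whole percentage, rounded up.
    pct = -(-(100 * tolerable_failures) // num_hosts)
    return min(100, pct)


print(max_failover_percentage(12))  # four of twelve hosts: 33.33% rounded up
```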

Setting Correct Percentage of Cluster Resources Reserved


One thing I can’t figure out about using the percentage policy is:

A cluster consists of 4 hosts; each host has 72GB of memory and a memory usage of around 64GB, with no reservations set. The Admission Control Policy is set to 25% reserved for failover capacity. The cluster summary reports “current memory failover capacity 77%”. In my world, with the current load on the cluster, a host failure could cause a lot of ballooning and swapping, and maybe very bad performance.

How does it calculate current memory failover capacity and does it take performance into account?

In the first part of your article you describe how the “percentage resources reserved” policy works as “The available capacity is the sum of all ESX hosts inside the cluster minus the virtualization overhead, multiplied by (1-percentage value). ”

However, in the example you provide, you don’t seem to include the overhead. Why the discrepancy?

Also, what is the overhead you are referring to? Is it the per-VM memory overhead that the vmkernel incurs?

Ots, HA bases its decisions on reservations plus overhead memory if I remember correctly.

The question I have is how planned host outages, like maintenance, play together with failover capacity. I assume that if you were in a full but valid state before maintenance, the effect would be to keep you from powering on any new VMs, but what would happen if you had an unplanned outage during the maintenance? Would HA wait until the host under maintenance rejoined the cluster and then restart all VMs?

Hari,

I did include the overhead in the example, as most servers cannot be configured to have 70GB of virtual memory. The example used hosts configured with 72GB. I shall edit the post to clarify.

The overhead I refer to is the overhead of running the kernel. Virtual machine overhead is subtracted from the memory available for virtual machines. For example, if a virtual machine is running in a resource pool of 10GB, has 2GB of active memory, and has a memory overhead of 100MB (a fictitious number), 2.1GB is subtracted from the resource pool and 7.9GB is left available for other virtual machines.

Andrew,

The HA cluster is unaware of whether hosts are under maintenance. When you place a host in maintenance mode, the HA agent is disabled, so the host no longer participates in the HA cluster. HA will not use the host’s resources in its calculation because those resources are not available to the cluster.

The ability to perform maintenance and use maintenance mode must be taken into account when designing the HA admission control. For instance, all the admission control policies do take into account that there must at least be 2 hosts to provide failover support.

Given a smaller cluster, say two servers, is it even worth using a percentage?

Thanks

Bob

Love the book BTW.

How would you recommend setting the reserved percentage when you have hosts with wildly differing amounts of resources (in our case, host memory ranges from 32GB to 128GB)?

Hi Frank,

“All the admission control policies do take into account that there must at least be 2 hosts to provide failover support.”

So in your 8-host cluster example, 25% should be set as the cluster resources reserved, right?

thanks

Thanks for the excellent article, Frank! I have a question when opting for “Aggressive Approach”. You mentioned, “One might argue that this approach can work as most hosts are not fully loaded, however it eliminates the guarantee that after a failure all impacted virtual machines will be recovered.”

Since Admission Control is applied at the vCenter level and HA failover is not impacted by Admission Control, what factors can cause the VMs to fail to boot up? Or would it be correct to imply that the VMs will boot up, but with degraded performance depending on the cluster load?

Mohammed,

Admission control is not applied at the vCenter level. HA enforces admission control during normal operations, such as a VM power-on, but ignores it when there is a failover event. Factors that result in a VM boot failure are usually inconsistencies in the cluster architecture, such as a missing VM network, a missing connection to a datastore, or wrongly configured mandatory Host-VM affinity rules. It all comes down to the compatibility list of the VM itself.