Recently a couple of consultants brought some unexpected behavior of vCloud Director to my attention. If the provider vDC is connected to a datastore cluster and a virtual disk or vApp is placed in the datastore cluster, vCD displays an error when the datastores do not have enough free space available.

Last year I wrote an article (Storage DRS initial placement and datastore cluster defragmentation) describing the Storage DRS initial placement engine and its ability to move virtual machines around the datastore cluster if individual datastores do not have enough free space to store the virtual disk.

I couldn’t figure out why Storage DRS did not defragment the datastores in order to place the vApp, so I asked the engineers about this behavior. It turns out that this behavior is by design. When creating vCloud Director, the engineers optimized the initial placement engine of vCD for speed. When deploying a virtual machine, defragmenting a datastore cluster can take some time. To avoid waiting, vCD reports an error of not enough free space and relies on the vCloud administrator to manage and correct the storage layer. In other words, Storage DRS initial placement datastore cluster defragmentation is disabled in vCloud Director.
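To make the difference concrete, here is a minimal sketch of the situation. It is not the vCD or Storage DRS placement algorithm; the function names, the datastore names, the sizes and the "pick the datastore with the most free space" rule are all assumptions made for illustration. It only shows the case where no single datastore can hold the disk, although the cluster as a whole has enough free space.

```python
from typing import Dict, Optional


def place_without_defrag(free_gb: Dict[str, float], disk_gb: float) -> Optional[str]:
    """Pick a datastore that can hold the disk as-is, or None if none can.

    Assumption: among the datastores that fit, take the one with the most
    free space. The real engine weighs more than free space alone.
    """
    fitting = {name: free for name, free in free_gb.items() if free >= disk_gb}
    if not fitting:
        return None  # vCD would report "not enough free space" here
    return max(fitting, key=lambda name: fitting[name])


def defrag_might_help(free_gb: Dict[str, float], disk_gb: float) -> bool:
    """True if no single datastore fits but the cluster total would.

    This is only a necessary condition: whether moving virtual machines
    around actually frees up enough space depends on their sizes.
    """
    no_direct_fit = place_without_defrag(free_gb, disk_gb) is None
    return no_direct_fit and sum(free_gb.values()) >= disk_gb


# Hypothetical datastore cluster with 105 GB free in total
cluster = {"ds1": 40.0, "ds2": 35.0, "ds3": 30.0}
print(place_without_defrag(cluster, 60.0))  # None
print(defrag_might_help(cluster, 60.0))     # True
```

A 60 GB disk does not fit on any individual datastore in this example, so a placement engine that skips defragmentation has to fail, even though rearranging existing virtual machines might have made room.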

I can understand the choice the vCD engineers made, but I also believe in the benefit of having datastore cluster defragmentation. I’m interested in your opinion. Would you trade initial placement speed for reduced storage management?

Using Remote desktop connection on a Mac? Switch to CoRD

One of the benefits of working for VMware technical marketing is that you have your own lab.

Luckily my lab is hosted by an external datacenter, which helps me avoid a costly power-bill at home each month 🙂 However, that means I need to connect to my lab remotely.

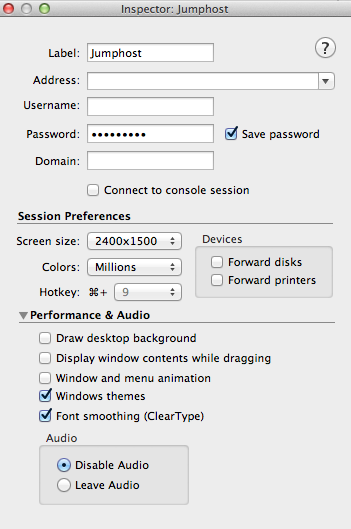

As a Mac user I used Remote Desktop Connection for Mac from Microsoft. One of the limiting factors of this RDP client for Mac is its maximum resolution of 1400 x 1050 px. The screens at home have a minimum resolution of 2560 x 1440 px. This first world problem bugged me until today!

Today I found CoRD – http://cord.sourceforge.net/. CoRD allows me to connect to my servers with a resolution of 2500 x 1600, using the full potential of my displays at home.

Another great option is the hotkey function: using a key combination I can spin up a remote desktop connection. I love these kinds of shortcuts that help me reduce the time spent navigating through the UI.

If you are using a Mac and often RDP into your lab, I highly recommend downloading CoRD.

Btw, it’s free 😉

Expandable reservation on resource pools, how does it work?

The expandable reservation setting of a resource pool appears to be shrouded in mystery. How does it work, what is it for, and what does it really expand? The expandable reservation allows the resource pool to allocate physical resources (CPU/memory) protected by a reservation from a parent source to satisfy the reservations of its child objects. Let’s dig a little deeper into this.

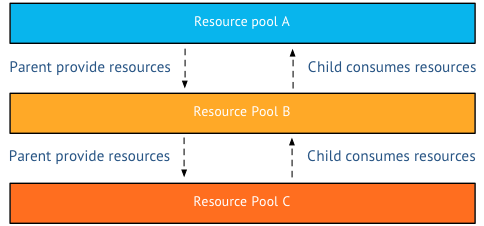

Parent-child relation

A resource pool provides resources to its child objects. A child object can either be a virtual machine or a resource pool. This is what is called the parent-child relationship. If a resource pool (A) contains a resource pool (B), which contains a resource pool (C), then C is the child of B. B is the parent of C, but is the child of A, and A is the parent of B. There is no terminology for the relation A-C, as A only provides resources to B; it does not care whether B provides any resources to C.

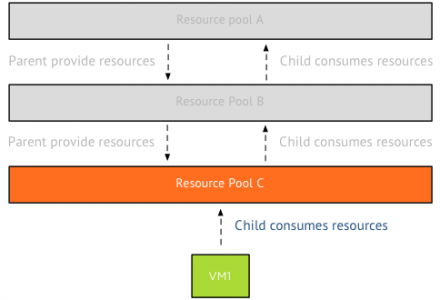

When a virtual machine is placed into a resource pool, the virtual machine becomes a child object of the resource pool. It is the responsibility of the resource pool to provide the resources the virtual machine requires. If a virtual machine is configured with a reservation, then it will request the physical resources from its parent resource pool.

Remember that a reservation guarantees that the resources protected by the reservation will not and cannot be reclaimed by the VMkernel, even during memory pressure. Therefore the reservation of the virtual machine is directed to its parent, and the parent must exclusively provide these resources to the virtual machine. It can only provide these resources from its own pool of protected resources. The resource pool can only distribute the resources it has obtained itself.

Protected or reserved resources?

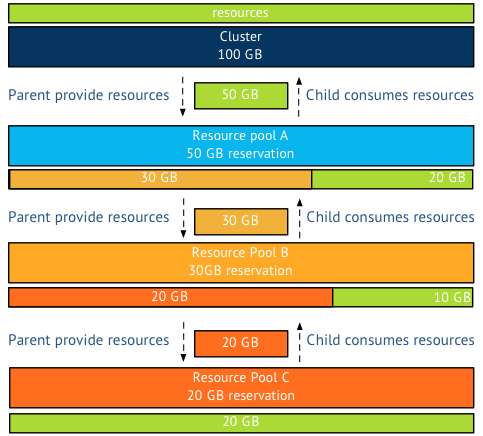

I’m deliberately calling a resource claimed by a reservation a protected resource, as the VMkernel cannot reclaim it. However, when a resource pool is configured with a reservation, it immediately claims this memory from its parent. This goes on all the way up to the cluster level. The cluster is the root resource pool, and all the resources provided by the ESXi hosts are owned by the root resource pool and protected by a reservation. Therefore the cluster – root resource pool – contains and manages the protected pool of resources.

For example, the cluster has 100GB of resources, meaning that the root resource pool consists of 100GB of protected memory. Resource pool A is configured with a 50GB reservation, consuming this 50GB from the root resource pool. Resource pool B, a child of resource pool A, is configured with a 30GB reservation, immediately claiming 30GB of the resources protected by the reservation of resource pool A. This leaves resource pool A with only 20GB of protected resources for itself. Resource pool C is configured with a 20GB memory reservation. Resource pool C claims this from its parent, resource pool B, which is left with 10GB of protected resources for itself.
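The arithmetic of this example can be written down in a few lines. This is only a bookkeeping sketch of the numbers above; the dictionary layout and the function name `unclaimed_gb` are my own and do not correspond to anything in vSphere.

```python
# Reservation configured on each pool, in GB (numbers from the example)
reservations_gb = {"root": 100, "A": 50, "B": 30, "C": 20}

# Child pool -> parent pool
parent_of = {"A": "root", "B": "A", "C": "B"}


def unclaimed_gb(pool: str) -> int:
    """Protected memory a pool has left after its child pools claimed theirs."""
    claimed_by_children = sum(
        reservations_gb[child]
        for child, parent in parent_of.items()
        if parent == pool
    )
    return reservations_gb[pool] - claimed_by_children


for pool in ("root", "A", "B", "C"):
    print(pool, unclaimed_gb(pool))
# root 50
# A 20
# B 10
# C 20
```

Each pool only subtracts the reservations of its direct children, which matches the parent-child relation: A does not care what B hands out to C.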

But what happens if the resource pool runs out of protected resources? Or is not configured with a reservation at all? In other words, if the child objects in the resource pool are configured with reservations that exceed the reservation set on the resource pool, the resource pool needs to request protected resources from its parent. This can only be done if expandable reservation is enabled.

Please note that the resource pool requests protected resources; it will not accept resources that are not protected by a reservation.

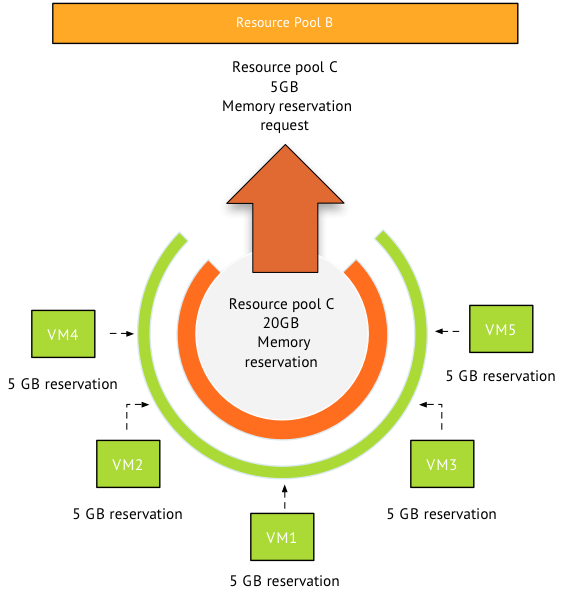

Now in this scenario, the five virtual machines in the resource pool are each configured with a 5GB memory reservation, totaling 25GB. Resource pool C is configured with a 20GB memory reservation. Therefore resource pool C is required to request 5GB of protected memory resources from its parent, resource pool B, on behalf of the virtual machines.
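As a quick check of this scenario, the amount the pool has to request from its parent is simply the part of the child reservations that its own reservation does not cover. The function name `shortfall_gb` is made up for this sketch.

```python
def shortfall_gb(child_reservations_gb, pool_reservation_gb):
    """Protected memory the pool must request from its parent (0 if none)."""
    return max(0, sum(child_reservations_gb) - pool_reservation_gb)


# Five virtual machines with a 5GB reservation each, in a pool with 20GB reserved
print(shortfall_gb([5, 5, 5, 5, 5], 20))  # 5
```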

If resource pool B does not have the protected resources itself, it can request these protected resources from its parent. This can only occur when the resource pool is configured with expandable reservation enabled. The last stop in the cluster is the cluster itself. What can stop this river of requests? Two things: the request for protected resources is stopped by a resource limit or by a disabled expandable reservation. If a resource pool has expandable reservation disabled, it will try to satisfy the reservation itself; if it’s unable to do so, it will deny the reservation request. If a resource pool is set with a limit, the resource pool is limited to that amount of physical resources.

For example, if the parent resource pool has a reservation and a limit of 20GB, the reservation on behalf of its child needs to be satisfied by the protected pool itself; otherwise it will deny the resource request.

Now let’s use a more complex scenario. Resource pool B is configured with expandable reservation enabled and a 30GB reservation. A limit is set to 35GB. Resource pool C is requesting an additional 10GB on top of the 20GB it is already granted. Resource pool B is running two virtual machines with a total reservation of 10GB. This means the protected pool of resource pool B is servicing the 20GB resource request from resource pool C and 10GB for its own virtual machines. Its protected pool is depleted, and the additional 10GB request of resource pool C is denied, as this would raise the protected pool of resource pool B to a total of 40GB of memory, which exceeds the 35GB limit.
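The request chain and its two stop conditions can be modeled in a small sketch. This is a simplified model of the behavior described in this article, not the VMkernel admission control code; the class `ResourcePool`, its fields and the `request` method are hypothetical names, and the model only tracks memory in GB.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ResourcePool:
    name: str
    reservation_gb: float
    expandable: bool = False
    limit_gb: Optional[float] = None
    parent: Optional["ResourcePool"] = None
    claimed_gb: float = field(default=0.0, init=False)   # handed out to children
    borrowed_gb: float = field(default=0.0, init=False)  # obtained via expansion

    def __post_init__(self) -> None:
        # A configured reservation is claimed from the parent immediately.
        if self.parent is not None and not self.parent.request(self.reservation_gb):
            raise ValueError(f"parent cannot back the reservation of {self.name}")

    def request(self, amount_gb: float) -> bool:
        """Try to hand out amount_gb of protected memory to a child object."""
        available = self.reservation_gb + self.borrowed_gb - self.claimed_gb
        shortfall = max(0.0, amount_gb - available)
        if shortfall > 0:
            # Stop condition 1: expandable reservation is disabled
            if not self.expandable or self.parent is None:
                return False
            # Stop condition 2: growing the protected pool would exceed the limit
            grown = self.reservation_gb + self.borrowed_gb + shortfall
            if self.limit_gb is not None and grown > self.limit_gb:
                return False
            # Pass the request for the missing part up to the parent
            if not self.parent.request(shortfall):
                return False
            self.borrowed_gb += shortfall
        self.claimed_gb += amount_gb
        return True


cluster = ResourcePool("cluster", 100)
pool_a = ResourcePool("A", 50, parent=cluster)
pool_b = ResourcePool("B", 30, expandable=True, limit_gb=35, parent=pool_a)
pool_c = ResourcePool("C", 20, expandable=True, parent=pool_b)

pool_b.request(10)         # the two VMs running directly in pool B
pool_c.request(20)         # VMs in pool C using its own 20GB
print(pool_c.request(10))  # False: B would grow to 40GB, above its 35GB limit
```

In this model a request for an additional 5GB instead of 10GB would succeed, because it raises the protected pool of resource pool B to exactly 35GB and resource pool A still has 20GB of protected memory to give.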

Virtual machine memory overhead

Please remember that every virtual machine requires a memory reservation, even if none is configured on the virtual machine itself. To run the virtual machine, a small amount of memory is required by the VMkernel. This is called the virtual machine memory overhead, and it is protected by a reservation. To be able to run a virtual machine inside a resource pool, either expandable reservation should be enabled or a memory reservation should be configured on the resource pool.

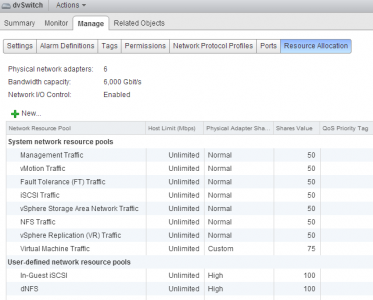

vSphere Storage Area Network Traffic system network resource pool - NetIOC

After posting the Network I/O Control primer I received a couple of questions about the vSAN traffic system network resource pool, such as:

What’s the “vSphere Storage Area Network Traffic” system network resource pool for?

I tried to further investigate by searching practically everywhere, but I didn’t manage to find any detailed description…

The vSphere Storage Area Network Traffic pool is a system network resource pool designed for a future vSphere storage feature that has not been released yet. Unfortunately, Network I/O Control already exposes this system network resource pool in vSphere 5.1.

Although it is defined as a system network resource pool, the vSphere client lists the network pool as user-defined, giving the impression that this pool can be assigned to other streams of traffic. Unfortunately this is not possible. The pool is a system network resource pool and therefore only available to traffic that is specifically tagged by the VMkernel.

I was asked whether this network pool could be assigned to a third-party NIC or an FCoE card. As mentioned, network pools only manage traffic that carries the appropriate tag. Tagging of traffic is only done by the VMkernel, and this functionality is not exposed to the user.

Although it’s exposed in the user interface, this system network pool has no function and it will not have any effect on other network streams. It can be happily ignored.

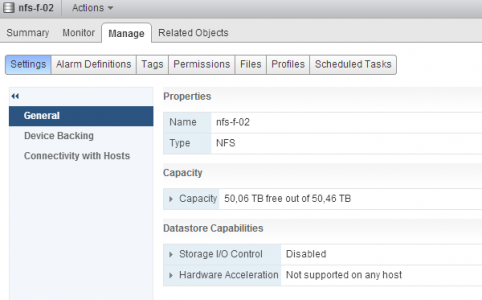

How to enable SIOC stats only mode?

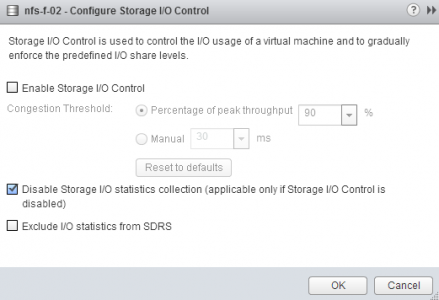

Today on Twitter, David Chadwick, Cormac Hogan and I were discussing SIOC stats only mode. SIOC stats only mode gathers statistics to give you insight into the I/O utilization of the datastore. Please note that stats only mode does not enable the datastore-wide scheduler and will not enforce throttling. Stats only mode is disabled by default due to the (significant) increase of log data in the vCenter database.

SIOC stats only mode is available from vSphere 5.1 and can be enabled via the web client. To enable SIOC stats only mode go to:

- Storage view

- Select the datastore

- Select Manage

- Select Settings

By default both SIOC and SIOC stats only mode are disabled. Click on the edit button at the right side of the screen. Un-tick the check box “Disable Storage I/O statistics collection (applicable only if Storage I/O Control is disabled)”. Click on OK.

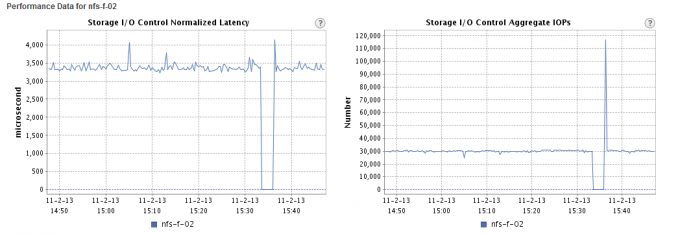

To see if there is any difference, I used a datastore that had SIOC enabled. I disabled SIOC and un-ticked the “Disable Storage I/O statistics collection (applicable only if Storage I/O Control is disabled)” option. I opened up the performance view and selected the “Realtime” time range.

- Storage view

- Select the datastore

- Select Monitor

- Select Performance

- Select “Realtime” Time range

At 15:35 I disabled SIOC, which explains the dip. At 15:36 SIOC stats only mode was enabled, and it took vCenter roughly a minute to start displaying the stats again.

As with all new vSphere 5.1 features, SIOC stats only mode can only be enabled via the vSphere web client.