vSphere 5.0 introduces some fundamental changes to vMotion scalability and performance. One of the most visible is multi-NIC vMotion: vMotion can now use multiple NICs concurrently to decrease the lead time of a vMotion operation. With multi-NIC support, even a single vMotion can leverage all of the configured vMotion NICs, whereas previous ESX releases used only a single NIC.

Allocating more bandwidth to the vMotion process results in faster migration times, which in turn affects the DRS decision model. DRS evaluates the cluster and recommends migrations based on demand and cluster balance state, and it repeats this process every invocation period. To minimize CPU and memory overhead, DRS limits the number of migration recommendations per DRS invocation period. After all, there is no advantage in recommending more migrations than can be completed within a single invocation period. On top of that, demand could change after an invocation period, rendering the previous recommendations obsolete.

vCenter calculates the limit per host based on the average time per migration, the number of simultaneous vMotions and the length of the DRS invocation period (PollPeriodSec).

PollPeriodSec: By default, PollPeriodSec (the length of a DRS invocation period) is 300 seconds, but it can be set to any value between 60 and 3600 seconds. Shortening the interval will likely increase the overhead on vCenter due to additional cluster balance computations. It also reduces the number of allowed vMotions because of the smaller time window, resulting in longer periods of cluster imbalance. Increasing the PollPeriodSec value decreases the frequency of cluster balance computations on vCenter and allows more vMotion operations per cycle. Unfortunately, this may also leave the cluster imbalanced for longer due to the prolonged evaluation cycle.

Estimated total migration time: DRS considers the average migration time observed from previous migrations. The average migration time depends on many variables, such as source and destination host load, active memory in the virtual machine, link speed, available bandwidth and latency of the physical network used by the vMotion process.

Simultaneous vMotions: Similar to vSphere 4.1, vSphere 5 allows you to perform 8 concurrent vMotions on a single host with 10GbE capabilities. For 1GbE, the limit is 4 concurrent vMotions.
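The three inputs above are named, but the exact formula vCenter uses is not. The sketch below assumes a simple model: migrations run in "waves" of the concurrent-vMotion limit, each wave takes the average migration time, and the per-host budget is the number of waves that fit in PollPeriodSec times the wave size. The function name and the 30-second average are hypothetical; treat this as an illustration of the trade-offs, not vCenter's actual calculation.

```python
import math


def max_migrations_per_period(poll_period_sec: int,
                              avg_migration_sec: float,
                              concurrent_vmotions: int) -> int:
    """Hypothetical per-host migration budget for one DRS invocation period.

    Assumes migrations run in waves of `concurrent_vmotions`, each wave taking
    `avg_migration_sec`. This is an illustrative model, not vCenter's formula.
    """
    if not 60 <= poll_period_sec <= 3600:
        raise ValueError("PollPeriodSec must be between 60 and 3600 seconds")
    waves = math.floor(poll_period_sec / avg_migration_sec)
    return waves * concurrent_vmotions


# Default 300 s period, 30 s average vMotion, 10GbE host (8 concurrent):
print(max_migrations_per_period(300, 30, 8))  # 80
# Faster migrations (e.g. more vMotion bandwidth) raise the budget:
print(max_migrations_per_period(300, 15, 8))  # 160
# A shorter period leaves room for fewer migrations per pass:
print(max_migrations_per_period(60, 30, 8))   # 16
```

Under this model, both halving the average migration time and lengthening PollPeriodSec raise the budget, which matches the trade-offs described above.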

Design considerations

When designing a virtual infrastructure that leverages converged networking or Quality of Service to impose bandwidth limits, remember that vCenter determines the vMotion limits based on the link speed reported by the physical NIC of the vMotion uplink. In other words, if the physical NIC reports a link speed of at least 10GbE, vCenter allows 8 concurrent vMotions, but if the physical NIC reports less than 10GbE and at least 1GbE, vCenter allows a maximum of 4 concurrent vMotions on that host.

For example, HP Flex technology sets a hard limit on the FlexNICs, so the reported link speed is equal to or less than the bandwidth configured at the Flex Virtual Connect level. I’ve come across many Flex environments configured with more than 1Gb of bandwidth, ranging from 2Gb to 8Gb. Although these configurations offer more bandwidth per vMotion process, they do not increase the number of concurrent vMotions.
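The link-speed thresholds described above can be written as a small lookup. In this sketch, the function name is hypothetical and so is the choice to raise an error below 1GbE, since that case is not covered here. It shows why every FlexNIC carved out at 2 to 8Gb still lands in the 4-vMotion bucket.

```python
def max_concurrent_vmotions(reported_link_gbps: float) -> int:
    """Concurrent vMotion limit per host, keyed on the reported uplink speed.

    Thresholds follow the text: at least 10GbE -> 8, at least 1GbE -> 4.
    Rejecting slower links is an assumption of this sketch.
    """
    if reported_link_gbps >= 10:
        return 8
    if reported_link_gbps >= 1:
        return 4
    raise ValueError("link speeds below 1GbE are not covered by this sketch")


# FlexNICs report their configured bandwidth, not the 10Gb of the physical port.
for flexnic_gbps in (2, 4, 8, 10):
    print(f"{flexnic_gbps}Gb FlexNIC -> {max_concurrent_vmotions(flexnic_gbps)} concurrent vMotions")
```

Only a FlexNIC configured for the full 10Gb unlocks 8 concurrent vMotions. Anything in between buys bandwidth per vMotion but no additional concurrency.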

Therefore, when designing a DRS cluster, take into account the capabilities of vMotion and how vCenter determines the number of concurrent vMotion operations. By providing enough bandwidth, the cluster can reach a balanced state more quickly, resulting in better resource allocation (performance) for the virtual machines.

**disclaimer: this article contains out-takes of our book: vSphere 5 Clustering Technical Deepdive**

Restart vCenter results in DRS load balancing

Recently I had to troubleshoot an environment that appeared to have a DRS load-balancing problem. Every time a host was brought out of maintenance mode, DRS didn’t migrate virtual machines to the empty host. Eventually virtual machines were migrated to the empty host, but only after a couple of hours had passed. However, after a restart of vCenter, DRS immediately started migrating virtual machines to the empty host.

Restarting vCenter removes the cached historical information of the vMotion impact. vMotion impact information is a part of the Cost-Benefit Risk analysis. DRS uses this Cost-Benefit Metric to determine the return on investment of a migration. By comparing the cost, benefit and risks of each migration, DRS tries to avoid migrations with insufficient improvement on the load balance of the cluster.

When the historical information is removed, a big part of the cost segment is lost. This leads to a more positive ROI calculation, which in turn results in a more “aggressive” load-balancing operation.
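The effect described above can be illustrated with a deliberately simplified toy model. The real Cost-Benefit-Risk metric is internal to DRS, and this sketch omits the risk term entirely. In the sketch, the net gain of a move is its benefit minus a cost estimated from cached vMotion-impact history. The names `migration_net_gain` and `default_cost`, and all numbers, are hypothetical. An empty history models the state right after a vCenter restart.

```python
from statistics import mean


def migration_net_gain(benefit: float, observed_costs: list[float],
                       default_cost: float = 0.0) -> float:
    """Toy score: benefit minus the cost estimated from cached history.

    `observed_costs` stands in for the cached vMotion-impact history; an empty
    list models the state after a vCenter restart. Not DRS internals.
    """
    cost = mean(observed_costs) if observed_costs else default_cost
    return benefit - cost


candidates = [3.0, 5.0, 8.0]  # hypothetical benefit of three candidate moves
history = [6.0, 7.0, 5.0]     # hypothetical cached impact of earlier vMotions

before_restart = [b for b in candidates if migration_net_gain(b, history) > 0]
after_restart = [b for b in candidates if migration_net_gain(b, []) > 0]
print(before_restart)  # [8.0]
print(after_restart)   # [3.0, 5.0, 8.0]
```

With the history gone, every candidate clears the bar, which mirrors the sudden burst of migrations seen after the restart.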

Impact of oversized virtual machines part 2

Part 1 of this series of posts on the impact of oversized virtual machines reviewed NUMA architecture, memory overhead reservation and share levels. Part 2 zooms in on the impact of memory overhead reservation and share levels on HA and DRS.

Impact of memory overhead reservation on HA Slot size

The VMware High Availability admission control policy “Host failures cluster tolerates” calculates a slot size to determine the maximum number of virtual machines that can be active in the cluster without violating failover capacity. This admission control policy determines the HA cluster slot size from the largest CPU reservation and the largest memory reservation plus its memory overhead reservation. If the virtual machine with the largest reservation (which could be an appropriately sized reservation) is oversized, its memory overhead reservation can still substantially impact the slot size.
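The slot calculation described above can be sketched as follows. The overhead figures are illustrative, not measured values. The host size is hypothetical, and default minimum CPU slot values for VMs without reservations are left out. Both "app" VMs reserve the same 2GB; only the overhead of the oversized configuration differs.

```python
from dataclasses import dataclass


@dataclass
class VM:
    name: str
    cpu_reservation_mhz: int
    mem_reservation_mb: int
    mem_overhead_mb: int  # overhead reservation; grows with configured vCPUs and memory


def slot_size(vms: list[VM]) -> tuple[int, int]:
    """Slot = largest CPU reservation, and largest memory reservation plus its overhead."""
    cpu = max(vm.cpu_reservation_mhz for vm in vms)
    mem = max(vm.mem_reservation_mb + vm.mem_overhead_mb for vm in vms)
    return cpu, mem


def slots_per_host(host_cpu_mhz: int, host_mem_mb: int, slot: tuple[int, int]) -> int:
    cpu, mem = slot
    return min(host_cpu_mhz // cpu, host_mem_mb // mem)


# Same 2GB reservation; the oversized "app" VM carries a larger (illustrative) overhead.
right_sized = [VM("web", 500, 0, 150), VM("app", 1000, 2048, 200)]
oversized = [VM("web", 500, 0, 150), VM("app", 1000, 2048, 1000)]

for label, vms in (("right-sized", right_sized), ("oversized", oversized)):
    slot = slot_size(vms)
    print(label, slot, slots_per_host(40000, 65536, slot))
# right-sized (1000, 2248) 29
# oversized (1000, 3048) 21
```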

The HA admission control policy “Percentage of Cluster Resources Reserved” calculates the memory component of its mechanism by summing the reservation plus the memory overhead of each virtual machine. The memory overhead reservation can therefore have an even bigger impact on admission control under this policy than under the “Host failures cluster tolerates” policy.
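The following sketch shows the summation described above and why it amplifies the effect: every virtual machine's overhead counts, not only that of the largest one. The cluster size, the VM count and the overhead values are illustrative assumptions, and only the memory component is modeled.

```python
def failover_capacity_pct(vms: list[tuple[int, int]], cluster_mem_mb: int) -> float:
    """Memory failover capacity under 'Percentage of Cluster Resources Reserved'.

    Each entry is (reservation MB, overhead MB); every VM contributes both,
    so overhead counts once per VM rather than only for the largest VM.
    """
    reserved = sum(reservation + overhead for reservation, overhead in vms)
    return 100 * (cluster_mem_mb - reserved) / cluster_mem_mb


cluster_mem_mb = 4 * 65536         # four hosts with 64GB each (illustrative)
right_sized = [(1024, 200)] * 20   # 20 VMs, illustrative overhead values
oversized = [(1024, 1000)] * 20

print(round(failover_capacity_pct(right_sized, cluster_mem_mb), 1))  # 90.7
print(round(failover_capacity_pct(oversized, cluster_mem_mb), 1))    # 84.6
```

Admission control compares this remaining capacity against the configured percentage, so oversized virtual machines eat into the headroom even when their reservations are unchanged.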

DRS initial placement

DRS uses a worst-case scenario during initial placement. Because DRS cannot determine the resource demand of a virtual machine that is not running, it assumes that both the memory demand and the CPU demand are equal to the configured size. Oversizing a virtual machine therefore reduces the options for finding a suitable host for it. If DRS cannot guarantee that the full 100% of the resources provisioned for this virtual machine can be used, it will vMotion virtual machines away so that it can power on this single virtual machine. If there are not enough resources available, DRS will not allow the virtual machine to be powered on.
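The worst-case assumption above can be sketched as a host filter. Modeling CPU demand as vCPUs times a per-vCPU MHz value is an assumption of this sketch. The host names and free-capacity numbers are hypothetical.

```python
def hosts_for_power_on(free: dict[str, tuple[int, int]], vcpus: int,
                       mhz_per_vcpu: int, mem_mb: int) -> list[str]:
    """Worst-case initial placement: a powered-off VM is assumed to demand its
    full configured CPU (vcpus * mhz_per_vcpu) and its full configured memory."""
    need_cpu = vcpus * mhz_per_vcpu
    return [host for host, (cpu_mhz, mem) in free.items()
            if cpu_mhz >= need_cpu and mem >= mem_mb]


free = {  # unused capacity per host as (MHz, MB); illustrative numbers
    "esx01": (12000, 24576),
    "esx02": (6000, 49152),
    "esx03": (20000, 16384),
}
print(hosts_for_power_on(free, 2, 2500, 8192))   # ['esx01', 'esx02', 'esx03']
print(hosts_for_power_on(free, 8, 2500, 32768))  # []
```

An empty result corresponds to the case where DRS must first vMotion other virtual machines away or refuse the power-on.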

Shares and resource pools

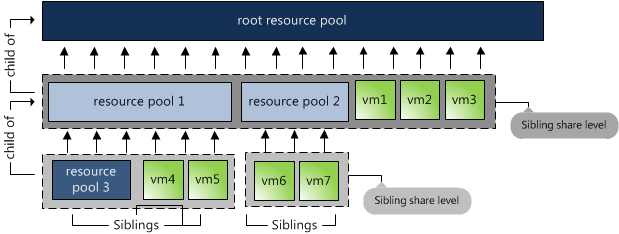

When a virtual machine is placed inside a resource pool, its shares are relative to the other virtual machines (and resource pools) inside the pool. Shares are relative to all the other components sharing the same parent; an easier way to put it is to call it the sibling share level. Therefore, numeric share values are not directly comparable across pools, because they are children of different parents.

By default, a resource pool is configured with the same number of shares as a 4 vCPU, 16GB virtual machine. As mentioned in part 1, the shares of a virtual machine are relative to its configured size, implicitly stating that size equals priority.

Now let’s take another look at the image above. The 3 virtual machines are reparented to the cluster root, next to resource pools 1 and 2. Suppose they are all 4 vCPU 16GB machines: their share values are interpreted in the context of the root pool, and each of them receives the same priority as resource pool 1 and resource pool 2. This is not only wrong but also dangerous in a denial-of-service sense, as a virtual machine running on the same level as resource pools can suddenly find itself entitled to nearly all cluster resources.
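The sibling arithmetic above can be made concrete for CPU under contention. The sketch assumes the "Normal" defaults: 1000 CPU shares per vCPU for a virtual machine, and 4000 CPU shares for a resource pool, the same as a 4 vCPU VM. It ignores reservations, limits and actual demand. The ten 1 vCPU VMs inside RP1 are hypothetical.

```python
def vm_cpu_shares(vcpus: int) -> int:
    return 1000 * vcpus  # "Normal" CPU shares: 1000 per vCPU


RP_NORMAL_CPU_SHARES = 4000  # "Normal" resource pool = same as a 4 vCPU VM


def entitlements(siblings: dict[str, int], parent_fraction: float = 1.0) -> dict[str, float]:
    """Split the parent's slice among siblings in proportion to their shares."""
    total = sum(siblings.values())
    return {name: parent_fraction * shares / total for name, shares in siblings.items()}


# Cluster root: two resource pools next to three reparented 4 vCPU VMs.
root = entitlements({
    "RP1": RP_NORMAL_CPU_SHARES, "RP2": RP_NORMAL_CPU_SHARES,
    "VM1": vm_cpu_shares(4), "VM2": vm_cpu_shares(4), "VM3": vm_cpu_shares(4),
})
# Ten 1 vCPU VMs inside RP1 divide RP1's slice among themselves.
rp1 = entitlements({f"rp1-vm{i}": vm_cpu_shares(1) for i in range(10)},
                   parent_fraction=root["RP1"])

print(f"VM1 at root: {root['VM1']:.0%}")       # VM1 at root: 20%
print(f"VM inside RP1: {rp1['rp1-vm0']:.0%}")  # VM inside RP1: 2%
```

Each reparented virtual machine gets as large a slice as an entire resource pool, ten times what a virtual machine inside RP1 receives in this example.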

Because of the default share distribution process, we always recommend against placing virtual machines on the same level as resource pools. Unfortunately, a virtual machine might be reparented to the cluster root level when it is manually migrated using the GUI, because the current workflow defaults to the cluster root level instead of its current resource pool. For this reason, it’s recommended to increase the number of shares of the resource pool to reflect its priority level. More info about shares on resource pools can be found in Duncan’s post on yellow-bricks.com.

Go to Part 3: Impact of oversized virtual machine.

Enhanced vMotion Compatibility

Enhanced vMotion Compatibility (EVC) has been available for a while now, but adoption seems slow. Recently VMguru.nl featured an article “Challenge: vCenter, EVC and dvSwitches” which illustrates another case where the customer did not enable EVC when creating the cluster. There seems to be a lot of misunderstanding about EVC and the impact it has on the cluster when enabled.

What is EVC?

VMware Enhanced vMotion Compatibility (EVC) facilitates vMotion between different CPU generations through the use of Intel FlexMigration and AMD-V Extended Migration technologies. When enabled for a cluster, EVC ensures that all CPUs within the cluster are vMotion compatible.

What is the benefit of EVC?

Because EVC allows you to migrate virtual machines between different generations of CPUs, you can mix older and newer server generations in the same cluster and migrate virtual machines with vMotion between these hosts. This makes adding new hardware to your existing infrastructure easier and helps extend the value of your existing hosts.

EVC forces newer processors to behave like old processors

Well, this is not entirely true; EVC creates a baseline so that all the hosts in the cluster advertise the same feature set. The EVC baseline does not disable the features, but indicates that a specific feature is not available to the virtual machine.

Now, it is crucial to understand that EVC only focuses on CPU features, such as SSE or AMD 3DNow! instructions, and not on CPU speed or cache levels. Hardware virtualization optimization features such as Intel VT FlexMigration or AMD-V Extended Migration, and Memory Management Unit virtualization such as Intel EPT or AMD RVI, are still available to the VMkernel even if EVC is enabled. EVC only governs the availability of features and instructions of the existing CPUs in the cluster, for example SIMD instructions such as the SSE instruction set.

Let’s take a closer look. When you select an EVC baseline, EVC applies the baseline feature set of the selected CPU generation and exposes those specific features. If an ESX host joins the cluster, only the CPU instructions that are new and unique to that specific CPU generation are hidden from the virtual machines. For example, if the cluster is configured with an Intel Xeon Core i7 baseline, it makes the standard Intel Xeon Core 2 features plus the SSE4.1, SSE4.2, Popcount and RDTSCP features available to all the virtual machines. When an ESX host with a Westmere (32nm) CPU joins the cluster, its additional CPU instruction sets, such as AES/AESNI and PCLMULQDQ, are suppressed.
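The baseline behavior above can be modeled as set arithmetic. A host must provide at least the baseline, and whatever it offers beyond the baseline is hidden from virtual machines (not disabled). The feature sets here are heavily abbreviated and illustrative; real EVC baselines cover many more CPUID flags. The names `EVC_BASELINES` and `hidden_features` are hypothetical.

```python
# Abbreviated, illustrative feature sets; real baselines contain many more CPUID flags.
CORE2_FEATURES = {"SSE", "SSE2", "SSE3", "SSSE3"}
EVC_BASELINES = {
    "intel-core2": CORE2_FEATURES,
    "intel-corei7": CORE2_FEATURES | {"SSE4.1", "SSE4.2", "POPCNT", "RDTSCP"},
}


def hidden_features(host_features: set[str], baseline: set[str]) -> set[str]:
    """Features EVC hides from VMs on a joining host.

    A host missing part of the baseline cannot join the cluster.
    """
    missing = baseline - host_features
    if missing:
        raise ValueError(f"host lacks baseline features: {sorted(missing)}")
    return host_features - baseline


westmere = EVC_BASELINES["intel-corei7"] | {"AES-NI", "PCLMULQDQ"}
print(sorted(hidden_features(westmere, EVC_BASELINES["intel-corei7"])))
# ['AES-NI', 'PCLMULQDQ']
```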

As mentioned in the various VMware KB articles, it is possible, but unlikely, that an application running in a virtual machine would benefit from these features, and that the application performance would be lower as the result of using an EVC mode that does not include the features.

DRS-FT integration and building block approach

When EVC is enabled in vSphere 4.1, DRS is able to select an appropriate ESX host for placing FT-enabled virtual machines and to load-balance these virtual machines. This results in a more balanced cluster, which is likely to have a positive effect on the performance of the virtual machines. More info can be found in the article “DRS-FT integration”.

Equally interesting is the building block approach: by enabling EVC, architects can use a predefined set of hosts and resources and gradually expand the ESX clusters. Not every company buys computing power by the truckload; with EVC enabled, clusters can grow by adding ESX hosts with new(er) processor versions.

One potential caveat is mixing hardware of different major generations in the same cluster. As Irfan Ahmad so eloquently put it, “not all MHz are created equal”. Newer major generations offer better performance per CPU clock cycle. A virtual machine might get 500 MHz on one ESX host, and after migration to another ESX host, those 500 MHz might be equivalent to only 300 MHz on the original host in terms of application-visible performance. This increases the complexity of troubleshooting performance problems.

Recommendations?

Performance loss is unlikely when enabling EVC. Enabling EVC adds support for DRS-FT integration and gives organizations more flexibility to expand clusters over longer periods of time. I therefore recommend enabling EVC on clusters. But will it be a panacea for the stream of new major CPU generation releases? Unfortunately not! One possibility is to treat the newest hardware (major releases) as a higher service level than the older hardware and create new clusters for it.

Should or Must VM-Host affinity rules?

VMware vSphere 4.1 introduces a new affinity rule, called “Virtual Machines to Hosts” (VM-Host), which I described in the article “VM to Host affinity rule”. A short recap: VM-Host affinity rules are available in two flavors:

- Must run rules (Mandatory)

- Should run rules (Preferential)

These two options raise a new question for the administrator/architect: when is the mandatory rule needed, and when is a preferential rule desirable?

I think it all depends on the risks and limitations introduced by each rule. Let’s review the differences between the rules, the behavior of each rule and the impact they have on cluster services and maintenance mode.

What is the difference between a mandatory and a preferential rule?

- A mandatory rule limits HA, DRS and the user in such a way that a virtual machine may not be powered on or moved to an ESX host that does not belong to the associated DRS host group.

- A preferential rule defines a preference for DRS to run the virtual machine on a host specified in the associated DRS host group.

How does HA treat preferential rules?

VMware High Availability respects mandatory rules when placing virtual machines after a host failover: it can only place virtual machines on the ESX hosts that are specified in the DRS host group. DRS does not communicate the existence of preferential rules to HA, so HA is not aware of these rules. As a result, HA cannot prevent placing the virtual machine on an ESX host that is not part of the DRS host group, thereby violating the affinity rule. DRS will correct this violation during the next invocation.
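HA's view of the two rule types can be sketched as a host filter. Host capacity is ignored. The VM names, host names and the `RULES` table are hypothetical, and so is the function name.

```python
# vm -> (DRS host group, rule type); all names are hypothetical
RULES = {
    "db01": ({"esx01", "esx02"}, "must"),
    "web01": ({"esx01"}, "should"),
}


def ha_restart_hosts(vm: str, surviving_hosts: list[str], rules=RULES) -> list[str]:
    """Hosts HA may restart `vm` on after a host failure (capacity not modeled)."""
    group, kind = rules.get(vm, (None, None))
    if kind == "must":
        # Mandatory rules are respected: only hosts in the DRS host group qualify.
        return [h for h in surviving_hosts if h in group]
    # Preferential rules are not communicated to HA, so any surviving host qualifies;
    # DRS corrects the resulting violation during its next invocation.
    return list(surviving_hosts)


surviving = ["esx02", "esx03"]               # esx01 has failed
print(ha_restart_hosts("db01", surviving))   # ['esx02']
print(ha_restart_hosts("web01", surviving))  # ['esx02', 'esx03']
```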

How does DRS treat preferential rules?

During a DRS invocation, DRS runs the algorithm with preferential rules treated as mandatory rules and evaluates the result. If the result contains violations of cluster constraints, such as over-reserving a host or over-utilizing a host to 100% CPU or memory utilization, the preferential rules are dropped and the algorithm is run again.
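The two-pass behavior above can be sketched with a stand-in placement algorithm. The greedy first-fit `place` function is hypothetical and is not the DRS algorithm. "Cannot fit a VM within host capacity" plays the role of a cluster constraint violation. If a VM has both rule types, the mandatory rule wins in this sketch.

```python
from typing import Optional


def place(vms: dict[str, int], capacity: dict[str, int],
          rules: dict[str, set[str]]) -> Optional[dict[str, str]]:
    """Greedy first-fit stand-in; None means some VM would overload a host."""
    free = dict(capacity)
    placement = {}
    for vm, demand in vms.items():
        allowed = rules.get(vm, set(capacity))
        host = next((h for h in sorted(allowed) if free[h] >= demand), None)
        if host is None:
            return None
        free[host] -= demand
        placement[vm] = host
    return placement


def drs_invocation(vms, capacity, must, should):
    # Pass 1: treat preferential rules as if they were mandatory.
    result = place(vms, capacity, {**should, **must})
    if result is not None:
        return result, "should rules honored"
    # Pass 2: drop the preferential rules and rerun with mandatory rules only.
    return place(vms, capacity, must), "should rules dropped"


capacity = {"esx01": 100, "esx02": 100}             # percent of a host, illustrative
should = {"app1": {"esx01"}, "app2": {"esx01"}}
print(drs_invocation({"app1": 30, "app2": 30}, capacity, {}, should))
# ({'app1': 'esx01', 'app2': 'esx01'}, 'should rules honored')
print(drs_invocation({"app1": 60, "app2": 60}, capacity, {}, should))
# ({'app1': 'esx01', 'app2': 'esx02'}, 'should rules dropped')
```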

Limitations

In essence, a VM-Host affinity rule restricts the number of hosts on which the virtual machines may be powered on or to which they may migrate. Setting VM-Host affinity rules can limit load balancing and evacuation for maintenance mode.

Load-balancing limitations

A certain level of risk is introduced when mandatory VM-Host affinity rules are used. Because these rules only allow virtual machines to start on the ESX hosts associated in the DRS host group, they restrict HA’s ability to select a compatible ESX host to place the virtual machine.

In addition, mandatory VM-Host affinity rules reduce the virtual machine placement options DRS can use when defragmenting the cluster. When using the HA “Percentage based” admission control policy, resource fragmentation could occur.

During a failover, HA requests a defragmentation from DRS. DRS tries to migrate virtual machines to regain enough unfragmented resources to fit and start all virtual machines. Because DRS is allowed to use “multi-hop” migrations, DRS calculations usually create a “chain” of migrations during defragmentation of a host. For example: VM-A migrates to host 2 and VM-B migrates from host 2 to host 3. Mandatory rules narrow the playing field by allowing virtual machines to move only within their associated DRS host group. This reduces the overall options for moving virtual machines around the cluster, regardless of whether they are associated with VM-Host affinity rules.
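The multi-hop example above can be reproduced with a small search. This is a hypothetical two-hop search, not the DRS defragmentation algorithm. It looks for at most two migrations that free enough memory on a target host. `allowed[vm]` is either every host or, under a mandatory rule, the VM's DRS host group. All sizes are illustrative.

```python
from typing import Optional


def free_mb(host, capacity, placement, sizes):
    return capacity[host] - sum(sizes[vm] for vm, h in placement.items() if h == host)


def find_defrag_chain(capacity, placement, sizes, allowed, target, need) -> Optional[list]:
    """Return up to two (vm, source, destination) moves, in execution order,
    that free `need` MB on `target`; [] if nothing is needed, None if no chain works."""
    if free_mb(target, capacity, placement, sizes) >= need:
        return []
    for vm_a in sorted(v for v, h in placement.items() if h == target):
        for dst in sorted(allowed[vm_a] - {target}):
            # One hop: move vm_a straight off the target host.
            moved = {**placement, vm_a: dst}
            if (free_mb(dst, capacity, placement, sizes) >= sizes[vm_a]
                    and free_mb(target, capacity, moved, sizes) >= need):
                return [(vm_a, target, dst)]
            # Two hops: first make room on dst by moving one of its VMs elsewhere.
            for vm_b in sorted(v for v, h in placement.items() if h == dst):
                for dst2 in sorted(allowed[vm_b] - {dst, target}):
                    if free_mb(dst2, capacity, placement, sizes) < sizes[vm_b]:
                        continue
                    step1 = {**placement, vm_b: dst2}
                    step2 = {**step1, vm_a: dst}
                    if (free_mb(dst, capacity, step1, sizes) >= sizes[vm_a]
                            and free_mb(target, capacity, step2, sizes) >= need):
                        return [(vm_b, dst, dst2), (vm_a, target, dst)]
    return None


capacity = {"host1": 8192, "host2": 8192, "host3": 8192}  # MB, illustrative
sizes = {"VM-A": 4096, "VM-C": 4096, "VM-B": 2048, "VM-D": 4096, "VM-E": 6144}
placement = {"VM-A": "host1", "VM-C": "host1", "VM-B": "host2",
             "VM-D": "host2", "VM-E": "host3"}
no_rules = {vm: set(capacity) for vm in sizes}
chain = find_defrag_chain(capacity, placement, sizes, no_rules, "host1", 4096)
print(chain)  # [('VM-B', 'host2', 'host3'), ('VM-A', 'host1', 'host2')]

# A mandatory rule pinning VM-B to host2 removes the only workable chain.
must_rule = {**no_rules, "VM-B": {"host2"}}
chain_with_rule = find_defrag_chain(capacity, placement, sizes, must_rule, "host1", 4096)
print(chain_with_rule)  # None
```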

Maintenance mode

DRS will not violate CPU and memory reservations to obey mandatory VM-Host affinity rules, and it will not violate mandatory rules to honor reservations. During placement, both requirements must be met, so DRS will only place a virtual machine if both its reservation and the mandatory rule can be satisfied. This behavior limits the ability of DRS to select a suitable compatible host to place virtual machines during automated maintenance mode evacuations.
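The "both requirements must be met" rule above can be sketched as an evacuation planner. Only memory reservations are modeled. The hosts, VMs and numbers are hypothetical, and the greedy host choice is an assumption of this sketch. Note that db01 stays stuck even though esx03 has plenty of unreserved memory.

```python
def plan_evacuation(host, placement, reservations_mb, capacity_mb, must_groups):
    """Pick a destination for every VM on `host` that honors BOTH its memory
    reservation and any mandatory VM-Host rule; neither constraint is relaxed."""
    unreserved = {
        h: capacity_mb[h] - sum(reservations_mb[v] for v, ph in placement.items() if ph == h)
        for h in capacity_mb
    }
    plan, stuck = {}, []
    for vm in sorted(v for v, ph in placement.items() if ph == host):
        allowed = must_groups.get(vm, set(capacity_mb)) - {host}
        dst = next((h for h in sorted(allowed) if unreserved[h] >= reservations_mb[vm]), None)
        if dst is None:
            stuck.append(vm)  # maintenance mode cannot complete automatically
        else:
            plan[vm] = dst
            unreserved[dst] -= reservations_mb[vm]
    return plan, stuck


capacity_mb = {"esx01": 32768, "esx02": 32768, "esx03": 32768}
placement = {"db01": "esx01", "web01": "esx01", "app01": "esx02", "app02": "esx03"}
reservations_mb = {"db01": 16384, "web01": 2048, "app01": 24576, "app02": 4096}
must_groups = {"db01": {"esx01", "esx02"}}  # mandatory VM-Host rule

result = plan_evacuation("esx01", placement, reservations_mb, capacity_mb, must_groups)
print(result)  # ({'web01': 'esx02'}, ['db01'])
```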

Conclusion

Well, it’s up to you to decide which rule is appropriate for separating workloads across the ESX hosts in the cluster. By knowing the impact and limitations introduced by mandatory rules, you can make an informed decision.