I’m currently reviewing the design of a new virtual infrastructure (VI). The VI uses multiple 10Gb links to connect to a very large HP LeftHand SAN. I’m more of a Fibre Channel guy, but I believe this solution will smoke most midrange FC SANs. I cannot wait to deploy the VI on the SAN, but first I need to get used to some differences between iSCSI and Fibre Channel configurations.


Increasing the queue depth?

When it comes to IO performance in the virtual infrastructure, one of the most recommended “tweaks” is changing the queue depth (QD). But most people forget that the QD parameter is just a small part of the IO path. The IO path consists of layers of hardware and software components, and each of these components can have a huge impact on IO performance. The best results are achieved when the whole system is analysed, not just the ESX host alone.

To be honest, I believe that most environments will profit more from a balanced storage design than from adjusting the default values. But if the workload is balanced between the storage controllers and IO queuing still occurs, adjusting some parameters might increase IO performance.

Merely increasing the parameters can cause high latency, up to the point of major slowdowns. Several factors need to be taken into consideration.

LUN queue depth

The LUN queue depth determines how many commands the HBA is willing to accept and process per LUN. If a single virtual machine is issuing IO, the QD setting applies, but when multiple VMs are simultaneously issuing IOs to the LUN, the Disk.SchedNumReqOutstanding (DSNRO) value becomes the leading parameter.
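The rule above can be expressed as a small Python sketch. It is a simplified model based only on this paragraph: the function name effective_lun_queue_limit is hypothetical, and it ignores adaptive throttling and everything else in the IO path.

```python
def effective_lun_queue_limit(queue_depth: int, dsnro: int, active_vms: int) -> int:
    """Maximum outstanding commands per LUN (simplified model, hypothetical helper)."""
    if active_vms < 1:
        raise ValueError("at least one VM must be issuing IO")
    if active_vms == 1:
        # A single VM is limited only by the HBA LUN queue depth.
        return queue_depth
    # With multiple VMs issuing IO, DSNRO caps the LUN, and the result
    # can never exceed the HBA LUN queue depth itself.
    return min(queue_depth, dsnro)


print(effective_lun_queue_limit(64, 32, 1))  # one VM: QD applies
print(effective_lun_queue_limit(64, 32, 4))  # several VMs: DSNRO applies
```

This also shows why raising only the QD helps a single VM but not a LUN shared by several VMs.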

Increasing the QD value without changing the Disk.SchedNumReqOutstanding setting is only beneficial when one VM is issuing commands. It is considered best practice to use the same value for the QD and DSNRO parameters!

Read Duncan’s excellent article about the DSNRO setting.

QLogic Execution Throttle

QLogic has a firmware setting called “Execution Throttle”, which specifies the maximum number of simultaneous commands the adapter will send. The default value is 16. Increasing the value above 64 has little to no effect, because the maximum parallel execution of SCSI operations is 64.

(Page 170 of ESX 3.5 VMware SAN System Design and Deployment Guide)

If the QD is increased, the execution throttle and DSNRO must be set to similar values. However, to calculate the proper QD, the fan-in ratio of the storage port needs to be determined first.
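A quick sanity check of the three settings could look like the following Python sketch. It assumes the rules stated in this post (align QD, execution throttle and DSNRO; values above 64 bring little benefit); the function check_queue_settings is hypothetical and does not read any real HBA or ESX configuration.

```python
from typing import List

# Per the text: the maximum parallel execution of SCSI operations is 64.
MAX_PARALLEL_SCSI_OPS = 64


def check_queue_settings(queue_depth: int, execution_throttle: int, dsnro: int) -> List[str]:
    """Return human-readable warnings for a hypothetical QD/throttle/DSNRO combination."""
    warnings = []
    if execution_throttle > MAX_PARALLEL_SCSI_OPS:
        warnings.append(
            f"execution throttle {execution_throttle} exceeds {MAX_PARALLEL_SCSI_OPS}; "
            "the extra headroom has little to no effect"
        )
    # Best practice from the text: use the same value for all three parameters.
    if len({queue_depth, execution_throttle, dsnro}) > 1:
        warnings.append("QD, execution throttle and DSNRO differ; consider aligning them")
    return warnings


print(check_queue_settings(32, 32, 32))
print(check_queue_settings(64, 128, 32))
```

The check deliberately says nothing about whether the value is right for the array; that still depends on the fan-in calculation.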

Target Port Queue Depth

A queue exists on the storage array controller port as well; this is called the “Target Port Queue Depth”. Modern midrange storage arrays, like most EMC and HP arrays, can handle around 2048 outstanding IOs. 2048 IOs sounds like a lot, but most of the time multiple servers communicate with the storage controller at the same time. Because a port can only service one request at a time, additional requests are placed in a queue, and when the storage controller port receives more than 2048 IO requests, the queue gets flooded. When the queue is full, a status called QFULL, the storage controller issues an IO throttling command to the host to suspend further requests until space in the queue becomes available. The ESX host accepts the IO throttling command and decreases the LUN queue depth to the minimum value, which is 1!
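The QFULL condition can be modelled in a few lines of Python. This is a simplified sketch of the behaviour described above, assuming a 2048-entry target port queue; the function names are hypothetical.

```python
from typing import Iterable

# Typical midrange target port queue depth mentioned in the text.
DEFAULT_TARGET_PORT_QUEUE_DEPTH = 2048


def target_port_status(outstanding_per_host: Iterable[int],
                       target_port_queue_depth: int = DEFAULT_TARGET_PORT_QUEUE_DEPTH) -> str:
    """Return "QFULL" when all hosts together exceed the port queue, else "OK"."""
    return "QFULL" if sum(outstanding_per_host) > target_port_queue_depth else "OK"


def lun_queue_depth_after(status: str, current_lun_qd: int) -> int:
    """On QFULL the ESX host drops the LUN queue depth to the minimum value of 1."""
    return 1 if status == "QFULL" else current_lun_qd


status = target_port_status([1024, 1025])  # two hosts, 2049 IOs in total
print(status, lun_queue_depth_after(status, 32))
```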

Every 2 seconds, the VMkernel checks whether the QFULL condition has been resolved. If it has, the VMkernel slowly increases the LUN queue depth back to its normal value; this can take up to 60 seconds.
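To get a feel for the recovery period, the following Python sketch polls every 2 seconds and ramps the LUN queue depth back up. The text does not say how much the VMkernel increases the queue depth per check, so the linear step size here is purely a hypothetical assumption, and recovery_timeline is an illustrative helper, not VMkernel code.

```python
from typing import List, Tuple


def recovery_timeline(normal_qd: int, step: int, poll_interval_s: int = 2) -> List[Tuple[int, int]]:
    """(seconds, LUN QD) pairs after QFULL clears, assuming a HYPOTHETICAL linear ramp."""
    if normal_qd < 1 or step < 1 or poll_interval_s < 1:
        raise ValueError("normal_qd, step and poll_interval_s must be positive")
    t, qd = 0, 1  # QFULL has just dropped the LUN queue depth to 1
    timeline = [(t, qd)]
    while qd < normal_qd:
        t += poll_interval_s
        qd = min(qd + step, normal_qd)  # assumed increment per check
        timeline.append((t, qd))
    return timeline


print(recovery_timeline(32, 2)[-1])  # time at which QD 32 is reached under this assumption
```

Whatever the real ramp looks like, every QFULL event costs throughput for tens of seconds, which is why flooding the target port queue should be avoided.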

Calculating the queue depth/execution throttle value

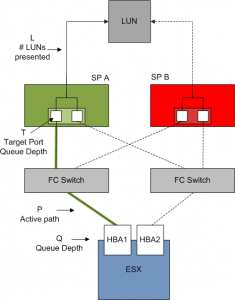

To prevent flooding the target port queue, the product of the number of host paths, the execution throttle value and the number of LUNs presented through the host port must not exceed the target port queue depth. In short: T >= P * Q * L

T = Target Port Queue Depth

P = Paths connected to the target port

Q = Queue depth

L = Number of LUNs presented to the host through this port

Despite having four paths to the LUN, ESX can only utilize one (active) path for sending IO. As a result, when calculating the appropriate queue depth, you use only the active path for “Paths connected to the target port (P)” in the calculation, i.e. P=1.

But in a virtual infrastructure environment, multiple ESX hosts communicate with the storage port; therefore, the QD should be calculated with the following formula:

T >= ESX Host 1 (P * Q * L) + ESX Host 2 (P * Q * L) + … + ESX Host n (P * Q * L)
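The multi-host formula translates directly into Python. This sketch assumes every host is described by its own P, Q and L; HostLoad, target_port_demand and max_uniform_queue_depth are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class HostLoad:
    paths: int        # P: active paths to the target port (1 with a single active path)
    queue_depth: int  # Q: queue depth / execution throttle
    luns: int         # L: LUNs presented through this port


def target_port_demand(hosts: Iterable[HostLoad]) -> int:
    """Sum of P * Q * L over all ESX hosts using the target port."""
    return sum(h.paths * h.queue_depth * h.luns for h in hosts)


def max_uniform_queue_depth(target_qd: int, n_hosts: int, paths: int, luns: int) -> int:
    """Largest identical Q for n identical hosts that keeps demand <= T."""
    return target_qd // (n_hosts * paths * luns)


q = max_uniform_queue_depth(2048, n_hosts=8, paths=1, luns=15)
cluster = [HostLoad(paths=1, queue_depth=q, luns=15) for _ in range(8)]
print(q, target_port_demand(cluster) <= 2048)
```

Integer division rounds down, so the resulting Q never pushes the summed demand past T.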

For example, an 8-host ESX cluster connects to 15 LUNs (L) presented by an EVA8000 with 4 target ports. An ESX server issues IO through one active path (P), so P = 1 and L = 15.

With T = 2048, the execution throttle/queue depth can be set to 136.5: 2048 >= 1 * Q * 15 gives Q <= 2048 / 15 ≈ 136.5.

With this setting, however, a single ESX host can fill the entire target port queue by itself, while the environment consists of 8 ESX hosts: 136.5 / 8 ≈ 17.06.

This calculation assumes that all ESX hosts communicate with all LUNs through one port, which does not happen in many situations if a proper load-balancing design is applied. Most arrays have two controllers, and every controller has at least two ports. In the case of a controller failure, at least two ports are still available to accept IO requests.

It is possible to calculate the queue depth conservatively to ensure a minimal decrease in performance when a controller fails, but this leads to underutilizing the storage array during normal operation, which will hopefully be 99.999% of the time. It is better to calculate a value that utilizes the array properly without flooding the target port queue.

If you assume that multiple ports are available and that all LUNs are balanced across the available ports on the controllers, the effective target port queue depth quadruples, which increases the execution throttle value in the example above to 68. Apart from the fact that you cannot increase this value above 64, it is wise to set the value below the maximum to create a safety buffer.
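The balanced-port reasoning, including the 64 ceiling and the controller-failure case, can be sketched in Python. The sketch assumes that spreading LUNs evenly over the ports is equivalent to multiplying the target port queue depth by the number of ports; balanced_queue_depth and its safety_margin parameter are hypothetical, and the margin value used below is only an illustration.

```python
from typing import Tuple

MAX_EXECUTION_THROTTLE = 64  # the text states the value cannot be raised above 64


def balanced_queue_depth(port_qd: int, ports: int, hosts: int, paths: int, luns: int,
                         safety_margin: int = 0) -> Tuple[int, int]:
    """Return (raw, recommended) Q when LUNs are balanced over `ports` target ports."""
    if min(ports, hosts, paths, luns) < 1:
        raise ValueError("ports, hosts, paths and luns must be positive")
    if not 0 <= safety_margin < MAX_EXECUTION_THROTTLE:
        raise ValueError("safety_margin must be between 0 and 63")
    effective_t = port_qd * ports              # balanced ports multiply the usable queue
    raw = effective_t // (hosts * paths * luns)
    ceiling = MAX_EXECUTION_THROTTLE - safety_margin  # stay below the maximum as a buffer
    return raw, min(raw, ceiling)


print(balanced_queue_depth(2048, 4, 8, 1, 15))                   # all 4 ports available
print(balanced_queue_depth(2048, 4, 8, 1, 15, safety_margin=8))  # hypothetical buffer of 8
print(balanced_queue_depth(2048, 2, 8, 1, 15))                   # one controller lost
```

Running the controller-failure case (two ports left) shows what the conservative calculation would yield: roughly half the value, which is the underutilization during normal operation that the previous paragraph warns about.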

What’s the Best Setting for Queue Depth?

The examples mentioned are pure worst-case scenarios; most of the time it is highly unlikely that all hosts perform at their maximum level at the same time. Changing the defaults can improve throughput, but most of the time it is just a shot in the dark. Even though you configure your ESX hosts with the same values, not every load on the ESX servers is the same. Every environment is different, and so the optimal queue depths differ. You need to test and analyse your environment. Please do not increase the QD without analysing the environment; this can be more harmful than useful.

Get notified of these blog postings and more DRS and Storage DRS information by following me on Twitter: @frankdenneman

HP CA and the use of LUN balancing scripts

Some of my customers use HP Continuous Access to replicate VM data between storage arrays. Lately, a couple of LUN-balancing PowerShell and Perl scripts have been introduced in the VMware community. First of all, there is nothing wrong with those scripts. For example, Justin Emerson wrote an excellent script that balances the active paths to an active/active SAN. But using an auto-balancing script when Continuous Access is used in the virtual infrastructure can result in added IO latency and unnecessary storage processor load.