A customer of mine wanted more information about the new DRS Resource Distribution Chart in vCenter 4.0, so I thought after writing the text for the customer, why not share this? The DRS Resource Distribution Chart was overhauled in vCenter 4.0 and is quite an improvement over the resource distribution chart featured in vCenter 2.5. Not only does it use a better format, the new charts produce more in-depth information.


Resource pools and avoiding HA slot sizing

Virtual machines configured with large amounts of memory (16GB+) are not uncommon these days. Most of the time these “heavy hitters” run mission critical applications, so it’s not unusual to set memory reservations to guarantee the availability of memory resources. If such a virtual machine is placed in an HA cluster, these significant memory reservations can lead to a very conservative consolidation ratio, due to the impact on HA slot size calculation. (For more information about slot size calculation, please review the HA deep dive page on yellow-bricks.com.)

There are options to avoid the creation of large slot sizes, such as not setting reservations, disabling strict admission control, using the new vSphere admission control policy “percentage of cluster resources reserved”, or creating a custom slot size by altering the advanced setting das.vmMemoryMinMB.

But what if you are still using ESX 3.5, must guarantee memory resources for that specific VM, do not want to disable strict admission control or don’t like tinkering with the custom slot size setting? Maybe using the resource pool workaround can be an option.

Resource pool workaround

During a conversation with my colleague Craig Risinger, author of the very interesting article “The resource pool priority pie paradox”, we discussed the lack of relation between resource pool reservation settings and High Availability. As Craig so eloquently put it:

“RP reservations will not muck around with HA slot sizes”

High Availability ignores resource pool reservation settings when calculating the slot size. If a single VM is placed in a resource pool with a memory reservation configured, the reservation has the same effect on resource allocation as a per-VM memory reservation, but it does not affect the HA slot size.
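To make the effect on consolidation concrete, here is a minimal sketch of the idea. It is a simplification and not the actual HA algorithm: it looks at memory only, ignores memory overhead and CPU, and the `fallback_mb` value is a placeholder I chose for illustration, not a VMware default.

```python
def memory_slot_size_mb(vm_reservations_mb, fallback_mb=256):
    """Simplified memory slot size: the largest per-VM reservation.

    fallback_mb is a hypothetical placeholder used when no VM has a
    reservation; memory overhead and CPU are ignored on purpose.
    """
    largest = max(vm_reservations_mb, default=0)
    return largest if largest > 0 else fallback_mb


def slots_per_host(host_memory_mb, slot_size_mb):
    """Number of whole slots that fit in the memory of one host."""
    return host_memory_mb // slot_size_mb


host_memory_mb = 64 * 1024

# One VM carries a 16GB per-VM reservation: the slot grows to 16GB.
per_vm = memory_slot_size_mb([0, 0, 0, 16 * 1024])

# Same VM, but the 16GB is reserved on a resource pool instead:
# HA only sees per-VM reservations, which are all zero here.
via_pool = memory_slot_size_mb([0, 0, 0, 0])

print(slots_per_host(host_memory_mb, per_vm))    # 4
print(slots_per_host(host_memory_mb, via_pool))  # 256
```

The numbers themselves are illustrative; the point is only that the per-VM reservation drives the slot size while the resource pool reservation does not.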

By creating a resource pool with a substantial memory reservation you can avoid decreasing the consolidation ratio of the cluster and still guarantee the virtual machine its resources. Publishing this article does not automatically mean that I’m advocating using this workaround on a regular basis. I recommend implementing this workaround very sparingly, as creating an RP for each VM creates a lot of administrative overhead and makes the host and cluster view a very unpleasant environment to work in.

A possible scenario for this workaround is the implementation of MS Exchange 2010 mailbox servers. These mailbox servers are notorious for demanding a huge amount of memory and are listed by many organizations as mission critical servers.

To emphasize it once more, this is not a best practice! But it might be useful in certain scenarios to avoid large slots and therefore low consolidation ratios.

Impact of host local VM swap on HA and DRS

On a regular basis I come across NFS based environments where the decision is made to store the virtual machine swap files on local VMFS datastores. Using host-local swap can affect DRS load balancing and HA failover in certain situations. So when designing an environment using host-local swap, some areas must be focused on to guarantee HA and DRS functionality.

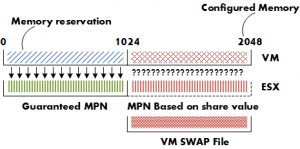

VM swap file

Let’s start with some basics. By default a VM swap file is created when a virtual machine starts. The formula to calculate the swap file size is: configured memory – memory reservation = swap file size. For example, a virtual machine configured with 2GB and a 1GB memory reservation will have a 1GB swap file.
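The formula is simple enough to express as a small helper. This is just an illustrative sketch; the function name is my own and not part of any VMware tooling.

```python
def swap_file_size_gb(configured_gb, reservation_gb=0.0):
    """Swap file size = configured memory - memory reservation."""
    if reservation_gb < 0 or reservation_gb > configured_gb:
        raise ValueError("reservation must be between 0 and configured memory")
    return configured_gb - reservation_gb


print(swap_file_size_gb(2, 1))  # 1
print(swap_file_size_gb(4))     # 4, no reservation means a full-size swap file
```

A fully reserved VM ends up with a swap file of zero, which is why reservations reduce the amount of local disk space you need.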

Reservations will guarantee that the specified amount of virtual machine memory is (always) backed by ESX machine memory. Swap space must be reserved on the ESX host for the virtual machine memory that is not guaranteed to be backed by ESX machine memory. For more information on memory management of the ESX host, please see the article on the impact of memory reservation.

During start up of the virtual machine, the VMkernel will pre-allocate the swap file blocks to ensure that all pages can be swapped out safely. A VM swap file is a static file and will not grow or shrink no matter how much memory is paged. If there is not enough disk space to create the swap file, the host admission control will not allow the VM to be powered up.

Note: If the local VMFS does not have enough space, the VMkernel tries to store the VM swap file in the working directory of the virtual machine. You need to ensure enough free space is available in the working directory, otherwise the VM is still not allowed to be powered up. And that is leaving aside the fact that you didn’t want the VM swap stored on shared storage in the first place.

This rule also applies when migrating a VM configured with a host-local VM swap file, as the swap file needs to be created on the local VMFS volume of the destination host. Besides creating a new swap file, the swapped-out pages must be copied to the destination host. It’s not uncommon that a VM has pages swapped out, even if there is no memory pressure at that moment. ESX does not proactively return swapped pages to machine memory. Swapped pages stay swapped; the VM needs to actively access a page in the swap file for it to be transferred back to machine memory, and this only occurs if the ESX host is not under memory pressure (more than 6% free physical memory).

Copying host-local swap pages between the source and destination host is a disk-to-disk copy process; this is one of the reasons why VMotion takes longer when host-local swap is used.

Real-life scenario

A customer of mine was not aware of this behavior and had disregarded multiple warnings of full local VMFS datastores on some of their ESX hosts. All the virtual machines were up and running and all seemed well. Certain ESX servers seemed to be low on resource utilization and had only a few active VMs, while other hosts were highly utilized. DRS was active on all the clusters, fully automated and with a default (3 stars) migration threshold. It looked like we had a major DRS problem.

DRS

If DRS decides to rebalance the cluster, it will migrate virtual machines to low utilized hosts. The VMkernel tries to create a new swap file on the destination host during the VMotion process. In my scenario the host did not contain any free space in the VMFS datastore, and DRS could not VMotion any virtual machine to that host because of the lack of free space. But the host CPU active and host memory active metrics were still monitored by DRS to calculate the load standard deviation used for its recommendations to balance the cluster. (More info about the DRS algorithm can be found on the DRS deepdive page.) The lack of disk space on the local VMFS datastores influenced the effectiveness of DRS and limited the options for DRS to balance the cluster.

High availability failover

The same applies when an HA isolation response occurs: when not enough space is available to create the virtual machine swap files, no virtual machines are started on the host. If a host fails, the virtual machines will only power up on hosts containing enough free space on their local VMFS datastores. It is possible that virtual machines will not power up at all if not enough free disk space is available.

Failover capacity planning

When using the host-local swap setting to store the VM swap files, the following factors must be considered:

• Number of ESX hosts inside the cluster.

• HA configured host failover capacity.

• Number of active virtual machines inside the cluster.

• Consolidation ratio (VMs per host).

• Average swap file size.

• Free disk space on the local VMFS datastores.

| Factor | Value |
|---|---|
| Number of hosts inside cluster | 6 |
| HA configured host failover capacity | 1 |
| Active virtual machines | 162 |
| Average consolidation ratio | 27:1 |
| Average memory reservation | 0GB |
| Average swap file size | 4GB |

For the sake of simplicity, let’s assume that DRS balanced the cluster load and that all (identical) virtual machines are spread evenly across every host.

In case of a host failure, 27 VMs will be restarted on the remaining 5 hosts inside the cluster, an average of 5.4 virtual machines per host. As it is impossible to start 0.4 of a VM, some ESX hosts will start 6 virtual machines, while other hosts will start 5.

The average swap file size is 4GB, so a host that restarts 6 virtual machines requires at least 24GB of free space on its local VMFS datastore to start those VMs. Besides the 24GB, enough free space needs to be available for DRS to move multiple VMs around to rebalance the load across the cluster.
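The arithmetic of this example can be sketched as follows. It assumes, like the example, identical VMs spread evenly across the hosts, and it only covers the HA restart itself, not the extra headroom DRS needs. The function and parameter names are my own.

```python
import math


def failover_swap_space(hosts, failed_hosts, active_vms, avg_swap_gb):
    """Worst-case restarts per surviving host and the local swap space needed.

    Assumes identical VMs that are evenly distributed across all hosts.
    """
    surviving = hosts - failed_hosts
    if surviving <= 0:
        raise ValueError("at least one host must survive the failure")
    # VMs that were running on the failed host(s).
    displaced = math.ceil(active_vms * failed_hosts / hosts)
    # A host cannot start a fraction of a VM, so round up.
    restarts_per_host = math.ceil(displaced / surviving)
    return restarts_per_host, restarts_per_host * avg_swap_gb


restarts, free_gb = failover_swap_space(hosts=6, failed_hosts=1,
                                        active_vms=162, avg_swap_gb=4)
print(restarts, free_gb)  # 6 24
```

Sizing every host for the rounded-up number is the safe choice, because you cannot predict which hosts will receive the extra virtual machine.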

If the design of the virtual infrastructure incorporates site failover as well, enough free disk space on all the ESX hosts must be reserved to power-up all the affected virtual machines from the failed site.

Closing remarks

Using host local swap can be a valid option for some environments, but additional calculation of the factors mentioned above is necessary to ensure sustained HA and DRS functionality.

VCDX number 029

On Monday the 8th of February I was scheduled to participate in the defend session of the VCDX panel in Las Vegas. For people not familiar with the VCDX program, the defend panel is the final part of the extensive VCDX program.

My defend session was the first session of the week, so my panel members were fresh and eager to get started. Besides the three panel members, an observer and a facilitator were also present in the room. The session consisted of three parts:

• Design defend session (75 minutes)

• Design session (30 minutes)

• Troubleshooting session (15 minutes)

During the design defend session you are required to present your design. I used a twelve-slide presentation and included all blueprints and Visio drawings as an appendix. This helped me a lot; as I am not a native English speaker, using diagrams helped me to explain the layout.

There is no time limit on the duration of the presentation, but it is wise to keep it as brief as possible. During the session, the panel will try to address a number of sections and if they cannot address these sections this can impact your score.

In the design and troubleshooting sessions you need to show that you are able to think on your feet. One of the goals is to understand your thought process. Thinking out loud and using the whiteboard will help you a lot.

So how was my experience? After meeting my panel members I started to get really nervous, as one of the storage gurus within VMware was on my panel. The other two panel members have an extremely good track record inside the company as well, so basically I was being judged by an all-star panel. I thought my presentation went well, but a word of advice: read your submitted documentation on a regular basis before entering the defend panel, as the smallest details can be asked about.

After completing the design defend panel, I was asked to step outside. After the short break the design session and troubleshooting scenarios were next. I did not solve the design and troubleshooting scenarios, but that is really not the goal of those sections.

Thinking out loud in English can be challenging for non-native English speakers, so my advice is to try to practice this as much as possible. I did a test presentation for a couple of friends and discovered some areas to focus on before doing the defend part of the program.

After completing my defend panel, I was scheduled to participate as an observer on the remaining defend panel sessions the rest of the week. After multiple sessions as an observer and receiving the news that I passed the VCDX defend panel, I participated as a panel member on a defend session. Hopefully I will be on a lot more panels in the upcoming year, because sitting on the other side of the table is so much better than standing in front of it sweating like a pig. 🙂

Sizing VMs and NUMA nodes

Note: This article describes NUMA scheduling on the ESX 3.5 and ESX 4.0 platforms. vSphere 4.1 introduced wide NUMA nodes; information about this can be found in my new article: ESX4.1 NUMA scheduling

With the introduction of vSphere, VM configurations with 8 CPUs and 255GB of memory are possible. While I haven’t seen that many VMs with more than 32GB, I receive a lot of questions about 8-way virtual machines. With today’s CPU architecture, VMs with more than 4 vCPUs can experience a decrease in memory performance when used on NUMA enabled systems. While the actual percentage of performance decrease depends on the workload, avoiding performance decrease must always be on the agenda of any administrator.

Does this mean that you should steer clear of creating large VMs? There is no need to if the VM needs that kind of computing power, but the reason why I’m writing this is that I see a lot of IT departments applying the same configuration policy used for physical machines. A virtual machine gets configured with multiple CPUs or loads of memory because it might need them at some point during its lifecycle. While this method saves time and hassle and avoids office politics, this policy can create unnecessary latency for large VMs. Here’s why:

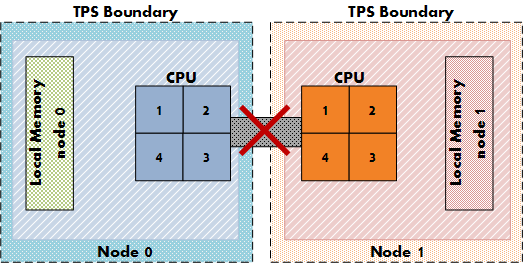

NUMA node

Most modern CPUs, such as Intel’s new Nehalem and AMD’s veteran Opteron, are NUMA architectures. NUMA stands for Non-Uniform Memory Access, but what exactly is NUMA? Each CPU gets assigned its own “local” memory; CPU and memory together form a NUMA node. An OS will try to use its local memory as much as possible, but when necessary the OS will use remote memory (memory within another NUMA node). Memory access time can differ due to the memory location relative to a processor, because a CPU can access its own memory faster than remote memory.

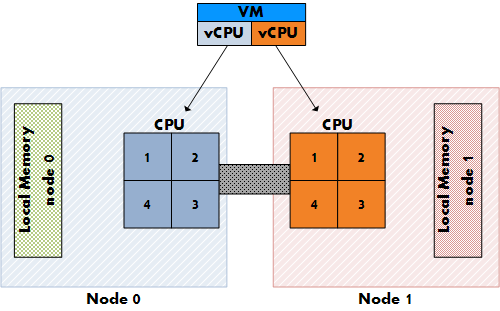

Figure 1: Local and Remote memory access

Accessing remote memory will increase latency, so the key is to avoid this as much as possible. How can you ensure memory locality as much as possible?

VM sizing pitfall #1: vCPU sizing and initial placement

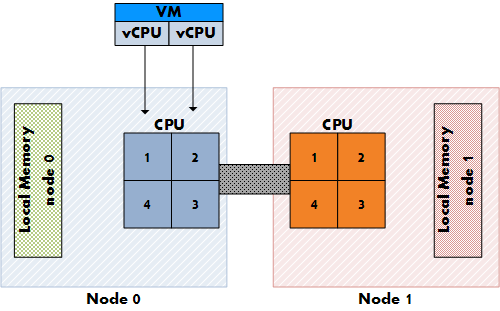

ESX is NUMA aware and will use the NUMA CPU scheduler when detecting a NUMA system. On non-NUMA systems the ESX CPU scheduler spreads load across all sockets in a round robin manner. This approach improves performance by utilizing as much cache as possible. When using a vSMP virtual machine in a non-NUMA system, each vCPU is scheduled on a separate socket.

On NUMA systems, the NUMA CPU scheduler kicks in and uses the NUMA optimizations to assign each VM to a NUMA node; the scheduler tries to keep the vCPUs and memory located in the same node. When a VM has multiple vCPUs, all the vCPUs will be assigned to the same node and will reside in the same socket, to support memory locality as much as possible.

Figure 2: NON-NUMA vCPU placement

Figure 3: NUMA vCPU placement

At this moment, AMD and Intel offer Quad Core CPU’s, but what if the customer decides to configure an 8-vCPU virtual machine? If a VM cannot fit inside one NUMA node, the vCPUs are scheduled in the traditional way again and are spread across the CPU’s in the system. The VM will not benefit from the local memory optimization and it’s possible that the memory will not reside locally, creating added latency by crossing the intersocket connection to access the memory.

VM sizing pitfall #2: VM configured memory sizing and node local memory size

The NUMA scheduler will assign all vCPUs to a NUMA node, but what if the configured memory of the VM is greater than the assigned local memory of the NUMA node? Not aligning the VM configured memory with the local memory size will stop the ESX kernel from using NUMA optimizations for this VM. You can end up with all the VM’s memory scattered all over the server.

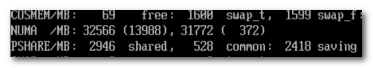

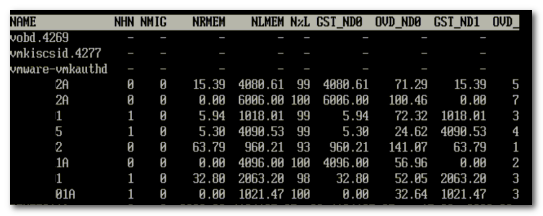

So how do you know how much memory every NUMA node contains? Typically each socket will get assigned the same amount of memory; the physical memory (minus service console memory) is divided between the sockets. For example, 16GB will be assigned to each NUMA node on a two socket server with 32GB total physical memory. A quick way to confirm the local memory configuration of the NUMA nodes is firing up esxtop. Esxtop will only display NUMA statistics if ESX is running on a NUMA server. The first number lists the total amount of machine memory in the NUMA node that is managed by ESX; the statistic displayed within the round brackets is the amount of machine memory in the node that is currently free.
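Both sizing pitfalls boil down to one question: does the VM fit inside a single NUMA node? A rough sketch of that check, under the assumptions of this article (ESX 3.5 and 4.0 behavior, memory divided evenly between sockets, service console memory ignored), could look like this. The function names are mine and purely illustrative.

```python
def node_memory_gb(total_memory_gb, sockets):
    """Local memory per NUMA node, assuming an even split across sockets."""
    return total_memory_gb / sockets


def fits_in_numa_node(vcpus, vm_memory_gb, cores_per_socket,
                      total_memory_gb, sockets):
    """True when both vCPU count and configured memory fit in one node."""
    fits_cpu = vcpus <= cores_per_socket
    fits_memory = vm_memory_gb <= node_memory_gb(total_memory_gb, sockets)
    return fits_cpu and fits_memory


# Two-socket quad-core server with 32GB: 16GB of local memory per node.
print(fits_in_numa_node(4, 12, 4, 32, 2))  # True
print(fits_in_numa_node(8, 12, 4, 32, 2))  # False, pitfall #1: too many vCPUs
print(fits_in_numa_node(2, 24, 4, 32, 2))  # False, pitfall #2: too much memory
```

Use the node sizes reported by esxtop rather than the even split when you have them, since the real values are slightly lower than the theoretical ones.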

Figure 4: esxtop memory totals

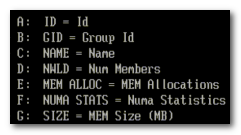

Let’s explore NUMA statistics in esxtop a little bit more based on this example. This system is an HP BL 460c with two Nehalem quad cores and 64GB memory. As shown, each NUMA node is assigned roughly 32GB. The first node has 13GB free; the second node has 372MB free. It looks like it will run out of memory space soon; luckily the VMs on that node can still access remote memory. When a VM has a certain amount of memory located remotely, the ESX scheduler migrates the VM to another node to improve locality. It’s not documented what threshold must be exceeded to trigger the migration, but it’s considered poor memory locality when a VM has less than 80% mapped locally, so my “educated” guess is that it will be migrated when the VM drops below 80%. Esxtop memory NUMA statistics show the memory location of each VM. Start esxtop, press m for memory view, press f for customizing esxtop and press f to select the NUMA Statistics.

Figure 5: Customizing esxtop

Figure 6 shows the NUMA statistics of the same ESX server with a fully loaded NUMA node, the N%L field shows the percentage of mapped local memory (memory locality) of the virtual machines.

Figure 6: esxtop NUMA statistics

It shows that a few VMs access remote memory. The man pages of esxtop explain all the statistics:

| Metric | Explanation |
|---|---|
| NHN | Current Home Node for virtual machine |
| NMIG | Number of NUMA migrations between two snapshots. It includes balance migration, inter-mode VM swaps performed for locality balancing and load balancing |
| NRMEM (MB) | Current amount of remote memory being accessed by VM |
| NLMEM (MB) | Current amount of local memory being accessed by VM |
| N%L | Current percentage memory being accessed by VM that is local |
| GST_NDx (MB) | The guest memory being allocated for VM on NUMA node x. “x” is the node number |
| OVD_NDx (MB) | The VMM overhead memory being allocated for VM on NUMA node x |
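If you export these counters, you can flag VMs with poor locality yourself. The sketch below assumes that N%L is simply the local share of NLMEM plus NRMEM, and it uses the 80% figure mentioned earlier as the threshold. Both are my own assumptions for illustration, not documented scheduler behavior.

```python
def locality_percent(nlmem_mb, nrmem_mb):
    """Percentage of accessed memory that is local (assumed N%L formula)."""
    total = nlmem_mb + nrmem_mb
    if total == 0:
        return 100.0  # nothing accessed, so nothing is remote
    return 100.0 * nlmem_mb / total


def has_poor_locality(nlmem_mb, nrmem_mb, threshold=80.0):
    """True when less than `threshold` percent of the memory is local."""
    return locality_percent(nlmem_mb, nrmem_mb) < threshold


print(locality_percent(3000, 1000))   # 75.0
print(has_poor_locality(3000, 1000))  # True
print(has_poor_locality(4000, 0))     # False
```

A VM that stays below the threshold across several samples is a good candidate for a closer look at its vCPU and memory configuration.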

Transparent page sharing and memory locality.

So how about transparent page sharing (TPS)? It can increase latency if a VM on node 0 shares its pages with a VM on node 1. Luckily VMware thought of that, and TPS across nodes is disabled by default to ensure memory locality. TPS still works, but will share identical pages only inside nodes. The memory saved by sharing pages system-wide does not outweigh the performance hit of accessing remote memory.

Figure 7: NUMA TPS boundaries

This behavior can be changed by altering the setting VMkernel.Boot.sharePerNode. As with most default settings in ESX, only change this setting if you are sure that it will benefit your environment; 99.99% of all environments will benefit from the default setting.

Take away

With the introduction of vSphere ESX 4, the software layer surpasses some abilities current hardware techniques can offer. ESX is NUMA aware and tries to ensure memory locality, but when a VM is configured outside the NUMA node limits, ESX will not apply NUMA node optimizations. While a VM will still run correctly without NUMA optimizations, it can experience slower memory access. While the actual percentage of performance decrease depends on the workload, avoiding performance decrease where possible must always be on the agenda of any administrator.

To quote the resource management guide:

The NUMA scheduling and memory placement policies in VMware ESX Server can manage all VM transparently, so that administrators do not need to address the complexity of balancing virtual machines between nodes explicitly.

While this is true, administrators must not treat the ESX server as a black box; with this knowledge administrators can make informed decisions about their resource policies. This information can help to adopt a scale-out policy (multiple smaller VMs) for some virtual machines instead of a scale up policy (creating large VMs) if possible.

Besides the preference for a scale up or scale out policy, a virtual environment will profit when administrators choose to keep the VMs as agile as possible. My advice to each customer is to configure the VM to reflect its current and near future workload and to actively monitor its habits. Creating the VM with a configuration which might be suitable for the workload somewhere in its lifetime can have a negative effect on performance.

Get notified of these blog posts and more DRS and Storage DRS information by following me on Twitter: @frankdenneman