Eric Siebert, owner of vSphere-land.com, has started the second round of the biannual top 25 VMware virtualization blogs voting. The last vote was back in January, and this is your chance to vote for your favorite virtualization bloggers and help determine the top 25 blogs of 2010.

This year my blog got nominated for the first time and entered the top 25 (no. 14), and I hope to stay in the top 25 after this voting round. My articles focus primarily on resource management and cover topics such as DRS and the CPU and memory schedulers, to help you make informed decisions when designing or managing a virtual infrastructure. As noble as this may sound, I know that these kinds of topics are not mainstream, and I can understand that not everybody is interested in reading about them week in, week out.

Fortunately, I’ve managed to get a blog post listed in the Top 5 Planet V12n blog post list at least once every month, and my posts are referenced on a regular basis by sites like Yellow-bricks.com (Duncan Epping), Scott Lowe, NTpro.nl (Eric Sloof), Chad Sakac and of course many others. So it seems I’m doing something right.

This is my list of the top 10 articles I’ve written this year:

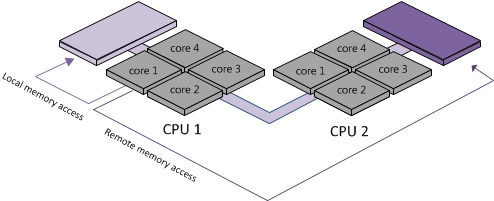

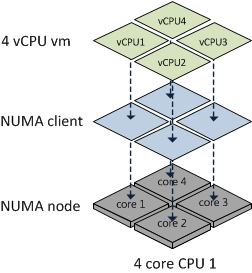

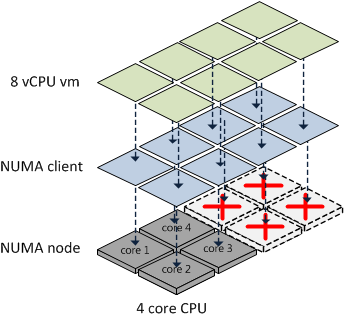

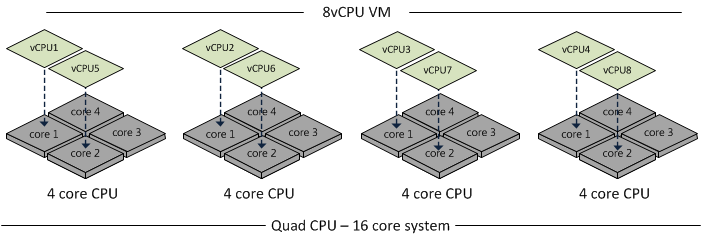

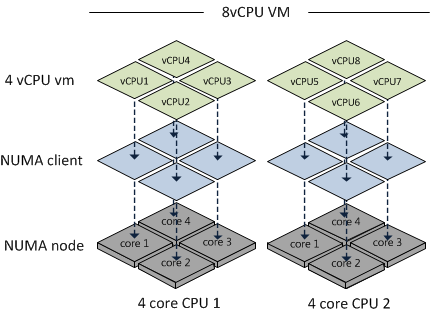

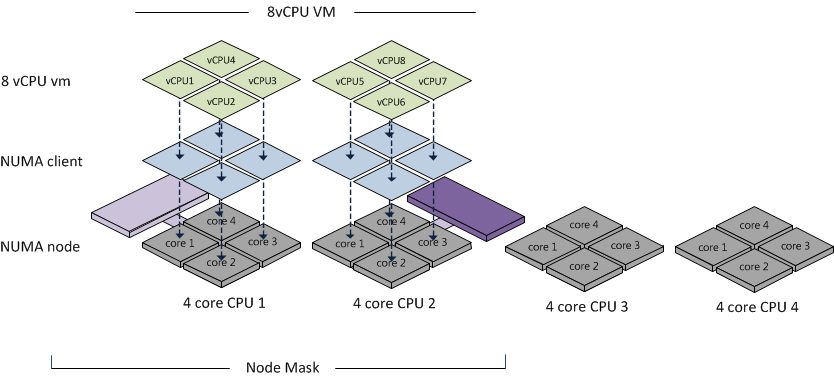

- ESX 4.1 NUMA scheduling

- vCloud Director Architecture

- VM to Host Affinity rule

- Memory Reclaimation, when and how?

- Reservations and CPU scheduling

- Resource pools and memory reservations

- ESX ALUA, TPGS and HP CA

- Removing an orphaned Nexus DVS

- Impact of Host local swap on HA and DRS

- Sizing VMs and NUMA nodes

Closing remarks

This year I’ve gotten to know a lot of bloggers personally, and one thing that amazed me was the time and effort that each of them puts into their work in their spare time. Please take a couple of minutes to vote at vSphere-land, whether it’s for me or any of the other bloggers listed, and reward them for their hard work.