If a cache miss occurs, the memory controller responsible for that memory line retrieves the data from RAM. Fetching data from local memory could take 190 cycles, while it could take the CPU a whopping 310 cycles to load the data from remote memory. Creating a NUMA architecture that provides enough capacity per CPU is a challenge, considering the impact memory configuration has on bandwidth and latency. Part 2 of the NUMA Deep Dive covered QPI bandwidth configurations. With the QPI bandwidth ‘restrictions’ in mind, optimizing the memory configuration contributes the most to local access performance. Similar to the CPU, memory is a very complex subject, and I cannot cover all the intricate details in one post. Last year I published the Memory Deep Dive series, and I recommend reviewing that series as well to get a better understanding of the characteristics of memory.
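To put the two cycle counts in perspective, here is a simple weighted model of the average cost of a memory fetch. It is a sketch that assumes every miss costs exactly one of the two figures quoted above and ignores queuing, prefetching and bandwidth saturation. The function name `average_fetch_cycles` is hypothetical.

```python
LOCAL_CYCLES = 190   # local memory fetch, figure quoted in the text
REMOTE_CYCLES = 310  # remote memory fetch across the interconnect, figure quoted in the text

def average_fetch_cycles(remote_fraction: float) -> float:
    """Blend local and remote fetch cost for a given share of remote accesses."""
    if not 0.0 <= remote_fraction <= 1.0:
        raise ValueError("remote_fraction must be between 0 and 1")
    return (1 - remote_fraction) * LOCAL_CYCLES + remote_fraction * REMOTE_CYCLES

for f in (0.0, 0.25, 0.5, 1.0):
    print(f"{f:.0%} remote -> {average_fetch_cycles(f):.0f} cycles")
```

Even a quarter of the accesses going remote raises the average from 190 to 220 cycles in this simplified model.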

Memory Channel

The Intel Xeon microarchitecture contains one or two integrated memory controllers. The memory controller connects through a channel to the DIMMs. Sandy Bridge (v1) introduced quadruple memory channels. These multiple independent channels increase data transfer rates due to concurrent access of multiple DIMMs. When operating in quad-channel mode, latency is reduced due to interleaving. The memory controller distributes the data amongst the DIMMs in an alternating pattern, allowing it to access each DIMM for smaller pieces of data instead of accessing a single DIMM for the entire chunk of data. Spreading the access across channels gives the memory controller more bandwidth for the same amount of data than pushing all of it through the single channel that connects to the one DIMM holding it. In total, there are four memory channels per processor, and each channel connects up to three DIMM slots. A 2-CPU system therefore has eight channels, connecting the CPUs to a maximum of 24 DIMMs.
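The alternating pattern can be illustrated with a plain round-robin mapping of addresses to channels. This is a minimal sketch that assumes a 64-byte (cache line) interleave granularity and a simple modulo mapping; real Intel memory controllers use their own, configurable interleave granularity and address hashing, so `channel_for` and `channels_touched` are hypothetical helpers, not the hardware algorithm.

```python
CACHE_LINE = 64      # assumed interleave granularity in bytes (hypothetical)
NUM_CHANNELS = 4     # quad-channel memory controller
MAX_DIMMS = 2 * NUM_CHANNELS * 3   # 2 CPUs x 4 channels x 3 DIMM slots per channel

def channel_for(address: int, granularity: int = CACHE_LINE,
                channels: int = NUM_CHANNELS) -> int:
    """Channel serving a physical address under simple round-robin interleaving."""
    return (address // granularity) % channels

def channels_touched(start: int, length: int) -> set[int]:
    """Channels involved when reading `length` bytes starting at `start`."""
    first = start // CACHE_LINE
    last = (start + length - 1) // CACHE_LINE
    return {line % NUM_CHANNELS for line in range(first, last + 1)}

print([channel_for(a) for a in range(0, 512, 64)])  # [0, 1, 2, 3, 0, 1, 2, 3]
print(sorted(channels_touched(0, 256)))             # [0, 1, 2, 3]
print(MAX_DIMMS)                                    # 24
```

A 256-byte read lands on all four channels, so each DIMM only delivers a quarter of the data, which is the effect the paragraph above describes.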

Quad-channel mode is activated when four identical DIMMs are put in quad-channel slots. When three identical DIMMs are used in a quad-channel CPU architecture, triple-channel mode is activated; when two identical DIMMs are used, the system operates in dual-channel mode.
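The rule can be written down as a small lookup. This sketch assumes one DIMM per channel and identical DIMMs only; the single-channel entry for one DIMM is added for completeness, and `channel_mode` is a hypothetical helper, not a BIOS or vendor API.

```python
def channel_mode(dimm_sizes_gb: list[int]) -> str:
    """Mode a quad-channel controller activates for identical DIMMs, one per channel."""
    if len(set(dimm_sizes_gb)) > 1:
        raise ValueError("sketch only covers identical DIMMs")
    modes = {1: "single-channel", 2: "dual-channel", 3: "triple-channel", 4: "quad-channel"}
    try:
        return modes[len(dimm_sizes_gb)]
    except KeyError:
        raise ValueError("expected 1 to 4 DIMMs, one per channel") from None

print(channel_mode([16, 16, 16, 16]))  # quad-channel
print(channel_mode([16, 16, 16]))      # triple-channel
```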

Please note that interleaving memory across channels is not the same as the Node Interleaving setting in the BIOS. When Node Interleaving is enabled, the system breaks down the entire memory range of both CPUs into a single memory address space consisting of 4 KB addressable regions and maps them in a round-robin fashion from each node (more info can be found in part 1). Channel interleaving is done within a NUMA node itself.
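The difference between the two modes shows up in which node backs a given address. A minimal sketch for a two-socket system: with Node Interleaving, 4 KB regions alternate between the nodes; without it, each node owns one contiguous range. The contiguous layout and the `node_for` helper are simplifying assumptions for illustration, not how firmware actually builds its memory map.

```python
PAGE = 4 * 1024   # 4 KB regions used by Node Interleaving, per the text
NODES = 2         # two-socket system

def node_for(address: int, node_interleaving: bool, node0_bytes: int) -> int:
    """Which NUMA node backs a physical address.

    node_interleaving=True: 4 KB regions alternate round-robin between nodes.
    node_interleaving=False: node 0 owns the first node0_bytes, node 1 the rest
    (simplified contiguous layout, assumed for illustration).
    """
    if node_interleaving:
        return (address // PAGE) % NODES
    return 0 if address < node0_bytes else 1

GiB = 2**30
print([node_for(a, True, 64 * GiB) for a in range(0, 16 * 1024, PAGE)])   # [0, 1, 0, 1]
print([node_for(a, False, 64 * GiB) for a in range(0, 16 * 1024, PAGE)])  # [0, 0, 0, 0]
```

With Node Interleaving a 16 KB buffer is split across both sockets, so half of it is always remote. Channel interleaving, by contrast, happens inside whichever node owns the address.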

Regions

When all four channels are populated, the CPU interleaves memory access across the multiple memory channels. This configuration has the largest impact on performance, especially on throughput. To leverage interleaving optimally, the CPU creates regions. The memory controller groups memory across the channels as much as possible. In a 1 DIMM per Channel (DPC) configuration, the CPU creates one region (Region 0) and interleaves the memory access.

In this example, one DIMM is placed in a DIMM slot of each channel. Both NUMA nodes are configured identically. The total amount of memory is 128 GB; each NUMA node contains 64 GB. Each NUMA node benefits from quad-channel mode and has its Region 0 populated. Each NUMA node controls its own regions.

Unbalanced NUMA Configuration

In this example, an additional 64 GB is installed in NUMA node 0. CPU 0 creates two regions, interleaves the memory across the four channels and benefits from the extra capacity. NUMA node 0 contains 128 GB, NUMA node 1 contains 64 GB. However, this optimization of local bandwidth does not help the virtual machines scheduled to run on NUMA node 1: with less memory available on that node, they are more likely to require fetching memory remotely. Compared to local memory access, remote memory access incurs the extra latency of the additional hop and the bandwidth constraint of the QPI.
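A back-of-the-envelope check shows the asymmetry. This sketch assumes a VM's memory must come entirely from the node it is scheduled on and ignores hypervisor overhead and memory consumed by other VMs; the 96 GB VM and the `fits_locally` helper are hypothetical.

```python
node_capacity_gb = {0: 128, 1: 64}   # unbalanced NUMA example from the text

def fits_locally(vm_memory_gb: int, node: int) -> bool:
    """True if the node could hold the VM's memory without remote allocation.

    Deliberately ignores hypervisor overhead and memory used by other VMs.
    """
    return vm_memory_gb <= node_capacity_gb[node]

for node in node_capacity_gb:
    print(node, fits_locally(96, node))   # prints "0 True", then "1 False"
```

The same hypothetical 96 GB VM is served entirely from local memory on node 0 but must spill over to node 1's remote neighbour when it runs on node 1.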

Unbalanced Channel Configuration

The memory capacity is equally distributed across the NUMA nodes; both nodes contain 96 GB of RAM. The CPUs create two regions: Region 0 (64 GB) interleaves across four channels, and Region 1 (32 GB) interleaves across two channels. Native DIMM speed (MHz) remains the same; however, some performance loss occurs due to control management overhead. With local and remote memory access in mind, this configuration does not provide consistent memory performance. Data access is done across four channels for Region 0 and two channels for Region 1, and a remote access across the QPI to the other memory controller might likewise fetch the data across two or four channels. LCC configurations contain a single memory controller, whereas MCC and HCC configurations contain two memory controllers, so with MCC and HCC this memory layout also creates an imbalance in load between the memory controllers.
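The region layouts of the three examples can be reproduced with a simplified model in which region *k* is formed by slot *k* of every channel that has a DIMM in that slot. This is a sketch under that assumption; Intel's actual region formation also considers DIMM sizes and other rules, and `build_regions` is a hypothetical helper. The 16 GB DIMM size follows from the capacities in the text.

```python
def build_regions(channels: list[list[int]]) -> list[tuple[int, int]]:
    """Group DIMMs into interleave regions (simplified model).

    channels[i] lists the DIMM sizes (GB) in channel i, in slot order.
    Region k is formed by slot k of every channel that has a DIMM there.
    Returns (capacity_gb, interleave_ways) per region.
    """
    regions = []
    max_slots = max((len(c) for c in channels), default=0)
    for slot in range(max_slots):
        members = [c[slot] for c in channels if len(c) > slot]
        regions.append((sum(members), len(members)))
    return regions

balanced = [[16], [16], [16], [16]]                    # 1 DPC, 64 GB per node
unbalanced_node0 = [[16, 16], [16, 16], [16, 16], [16, 16]]  # node 0 with extra 64 GB
unbalanced_channels = [[16, 16], [16, 16], [16], [16]]  # 96 GB per node

print(build_regions(balanced))             # [(64, 4)]
print(build_regions(unbalanced_node0))     # [(64, 4), (64, 4)]
print(build_regions(unbalanced_channels))  # [(64, 4), (32, 2)]
```

Only the last layout produces a region with two-way interleaving, which is why part of the 96 GB is served at half the channel parallelism.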

When adding more DIMMs to the channel, the memory controller consumes more bandwidth for control commands. More DIMMs create more management overhead, which reduces the bandwidth available for reading and writing data. The question arises: do you solve the capacity requirement by using higher-capacity DIMMs, or do you take the throughput hit of moving to a 3 DIMMs per Channel (DPC) configuration?

DIMMS per Channel

Designing a system that provides memory capacity while maintaining performance requires combining knowledge of memory rank configurations, DIMMs per channel and CPU SKUs. Adding more DIMMs to the channel increases capacity; unfortunately, high memory capacity configurations come with a downside, and that is the loss of bandwidth. This has to do with the number of ranks per channel.

Ranks

DIMMs come in three rank configurations: single-rank, dual-rank or quad-rank, denoted as (xR). A rank is a group of DRAM chips that together provide a 64-bit wide block of data. If a DIMM contains DRAM chips on just one side of the printed circuit board (PCB), forming a single 64-bit chunk of data, it is referred to as a single-rank (1R) module. A dual-rank (2R) module contains at least two 64-bit chunks of data, one chunk on each side of the PCB. Quad-rank (4R) DIMMs contain four 64-bit chunks, two chunks on each side.

To increase capacity, combine the ranks with the largest DRAM chips. A quad-rank DIMM built from 4 Gb x4 chips (16 chips per 64-bit rank) provides 32 GB: 16 chips x 4 Gb x 4 ranks = 256 Gb = 32 GB. As server boards have a finite number of DIMM slots, quad-rank DIMMs are the most effective way to achieve the highest memory capacity. However, a channel supports a limited number of ranks due to its maximum capacitance (electrical load).
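The capacity arithmetic generalises to any chip density, chip width and rank count. This sketch counts usable data capacity only and leaves out the extra ECC chips found on server DIMMs; `dimm_capacity_gb` is a hypothetical helper.

```python
def dimm_capacity_gb(chip_gbit: int, chip_width_bits: int, ranks: int) -> float:
    """Usable capacity of a DIMM in GB, ignoring the extra ECC chips.

    A rank is 64 bits wide, so it needs 64 / chip_width_bits DRAM chips.
    """
    if 64 % chip_width_bits:
        raise ValueError("chip width must divide 64")
    chips_per_rank = 64 // chip_width_bits
    total_gbit = chip_gbit * chips_per_rank * ranks
    return total_gbit / 8   # gigabits -> gigabytes

print(dimm_capacity_gb(4, 4, 4))   # 32.0  (4Rx4 with 4 Gb chips)
print(dimm_capacity_gb(4, 8, 4))   # 16.0  (4Rx8 with 4 Gb chips)
```

Narrower x4 chips need twice as many chips per rank as x8 chips, which is why the same chip density and rank count yield twice the capacity.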

Ivy Bridge (v2) contains a generation 2 DDR3 memory controller that is aware of the physical ranks behind the data buffer, allowing the memory controller to adjust the timings and provide better back-to-back reads and writes. Gen 2 DDR3 systems reduce the latency gap between Registered DIMMs (RDIMMs) and Load Reduced DIMMs (LRDIMMs), but most importantly, they reduce the bandwidth gap.

The memory rank configuration impacts the number of DIMMs supported per channel. Modern CPUs can support up to 8 physical ranks per channel. This means that if a large amount of capacity is required, quad-rank RDIMMs or LRDIMMs should be used. When using quad-rank RDIMMs, only 2 DPC configurations are possible, as 3 DPC equals 12 ranks, which exceeds the 8 ranks per channel limit of current systems. The Memory Deep Dive article Memory Subsystem Organisation covers ranks more in depth.
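The rank limit translates directly into a maximum DPC value. This sketch counts physical ranks as the memory controller sees them on RDIMMs and assumes three slots per channel, as described earlier; it does not model the way LRDIMM buffers present ranks to the controller. `max_dpc` is a hypothetical helper.

```python
RANK_LIMIT = 8          # physical ranks per channel, per the text
SLOTS_PER_CHANNEL = 3   # DIMM slots per channel

def max_dpc(ranks_per_dimm: int, rank_limit: int = RANK_LIMIT,
            slots: int = SLOTS_PER_CHANNEL) -> int:
    """Most identical RDIMMs a channel accepts without exceeding the rank limit."""
    return min(slots, rank_limit // ranks_per_dimm)

for ranks in (1, 2, 4):
    print(f"{ranks}R RDIMM: up to {max_dpc(ranks)} DPC")
# 1R and 2R fill all 3 slots (3 or 6 ranks); 4R stops at 2 DPC (8 ranks, 12 would exceed the limit)
```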

Load Reduced DIMMs

Load Reduced DIMMs (LRDIMMs) buffer both the control and data lines from the DRAM chips. This decreases the electrical load on the memory controller, allowing for denser memory configurations. DDR3 LRDIMMs experienced added latency due to the use of a buffer; DDR4 changed the design of the DIMM structure and placed the buffer closer to the DRAM chips, removing the extra latency (for more info: Memory Deep Dive: DDR4 Memory).

DPC Bandwidth Impact

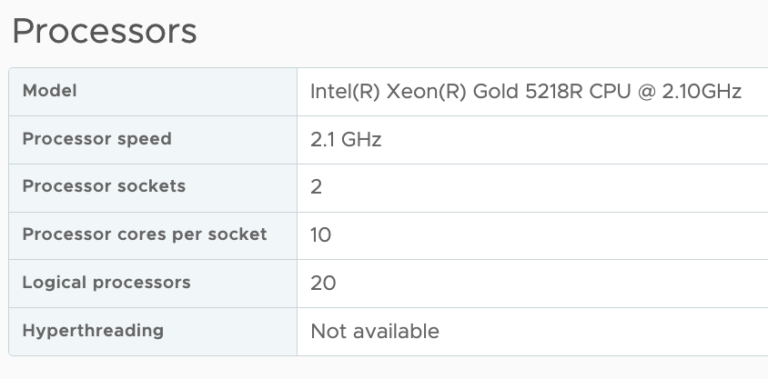

The CPU SKU determines the maximum memory frequency. Broadwell (v4) LCC configurations support up to 2133 MHz, while MCC and HCC configurations support up to 2400 MHz (source: ark.intel.com). Choosing a 12-core E5-2650 v4 over a 10-core E5-2630 v4 does not only provide you 2 extra cores, it also provides additional memory bandwidth. 2133 MHz equals a theoretical bandwidth of 17064 MB/s per channel, whereas 2400 MHz provides 19200 MB/s. By moving to an MCC configuration, you are not only increasing the core count, you also increase the bandwidth of the memory subsystem by roughly 13%, and each core benefits from this.
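The bandwidth figures follow from multiplying the transfer rate by the 8-byte (64-bit) width of a channel. A sketch that reproduces them, assuming theoretical peak numbers with no protocol or refresh overhead; `channel_bandwidth_mbs` is a hypothetical helper.

```python
BUS_BYTES = 8          # 64-bit channel = 8 bytes per transfer
CHANNELS_PER_CPU = 4   # quad-channel memory controller

def channel_bandwidth_mbs(speed_mts: int) -> int:
    """Theoretical peak bandwidth of one channel in MB/s."""
    return speed_mts * BUS_BYTES

lcc = channel_bandwidth_mbs(2133)   # 17064 MB/s, e.g. E5-2630 v4 (LCC)
mcc = channel_bandwidth_mbs(2400)   # 19200 MB/s, e.g. E5-2650 v4 (MCC)
print(lcc * CHANNELS_PER_CPU, mcc * CHANNELS_PER_CPU)   # 68256 76800
print(f"{mcc / lcc - 1:.1%}")                           # 12.5%
```

Per socket, with all four channels populated, the theoretical peak grows from about 68 GB/s to about 77 GB/s.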

The DIMM type and the DPC value of the memory configuration restrict the frequency. As mentioned, using more physical ranks per channel lowers the clock frequency of the memory banks. When more ranks per DIMM are used, the electrical loading of the memory module increases, and as more ranks are used in a memory channel, memory speed drops, restricting the use of additional memory. Therefore, in certain configurations, DIMMs will run slower than their listed maximum speeds.

| Vendor | RDIMM 1 DPC | RDIMM 2 DPC | RDIMM 3 DPC | LRDIMM 1 DPC | LRDIMM 2 DPC | LRDIMM 3 DPC | Source |
|---|---|---|---|---|---|---|---|
| Cisco | 2400 MHz | 2400 MHz | 1866 MHz | 2400 MHz | 2400 MHz | 2133 MHz | Cisco PDF |
| Dell | 2400 MHz | 2400 MHz | 1866 MHz | 2400 MHz | 2400 MHz | 2133 MHz | Dell.com |
| Fujitsu | 2400 MHz | 2400 MHz | 1866 MHz | 2400 MHz | 2400 MHz | 1866 MHz | Fujitsu PDF |
| HP | 2400 MHz | 2400 MHz | 1866 MHz | 2400 MHz | 2400 MHz | 2400 MHz* | HP PDF |
| Bandwidth drop vs. 2400 MHz | 0 | 0 | 22% | 0 | 0 | 11%/22% | |

* I believe this is a documentation error on HP's side; DDR4 standards support 2133 MHz with 3 DPC configurations.
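The table can be turned into a small lookup that returns the speed a channel will actually run at and the resulting loss of theoretical bandwidth. This sketch uses the Cisco/Dell values from the table, assumes the effective speed is simply the lowest of the module rating, the CPU limit and the DPC limit, and expresses the drop relative to 2400 MHz. `effective_speed` and `bandwidth_drop` are hypothetical helpers; check your vendor's documentation for the real limits.

```python
# Maximum memory speed (MHz) by DIMM type and DPC, Cisco/Dell values from the table
MAX_SPEED = {
    "RDIMM":  {1: 2400, 2: 2400, 3: 1866},
    "LRDIMM": {1: 2400, 2: 2400, 3: 2133},
}

def effective_speed(dimm_type: str, dpc: int,
                    module_speed: int = 2400, cpu_max: int = 2400) -> int:
    """Speed the channel runs at: the lowest of module rating, CPU limit and DPC limit."""
    return min(module_speed, cpu_max, MAX_SPEED[dimm_type][dpc])

def bandwidth_drop(speed: int, reference: int = 2400) -> float:
    """Fractional loss of theoretical bandwidth versus the reference speed."""
    return 1 - speed / reference

print(effective_speed("RDIMM", 3), f"{bandwidth_drop(effective_speed('RDIMM', 3)):.0%}")    # 1866 22%
print(effective_speed("LRDIMM", 3), f"{bandwidth_drop(effective_speed('LRDIMM', 3)):.0%}")  # 2133 11%
print(effective_speed("RDIMM", 2, cpu_max=2133))   # 2133, an LCC CPU caps the speed
```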

When creating a system containing 384 GB using 16 GB DIMMs, populating every DIMM slot results in a memory frequency of 1866 MHz, while using (and let’s not forget, paying for) 2400 MHz RDIMMs.

Without resorting to the unbalanced NUMA or unbalanced channel configurations described earlier, you simply cannot create a 384 GB configuration with 32 GB DIMMs alone. The next best configuration that still leverages all four channels is a mix of 32 GB and 16 GB DIMMs.
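The arithmetic behind the 384 GB example, for a two-socket system with four channels per socket: identical DIMMs in every channel only work when the resulting DPC value is a whole number. A sketch, assuming every channel must be populated identically; `dpc_needed` is a hypothetical helper.

```python
SOCKETS = 2
CHANNELS_PER_SOCKET = 4
TOTAL_CHANNELS = SOCKETS * CHANNELS_PER_SOCKET   # 8 channels

def dpc_needed(target_gb: int, dimm_gb: int) -> float:
    """DIMMs per channel needed to reach target_gb with identical DIMMs in every channel."""
    return target_gb / (dimm_gb * TOTAL_CHANNELS)

print(dpc_needed(384, 16))   # 3.0 -> 3 DPC, RDIMMs drop to 1866 MHz
print(dpc_needed(384, 32))   # 1.5 -> not a whole number, no balanced layout

# Mixed: one 32 GB + one 16 GB DIMM in every channel keeps it at 2 DPC
per_channel = [32, 16]
print(sum(per_channel) * TOTAL_CHANNELS)   # 384
```

The mixed layout reaches 384 GB at 2 DPC, which according to the table keeps RDIMMs at 2400 MHz.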

Mixed configurations are supported; however, server vendors state some requirements and limitations when using mixed configurations:

- RDIMMs and LRDIMMs must not be mixed.

- RDIMMs of type x4 and x8 must not be mixed.

- Populate the banks from 1 to 3 in order of decreasing DIMM size; the larger modules should be installed first.
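The three rules can be checked mechanically before ordering hardware. A minimal sketch: the `Dimm` record and `check_population` function are hypothetical, and they encode only the rules listed above, not every vendor-specific restriction.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Dimm:
    size_gb: int
    kind: str    # "RDIMM" or "LRDIMM"
    width: str   # DRAM organisation, "x4" or "x8"

def check_population(channels: list[list[Dimm]]) -> list[str]:
    """Return rule violations for a proposed population (empty list means OK).

    channels[i] holds the DIMMs of channel i in bank order (bank 1 first).
    """
    problems = []
    dimms = [d for ch in channels for d in ch]
    if len({d.kind for d in dimms}) > 1:
        problems.append("RDIMMs and LRDIMMs are mixed")
    if len({d.width for d in dimms if d.kind == "RDIMM"}) > 1:
        problems.append("x4 and x8 RDIMMs are mixed")
    for i, ch in enumerate(channels):
        sizes = [d.size_gb for d in ch]
        if sizes != sorted(sizes, reverse=True):
            problems.append(f"channel {i}: larger DIMMs must go in the lower banks")
    return problems

mixed_ok = [[Dimm(32, "RDIMM", "x4"), Dimm(16, "RDIMM", "x4")] for _ in range(4)]
print(check_population(mixed_ok))   # []
```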

To get the best performance, select the memory module with the highest rank configuration. Due to the limit of 8 ranks per channel, Rx4 RDIMMs and LRDIMMs allow for the largest capacity configuration while maintaining bandwidth. Looking at today’s memory prices, DDR4 32 GB memory modules are the sweet spot.

Bandwidth and CAS Timings

The memory area of a memory bank inside a DRAM chip is made up of rows and columns. To access the data, the chip needs to be selected, then the row is selected, and after activating the row, the column can be accessed. At this point the actual read command is issued. The time from that moment until the data is available at the pins of the module is the CAS latency. It is not the same as load-to-use latency, which is the round-trip time measured from the CPU's perspective.

CAS latencies (CL) increase with each new generation of memory, but as mentioned before, latency is a function of clock speed as well as CAS latency. Generally, a lower CL is better; however, that only holds when comparing at the same base clock. If you have faster memory, a higher CL could end up being better. When DDR3 was released, it offered two speeds: 1066 MHz CL7 and 1333 MHz CL8. Today, servers are equipped with 1600 MHz CL9 memory. DDR4 was released with 2133 MHz CL13; however, 2133 MHz CL15 is what the major server vendors offer. The unloaded latency in nanoseconds is calculated as (CL / Frequency) × 2000, where Frequency is the rated speed in MHz. That rated speed is the data rate; the memory clock runs at half of it, hence the factor 2000 instead of 1000.

This means that 1600 MHz CL9 provides an unloaded latency of 11.25 ns, while 2133 MHz CL15 provides an unloaded latency of 14.06 ns, an increase in latency of about 25%. However, there is an interesting correlation between DDR4 bandwidth and CAS latency. Many memory vendors offer DDR4 2800 MHz at CL14 to CL16. Using the same calculation, 2800 MHz CL16 provides an unloaded latency of (16 / 2800) × 2000 = 11.43 ns, almost the same latency as DDR3 1600 MHz CL9! 2800 MHz CL14 provides an unloaded latency of 10 ns, resulting in similar or even lower unloaded latency while providing 75% more bandwidth.
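The formula is easy to apply to any module. A sketch that reproduces the numbers above, assuming the rated speed is the data rate in MT/s (labelled MHz in the text) so the clock period in ns is 2000 / speed; `unloaded_latency_ns` is a hypothetical helper.

```python
def unloaded_latency_ns(cl: int, speed_mts: int) -> float:
    """CAS latency in ns: CL cycles of a clock running at half the data rate."""
    return cl / speed_mts * 2000

modules = [("DDR3-1600 CL9", 9, 1600), ("DDR4-2133 CL15", 15, 2133),
           ("DDR4-2800 CL16", 16, 2800), ("DDR4-2800 CL14", 14, 2800)]
for name, cl, speed in modules:
    print(f"{name}: {unloaded_latency_ns(cl, speed):.2f} ns")
# DDR3-1600 CL9: 11.25 ns, DDR4-2133 CL15: 14.06 ns,
# DDR4-2800 CL16: 11.43 ns, DDR4-2800 CL14: 10.00 ns
```

Comparing speeds this way makes it clear why CL alone says little: DDR4-2800 CL16 has a higher CL than DDR3-1600 CL9 but nearly the same latency in nanoseconds.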

Energy optimized

DDR3 memory runs at 1.5 V; low-voltage DDR3 memory runs at 1.35 V. There is currently no low-voltage extension for DDR4 (yet); however, DDR4 runs at 1.2 V as standard. This reduction in power consumption is a big advantage of DDR4, as it provides energy savings of approximately 30% at the same data rate. Most BIOSes contain energy-optimized settings that reduce the power consumption of memory. Due to the already low voltage, little power savings are gained, but bandwidth can drop: Fujitsu states that memory frequency drops to 1866 MHz regardless of DPC configuration when using 2400 MHz memory modules. Be aware of this when configuring the BIOS, and verify that the memory frequency is not modified when using particular energy settings.
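One way to verify the running frequency, assuming a Linux environment where `dmidecode -t 17` is available, is to compare each module's rated speed with its configured speed. This is a sketch: the field names `Speed` and `Configured Memory Speed` (older releases print `Configured Clock Speed`) vary between dmidecode versions, so the parser accepts both spellings, and `downclocked_dimms` is a hypothetical helper that only reads text you pass in.

```python
import re

def downclocked_dimms(dmidecode_text: str) -> list[tuple[str, int, int]]:
    """Find DIMMs whose configured speed is below their rated speed.

    Expects the text output of `dmidecode -t 17`. Returns
    (locator, rated_speed, configured_speed) for each downclocked module.
    """
    results = []
    for block in dmidecode_text.split("Memory Device")[1:]:
        locator = re.search(r"^[ \t]*Locator:[ \t]*(.+)$", block, re.M)
        rated = re.search(r"^[ \t]*Speed:[ \t]*(\d+)", block, re.M)
        configured = re.search(
            r"^[ \t]*Configured (?:Memory|Clock) Speed:[ \t]*(\d+)", block, re.M)
        if locator and rated and configured:   # empty slots report "Unknown" and are skipped
            r, c = int(rated.group(1)), int(configured.group(1))
            if c < r:
                results.append((locator.group(1).strip(), r, c))
    return results
```

Feed it the captured output of `dmidecode -t 17` (run as root) after changing BIOS power settings; an empty list means every populated module runs at its rated speed.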

NUMA system architecture configuration

In order to allow your virtual machines to get the best performance, and especially consistent performance, care must be taken when designing and configuring an ESXi host. The selection of CPU die design (low core count, medium core count or high core count) impacts local memory bandwidth (2133 MHz vs 2400 MHz), interconnect bandwidth (QPI 6.4 GT/s, 8.0 GT/s or 9.6 GT/s) and thus remote memory performance, as well as the cache snoop modes (HS with DR + OSB, or Cluster-on-Die). These elements all play an important role in the overall performance of your virtual datacenter. This concludes the physical configuration portion of the NUMA Deep Dive series; up next: VMkernel CPU and Memory Scheduling.

The 2016 NUMA Deep Dive Series:

Part 0: Introduction NUMA Deep Dive Series

Part 1: From UMA to NUMA

Part 2: System Architecture

Part 3: Cache Coherency

Part 4: Local Memory Optimization

Part 5: ESXi VMkernel NUMA Constructs

Part 6: NUMA Initial Placement and Load Balancing Operations

Part 7: From NUMA to UMA
