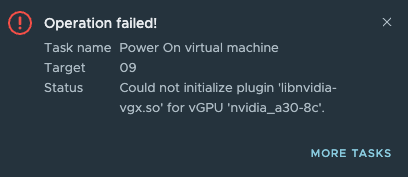

I was building a new lab with some NVIDIA A30 GPUs in a few hosts, and after installing the NVIDIA driver onto the ESXi host, I got the following error when powering up a VM with a vGPU profile:

Typically, that error points to one of three things:

- Shared Direct passthrough is not enabled on the GPU

- ECC memory is enabled

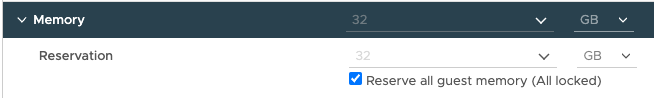

- The VM memory reservation was not set to cover its full memory range

But shared direct passthrough was enabled, and because I was using a C-type profile on an NVIDIA A30 GPU, I did not have to disable ECC memory. This is covered in section 3.4, “Disabling and Enabling ECC Memory”, of the NVIDIA Virtual GPU software documentation:

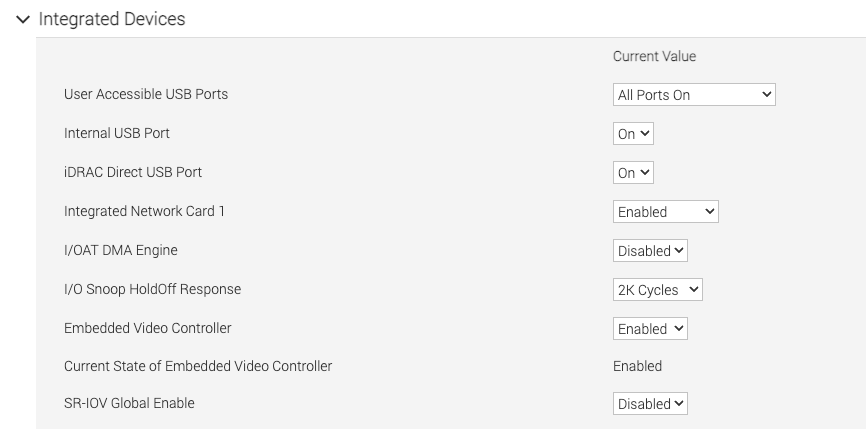

I discovered that my systems did not have SR-IOV enabled in the BIOS. After enabling “SR-IOV Global Enable”, I could finally boot a VM.

SR-IOV is also required if you want to use vGPU with Multi-Instance GPU (MIG), so please check for this setting when setting up your ESXi hosts.

But for completeness’ sake, let’s go over shared direct passthrough and GPU ECC memory configurations and see how to check both settings:

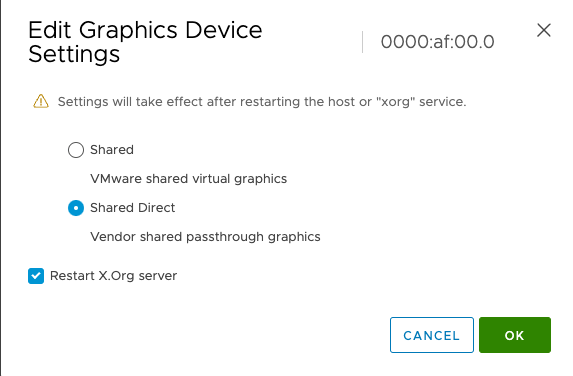

Shared Direct Passthrough

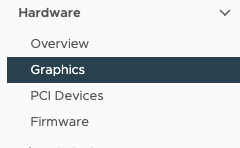

Step 1: Select the ESXi host with the GPU in the inventory view in vCenter

Step 2: Select Configure in the menu shown on the right side of the screen

Step 3: Select Graphics in the Hardware section

Step 4: Edit the host graphics settings, select Shared Direct, and click OK.
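If you prefer the host shell over the vCenter UI, the same setting can be read with esxcli. The sketch below is only an illustration: it assumes that `esxcli graphics host get` prints a line of the form `Default Graphics Type: SharedPassthru`, and the helper name `graphics_type` is made up for this example, so check the actual output on your ESXi build first.

```shell
#!/bin/sh
# Hypothetical helper: read the output of `esxcli graphics host get`
# on stdin and print the default graphics type.
# Assumption: the output contains a line such as
#   "   Default Graphics Type: SharedPassthru"
graphics_type() {
    sed -n 's/^[[:space:]]*Default Graphics Type:[[:space:]]*//p'
}

# Only query the host when esxcli is actually available.
if command -v esxcli >/dev/null 2>&1; then
    current=$(esxcli graphics host get | graphics_type)
    if [ "$current" = "SharedPassthru" ]; then
        echo "Shared Direct is enabled"
    else
        echo "Default graphics type is '$current', expected SharedPassthru"
    fi
fi
```

The parsing lives in its own function so it can be tried against a saved copy of the command output before running it on a production host.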

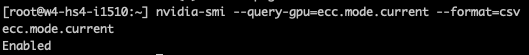

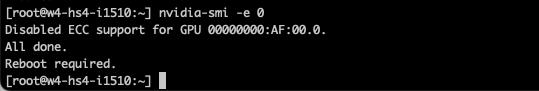

Disabling ECC Memory on the GPU Device

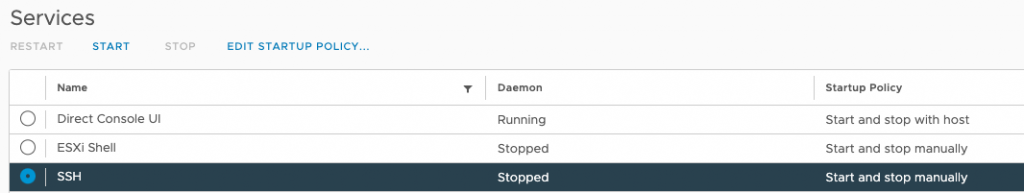

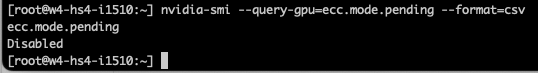

To disable ECC memory on the GPU device, use the nvidia-smi command from the ESXi host shell. Make sure SSH is enabled on the host first: select the ESXi host, go to Configure, System, Services, select SSH, and click Start.

nvidia-smi --query-gpu=ecc.mode.current --format=csv

nvidia-smi -e 0

nvidia-smi --query-gpu=ecc.mode.pending --format=csv
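Read in order, the three commands above check the current ECC mode, request that ECC be disabled on all GPUs, and show the pending mode. The new mode only becomes current after the host is rebooted, which is why the last query looks at ecc.mode.pending. A minimal sketch of that sequence follows; the helper names `ecc_value` and `disable_ecc` are hypothetical and exist only for this example.

```shell
#!/bin/sh
# Hypothetical helper: drop the CSV header line from nvidia-smi output
# on stdin and print one trimmed ECC mode value per GPU
# (for example "Enabled" or "Disabled").
ecc_value() {
    sed -e '1d' -e 's/^[[:space:]]*//' -e 's/[[:space:]]*$//'
}

# Hypothetical wrapper around the three commands from the article.
# It is only defined here, not called, so loading this file changes nothing.
disable_ecc() {
    echo "Current ECC mode:"
    nvidia-smi --query-gpu=ecc.mode.current --format=csv | ecc_value

    # Request ECC off on all GPUs in the host.
    nvidia-smi -e 0

    echo "Pending ECC mode (applied after reboot):"
    nvidia-smi --query-gpu=ecc.mode.pending --format=csv | ecc_value
}
```

Calling `disable_ecc` from the ESXi shell runs the sequence. If the pending mode reports Disabled, reboot the host and run the first query again to confirm that the current mode changed as well.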

Amazingly explained, I was facing the same issue with A10s.

Thank you Frank!