A lot of interesting material has been written about configuring Quality of Service (QoS) on 10GbE (converged) networks in virtual infrastructures. With the release of vSphere 4.1, VMware introduced a network QoS mechanism called Network I/O Control (NetIOC). The two most popular blade systems, HP with Flex-10 technology and Cisco UCS, both offer traffic shaping mechanisms at the hardware level.

Both NetIOC and Cisco UCS approach network Quality of Service from a sharing perspective, guaranteeing a minimum amount of bandwidth, as opposed to the HP Flex-10 technology, which isolates the available bandwidth and dedicates a fixed amount of bandwidth to a specified NIC.

When allocating bandwidth to the various network traffic streams, most admins try to stay on the safe side and over-allocate bandwidth to virtual machine traffic. Obviously it is essential to guarantee enough bandwidth to virtual machines, but bandwidth is finite, so this leaves less bandwidth available to other types of traffic such as vMotion. Unfortunately, reducing the bandwidth available for vMotion traffic can ultimately have a negative effect on the performance of the virtual machines.

MaxMovesPerHost

In vSphere 4.1, DRS uses an adaptive technique called MaxMovesPerHost. This technique allows DRS to decide the optimum number of concurrent vMotions per ESX host for load-balancing operations. DRS adapts the maximum number of concurrent vMotions per host (8) based upon the average migration time observed from previous migrations. Decreasing the bandwidth available for vMotion traffic can result in a lower number of allowed concurrent vMotions. In turn, the number of allowed concurrent vMotions affects the number of migration recommendations generated by DRS. DRS will only calculate and generate the number of migration recommendations it believes it can complete before the next DRS invocation. It limits the number of generated recommendations because there is no advantage in recommending migrations that cannot be completed before the next DRS invocation. By the next re-evaluation cycle, virtual machine resource demand may have changed, rendering the previous recommendations obsolete.

Limiting the amount of bandwidth available to vMotion can decrease the maximum number of concurrent vMotions per host and risks leaving the cluster imbalanced for a longer period of time.
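To make the relationship between vMotion bandwidth and migration time concrete, here is a back-of-the-envelope sketch. It assumes a single memory copy pass with no re-copying of dirtied pages and no protocol overhead, so real migrations will take longer. The function names and the 16 GB virtual machine are hypothetical; the 300-second interval is the default DRS invocation period.

```python
def copy_seconds(memory_gb, bandwidth_gbps):
    """Rough time to copy a VM's memory once: gigabytes * 8 bits / gigabits per second."""
    return memory_gb * 8 / bandwidth_gbps


def sequential_copies_per_interval(memory_gb, bandwidth_gbps, interval_sec=300):
    """How many such copies fit back to back in one DRS interval (assumed 300 s)."""
    return int(interval_sec // copy_seconds(memory_gb, bandwidth_gbps))


# Hypothetical 16 GB virtual machine at different vMotion bandwidth allocations.
for gbps in (1, 2, 4, 8):
    print(gbps, "Gbit/s:",
          copy_seconds(16, gbps), "s per copy,",
          sequential_copies_per_interval(16, gbps), "copies per interval")
```

Even in this idealized model, cutting the vMotion allocation from 4 Gbit/s to 1 Gbit/s quadruples the copy time, which is the kind of change DRS would observe in its average migration time.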

Both NetIOC and Cisco UCS Class of Service (CoS) QoS can be used to guarantee a minimum amount of bandwidth available to vMotion during contention. Both techniques allow vMotion traffic to use all the available bandwidth if no contention occurs. HP uses a different approach, isolating and dedicating a specific amount of bandwidth to an adapter and thereby possibly restricting specific workloads.
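The difference between the two approaches can be sketched with a simplified model. This is not how NetIOC, UCS or Flex-10 are implemented; it only contrasts a share-based split, where unused bandwidth is redistributed, with fixed per-adapter rate limits. The traffic class names, share values and limits are hypothetical.

```python
def shared_allocation(link_mbps, shares, demand_mbps):
    """Split a link by shares; bandwidth a class does not need is re-offered to the rest."""
    alloc = {name: 0.0 for name in shares}
    active = {name for name in shares if demand_mbps[name] > 0}
    remaining = float(link_mbps)
    while active and remaining > 1e-9:
        total_shares = sum(shares[name] for name in active)
        satisfied = set()
        spent = 0.0
        for name in active:
            offer = remaining * shares[name] / total_shares
            take = min(offer, demand_mbps[name] - alloc[name])
            alloc[name] += take
            spent += take
            if alloc[name] >= demand_mbps[name] - 1e-9:
                satisfied.add(name)
        remaining -= spent
        if not satisfied:
            break  # every active class is limited by its share: contention
        active -= satisfied
    return alloc


def fixed_allocation(limits_mbps, demand_mbps):
    """Rate limiting: each class is capped at its own limit, whatever the others do."""
    return {name: float(min(demand_mbps[name], limits_mbps[name])) for name in limits_mbps}


shares = {"vm": 50, "vmotion": 25, "mgmt": 25}            # hypothetical share values
limits = {"vm": 6000, "vmotion": 2000, "mgmt": 2000}      # hypothetical rate limits
quiet_vms = {"vm": 2000, "vmotion": 9000, "mgmt": 500}    # VMs mostly idle
busy_vms = {"vm": 9000, "vmotion": 9000, "mgmt": 500}     # contention

print(shared_allocation(10000, shares, quiet_vms))  # vMotion borrows the idle bandwidth
print(shared_allocation(10000, shares, busy_vms))   # vMotion keeps at least its share
print(fixed_allocation(limits, quiet_vms))          # vMotion stays capped at its limit
```

In the share-based model vMotion gets 7500 Mbit/s when virtual machine traffic is quiet and never drops below its 2500 Mbit/s share under contention, while the fixed limit holds it at 2000 Mbit/s in both cases.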

Brad Hedlund wrote an article explaining the fundamental differences in how bandwidth is handled between HP Flex-10 and Cisco UCS.

Cisco UCS intelligent QoS vs. HP Virtual Connect rate limiting

Recommendations for Flex-10

Due to the restrictive behavior of Flex-10, it is recommended to take the adaptive nature of DRS into account and not to restrict vMotion traffic too much when shaping network bandwidth for the configured FlexNICs. Monitor the bandwidth requirements of the virtual machines and adjust the rate limits for virtual machine traffic and vMotion traffic accordingly. This reduces the chance that DRS is delayed in reaching a steady state when a significant load imbalance exists in the cluster.

Recommendations for NetIOC and UCS QoS

Fortunately the sharing nature of NetIOC and UCS allows other network streams to use the bandwidth during periods without contention. Despite this “plays well with others” nature, it is recommended to assign a guaranteed minimum amount of bandwidth to vMotion traffic (NetIOC) or a custom Class of Service to the vMotion vNICs (UCS). Chances are that if virtual machines saturate the network, they are experiencing a high workload, and DRS will try to provide the resources the virtual machines are entitled to.

VM settings: Prefer Partially Automated over Disabled

Due to requirements or constraints it might be necessary to exclude a virtual machine from automatic migration and stop DRS from moving it around. Use the “Partially automated” setting instead of “Disabled” at the individual virtual machine automation level. Partially automated blocks automated migration by DRS, but keeps the initial placement function. During startup, DRS is still able to select the optimal host for the virtual machine. With the “Disabled” setting, the virtual machine is started on the ESX server on which it is registered, and chances of getting an optimal placement are low(er).

An exception to this recommendation might be a virtualized vCenter server. Most admins like to keep track of the vCenter server in case a disaster happens. After a disaster occurs, for example a datacenter-wide power outage, they only need to power up the ESX host on which the vCenter VM is registered and manually power up the vCenter VM. An alternative to this method is to keep track of the datastore vCenter is placed on, and to register and power on the VM on a (random) ESX host after a disaster. This is slightly more work than disabling DRS for vCenter, but it probably offers better performance of the vCenter virtual machine during normal operations.

Due to expanding virtual infrastructures and additional features, vCenter is becoming more and more important for day-to-day operational management. Assuring good performance outweighs any additional effort necessary after a (hopefully) rare occasion, but both methods have merits.

Resource pools and simultaneous vMotions

Many organizations have the bad habit of using resource pools to create a folder structure in the host and cluster view of vCenter. Virtual machines are placed inside a resource pool to show some kind of relation or sorting order, such as operating system or type of application. This is not the reason why VMware invented resource pools. Resource pools are meant to prioritize virtual machine workloads and to guarantee and/or limit the amount of resources available to a group of virtual machines.

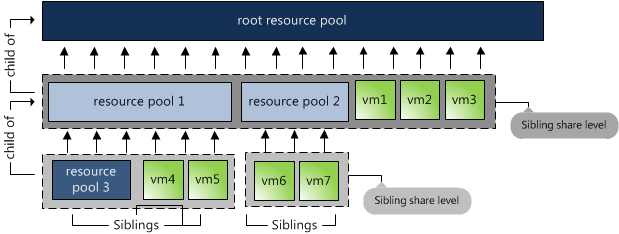

During design workshops I always try to convince the customer that resource pools should not be used to create a folder structure. The main objection I have is the sibling share level of resource pools and virtual machines.

Shares specify the priority of a virtual machine or resource pool relative to other resource pools and/or virtual machines with the same parent in the resource hierarchy. The key point is that share values can be compared directly only among siblings: the ratio of shares of VM6:VM7 tells which VM has higher priority, but the ratio of shares of VM4:VM6 does not.
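A small worked example shows why share values only mean something among siblings. The hierarchy below is hypothetical (it is not the one in the figure): two resource pools with equal shares, one holding four virtual machines with a high share value and one holding a single virtual machine with a low share value. The sketch computes the fraction of the cluster each virtual machine would be entitled to if every sibling competed fully, ignoring reservations, limits and actual demand.

```python
def effective_fractions(node, fraction=1.0, result=None):
    """Walk the hierarchy; each child gets its parent's fraction times shares / sibling total."""
    if result is None:
        result = {}
    children = node.get("children", [])
    if not children:
        result[node["name"]] = fraction
        return result
    sibling_total = sum(child["shares"] for child in children)
    for child in children:
        effective_fractions(child, fraction * child["shares"] / sibling_total, result)
    return result


# Hypothetical hierarchy: pool names, VM names and share values are made up.
cluster = {
    "name": "root",
    "children": [
        {"name": "pool_crowded", "shares": 4000, "children": [
            {"name": "vm_high_1", "shares": 2000},
            {"name": "vm_high_2", "shares": 2000},
            {"name": "vm_high_3", "shares": 2000},
            {"name": "vm_high_4", "shares": 2000},
        ]},
        {"name": "pool_small", "shares": 4000, "children": [
            {"name": "vm_low_1", "shares": 500},
        ]},
    ],
}

for name, fraction in effective_fractions(cluster).items():
    print(name, fraction)
```

Under these assumptions each "high" virtual machine ends up with 12.5% of the cluster while the lone "low" virtual machine ends up with 50%, even though its share value is four times smaller.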

Many articles have been written about this, such as “The resource pool priority-pie paradox” (Craig Risinger), “Resource pools and shares” (Duncan Epping), “Don’t add resource pools for fun” (Eric Sloof) and “Resource pools caveats” (Bouke Groenescheij).

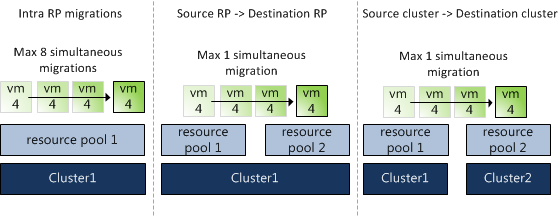

Another reason not to use resource pools as a folder structure is the limitation resource pools impose on vMotion operations. Depending on the network speed, vSphere 4.1 allows 8 simultaneous vMotion operations. However, simultaneous migrations with vMotion can only occur if the virtual machine is moving between hosts in the same cluster and is not changing its resource pool. This was recently confirmed in Knowledge Base article 1026102.

Fortunately, simultaneous cross-resource-pool vMotions can occur if the virtual machines are migrating to different resource pools, but there is still only one vMotion operation per target resource pool. Because clusters are actually implicit resource pools (the root resource pool), migrations between clusters are also limited to a single concurrent vMotion operation.
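The rules above can be expressed as a small filter. This is only a simplified reading of the behavior described here, not VMware's scheduling logic: it assumes all migrations leave the same host, that migrations staying in their resource pool may run together, that only one migration per target resource pool may run when the pool changes, and that the total is capped at 8. The names `Migration` and `startable_concurrently` are hypothetical.

```python
from collections import namedtuple

Migration = namedtuple("Migration", ["vm", "source_pool", "target_pool"])


def startable_concurrently(migrations, per_host_limit=8):
    """Return the migrations that could start together under the simplified rules."""
    same_pool = [m for m in migrations if m.source_pool == m.target_pool]
    first_per_target = {}
    for m in migrations:
        if m.source_pool != m.target_pool:
            # Only the first migration into each target pool may run at a time.
            first_per_target.setdefault(m.target_pool, m)
    candidates = same_pool + list(first_per_target.values())
    return candidates[:per_host_limit]


# Five VMs moved out of a "folder" pool into one target pool: they run one by one.
batch = [Migration("vm%d" % i, "folder_linux", "prod") for i in range(5)]
print(len(startable_concurrently(batch)))

# Five VMs that keep their resource pool: all can start together.
batch = [Migration("vm%d" % i, "prod", "prod") for i in range(5)]
print(len(startable_concurrently(batch)))
```

In this model a maintenance batch that also changes resource pool is serialized per target pool, which is where the operational slowdown comes from.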

Using resource pools to create a folder structure can not only impact the availability of resources for the virtual machines, but can also hinder your daily (maintenance) operations if batches of virtual machines are being migrated to other resource pools.

DRS 4.1 Adaptive MaxMovesPerHost

Another reason to upgrade to vSphere 4.1 is the DRS adaptive MaxMovesPerHost parameter. The MaxMovesPerHost setting determines the maximum number of migrations per host for DRS load balancing. DRS evaluates a cluster and recommends migrations. By default this evaluation happens every 5 minutes. There are limits to how many migrations DRS will recommend per interval per ESX host because there’s no advantage to recommending so many migrations that they won’t all be completed by the next re-evaluation, by which time demand could have changed anyway.

Be aware that there is no limit on max moves per host for a host entering maintenance or standby mode, but there’s a limit on max moves per host for load balancing. This can (but usually shouldn’t) be changed by setting the DRS Advanced Option “MaxMovesPerHost”. The default value is 8 and is set at the Cluster level. Remember, the MaxMovesPerHost is a cluster setting but configures the maximum migrations from a single host on each DRS invocation. This means you can still see 30 or 40 vMotion operations in the cluster during a DRS invocation.

In ESX/ESXi 4.1, the limit on moves per host is dynamic, based on how many moves DRS thinks can be completed in one DRS evaluation interval. DRS adapts to the frequency at which it is invoked (pollPeriodSec, default 300 seconds) and the average migration time observed from previous migrations. In addition, DRS follows the new maximum number of concurrent vMotion operations per host, which depends on the network speed (1GbE: 4 vMotions, 10GbE: 8 vMotions).

Due to the adaptive nature of the algorithm, the name of the setting is quite misleading, as it is no longer a maximum. The “MaxMovesPerHost” parameter still exists, but its value might be exceeded by DRS. By leveraging the increased number of concurrent vMotion operations per host and the evaluation of previous migration times, DRS is able to rebalance the cluster in fewer passes. With fewer passes, the virtual machines receive their entitled resources much quicker, which should positively affect virtual machine performance.
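The adaptive idea can be illustrated with a rough model. VMware has not published the actual calculation, so the formula below is an assumption: it estimates how many rounds of concurrent migrations fit into one invocation interval, given the average of previously observed migration times. Only the 300-second default and the concurrency values per network speed come from the text; the function name and the sample timings are hypothetical.

```python
# Concurrent vMotions per host by link speed in Gbit/s, as stated in the text.
CONCURRENT_VMOTIONS = {1: 4, 10: 8}


def estimated_moves_per_host(observed_seconds, link_gbps, poll_period_sec=300):
    """Assumed model: rounds that fit in the interval times the concurrency limit."""
    concurrent = CONCURRENT_VMOTIONS[link_gbps]
    if not observed_seconds:
        return concurrent  # assumption: no history yet, fall back to one round
    average = sum(observed_seconds) / len(observed_seconds)
    rounds = int(poll_period_sec // average)
    return rounds * concurrent


# Fast migrations on 10GbE: several rounds fit, so the estimate exceeds 8.
print(estimated_moves_per_host([50, 60, 70], link_gbps=10))

# Slow migrations on 1GbE: fewer rounds and lower concurrency.
print(estimated_moves_per_host([140, 160], link_gbps=1))
```

With the sample timings this model yields 40 moves for the 10GbE host and 8 for the 1GbE host, which illustrates how an adaptive limit can end up above the configured value of 8 when migrations complete quickly.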

vSphere 4.1 – HA and DRS deepdive book

This is a complete repost of the article written by Duncan. As all publications about this book must be checked by VMware Legal, he wrote one article and speaks for both of us.

URL: http://www.yellow-bricks.com/2010/08/24/soon-in-a-bookstore-near-you-ha-and-drs-deepdive

Over the last couple of months Frank Denneman and I have been working really hard on a secret project. Although we have spoken about it a couple of times on twitter the topic was never revealed. Months ago I was thinking about what a good topic would be for my next book. As I already wrote a lot of articles on HA it made sense to combine these and do a deepdive on HA. However a VMware Cluster is not just HA. When you configure a cluster there is something else that usually is enabled and that is DRS. As Frank is the Subject Matter Expert on Resource Management / DRS it made sense to ask Frank if he was up for it or not… Needless to say that Frank was excited about this opportunity and that was when our new project was born: VMware vSphere 4.1 – HA and DRS deepdive.

As both Frank and I are VMware employees we contacted our management to see what the options were for releasing this information to market. We are very excited that we have been given the opportunity to be the first official publication as part of a brand new VMware initiative, codenamed Rome. The idea behind Rome along with pertinent details will be announced later this year.

Our book is currently going through the final review/editing stages. For those wondering what to expect, a sample chapter can be found here. The primary audience for the book is anyone interested in high availability and clustering. There is no prerequisite knowledge needed to read the book; however, the book will consist of roughly 220 pages with all the detail you want on HA and DRS. It will not be a “how to” guide. Instead it will explain the concepts and mechanisms behind HA and DRS like Primary Nodes, Admission Control Policies, Host Affinity Rules and Resource Pools. On top of that, we will include basic design principles to support the decisions that will need to be made when configuring HA and DRS.

I guess it is unnecessary to say that both Frank and I are very excited about the book. We hope that you will enjoy reading it as much as we did writing it. Stay tuned for more info, the official book title and url to order the book.

Frank and Duncan