Lately I have received a couple of questions about swap file placement. As I mentioned in the article “Storage DRS and alternative swap file locations”, it is possible to configure the hosts in the DRS cluster to place the virtual machine swap files on an alternative datastore. Here are the questions I received and my answers:

Question 1: Will placing a swap file on a local datastore increase my vMotion time?

Yes. As the destination ESXi host cannot connect to the local datastore of the source host, the file has to be placed on a datastore that is available to the new ESXi host running the incoming VM. Therefore the destination host creates a new swap file in its swap file destination. vMotion time will increase as a new file needs to be created on the local datastore of the destination host and swapped memory pages potentially need to be copied.

Question 2: Is the swap file an empty file during creation or is it zeroed out?

When a swap file is created, it is an empty file equal in size to the virtual machine memory configuration. The file is empty and is not zeroed out.

Please note that if the virtual machine is configured with a reservation, then the swap file will be an empty file with the size of (virtual machine memory configuration - VM memory reservation). For example, if a 4GB virtual machine is configured with a 1024MB memory reservation, the size of the swap file will be 3072MB.

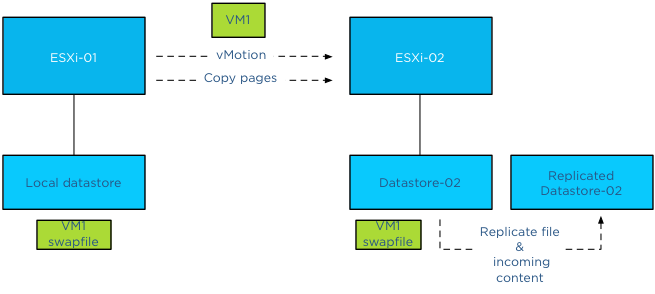
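The size rule is simple enough to express as a small helper. This is only a sketch of the arithmetic described above; the function name and the validation are my own additions, not anything exposed by vSphere.

```python
def swap_file_size_mb(configured_mb: int, reservation_mb: int = 0) -> int:
    """Swap file size: configured memory minus the memory reservation (both in MB)."""
    if not 0 <= reservation_mb <= configured_mb:
        raise ValueError("reservation must be between 0 and the configured memory")
    return configured_mb - reservation_mb


# The 4GB virtual machine with a 1024MB reservation from the example above.
print(swap_file_size_mb(4096, 1024))  # 3072
```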

Question 3: What happens with the swap file placed on a non-shared datastore during vMotion?

During vMotion, the destination host creates a new swap file in its swap file destination. If the source swap file contains swapped out pages, only those pages are copied over to the destination host.

Question 4: What happens if I have an inconsistent ESXi host configuration of local swap file locations in a DRS cluster?

When selecting the option “Datastore specified by host”, an alternative swap file location has to be configured on each host separately. If one host is not configured with an alternative location, then the swap file will be stored in the working directory of the virtual machine. When that virtual machine is moved to another host configured with an alternative swap file location, the contents of the swap file are copied over to the specified location, even though the destination host can connect to the swap file in the working directory.

Question 5: What happens if my specified alternative swap file location is full and I want to power-on a virtual machine?

If the alternative datastore does not have enough space, the VMkernel tries to store the VM swap file in the working directory of the virtual machine. You need to ensure enough free space is available in the working directory, otherwise the VM is not allowed to power on.
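The fallback order from questions 4 and 5 can be summarized in a few lines. This is a hedged sketch of the decision as described in this post, not of the actual VMkernel logic; the function name, the parameters and the use of `None` for "no alternative location configured" are assumptions made for illustration.

```python
from typing import Optional


def choose_swap_location(swap_mb: int,
                         alt_free_mb: Optional[int],
                         workdir_free_mb: int) -> str:
    """Return where the swap file ends up, following the fallback order in the text.

    alt_free_mb is None when the host has no alternative swap location configured.
    """
    if alt_free_mb is not None and alt_free_mb >= swap_mb:
        return "alternative datastore"
    if workdir_free_mb >= swap_mb:
        return "working directory"
    raise RuntimeError("not enough free space for the swap file; power-on is refused")


# Alternative datastore is full, so the working directory is used instead.
print(choose_swap_location(3072, alt_free_mb=512, workdir_free_mb=8192))
```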

Question 6: Should I place my swap file on a replicated datastore?

It is recommended to place the swap file on a datastore that has replication disabled. Replication of files increases vMotion time. When moving the contents of a swap file into a replicated datastore, the swap file and its contents need to be replicated to the replica datastore as well. If synchronous replication is used, each block or page copied from the source datastore to the destination datastore has to wait until the destination datastore receives an acknowledgement from its replication partner (the replica datastore).

Question 7: Should I place my swap file on a datastore with snapshots enabled?

To save storage space and design for the most efficient use of storage capacity, it is recommended not to place the swap files on a datastore with snapshots enabled. The VMkernel places pages in a swap file if there is memory pressure, caused either by an overcommitted state or by the virtual machine requiring more memory than its configured memory limit. It only retrieves memory from the swap file if it requires that particular page. The VMkernel will not transfer all the pages out of the swap file if the memory pressure on the host is resolved. It keeps unused swapped out pages in the swap file, as transferring unused pages is nothing more than creating system overhead. This means that a swapped out page could stay there until the virtual machine is powered off. Snapshotting idle and unused pages reduces the capacity of the pool that is available for snapshotting useful data.

Question 8: Should I place my swap file on a thin provisioned datastore (LUN)?

This is a tricky one and it all depends on the maturity of your management processes. As long as the thin provisioned datastore is adequately monitored for utilization and free space, and controls are in place that ensure sufficient free space is available to cope with bursts of memory use, then it could be a viable possibility.

The reason for the hesitation is the impact a thin provisioned datastore has on the continuity of the virtual machine.

Placement of swap files by the VMkernel is done at the logical level. The VMkernel determines if the swap file can be placed on the datastore based on its file size. That means that it checks the free space of a datastore as reported by the ESX host, not by the storage array. However, the datastore could exist in a heavily over-provisioned storage pool.

Once the swap file is created, the VMkernel assumes it can store pages in the entire swap file (see question 2 for the swap file size calculation). As the swap file is just an empty file until the VMkernel places a page in it, the swap file itself takes up little space on the thin provisioned datastore. Now this can go on for a long time and nothing will happen. But what if the total reservation consumed, the memory overcommit level and the workload spikes on the ESXi host layer are not correlated with the available space in the thin provisioning storage pool? Understand how much space the datastore could possibly obtain and calculate the maximum configured size of all existing swap files on the datastore to avoid an out-of-space condition.
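The worst-case calculation suggested above can be sketched as follows. The function names, the tuple layout and the idea of comparing against the capacity the storage pool can actually back are illustrative assumptions; the real numbers have to come from your own inventory and array.

```python
from typing import Iterable, Tuple


def worst_case_swap_mb(vms: Iterable[Tuple[int, int]]) -> int:
    """Sum of the maximum swap file sizes for (configured_mb, reservation_mb) pairs."""
    return sum(configured - reserved for configured, reserved in vms)


def swap_fits(vms: Iterable[Tuple[int, int]], backed_capacity_mb: int) -> bool:
    """True if every swap file could fill up completely without exhausting the pool."""
    return worst_case_swap_mb(vms) <= backed_capacity_mb


# Three hypothetical virtual machines: (configured memory, reservation) in MB.
inventory = [(4096, 1024), (8192, 0), (16384, 8192)]
print(worst_case_swap_mb(inventory))          # 19456
print(swap_fits(inventory, 16 * 1024))        # False
```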

(Alternative) VM swap file locations Q&A – part 2

Get notification of these blogs postings and more DRS and Storage DRS information by following me on Twitter: @frankdenneman

Mem.MinFreePct sliding scale function

One of the cool “under the hood” improvements vSphere 5 offers is the sliding scale function of the Mem.MinFreePct.

Before diving into the sliding scale function, let’s take a look at the Mem.MinFreePct function itself. MinFreePct determines the amount of memory the VMkernel should keep free. This threshold is subdivided in various memory thresholds, i.e. High, Soft, Hard and Low and is introduced to prevent performance and correctness issues.

The threshold for the low state is required for correctness. In other words, it protects the VMkernel layer from PSODs resulting from memory starvation. The soft and hard thresholds are about virtual machine performance and memory starvation prevention. The VMkernel will trigger more drastic memory reclamation techniques when it approaches the Low state. If the amount of free memory is just a bit less than the MinFreePct threshold, the VMkernel applies ballooning to reclaim memory. The ballooning memory reclamation technique introduces the least amount of performance impact on the virtual machine by working together with the Guest operating system inside the virtual machine, however there is some latency involved with ballooning. Memory compression helps to avoid hitting the low state without impacting virtual machine performance, but if memory demand is higher than the VMkernel’s ability to reclaim, a drastic measure is taken to avoid memory exhaustion, and that is swapping. However, swapping will introduce VM performance degradation and for this reason this reclamation technique is only used when desperate moments require drastic measures. For more information about reclamation techniques I recommend reading the “disable ballooning” article.

vSphere 4.1 allowed the user to change the default MinFreePct value of 6% to a different value and introduced a dynamic threshold of the Soft, Hard and Low state to set appropriate thresholds and prevent virtual machine performance issues while protecting VMkernel correctness. By default the vSphere 4.1 thresholds were set to the following values:

| Free memory state | Threshold | Reclamation mechanism |
| --- | --- | --- |
| High | 6% | None |
| Soft | 64% of MinFreePct | Balloon, compress |
| Hard | 32% of MinFreePct | Balloon, compress, swap |
| Low | 16% of MinFreePct | Swap |
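To get a feel for what these percentages mean in absolute terms, the table can be turned into a small calculation. This is a sketch based purely on the ratios in the table above (a flat MinFreePct with Soft, Hard and Low at 64%, 32% and 16% of it); the function and key names are my own.

```python
def flat_thresholds_mb(host_mb: float, min_free_pct: float = 6.0) -> dict:
    """Free-memory thresholds in MB for a flat MinFreePct, using the table's ratios."""
    high = host_mb * min_free_pct / 100
    return {
        "high": high,
        "soft": high * 0.64,
        "hard": high * 0.32,
        "low": high * 0.16,
    }


# A host with 96GB of memory and the default MinFreePct of 6%.
for state, mb in flat_thresholds_mb(96 * 1024).items():
    print(f"{state:>4}: {mb:.2f} MB")
```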

Using a default MinFreePct value of 6% can be inefficient in times where 256GB or 512GB systems are becoming more and more mainstream. A 6% threshold on a 512GB system will result in roughly 30GB idling most of the time. However, not all customers use large systems; some prefer to scale out rather than scale up. In this scenario, a 6% MinFreePct might be suitable. To have the best of both worlds, ESXi 5 uses a sliding scale for determining its MinFreePct threshold.

| Free memory state threshold | Range |
| --- | --- |
| 6% | 0-4GB |
| 4% | 4-12GB |
| 2% | 12-28GB |
| 1% | Remaining memory |

Let’s use an example to explore the savings of the sliding scale technique. On a server configured with 96GB RAM, the MinFreePct threshold will be set at 1597.44MB, as opposed to 5898.24MB if 6% was used for the complete 96GB range.

| Free memory state | Threshold | Range | Result |
| --- | --- | --- | --- |
| High | 6% | 0-4GB | 245.76MB |
| | 4% | 4-12GB | 327.68MB |
| | 2% | 12-28GB | 327.68MB |
| | 1% | Remaining memory | 696.32MB |
| Total High Threshold | | | 1597.44MB |

Due to the sliding scale, the MinFreePct threshold will be set at 1597.44MB, resulting in the following Soft, Hard and Low thresholds:

| Free memory state | Threshold | Reclamation mechanism | Threshold in MB |
| --- | --- | --- | --- |
| Soft | 64% of MinFreePct | Balloon | 1022.36 |
| Hard | 32% of MinFreePct | Balloon, compress | 511.18 |
| Low | 16% of MinFreePct | Balloon, compress, swap | 255.59 |
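The sliding scale is a banded calculation: each slice of host memory contributes at its own percentage. Below is a minimal sketch of that arithmetic using the bands listed in this article; the names are hypothetical and this is not how the VMkernel exposes the value.

```python
# (band width in MB, percentage kept free) for the first 28GB, as listed above.
BANDS_MB = [(4 * 1024, 0.06), (8 * 1024, 0.04), (16 * 1024, 0.02)]
REMAINDER_PCT = 0.01  # everything above 28GB


def sliding_min_free_mb(host_mb: float) -> float:
    """MinFreePct threshold in MB for a host, applying each band to its own slice."""
    total = 0.0
    remaining = host_mb
    for width_mb, pct in BANDS_MB:
        portion = min(remaining, width_mb)
        total += portion * pct
        remaining -= portion
    return total + remaining * REMAINDER_PCT


high = sliding_min_free_mb(96 * 1024)
print(f"high: {high:.2f} MB")
for state, ratio in (("soft", 0.64), ("hard", 0.32), ("low", 0.16)):
    print(f"{state}: {high * ratio:.2f} MB")
```

Running the same function for a 512GB host shows how little is kept free compared to a flat 6%.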

Although this optimization isn’t as sexy as Storage DRS or one of the other new features introduced by vSphere 5, it is a feature that helps you drive your environments to higher consolidation ratios.

Re: impact of large pages on consolidation ratios

Gabe wrote an article about the impact of large pages on the consolidation ratio. I want to make something clear before the wrong conclusions are drawn.

Large pages will be broken down if memory pressure occurs in the system. If no memory pressure is detected on the host, i.e. the demand is lower than the memory available, the ESX host will try to leverage large pages to have the best performance.

Just calculate how many entries the Translation Lookaside Buffer (TLB) has to cover when a 2GB virtual machine uses small pages (2048MB / 4KB = 524,288) compared to large pages (2048MB / 2MB = 1,024). The VMkernel needs to traverse the TLB through all these pages. And this is only for one virtual machine; imagine if there are 50 VMs running on the host.
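The page-count comparison is plain division, and it is worth running for your own virtual machine sizes. A small sketch, with the helper name chosen purely for illustration:

```python
def pages_needed(vm_mb: int, page_kb: int) -> int:
    """Number of pages required to map vm_mb of memory with pages of page_kb."""
    return (vm_mb * 1024) // page_kb


SMALL_PAGE_KB = 4      # 4KB small pages
LARGE_PAGE_KB = 2048   # 2MB large pages

vm_mb = 2048
small = pages_needed(vm_mb, SMALL_PAGE_KB)
large = pages_needed(vm_mb, LARGE_PAGE_KB)
print(small, large, small // large)
```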

As with ballooning and compressing, if there is no need to over-manage memory then ESX will not do it, as it generates unnecessary load.

Using large pages shows a different memory usage level, but there is nothing to worry about. If memory demand exceeds the availability of memory, the VMkernel will resort to share-before-swap and compress-before-swap, resulting in collapsed pages and reduced memory pressure.

Impact of oversized virtual machines part 3

Part 1 of the series of posts on the impact of oversized virtual machines reviewed NUMA architecture, memory overhead reservation and share levels. Part 2 zoomed in on the impact of memory overhead reservation and share levels on HA and DRS. This part looks at CPU scheduling, memory management and what impact oversized virtual machines have on the environment when a bootstorm occurs.

Multiprocessor virtual machine

In most cases, adding more CPUs to a virtual machine does not automatically guarantee increased throughput of the application, because some workloads cannot always take advantage of all the available CPUs. Sharing resources and scheduling these processes will introduce additional overhead.

For example, a four-way virtual machine is not four times as productive as a single-CPU system. If the application is unable to scale, then the application will not benefit from these additional available resources.

Progress

Although relaxed co-scheduling reduces the requirement of the VMkernel to simultaneously schedule all vCPUs of the virtual machine, periodically scheduling the unused or idle vCPUs is still necessary to keep the progress of each vCPU in the virtual machine acceptably synchronized.

Esxtop also gives scheduling stats for SMP virtual machines:

%CRUN: All VCPUs want to run at once. CRUN is the amount of time between when a PCPU is told to run a certain VCPU on an SMP VM and when it is actually able to run that VM. This should be almost 0.

%CSTOP: If a VCPU gets ahead of another VCPU of the same SMP VM, then we ask the faster VCPU to stop until the other one can catch up. The time spent in this stopped state is CSTOP.

Single thread application

Only applications that use multiple threads and allow them to be scheduled in parallel can benefit from multiprocessor systems. A single-threaded application can only be scheduled on one CPU at a time and will not benefit from the multiple CPUs available. The Guest OS is able to migrate the thread between the available CPUs, introducing unnecessary overhead such as interrupts, context switches and cache misses.

Timer interrupts

In older guest operating systems, the unused virtual CPUs still take timer interrupts, which consumes a small amount of additional CPU. Please refer to the KB article “High CPU Utilization of Inactive Virtual Machines – KB1077”.

Configured memory

Oversizing the memory configuration of a virtual machine can impact the performance of the virtual machine itself or even worse, impact the other active virtual machines on the host and in the cluster. Using memory reservations on oversized virtual machines will make it go from bad to worse.

Application memory management

Excess memory is a problem when the application uses this memory opportunistically, in other words when the application is hoarding memory. Java, SAP and often Oracle workloads assume they can use all the memory they detect. Because ESX cannot determine which memory is important to the virtual machine, it always backs memory pages of the virtual machine with physical pages. Besides creating a large memory footprint on the physical level, these kinds of applications add a third level of memory management as well.

Due to this additional management level, the Guest OS does not understand which pages are important and which are not. And because the Guest OS isn’t aware, it cannot return inactive pages to the balloon driver when requested, therefore impacting the performance of the application during contention even more.

Setting memory reservation at virtual machine level will guarantee the availability of physical memory and will secure a certain level of application performance (if memory bound). However setting memory reservations at virtual machine level will impact the virtual infrastructure and the larger the memory reservation, the larger the impact. Visit “Impact of memory reservation” for more info.

To avoid these effects, it is recommended to monitor the behavior of the application over time and tune the configuration of the virtual machine and its reservation to get proper performance and limit the impact of its configured memory and the memory reservation.

NUMA node

If the virtual machines mentioned in the previous paragraph are configured with more memory than available in their home NUMA node, the system needs to fetch the memory from remote NUMA nodes. Accessing memory from remote nodes introduces latencies and generally reduces the throughput of the vCPU. ESX does not communicate any NUMA information to the Guest OS and therefore both the Guest OS and the application are unaware of the non-uniform latency characteristics of the underlying platform. The Guest OS and application are therefore unable to prioritize which memory they will use.

If the virtual machine uses all the available memory of a NUMA node, it will lead to a higher degree of remote memory of all the other active virtual machines using the pCPU, leading to higher memory latencies and less throughput of the other virtual machines and eventually an intra-node migration. For more information about NUMA nodes, please read the articles: Sizing VMs and NUMA nodes and ESX 4.1 NUMA Scheduling.

Attempt to configure the virtual machine with less memory than is available in a NUMA node.
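A quick sanity check for this guideline could look like the sketch below. It assumes that host memory is spread evenly across the NUMA nodes and ignores VMkernel and overhead memory, so treat the result as an upper bound; the function name and parameters are hypothetical.

```python
def fits_in_home_node(vm_mb: int, host_mb: int, numa_nodes: int) -> bool:
    """True if the VM's configured memory fits in one NUMA node.

    Assumption: memory is divided evenly over the nodes and nothing else uses it.
    """
    if numa_nodes < 1:
        raise ValueError("a host has at least one NUMA node")
    node_mb = host_mb / numa_nodes
    return vm_mb <= node_mb


# Hypothetical host: 96GB spread over 4 NUMA nodes, so 24GB per node.
print(fits_in_home_node(16 * 1024, 96 * 1024, 4))  # fits
print(fits_in_home_node(32 * 1024, 96 * 1024, 4))  # needs remote memory
```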

Swap file

During boot a swap file is created that equals the virtual machine’s configured memory minus the configured memory reservation. If no memory reservation is set, the virtual machine swap file (.vswp) equals the configured memory. Large virtual machines will generate an additional requirement for storing these large swap files, reducing the consolidation ratio of virtual machines per VMFS datastore.

Bootstorms

A bootstorm is the occurrence of powering on a multitude of virtual machines simultaneously.

Virtual infrastructures running versions prior to ESX 4.1 can encounter memory contention when a bootstorm of virtual machines running Windows occurs. Windows checks how much memory is available to the OS by zeroing out the pages it detects. Transparent Page Sharing will collapse these pages, but this will not occur immediately. Transparent Page Sharing is a cycle-driven process that tries to make a pass over the virtual machine memory within a timeframe of 3600 seconds. The level of contention will impact the speed of the TPS process. During a bootstorm, this zero-out behavior and the delayed TPS process can introduce contention. Usually this contention is short-lived. Unfortunately, during the startup phase of the guest OS the balloon driver will not be loaded and this situation can lead to compressing (10% of configured memory) and swapping useless data straight to disk.

ESXTOP will display swapped out memory, but due to the nature of the data it will show little to no swap-in.

ESX 4.1 uses a new technique called zero-page sharing. An in-depth post about this cool new technique will follow shortly.

End-note

This post concludes the three-part series about the impact of oversized virtual machines. The reason I wrote these articles is that I know many organizations still size their virtual machines on assumed peak loads happening somewhere in the (late) future of that service or application. Many organizations are using the same policy or method used for physical machines. The beauty of using virtual machines is the flexibility an organization has when it comes to determining the size of a machine during its lifecycle. Leverage these mechanisms and incorporate this in your service catalog and daily operations. Size the virtual machine according to its current or near-future workload.

Disable ballooning?

Recently, Paul Meehan submitted this question via a comment on the “Memory reclamation, when and how” article:

Hi,

we are currently considering virtualising some pretty significant SQL workloads. While the VMware best practices documents for SQL server inside VMware recommend turning on ballooning, a colleague who attended a deep dive with a SQL Microsoft MVP came back and the SQL guy strongly suggested that ballooning should always be turned off for SQL workloads. We have 165 SQL instances, some of which will need 5-10000 IOPS so performance and memory management is critical. Do you guys have a view on this from experience?

Thx,

Paul

I receive this kind of question a lot, whether it is about SQL, Oracle or Citrix. And there always seems to be an expert who recommends disabling ballooning. Now this statement can be interpreted in two ways.

1. Disable the memory reclamation mechanism by adding a particular parameter (sched.mem.maxmemctl) in the settings of the virtual machine.

– or –

2. Ensure that enough physical memory resources are available to the virtual machine to keep the VMkernel from reclaiming memory of that particular virtual machine.

I always hope that they mean guarantee enough memory to the virtual machine to stop the VMkernel from reclaiming memory from that specific VM. But unfortunately most specialists insist on disabling the mechanism.

Why is disabling the ballooning mechanism bad?

Many organizations that deploy virtual infrastructures rely on memory overcommitment to reach a higher consolidation ratio and higher memory utilization. In a virtual infrastructure not every virtual machine is actively using its assigned memory at the same time and not every virtual machine is making use of its configured memory footprint. To allow memory overcommitment, the VMkernel uses different virtual machine memory reclamation mechanisms.

1. Transparent Page Sharing

2. Ballooning

3. Memory compression

4. Host swapping

Except for Transparent Page Sharing, all memory reclamation techniques only become active when the ESX host experiences memory contention. The VMkernel will use a specific memory reclamation technique depending on the level of the host free memory. When the ESX host has 6% or less free memory available it will use the balloon driver to reclaim idle memory from virtual machines. The VMkernel selects the virtual machines with the largest amounts of idle memory (detected by the idle memory tax process) and will ask the virtual machine to select idle memory pages.

Now to fully understand the beauty of the balloon driver, it’s crucial to understand that the VMkernel is not aware of the Guest OS internal memory management mechanisms. Guest OS’s commonly use an allocated memory list and a free memory list. When a guest OS makes a request for a page, ESX will back that page with physical memory. When the Guest OS stops using the page internally, it will remove the page from the allocated memory list and place it on the free memory list. Because no data is changed, ESX will keep storing this data in physical memory.

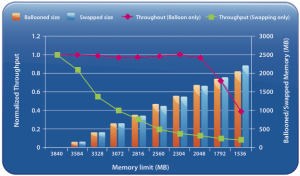

When the balloon driver is utilized, it requests the guest OS to allocate a certain amount of pages. Typically the Guest OS will allocate memory that has been idle or is registered in the Guest OS free list. If the virtual machine has enough idle pages, no guest-level paging or, even worse, kernel-level paging is necessary. Scott Drummonds tested an Oracle database VM against an OLTP load generation tool and researched the (lack of) impact of the balloon driver on the performance of the virtual machine. The results are displayed in this image:

Scott’s explanation:

Results of two experiments are shown on this graph: in one memory is reclaimed only through ballooning and in the other memory is reclaimed only through host swapping. The bars show the amount of memory reclaimed by ESX and the line shows the workload performance. The steadily falling green line reveals a predictable deterioration of performance due to host swapping. The red line demonstrates that as the balloon driver inflates, kernel compile performance is unchanged.

So the beauty of ballooning lies in the fact that it allows the guest OS itself to make the hard decision about which pages to be paged out without the hypervisor’s involvement. Because the Guest OS is fully aware of the memory state, the virtual machine will keep on performing as long as it has idle or free pages.

When ballooning is disabled

When we follow the recommendations of non-VMware experts, we would disable ballooning, resulting in the following available memory reclamation techniques:

1. Transparent Page Sharing

2. Memory compression

3. Host-level swapping (.vswp)

Memory compression

Memory compression is offered in vSphere 4.1. The VMkernel will always try to compress memory before swapping. This feature is very helpful and a lot faster than swapping. However, the VMkernel will only compress a memory page if the compression ratio is 50% or more, otherwise the page will be swapped. Furthermore, the default size of the compression cache is 10% of the configured memory. If the compression cache is full, one compressed page must be replaced in order to make room for a new page, and the older page will be swapped out. During heavy contention memory compression will become the first stop before a page ultimately ends up as a swapped page.

Increasing the memory compression cache can have a counterproductive effect: the memory compression cache is part of the virtual machine memory usage, so configuring large memory compression caches can itself introduce memory pressure or contention.
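The compress-or-swap decision can be illustrated with a toy example. This sketch uses Python's `zlib` purely as a stand-in compressor (it is not what the VMkernel uses), assumes a 4KB page, and interprets "a compression ratio of 50% or more" as "the compressed page fits in half a page"; the function name is hypothetical.

```python
import random
import zlib

PAGE_SIZE = 4096  # bytes, a 4KB small page


def reclaim_action(page: bytes) -> str:
    """Return 'compress' if the page shrinks to half its size or less, else 'swap'."""
    compressed = zlib.compress(page)
    return "compress" if len(compressed) <= len(page) // 2 else "swap"


# A zeroed page compresses extremely well; pseudo-random data does not.
zero_page = bytes(PAGE_SIZE)
rng = random.Random(0)
noisy_page = bytes(rng.getrandbits(8) for _ in range(PAGE_SIZE))

print(reclaim_action(zero_page))
print(reclaim_action(noisy_page))
```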

Host-level Swapping

In contrast to ballooning, host-level swapping does not communicate with the Guest OS. The VMkernel has no knowledge about the status of the page in the Guest OS, only that the physical page belongs to a specific virtual machine. Because the VMkernel is unaware of the content of the data stored inside the page and its significance to the Guest OS, it could happen that the VMkernel decides to swap out specific Guest OS kernel pages. Guest OS kernel pages will never be swapped by the Guest OS itself as they are crucial to maintaining kernel performance.

So by disabling ballooning, you have just deactivated the most intelligent memory reclamation technique. This leaves the VMkernel with the option to either compress a memory page or just rip out a completely random (and maybe crucial) page, significantly increasing the possibility of deteriorating the virtual machine performance. To me, that does not sound like something worth recommending.

Alternative to disabling the balloon driver while guaranteeing performance?

The best option to guarantee performance is to use the resource allocation settings; shares and reservations.

Use shares to define priority levels and use reservations to guarantee physical resources even when the VMkernel is experiencing resource contention.

How reservations work, and the impact they have on the virtual infrastructure, is described in the articles “Impact of Memory reservations”, “Resource Pools memory reservations” and “Reservations and CPU scheduling”.

However, setting reservations will impact the virtual infrastructure. A well-known impact of setting a reservation is on the HA slot size if the cluster is configured with “Host failures cluster tolerates”. More info on HA can be found in the HA deep dive on yellow-bricks. To circumvent this impact one might choose to configure the HA cluster with the HA policy “Percentage of cluster resources reserved as fail over spare capacity”. Due to the HA-DRS integration introduced in vSphere 4.1, the main caveat of dealing with fragmented clusters is resolved.

Disabling the balloon driver will likely worsen the performance of the virtual machine when the ESX host experiences resource contention. I suspect that the advice given by other-vendor experts is to avoid memory reclamation, and the only two built-in recommended mechanisms to help avoid memory reclamation are the resource allocation settings: shares and reservations.