We’re extending the VMware Cloud Services overview series with a tech preview of the VMware Cloud Flex Compute service. Frances Wong shares a lot of interesting use cases and details with us in this episode!

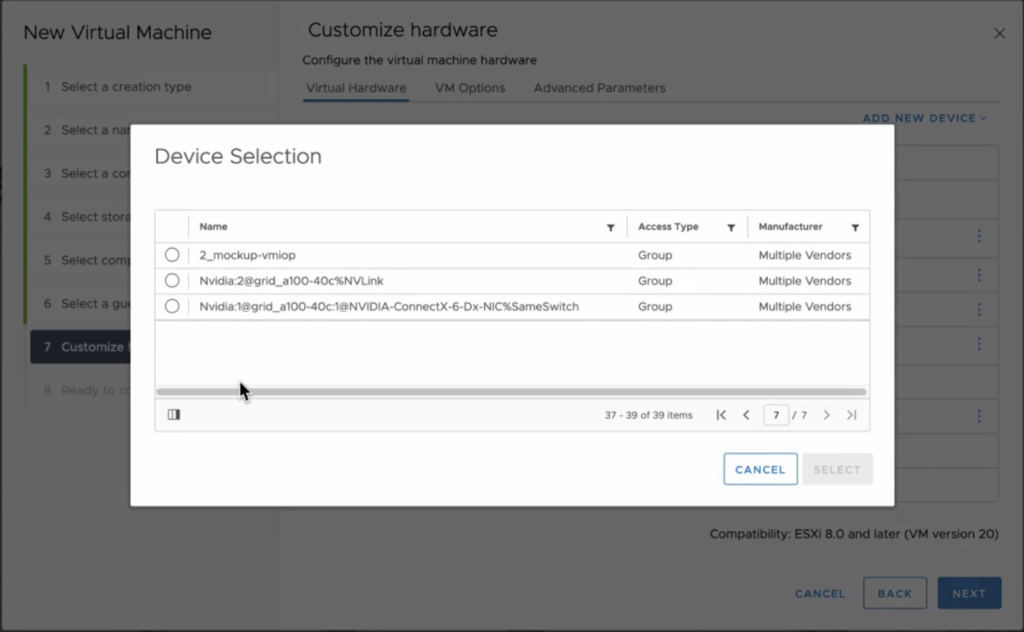

In short, VMware Cloud Flex Compute is a new approach to the enterprise-grade VMware Cloud: instead of being offered only as a full SDDC, it is sliced, diced, sold, and deployed in fractional SDDC increments in the global cloud.

Make sure to follow Frances on Twitter (https://twitter.com/frances_wong) to keep up to date with her adventures, and check out the VMware website for more details on the Cloud Flex Compute offering!

Additional resources can be found here:

- Announcement – https://blogs.vmware.com/cloud/2022/08/30/announcing-vmware-cloud-flex-compute/

- Deep Dive – https://blogs.vmware.com/cloud/2022/08/30/vmware-cloud-flex-compute-deep-dive/

- Early Access Demo – https://vmc.techzone.vmware.com/?share=video2847&title=vmware-cloud-flex-compute-early-access-demo

Follow us on Twitter for updates and news about upcoming episodes: https://twitter.com/UnexploredPod. Last but not least, make sure to hit that subscribe button, rate wherever possible, and share the episode with your friends and colleagues!