VMware vSphere 4.1 introduces a new affinity rule, called “Virtual Machines to Hosts” (VM-Host), which I described in the article “VM to Host affinity rule”. A short recap: VM-Host affinity rules are available in two flavors:

- Must run rules (Mandatory)

- Should run rules (Preferential)

Providing these two options raises a new question for the administrator or architect: when is a mandatory rule needed, and when is a preferential rule the better choice?

I think it all depends on the risk and limitations introduced by each rule. Let’s review the difference between the rules, the behavior of each rule and the impact they have on cluster services and maintenance mode.

What is the difference between a mandatory and a preferential rule?

- A mandatory rule limits HA, DRS and the user in such a way that a virtual machine may not be powered on on, or moved to, an ESX host that does not belong to the associated DRS host group.

- A preferential rule defines a preference for DRS to run the virtual machine on the hosts specified in the associated DRS host group.

How does HA treat preferential rules?

VMware High Availability obeys mandatory rules when placing virtual machines after a host failure. It can only place virtual machines on the ESX hosts that are specified in the DRS host group. Preferential rules are a different matter: DRS does not communicate the existence of preferential rules to HA, so HA is not aware of them. HA therefore cannot avoid placing a virtual machine on an ESX host that is not part of the DRS host group, which violates the affinity rule. DRS will correct this violation during the next invocation.
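To make the difference concrete, here is a minimal sketch of how the set of restart candidates differs per rule type. It is a toy model and not VMware code: the `VmHostRule` class, the `ha_candidate_hosts` function and the host names are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VmHostRule:
    """Hypothetical stand-in for a VM-Host affinity rule."""
    vm: str
    host_group: frozenset
    mandatory: bool


def ha_candidate_hosts(vm, surviving_hosts, rules):
    """Hosts HA may restart `vm` on in this toy model.

    Only mandatory rules shrink the candidate set. Preferential rules
    are skipped because HA is assumed not to know about them.
    """
    candidates = set(surviving_hosts)
    for rule in rules:
        if rule.vm == vm and rule.mandatory:
            candidates &= rule.host_group
    return candidates


# esx01 has failed; both VMs were tied to the group {esx01, esx02}.
surviving = {"esx02", "esx03", "esx04"}
rules = [
    VmHostRule("vm-a", frozenset({"esx01", "esx02"}), mandatory=True),
    VmHostRule("vm-b", frozenset({"esx01", "esx02"}), mandatory=False),
]

vm_a_hosts = ha_candidate_hosts("vm-a", surviving, rules)
vm_b_hosts = ha_candidate_hosts("vm-b", surviving, rules)
```

In this sketch vm-a can only be restarted on esx02, while vm-b may land on any surviving host, including one outside its host group.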

How does DRS treat preferential rules?

During a DRS invocation, DRS first runs the algorithm with the preferential rules treated as mandatory rules and evaluates the result. If the result contains violations of cluster constraints, such as over-reserving a host or over-utilizing a host to the point of 100% CPU or memory utilization, the preferential rules are dropped and the algorithm is run again.
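The two-pass behavior can be sketched roughly as follows. This is a simplified illustration under my own assumptions, not the actual DRS algorithm: placement is a naive first-fit on a single resource, a "constraint violation" simply means a virtual machine does not fit, and the function names are hypothetical.

```python
def allowed_hosts(vm, hosts, *rule_sets):
    """Hosts that satisfy every rule set that mentions `vm`."""
    allowed = list(hosts)
    for rules in rule_sets:
        if vm in rules:
            allowed = [h for h in allowed if h in rules[vm]]
    return allowed


def first_fit(demand, capacity, allowed):
    """Naive placement; returns None when a VM cannot be placed
    without exceeding host capacity (our stand-in for a violation)."""
    free = dict(capacity)
    placement = {}
    for vm, need in demand.items():
        host = next((h for h in allowed[vm] if free[h] >= need), None)
        if host is None:
            return None
        free[host] -= need
        placement[vm] = host
    return placement


def drs_invocation(demand, capacity, mandatory, preferential):
    """Pass 1 treats preferential rules as mandatory; pass 2 drops them.

    Returns (placement, preferential_rules_honored).
    """
    strict = {vm: allowed_hosts(vm, capacity, mandatory, preferential)
              for vm in demand}
    result = first_fit(demand, capacity, strict)
    if result is not None:
        return result, True
    relaxed = {vm: allowed_hosts(vm, capacity, mandatory) for vm in demand}
    return first_fit(demand, capacity, relaxed), False


capacity = {"esx01": 4, "esx02": 4}
preferential = {"vm-a": {"esx01"}, "vm-b": {"esx01"}}

# Both VMs fit on esx01, so the preferential rules are kept.
small = drs_invocation({"vm-a": 2, "vm-b": 2}, capacity, {}, preferential)

# Together they would overcommit esx01, so the rules are dropped.
large = drs_invocation({"vm-a": 3, "vm-b": 3}, capacity, {}, preferential)
```

With the small virtual machines both end up on esx01 and the rules hold. With the large ones the first pass fails, and the second pass spreads them over both hosts.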

Limitations

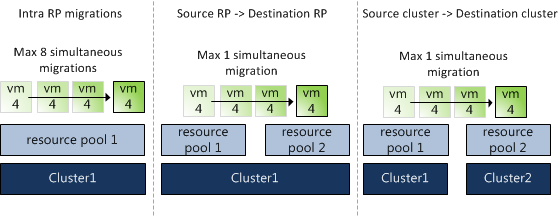

In essence, a VM-Host affinity rule restricts the number of hosts on which the virtual machines may be powered on or to which virtual machines may migrate. Setting VM-Host affinity rules can limit load-balancing and evacuation for maintenance mode.

Load-balancing limitations

A certain level of risk is introduced when mandatory VM-Host affinity rules are used. Because these rules only allow virtual machines to start on the ESX hosts in the associated DRS host group, they limit HA’s ability to select a compatible ESX host on which to place the virtual machine.

In addition, using mandatory VM-Host affinity rules reduces the virtual machine placement options available to DRS when defragmenting the cluster. Resource fragmentation can occur when the HA “Percentage based” admission control policy is used.

During a failover, HA will request a defragmentation from DRS. DRS tries to migrate virtual machines to regain enough unfragmented resources to fit and start all virtual machines. Because DRS is allowed to use “multi-hop” migrations, its calculations usually create a “chain” of migrations when defragmenting a host. For example: VM-A migrates to host 2 and VM-B migrates from host 2 to host 3. Mandatory rules narrow the playing field: virtual machines bound by a rule can only move within the associated DRS host group, which reduces the overall options for moving virtual machines around the cluster, including virtual machines that are not associated with a VM-Host affinity rule.

Maintenance mode

DRS will not violate CPU and memory reservations to obey mandatory VM-Host affinity rules, and it will not violate mandatory rules to allow reservations to be honored. During placement both requirements must be met, so DRS will only place a virtual machine if both its reservation and the mandatory rule can be satisfied. This behavior affects the ability of DRS to select a suitable, compatible host on which to place virtual machines during automated maintenance mode evacuations.
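A small sketch shows how the two requirements combine during an evacuation. Again, this is an illustrative model with invented names and numbers (`evacuation_targets`, memory figures in GB), not how DRS is actually implemented.

```python
def evacuation_targets(reservation, mandatory_group, unreserved, leaving_host):
    """Hosts that can receive a VM when `leaving_host` enters maintenance mode.

    A host qualifies only if it has enough unreserved capacity for the
    VM's reservation AND it belongs to the VM's mandatory host group
    (when the VM has one). Neither requirement is relaxed for the other.
    """
    return [
        host
        for host, free in unreserved.items()
        if host != leaving_host
        and free >= reservation
        and (mandatory_group is None or host in mandatory_group)
    ]


# Unreserved memory per host in GB (made-up numbers).
unreserved = {"esx01": 8, "esx02": 2, "esx03": 16}

# A VM with a 4 GB reservation, bound to {esx01, esx02}, must leave esx01.
with_rule = evacuation_targets(4, {"esx01", "esx02"}, unreserved, "esx01")

# The same VM without a mandatory rule.
without_rule = evacuation_targets(4, None, unreserved, "esx01")
```

With the mandatory rule there is no valid target: esx02 is in the host group but cannot honor the reservation, and esx03 has the capacity but is outside the group. Without the rule, esx03 is a valid destination and the evacuation can proceed.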

Conclusion

Well, it’s up to you to decide which rule is appropriate to use for separating workloads across the ESX hosts in the cluster. By knowing the impact and limitations introduced by mandatory rules, one might be able to make an informed decision.