One setting that catches most admins off guard is the vSwitch Failback setting in combination with HA. If the management network vSwitch is configured with Active/Standby NICs and the HA isolation response is set to “Shutdown” VM or “Power-off” VM, it is advised to set the vSwitch Failback mode to No. If left at the default (Yes), all the ESX hosts in the cluster, or even the entire virtual infrastructure, might issue an Isolation response if one of the management network physical switches is rebooted. Here’s why:

Just a quick rehash:

Active\Standby

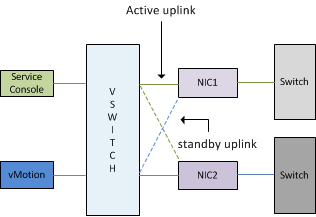

One NIC (vmnic0) is assigned as active to the management\service console portgroup; the second NIC (vmnic1) is configured as standby. The vMotion portgroup is configured the other way around, with the first NIC (vmnic0) as Standby and the second NIC (vmnic1) as Active.

Failback

The Failback setting determines whether the VMkernel will return the uplink (NIC) to active duty after recovery of a downed link or failed NIC. If Failback is set to Yes, the NIC will return to active duty. When Failback is set to No, the failed NIC is assigned the Standby role and the administrator must manually reconfigure the NIC to the active state.
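To make the two Failback modes concrete, here is a minimal Python sketch of an Active/Standby team. It is a toy model and not a VMware API: the `Team` class, its methods and the `uplink_after_switch_reboot` helper are hypothetical names made up for illustration, and the model only reacts to link state, just like the default link-status detection discussed in this post.

```python
from dataclasses import dataclass


@dataclass
class Team:
    """Toy Active/Standby NIC team (hypothetical model, not a VMware API)."""
    preferred: str          # NIC configured as Active
    standby: str            # NIC configured as Standby
    failback: bool = True   # True models Failback = Yes (the default)

    def __post_init__(self):
        self.in_use = self.preferred
        self.link_up = {self.preferred: True, self.standby: True}

    def _other(self, nic):
        return self.standby if nic == self.preferred else self.preferred

    def link_down(self, nic):
        self.link_up[nic] = False
        other = self._other(nic)
        # Fail over only if the uplink in use lost link and the other one has link.
        if nic == self.in_use and self.link_up[other]:
            self.in_use = other

    def link_restored(self, nic):
        self.link_up[nic] = True
        if not self.link_up[self.in_use]:
            # Nothing else has link, so the recovered NIC is the only option.
            self.in_use = nic
        elif self.failback and nic == self.preferred:
            # Failback = Yes: the recovered NIC returns to active duty.
            self.in_use = nic


def uplink_after_switch_reboot(failback):
    """Which NIC carries traffic after vmnic0's switch goes down and comes back."""
    team = Team(preferred="vmnic0", standby="vmnic1", failback=failback)
    team.link_down("vmnic0")       # switch reboot starts
    team.link_restored("vmnic0")   # port shows link again
    return team.in_use


print(uplink_after_switch_reboot(True))   # vmnic0
print(uplink_after_switch_reboot(False))  # vmnic1
```

With Failback set to No the traffic stays on vmnic1 until an administrator moves it back, which is exactly the extra manual step mentioned above.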

Effect of the Failback Yes setting on the environment

When using the default setting of Failback, unexpected behavior can occur during maintenance of a physical switch. Most switches, like those from Cisco, initiate the port after boot, the so-called Lights-on state. The port is active but is still unable to receive or transmit data. The process from Lights-on to forwarding mode can take up to 50 seconds. Unfortunately, ESX is not able to distinguish between Lights-on status and forwarding mode; it therefore treats the link as usable and returns the NIC to active status.

High Availability will proceed to transmit heartbeats and expect to receive heartbeats. After missing 13 seconds of heartbeats, HA will try to ping its Isolation Address. If that ping goes unanswered too, the specified Isolation response kicks in: two seconds later the host will shut down or power off its virtual machines to allow other ESX hosts to power them up. But because it is common, recommended even, to configure each host in the cluster in an identical manner, the active NIC used by the management network of every ESX host connects to the same physical switch. Due to this design, once the switch is booted, a cluster-wide Isolation response occurs, resulting in a cluster-wide outage.
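The timing can be sketched in a few lines of Python. This is a rough model under stated assumptions, not how HA is implemented: the function name and its parameters are hypothetical, the 13 and 2 second values are the ones quoted in this post, and the model assumes that neither heartbeats nor the isolation address are reachable while the port shows link without forwarding.

```python
HEARTBEAT_TIMEOUT_S = 13  # from the post: seconds of missed heartbeats before the isolation ping
RESPONSE_DELAY_S = 2      # from the post: isolation response follows two seconds later


def isolation_triggered(blackhole_s, failback):
    """Return True if a host would fire its isolation response.

    blackhole_s: seconds the recovered port shows link but does not forward.
    failback:    True models Failback = Yes, where management traffic moves
                 back to the recovered NIC as soon as link is detected.
    """
    if not failback:
        # Failback = No: management traffic stays on the working standby uplink.
        return False
    # Assumption: nothing gets through during the whole non-forwarding window.
    return blackhole_s >= HEARTBEAT_TIMEOUT_S + RESPONSE_DELAY_S


for failback in (True, False):
    print(failback, isolation_triggered(50, failback))
# True True
# False False
```

Since every host in an identically configured cluster sees the same window on the same switch, the result for one host is the result for all of them.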

To allow switch maintenance, it’s better to set the vSwitch failback mode to No. Selecting this setting increases the number of manual operations after a failure or certain maintenance operations, but it reduces the chance of “false positives” and cluster-wide isolation responses.

vSwitch Failback and High Availability

Frank,

Nice article!

By “… setting vSwitch failover mode to No…”, I am assuming you mean the Failback option?

Regards,

Harold

http://www.twitter.com\hharold

LOL, yes I meant the failback mode.

Changed it.

Can't figure out why I used the term failover.

Nice article Frank.

A question. You mention the process from Lights-on to forwarding can take up to 50 seconds. I usually see this when spanning-tree is enabled and Portfast is not enabled. With Portfast enabled I would assume the port would be up fast enough. Is this not the case?

Thanks Dennis,

I'm talking about the time the switch takes to initialize the ports, not the time to initialize the services on the port.

I believe Cisco recommends disabling services you don’t use on the ports; this will shorten the time needed to fully initialize the port.

@Frank

Ok. I understand, thanks for explaining. So with just a disconnect/connect of the network cable there is no problem if Portfast is enabled, but with a complete switch restart/start the ports need to initialize, which will take longer.

And if you use Active/Active teaming you're in the clear, right?

If, for example, the Mgmt network is running through nic0 (teamed active/active with nic1) and nic0 has no link due to a physical switch reboot, then the Mgmt network will fail over to the other active NIC: nic1. When the physical switch initializes the port to active and ESX treats the NIC as active again, no bad things will happen because the Mgmt network will still be running via nic1. Correct?

@Dennis,

We recommend enabling Portfast\Trunkfast on switchports. This will speed up the STP initialization process.

The example I used is for Active\Standby. If you have your management portgroup connected to two different physical switches and both uplinks are active/active, then the problem described will not occur.

Hi Frank

Will setting das.failuredetectiontime to 60/75 seconds not offset this behaviour of the ports and the failback settings around it? Alternatively, what about setting the failover detection policy to beacon probing?

The problem is that initialization of the switch can take longer than 60/75 seconds, rendering the das.failuredetectiontime setting useless.

Wouldn’t the alternative to disabling failback be creating a secondary management network with opposite Active/Standby NICs? In my mind, a secondary network is the better option because it does not introduce “an increase of manual operations after failure or certain maintenance operations”.

Thanks!

-ryan

Ryan, a secondary management network is preferable, but then you need to connect it to a separate switching layer, otherwise you will see the same problem.

You could always disable host monitoring when the network crew carry out their planned changes. Right?

-Jarle-

The 50 seconds is the time it takes to move a port from blocking to active using default spanning tree timers. People are generally using rapid spanning tree which shortens that time, and you should have portfast enabled anyway which will make the transition very fast.

I haven’t tested but I don’t think a port will show as being connected until the switch has completed its boot process. What switch models have you seen different behavior on?

This article is about switch initialization time, not the STP initialization process. The problem is that directly after a reboot the switch will place the port in “lights-on” mode. ESX will detect the lights-on state and will treat the link as active. But the switch is still initializing, so no activity is possible. Most initialization cycles of switches take longer than 5 minutes; fully spec’ed core switches can take more than 30 minutes.

Frank, the problem you are describing is only possible if there are separate switching layers… With one switch none of this matters because the host is down while the switch reboots.

Frank, what if the Network Failover Detection is set to Beacon Probing? I assume that until there is full connectivity (i.e. the switch is fully working and forwarding frames) the vmnic is treated as failed. So even if the ports are in the up state during the initialization / boot process, they are not forwarding frames and the vmnic state should be down, right?

So this addresses the specific port group settings for vSwitch0, and Active/Standby with failback set to No seems logical. How about the NIC Teaming settings under the vSwitch0 properties? Should they be set to Active/Active, or Active/Standby as well? Should Failback be set to No there as well?