When it comes to IO performance in the virtual infrastructure, one of the most recommended “tweaks” is changing the Queue Depth (QD). But most forget that the QD parameter is just a small part of the IO path. The IO path consists of layers of hardware and software components, and each of these components can have a huge impact on IO performance. The best results are achieved when the whole system is analysed, not just the ESX host alone.

To be honest, I believe that most environments will profit more from a balanced storage design than from adjusting the default values. But if the workload is balanced between the storage controllers and IO queuing still occurs, adjusting some parameters might increase IO performance.

Merely increasing the parameters can cause high latency, up to the point of major slowdowns. Some factors need to be taken into consideration.

LUN queue depth

The LUN queue depth determines how many commands the HBA is willing to accept and process per LUN. If a single virtual machine is issuing IO, the QD setting applies, but when multiple VMs are simultaneously issuing IOs to the LUN, the Disk.SchedNumReqOutstanding (DSNRO) value becomes the leading parameter.

Increasing the QD value without changing the Disk.SchedNumReqOutstanding setting will only be beneficial when one VM is issuing commands. It is considered best practise to use the same value for the QD and DSNRO parameters!
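The interaction between the two parameters can be sketched in a few lines of Python. This is only a simplified model of the rule described above, not VMkernel code: the function name is made up for illustration, and capping DSNRO at the QD is my assumption (the HBA will not accept more than the QD per LUN anyway).

```python
def effective_lun_limit(queue_depth: int, dsnro: int, active_vms: int) -> int:
    """Outstanding-command limit per LUN on one host (simplified model).

    One VM issuing IO: the LUN queue depth (QD) applies.
    Several VMs issuing IO: DSNRO becomes the leading parameter.
    Capping DSNRO at the QD is an assumption of this sketch.
    """
    if active_vms <= 1:
        return queue_depth
    return min(queue_depth, dsnro)


# Hypothetical values: QD raised to 64, DSNRO left at 32.
print(effective_lun_limit(64, 32, active_vms=1))  # 64
print(effective_lun_limit(64, 32, active_vms=3))  # 32
```

With these example values, raising the QD alone only helps the single-VM case, which is why both parameters are normally changed together.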

Read Duncan’s excellent article about the DSNRO setting.

Qlogic Execution Throttle

Qlogic has a firmware setting called “Execution Throttle”, which specifies the maximum number of simultaneous commands the adapter will send. The default value is 16. Increasing the value above 64 has little to no effect, because the maximum parallel execution of SCSI operations is 64.

(Page 170 of ESX 3.5 VMware SAN System Design and Deployment Guide)

If the QD is increased, execution throttle and the DSNRO must be set with similar values, but to calculate the proper QD the fan-in ratio of the storage port needs to be calculated.

Target Port Queue Depth

A queue exists on the storage array controller port as well; this is called the “Target Port Queue Depth”. Modern midrange storage arrays, like most EMC and HP arrays, can handle around 2048 outstanding IOs. 2048 IOs sounds like a lot, but most of the time multiple servers communicate with the storage controller at the same time. Because a port can only service one request at a time, additional requests are placed in the queue, and when the storage controller port receives more than 2048 IO requests, the queue gets flooded. When the queue depth is reached (this status is called QFULL), the storage controller issues an IO throttling command to the host to suspend further requests until space in the queue becomes available. The ESX host accepts the IO throttling command and decreases the LUN queue depth to the minimum value, which is 1!

The VMkernel checks every 2 seconds whether the QFULL condition is resolved. If it is resolved, the VMkernel slowly increases the LUN queue depth back to its normal value; this can take up to 60 seconds.

Calculating the queue depth\Execution Throttle value

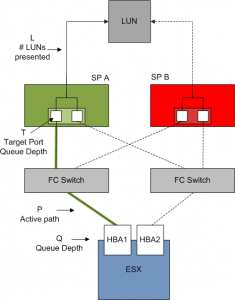

To prevent flooding the target port queue, the product of the number of host paths, the execution throttle (queue depth) value and the number of LUNs presented through the host port must not exceed the target port queue depth. In short: T >= P * Q * L

T = Target Port Queue Depth

P = Paths connected to the target port

Q = Queue depth

L = Number of LUNs presented to the host through this port

Despite having four paths to the LUN, ESX can only utilize one (active) path for sending IO. As a result, when calculating the appropriate queue depth, you use only the active path for “Paths connected to the target port (P)” in the calculation, i.e. P=1.
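As a minimal sketch, the rule T >= P * Q * L for a single host can be checked like this. The function names and the example queue depths are illustrative only; the 2048 is the midrange target port queue depth mentioned earlier.

```python
def port_load(paths: int, queue_depth: int, luns: int) -> int:
    """Worst-case outstanding IOs one host can place on a target port: P * Q * L."""
    return paths * queue_depth * luns


def fits_target_port(target_depth: int, paths: int, queue_depth: int, luns: int) -> bool:
    """True when T >= P * Q * L, i.e. this host alone cannot flood the port."""
    return port_load(paths, queue_depth, luns) <= target_depth


# One active path (P=1), 15 LUNs, target port queue depth of 2048.
print(port_load(1, 64, 15))                 # 960
print(fits_target_port(2048, 1, 64, 15))    # True
print(fits_target_port(2048, 1, 256, 15))   # False (3840 > 2048)
```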

But in a virtual infrastructure environment, multiple ESX hosts communicate with the storage port, therefore the QD should be calculated by the following formula:

T >= ESX Host 1 (P * Q * L) + ESX Host 2 (P * Q * L) + … + ESX Host n (P * Q * L)

For example, an 8 ESX host cluster connects to 15 LUNs (L) presented by an EVA8000 (4 target ports). An ESX server issues IO through one active path (P), so P=1 and L=15.

The execution throttle\queue depth can be set to 136,5: T = 2048 >= 1 * Q * 15, so Q <= 2048 / 15 = 136,5

But with this setting one ESX host can fill the entire target port queue by itself, and the environment consists of 8 ESX hosts: 136,5 / 8 = 17,06
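The arithmetic of this example can be reproduced with a small helper. This is only a sketch under the same assumptions as the example (identical hosts, one active path each, all LUNs behind a single target port); the function name is made up, and the result is rounded down because a queue depth has to be a whole number.

```python
def max_queue_depth(target_depth: int, hosts: int, luns: int, paths: int = 1) -> int:
    """Largest whole-number Q for which hosts * (P * Q * L) stays within T."""
    return target_depth // (hosts * paths * luns)


# One host, 15 LUNs, one active path: 2048 / 15, rounded down.
print(max_queue_depth(2048, hosts=1, luns=15))  # 136
# The 8-host cluster from the example: 2048 / (8 * 15), rounded down.
print(max_queue_depth(2048, hosts=8, luns=15))  # 17
```

With Q = 17 the eight hosts can together place at most 8 * 17 * 15 = 2040 IOs on the port, just under the 2048 limit.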

In this situation all the ESX hosts communicate with all the LUNs through one port, which does not happen in many situations if a proper load-balancing design is applied. Most arrays have two controllers and every controller has at least two ports. In the case of a controller failure, at least two ports are available to accept IO requests.

It is possible to calculate the queue depth conservatively to ensure a minimum decrease of performance when losing a controller during a failure, but this will lead to underutilizing the storage array during normal operation, which will hopefully be 99,999% of the time. It is better to calculate a value which utilizes the array properly without flooding the target port queue.

If you assume that multiple ports are available and that all LUNs are balanced across the available ports on the controllers, it will effectively quadruple the target port queue depth and therefore increase the value of the execution throttle in the example above to 68. Besides the fact that you cannot increase this value above 64, it is wise to set the value somewhat below the maximum, which creates a buffer for safety.
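The balanced case can be sketched the same way. The assumptions here are mine and should be read as such: the load is spread perfectly evenly over all target ports, every host uses one active path, and the cap of 64 is the Execution Throttle maximum mentioned earlier. The function name is illustrative.

```python
def balanced_queue_depth(target_depth: int, ports: int, hosts: int,
                         luns: int, cap: int = 64) -> int:
    """Queue depth when LUNs are spread evenly over `ports` target ports.

    The combined port queue is ports * target_depth; the result is rounded
    down and limited to `cap` (64 = maximum useful Execution Throttle).
    """
    uncapped = (ports * target_depth) // (hosts * luns)
    return min(cap, uncapped)


# Example from the text: 4 target ports, 8 hosts, 15 LUNs.
print((4 * 2048) // (8 * 15))                               # 68 before capping
print(balanced_queue_depth(2048, ports=4, hosts=8, luns=15))  # 64
```

Passing a lower `cap` than 64 is one way to express the safety buffer in the calculation.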

What’s the Best Setting for Queue Depth?

The examples mentioned are pure worst-case scenarios; most of the time it is highly unlikely that all hosts perform at their maximum level at the same time. Changing the defaults can improve throughput, but most of the time it is just a shot in the dark. Although you are configuring your ESX hosts with the same values, not every load on the ESX server is the same. Every environment is different, and so the optimal queue depths differ. You need to test and analyse your own environment. Please do not increase the QD without analysing the environment; this can be more harmful than useful.

Increasing the queue depth?

Hi Frank

could you explain this formula a bit more?

The execution throttle can be set to 136,5=> T=2048 (1 * Q * 15) = 136,5

where does the 136,5 come from? i understand this would be the Qlogic execution throttle value but i don’t get what the value means

thanks

Hi Paul,

I changed the text, I forgot to add the queue depth to that sentence. So substitute execution throttle for queue depth.

The target port can accept 2048 outstanding IO’s in its queue before shutting down communications, the esx host in the example has 15 luns presented over that storage controller. IO is issued over one active path per LUN.

Now you can calculate a queue depth that will give you the best performance without flooding the queue.

If you set the queue depth to 136 (2048 / 15, rounded down), the ESX host is able to issue 2040 IOs (136 * 15).

But (hopefully) you do not have just one ESX host, so you will need to divide the target port queue depth by the number of hosts….

Does the rest of the post make any sense?

On my blog someone suggested to keep the “DSNRO” lower than the QD. This way it won’t be possible for just one VM to fill up the entire queue for a host.

Great article again by the way, keep them coming!

Good question!

To my knowledge the QFULL condition is handled by the Qlogic driver itself. The QFULL status is not returned to the OS. But some storage devices return BUSY rather than QFULL; BUSY errors are logged in /var/log/vmkernel.

But not every BUSY error is a QFULL error!

A KB article exists with SCSI sense codes: KB here

If you have suspicious error codes in the logs, you can check them at vmproffesional.com SCSI Error Decoder

Advice please:

I am an ESX admin, not a storage admin.

The institution where I work is using 1 LUN per VM (sometimes more than 1 e.g lun per vmdk).

Their environment currently consists of 3 hosts running 86 vm’s connected to 100 LUNs. Most LUNs have 2 paths. The SAN is active/passive.

According to your formula this would equal 2048/100/3*2=13.65.

What settings should I be using for QD and DSNRO? And should I be telling the storage admins to stop assigning 1 LUN per VM? What are the implications of continuing down this path?

“When the queue depth is reached, (QFULL) the storage controller issues an IO throttling command to the host…”

How do you see that at the host level ?

Logs wise, what should I grep for ?

Thx,

One VM per LUN is a really nice situation.

The VM has its own path and does not need to compete with other VM’s sharing the LUN.

If more than one VM issues IO to the LUN, the VMkernel controls the IO with some sort of “fairness” scheme. That means if two VMs issue IOs to the LUN, the VMkernel decides which VM can write the IO. This has to do with sector proximity and the number of consecutive sequential IOs allowed from one VM. You just gave me an idea for a new article 🙂

Did you ever calculate the storage utilization rate with your VM-to-LUN ratio?

How much free space do you have on every LUN?

In ESX you will always communicate over one active path.

The DSNRO setting does not apply in your situation, because you are hosting one VM per LUN.

The QD applies.

Please contact your SAN vendor to ask the target port queue depth before setting the QD.

How many storage controllers does your SAN have?

Hi Frank yes it makes perfect sense now was just what I was looking for cheers!

Paul.

Thx for the compliment,

Yes, the formula implies a 100% virtual situation, just to keep it “simple”.

When other systems connect to the storage arrays, you will need to take them into account as well.

Microsoft published two excellent documents, both are must reads for windows admins

Disk Subsystem Performance Analysis for Windows

and

Performance Tuning Guidelines for Windows Server 2003

In the Disk subsystem doc, the numberofrequest setting is explained:

NumberOfRequests

Both SCSIport and Storport miniport drivers can use a registry parameter to designate how much concurrency is allowed on a device by device basis.

The default is 16, which is much too small for a storage subsystem of any decent size unless quite a number of physical disks are being presented to the operating system by the controller.

Up until today I worked on virtual infrastructure which contained only windows systems, so I do not know the default linux and solaris queuedepths yet.

But I’m working on a new virtual infra for a new client of mine, which will host windows, linux and solaris vm’s. So I will post the settings soon.

Hi Frank,

Great post!

your formula implies a 100% virtual situation.

What about those physical guests connected to the same storage controllers?

When determining your QD settings you have to take them into account also.

-Arnim

As you write: you use the same value for QueueDepth, DSNRO and ExecutionThrottle. And you say that the max ExecutionThrottle is 64.

So you never put QueueDepth greater than 64?

please tell me how to configure the adapter queue depth value (AQLEN) in esx4.

How to change the QUEUE DEPTH in Windows Server 2008 r2 ?

Hi Frank,

Good post.

I have a genuine and simple question that I hope everybody would like answered: when should I consider altering the QD from the default? Suppose my environment is running well, with some slowness at month end (billing application servers and all), and I don’t have any QD errors with iscsi code 28 or 40 reported in my vmkernel log. How should I check whether there is any possibility of high I/O, and whether I should go for it?

Thanks.