Today a new technical paper is available on vmware.com.

Description

The CPU scheduler is an essential component of vSphere 5.x. All workloads running in a virtual machine must be scheduled for execution and the CPU scheduler handles this task with policies that maintain fairness, throughput, responsiveness, and scalability of CPU resources. This paper describes these policies, and this knowledge may be applied to performance troubleshooting or system tuning. This paper also includes the results of experiments on vSphere 5.1 that show the CPU scheduler maintains or exceeds its performance over previous versions of vSphere.

If you are interested in CPU scheduling and in particular NUMA, download the paper: The CPU Scheduler in VMware vSphere 5.1

Hide all Getting Started Pages in the vSphere 5.1 Web Client in 3 easy steps

I’m rebuilding my lab and after I installed a new vCenter server I was confronted with those Getting Started tabs again. That reminded me that I promised someone at a VMUG to blog how to remove these tabs in one single operation.

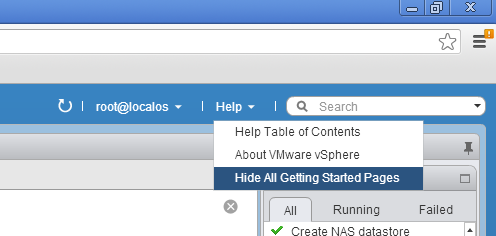

- Go to Help (located in the blue bar top right of your screen)

- Click on the arrow

- Select Hide All Getting Started Pages

Direct IP-storage and using NetIOC User-defined network resource pools for QoS

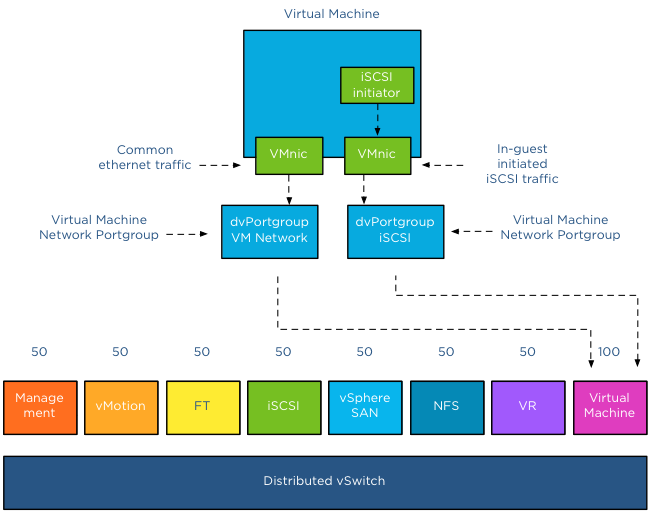

Some customers use an iSCSI initiator inside the Guest OS to connect directly to a datastore on the array, or an NFS client inside the Guest OS to access remote NFS storage directly, thereby circumventing the VMkernel storage stack of the ESX host. The virtual machine connects to the remote storage system via a VM network portgroup, and therefore the VMkernel classifies this network traffic as virtual machine traffic. This “indifference” or non-discriminating behavior of the VMkernel might not suit you or might not help you to maintain service level agreements.

Isolate traffic

In the 1GbE adapter world, assigning redundant and isolated uplinks to different sorts of traffic is a simple way to avoid worrying about traffic congestion. However, when using a small number of 10GbE adapters, you need to be able to partition network bandwidth among the different types of network traffic flows. This is where NetIOC comes into play. Please read the “Primer on Network I/O Control” article to quickly brush up on your knowledge of NetIOC.

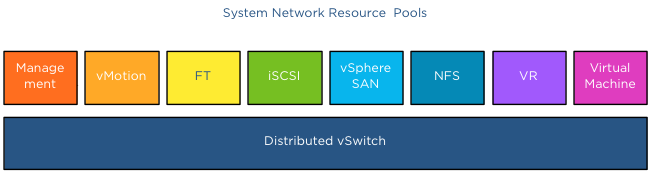

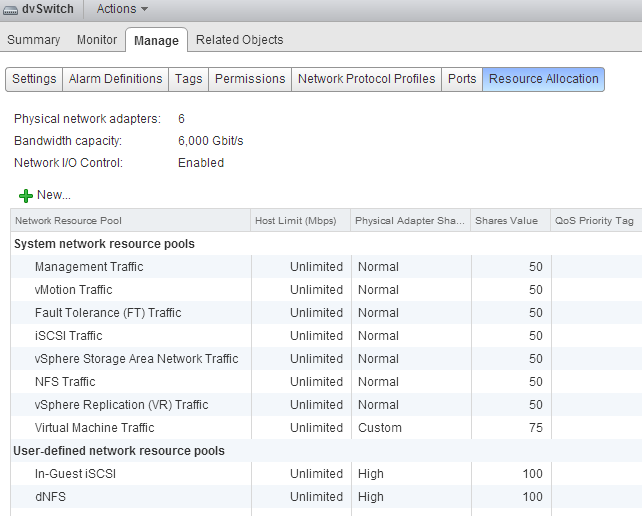

System network resource pools

By default NetIOC provides seven different system network resource pools. Six network pools are used to bind VMkernel traffic, such as NFS and iSCSI. One system network resource pool is used for virtual machine network traffic.

The network adapters you use to connect your IP-Storage from within the Guest OS connect to a virtual machine network portgroup. Therefore NetIOC binds this traffic to the virtual machine network resource pool. As a result, this traffic shares the bandwidth and prioritization with “common” virtual machine network traffic.

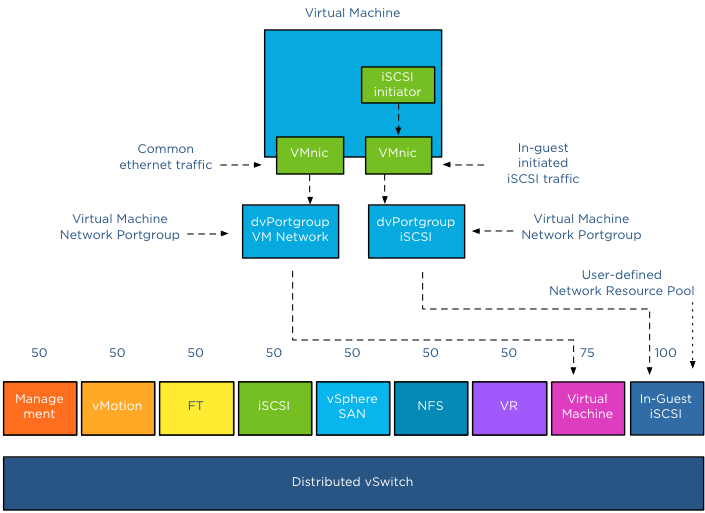

User-defined network resource pool

Most customers tend to prioritize IP storage traffic over network traffic induced by applications and the Guest OS. To ensure the IP-Storage traffic created by the NFS client or iSCSI initiator inside the Guest OS gets the priority it needs, create a user-defined network resource pool. User-defined network resource pools are available in vSphere 5.0 and later. Make sure your distributed switch is at least version 5.0.

Shares: User-defined network resource pools are available to isolate and prioritize virtual machine network traffic. Configure the User-defined network resource pool with an appropriate number of shares. The number of shares will reflect the relative priority of this network pool compared to the other traffic streams using the same dvUplink.
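To make the relative nature of shares concrete, here is a minimal sketch of the arithmetic. It assumes that shares only matter when the dvUplink is congested and that bandwidth is divided proportionally among the pools that are actively sending. The function name, the pool names, and the share values are illustrative assumptions, not output from a real dvSwitch.

```python
def bandwidth_per_pool(shares, link_mbps):
    """Divide link capacity among active pools in proportion to their shares.

    shares    -- dict of pool name -> number of shares (hypothetical values)
    link_mbps -- capacity of the dvUplink in Mbit/s
    """
    total = sum(shares.values())
    if total <= 0:
        raise ValueError("at least one active pool must have shares")
    return {name: link_mbps * value / total for name, value in shares.items()}


# Hypothetical example: three pools compete for one saturated 10GbE dvUplink.
active_pools = {
    "Virtual Machine Traffic": 50,
    "vMotion": 50,
    "dNFS": 100,  # user-defined pool for in-guest NFS traffic
}

allocation = bandwidth_per_pool(active_pools, link_mbps=10_000)
for pool, mbps in allocation.items():
    print(f"{pool}: {mbps:.0f} Mbit/s")
```

With these assumed values the dNFS pool holds half of all active shares, so it is entitled to half of the uplink under contention. If a pool stops sending, drop it from the dictionary and the remaining pools divide the link among themselves.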

QoS tag: Another benefit of creating a separate User-defined network resource pool is the ability to set a QoS tag specifically for this traffic stream. If you are using IEEE 802.1p tagging end-to-end throughout your virtual infrastructure ecosystem, setting the QoS tag on the User-defined network resource pool helps you to maintain the service level for your storage traffic.
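For readers who want to see what the QoS tag looks like on the wire: the IEEE 802.1p priority is a 3-bit field (values 0 to 7) in the 802.1Q VLAN tag, sitting next to a 1-bit drop-eligible indicator and the 12-bit VLAN ID. The sketch below only illustrates that bit layout; the function names are made up for this example and have nothing to do with how NetIOC applies the tag internally.

```python
def build_tci(priority, vlan_id, dei=0):
    """Pack an 802.1Q Tag Control Information field (16 bits).

    priority -- 802.1p priority code point, 0..7
    vlan_id  -- VLAN identifier, 0..4095
    dei      -- drop eligible indicator, 0 or 1
    """
    if not 0 <= priority <= 7:
        raise ValueError("802.1p priority must be between 0 and 7")
    if not 0 <= vlan_id <= 4095:
        raise ValueError("VLAN ID must be between 0 and 4095")
    if dei not in (0, 1):
        raise ValueError("DEI must be 0 or 1")
    return (priority << 13) | (dei << 12) | vlan_id


def parse_priority(tci):
    """Extract the 802.1p priority from a Tag Control Information field."""
    return (tci >> 13) & 0x7


# Hypothetical example: storage traffic tagged with priority 5 on VLAN 100.
tci = build_tci(priority=5, vlan_id=100)
print(hex(tci), parse_priority(tci))
```

The physical switches along the path need to honor the same priority value, which is why the tag only helps when 802.1p is used end-to-end.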

Setup

In a greenfield scenario, set up the User-defined network resource pool first; that allows you to select the correct network pool during the creation of the dvPortgroups. If you have already created dvPortgroups, you can assign the correct network resource pool once you have created it.

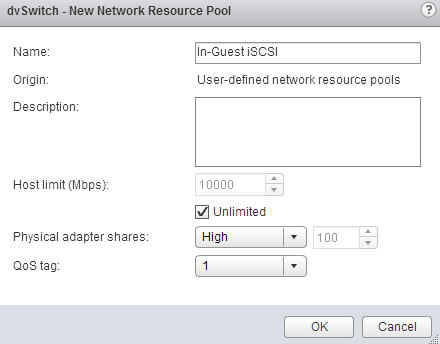

Create a user-defined network resource pool:

1. Open your vSphere web client and go to networking.

2. Select the dvSwitch

3. Go to Manage

4. Select the Resource Allocation tab

5. Click on the new icon.

6. Configure the network resource pool and click on OK

I already created a User-defined network resource pool called dNFS. The overview of available network resource pools on the dvSwitch looks like this:

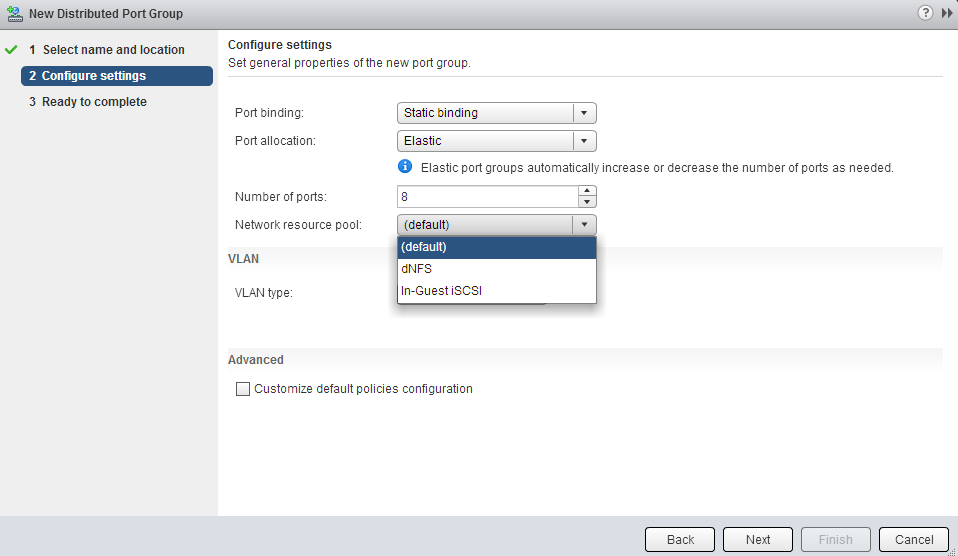

To map the network resource pool to the Distributed Port Group, create a new Distributed Port group, or edit an existing one and select the appropriate network resource pool:

Storage DRS Cluster shows error "Index was out of range. Must be non-negative and less than the size of the collection."

Recently I have noticed an increase in tweets mentioning the error “Index was out of range”.

Error message:

When trying to edit the settings of a Storage DRS cluster, or when clicking on SDRS scheduling settings, an error pops up:

“An internal error occurred in the vSphere Client. Details: Index was out of range. Must be non-negative and less than the size of the collection. Parameter name: index”

Impact:

This error does not have any impact on Storage DRS operations and/or Storage DRS functionality and is considered a “cosmetic” issue. See KB article: KB 2009765.

Solution:

VMware vCenter Server 5.0 Update 2 fixes this problem. Apply Update 2 if you experience this error.

As the fix is included in Update 2 for vCenter 5.0, I expect that vCenter 5.1 Update 1 will include the fix as well. However, I cannot confirm any deliverables.
