Over the last few weeks, I watched many sessions from the fall edition of NVIDIA GTC. I created a list of interesting sessions for a group of people internally at VMware, but I thought the list might also interest some people outside VMware. It’s primarily focused on understanding NVIDIA’s product and services suite, not on deep diving into technology or geeking out on core counts and speeds and feeds. If you found exciting sessions that I haven’t listed, please leave them in the comments below.

Data Science

Accelerating Data Science: State of RAPIDS [A31490]

Reason to watch: A 55-minute overview of the state of RAPIDS (the open-source framework for data science), upcoming features, and improvements.

Inference

Please note: Triton is part of the NVIDIA AI Enterprise stack (NVAIE)

How Hugging Face Delivers 1 Millisecond Inference Latency for Transformers in Infinity [A31172]

Reason to watch: A 50-minute session. Hugging Face is the dominant player for NLP Transformers and is pushing the open platform / democratizing ML message.

Scalable, Accelerated Hardware-agnostic ML Inference with NVIDIA Triton and Arm NN [A31177]

Reason to watch: A 50-minute session covering the Arm NN architecture and deploying models on far-edge devices (Jetson/Pi) using NVIDIA Triton Inference Server.

Reason to watch: A 50-minute overview of the Triton architecture, features, customer case studies, and ONNX Runtime integration. ONNX Runtime (ONNX RT) optimizes models for the target inference platform.

Reason to watch: A 45-minute session covering Triton on AWS SageMaker, with two customers sharing an overview of their deployments and their lessons learned.

No-Code Approach

NVIDIA TAO: Create Custom Production-Ready AI Models without AI Expertise [D31030]

Reason to watch: A 3-minute overview of TAO, a model adaptation framework that fine-tunes pre-trained models on proprietary (smaller) datasets and optimizes them for the inference hardware architecture.

AI Life-cycle Management for the Intelligent Edge [A31160]

Reason to watch: A 50-minute session that covers NVIDIA’s approach to transfer learning. NVIDIA provides a pre-trained model, customers optimize the model, and NVIDIA TAO assists with further optimization for inference deployment. Fleet Command orchestrates the deployment of the model at the edge.

A Zero-code Approach to Creating Production-ready AI Models [A31176]

Reason to watch: A 35-minute session that explores TAO in more detail and provides a demo of the TAO (GUI-based) solution.

NVIDIA LaunchPad

Simplifying Enterprise AI from Develop to Deploy with NVIDIA LaunchPad [A31455]

Reason to watch: A 30-minute overview of the NVIDIA Cloud AI platform, delivered through NVIDIA’s partnership with Equinix, for rapid testing and prototyping of AI.

How to Quickly Pilot and Scale Smart Infrastructure Solutions with LaunchPad and Metropolis [A31622]

Reason to watch: A 30-minute overview of how to use Metropolis (a computer vision AI application framework) and LaunchPad to accelerate POCs through zero-touch testing and system sizing. The session covers Metropolis Certification for system design (timestamp 11:30) and bare-metal access.

NVIDIA and Cloud Integration

Reason to watch: A 40-minute overview of NGC integration with Google Vertex AI (Google’s end-to-end ML/AI platform).

Automate Your Operations with Edge AI (Presented by Microsoft Azure) [A31707]

Reason to watch: A 15-minute overview of Azure Percept, an edge AI solution for IoT devices that is now available on the Azure HCI stack.

Reason to watch: A 45-minute technical overview of the NVIDIA and VMware partnership demonstrating the key elements of the NVIDIA AI-Ready Enterprise Platform in detail.

NVIDIA EGX

Exploring Cloud-native Edge AI [A31166]

Reason to watch: A 50-minute overview of NVIDIA’s cloud-native, Kubernetes-based edge AI platform.

Retail

The One Retail AI Use Case That Stands Out Above the Rest [A31548]

Reason to watch: A 55-minute session providing great insights into a real use case of Everseen technology deployed at Kroger.

One of the World’s Top Retailers is Bringing AI-Powered Convenience to a Store Near You [A31359]

Reason to watch: A 25-minute session providing insights into the challenges of deploying and using autonomous store technology.

DPU

Real-time AI Processing at the Edge [A31164]

Reason to watch: A 40-minute session covering the GPU+DPU converged accelerators (A30X and A100X), their architecture, and the DOCA (Data Center on a Chip Architecture) and CUDA programming environments.

Programming the Data Center of the Future Today with the New NVIDIA DOCA Release [A31069]

Reason to watch: A 40-minute session covering DOCA architecture in detail.

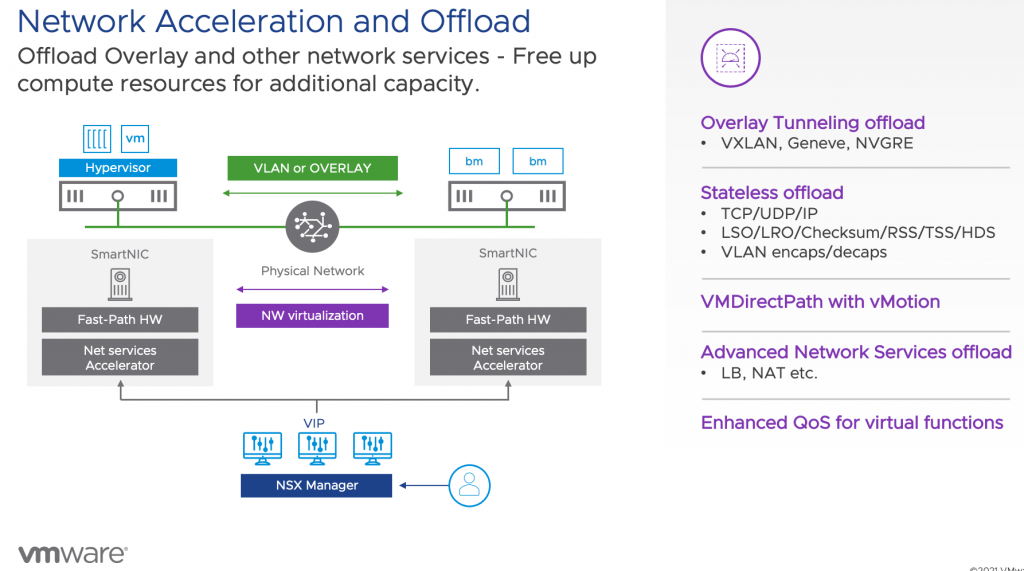

Reason to watch: A 50-minute session offering an excellent explanation of the different vGPU modes (native vs. MIG mode). From the 37-minute mark, the presenters dive into the use of network function virtualization on SmartNICs.

Developer / Engineer Type Sessions

CUDA New Features and Beyond [A31399]

Reason to watch: A 50-minute overview of what’s new in the CUDA toolkit.

Reason to watch: A 50-minute deep-dive session on end-to-end pipelines for vision-based AI.

Accelerating the Development of Next-Generation AI Applications with DeepStream 6.0 [A31185]

Reason to watch: A 50-minute overview of the DeepStream solution, which helps to develop and deploy vision-based AI.

End-to-end Extremely Parallelized Multi-agent Reinforcement Learning on a GPU [A31051]

Reason to watch: A 40-minute deep-dive session on how Salesforce developed a framework to drastically reduce CPU-GPU communication.