Recently, Paul Meehan submitted this question via a comment on the “Memory reclamation, when and how” article:

Hi,

we are currently considering virtualising some pretty significant SQL workloads. While the VMware best practices documents for SQL server inside VMware recommend turning on ballooning, a colleague who attended a deep dive with a SQL Microsoft MVP came back and the SQL guy strongly suggested that ballooning should always be turned off for SQL workloads. We have 165 SQL instances, some of which will need 5-10000 IOPS so performance and memory management is critical. Do you guys have a view on this from experience?

Thx,

Paul

I receive this kind of question a lot, whether it concerns SQL, Oracle or Citrix, and there always seems to be an expert recommending that ballooning be disabled. This advice can be interpreted in two ways:

1. Disable the memory reclamation mechanism by adding a particular parameter (sched.mem.maxmemctl) to the settings of the virtual machine.

– or –

2. Ensure that enough physical memory resources are available to the virtual machine to keep the VMkernel from reclaiming memory from that particular virtual machine.

I always hope they mean guaranteeing enough memory to the virtual machine to stop the VMkernel from reclaiming memory from that specific VM. Unfortunately, most specialists insist on disabling the mechanism.

Why is disabling the ballooning mechanism bad?

Many organizations that deploy virtual infrastructures rely on memory overcommitment to reach higher consolidation ratios and higher memory utilization. In a virtual infrastructure, not every virtual machine actively uses its assigned memory at the same time, and not every virtual machine uses its full configured memory footprint. To allow memory overcommitment, the VMkernel uses several memory reclamation mechanisms:

1. Transparent Page Sharing

2. Ballooning

3. Memory compression

4. Host swapping
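To make the overcommitment argument concrete, here is a minimal Python sketch. The `VMMemory` type, the `memory_overview` function and the numbers in the example are hypothetical, and "contention" is simplified to "total active memory exceeds host memory", ignoring virtualization overhead and Transparent Page Sharing savings.

```python
from dataclasses import dataclass

@dataclass
class VMMemory:
    configured_mb: int  # memory assigned to the VM (its configured footprint)
    active_mb: int      # memory the guest actively uses right now (hypothetical estimate)

def memory_overview(vms, host_mb):
    """Return the overcommit ratio and whether active memory exceeds host memory."""
    configured = sum(vm.configured_mb for vm in vms)
    active = sum(vm.active_mb for vm in vms)
    return {
        "overcommit_ratio": configured / host_mb,
        # Simplification: ignores per-VM overhead and page sharing savings
        "contention": active > host_mb,
    }

# Three 8 GB VMs on a 16 GB host: 1.5x overcommitted, yet no contention
# as long as each VM is only actively using 2 GB.
print(memory_overview([VMMemory(8192, 2048)] * 3, 16384))
# {'overcommit_ratio': 1.5, 'contention': False}
```

Only when the VMs start actively using most of their configured memory at the same time does the host run into contention and start reclaiming.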

Except for Transparent Page Sharing, all memory reclamation techniques only become active when the ESX host experiences memory contention. The VMkernel selects a specific memory reclamation technique depending on the amount of free memory on the host. When the ESX host has 6% or less free memory available, it uses the balloon driver to reclaim idle memory from virtual machines. The VMkernel selects the virtual machines with the largest amounts of idle memory (as determined by the idle memory tax process) and asks those virtual machines to select idle memory pages.
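The selection step can be sketched in Python. This is not ESX code: the `VM` fields and function names are hypothetical, the 6% check simply restates the text, and the ranking uses the adjusted shares-per-page ratio from Carl Waldspurger's OSDI 2002 paper "Memory Resource Management in VMware ESX Server", in which idle pages are charged more than active pages (75% is the commonly cited default idle tax rate). The actual VMkernel implementation may differ.

```python
from dataclasses import dataclass

BALLOON_FREE_THRESHOLD = 0.06  # from the text: ballooning starts at 6% free host memory or less

@dataclass
class VM:
    name: str
    shares: int         # memory shares of the VM
    pages: int          # memory pages currently allocated to the VM
    active_frac: float  # estimated fraction of those pages in active use (0..1)

def balloon_needed(host_free_mb, host_total_mb):
    """True when host free memory has dropped to the ballooning threshold."""
    return host_free_mb / host_total_mb <= BALLOON_FREE_THRESHOLD

def adjusted_shares_per_page(vm, tax_rate=0.75):
    """Waldspurger's ratio: an idle page costs k = 1/(1 - tax_rate) times an active page."""
    k = 1.0 / (1.0 - tax_rate)
    f = vm.active_frac
    return vm.shares / (vm.pages * (f + k * (1.0 - f)))

def pick_balloon_target(vms, tax_rate=0.75):
    """The VM with the lowest adjusted shares-per-page ratio gives up memory first."""
    return min(vms, key=lambda vm: adjusted_shares_per_page(vm, tax_rate))

vms = [VM("busy-sql", 1000, 262144, 0.9), VM("idle-app", 1000, 262144, 0.1)]
if balloon_needed(host_free_mb=3000, host_total_mb=65536):
    print(pick_balloon_target(vms).name)  # idle-app
```

With equal shares and sizes, the mostly idle VM is "taxed" for its idle pages and is therefore the first one asked to inflate its balloon.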

Now, to fully appreciate the beauty of the balloon driver, it’s crucial to understand that the VMkernel is not aware of the Guest OS’s internal memory management mechanisms. Guest operating systems commonly maintain an allocated memory list and a free memory list. When a Guest OS requests a page, ESX backs that page with physical memory. When the Guest OS stops using the page internally, it removes the page from the allocated memory list and places it on the free memory list. Because the page contents do not change and the Guest OS does not notify ESX, ESX keeps storing this data in physical memory.

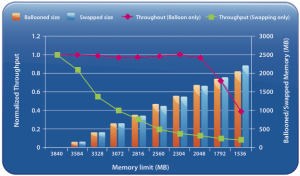
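The visibility gap between the Guest OS and ESX can be shown with a toy Python model. `ToyGuest` and its methods are hypothetical illustrations, not a real guest or hypervisor interface: the guest keeps its own allocated and free lists, while the host only knows which pages it has backed.

```python
class ToyGuest:
    """Toy model: the guest tracks allocated/free lists; the host only tracks backed pages."""

    def __init__(self, n_pages):
        self.free = list(range(n_pages))  # guest free list
        self.allocated = set()            # guest allocated list
        self.host_backed = set()          # pages the host has backed with physical memory

    def alloc(self):
        page = self.free.pop()
        self.allocated.add(page)
        self.host_backed.add(page)        # first use: the host backs the page
        return page

    def release(self, page):
        self.allocated.remove(page)
        self.free.append(page)            # only the guest's own bookkeeping changes;
                                          # nothing tells the host, so the page stays backed

    def idle_but_backed(self):
        """Pages free inside the guest that still consume host physical memory."""
        return set(self.free) & self.host_backed

g = ToyGuest(4)
p = g.alloc()
g.release(p)
print(p in g.free, p in g.host_backed)  # True True
```

Pages returned by `idle_but_backed()` are exactly the memory the host would like to get back but cannot identify on its own.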

When the balloon driver is used, it requests a certain number of pages from the Guest OS. Typically the Guest OS will hand over memory that has been idle or is registered on the Guest OS free list. If the virtual machine has enough idle pages, no guest-level paging (or, even worse, kernel-level paging) is necessary. Scott Drummonds tested an Oracle database VM against an OLTP load generation tool and researched the (lack of) impact of the balloon driver on the performance of the virtual machine. The results are displayed in this image:

Scott’s explanation:

Results of two experiments are shown on this graph: in one memory is reclaimed only through ballooning and in the other memory is reclaimed only through host swapping. The bars show the amount of memory reclaimed by ESX and the line shows the workload performance. The steadily falling green line reveals a predictable deterioration of performance due to host swapping. The red line demonstrates that as the balloon driver inflates, kernel compile performance is unchanged.

So the beauty of ballooning lies in the fact that it lets the Guest OS itself make the hard decision about which pages to page out, without the hypervisor’s involvement. Because the Guest OS is fully aware of its memory state, the virtual machine keeps performing as long as it has idle or free pages.
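A toy Python sketch of balloon inflation follows. `GuestMemory` and `inflate_balloon` are hypothetical, and ordering the in-use list least-recently-used first is an assumption standing in for whatever policy the real Guest OS applies. Free pages are handed over at no cost; only when they run out does the guest page out pages of its own choosing.

```python
from dataclasses import dataclass, field

@dataclass
class GuestMemory:
    free: list          # guest free list (page numbers)
    in_use: list        # guest allocated list, assumed ordered least-recently-used first
    host_backed: set    # pages the host currently backs with physical memory
    balloon: set = field(default_factory=set)      # pages pinned by the balloon driver
    paged_out: list = field(default_factory=list)  # pages the guest wrote to its own page file

def inflate_balloon(mem, target):
    """Pin up to `target` guest pages; return how many required guest-level paging."""
    paged = 0
    for _ in range(target):
        if mem.free:
            page = mem.free.pop()        # cheapest case: hand over an idle page
        elif mem.in_use:
            page = mem.in_use.pop(0)     # guest picks its own victim (here: least recently used)
            mem.paged_out.append(page)   # ...and pages it out before handing it over
            paged += 1
        else:
            break                        # nothing left to give
        mem.balloon.add(page)
        mem.host_backed.discard(page)    # the host can now reuse the physical page behind it
    return paged

mem = GuestMemory(free=[0, 1, 2], in_use=[3, 4, 5], host_backed=set(range(6)))
print(inflate_balloon(mem, 2), inflate_balloon(mem, 2))  # 0 1
```

The first inflation is absorbed entirely by free pages; the second exhausts the free list and forces one guest-level page-out, chosen by the guest rather than by the hypervisor.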

When ballooning is disabled

If we follow the recommendation of non-VMware experts and disable ballooning, the following memory reclamation techniques remain available:

1. Transparent Page Sharing

2. Memory compression

3. Host-level swapping (.vswp)

Memory compression

Memory compression was introduced in vSphere 4.1. The VMkernel always tries to compress memory before swapping it. This feature is very helpful, and compression is a lot faster than swapping. However, the VMkernel only compresses a memory page if it can be compressed by 50% or more; otherwise the page is swapped. Furthermore, the default size of the compression cache is 10% of the virtual machine’s memory. If the compression cache is full, a compressed page must be evicted to make room for a new one, and that older page is swapped out. So during heavy contention, memory compression is merely the first stop before a page ultimately ends up swapped.

Increasing the memory compression cache can be counterproductive: the compression cache counts toward the virtual machine’s memory usage, so configuring a large compression cache can itself introduce memory pressure or contention.
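The compress-or-swap decision and the cache eviction described above can be sketched in Python. This is a toy model, not the VMkernel’s implementation: `zlib` stands in for whatever compression algorithm ESX uses, and the `CompressionCache` class and its fields are hypothetical. The `cache_pct` parameter shows why a bigger cache is not free: it is sized as a share of the VM’s own memory.

```python
import os
import zlib
from collections import OrderedDict

class CompressionCache:
    """Toy per-VM compression cache sized as a percentage of VM memory."""

    def __init__(self, vm_mem_bytes, cache_pct=0.10):
        self.capacity = int(vm_mem_bytes * cache_pct)  # 10% default, per the text
        self.used = 0
        self.cache = OrderedDict()  # page number -> compressed bytes, oldest first
        self.swapped = set()        # page numbers that ended up in the swap file

    def reclaim(self, page_no, data):
        compressed = zlib.compress(data)
        # Keep only pages that shrink to half their size or less; otherwise swap
        if len(compressed) > len(data) // 2 or len(compressed) > self.capacity:
            self.swapped.add(page_no)
            return "swapped"
        # Cache full: evict the oldest compressed pages to swap to make room
        while self.used + len(compressed) > self.capacity:
            old_no, old_blob = self.cache.popitem(last=False)
            self.used -= len(old_blob)
            self.swapped.add(old_no)
        self.cache[page_no] = compressed
        self.used += len(compressed)
        return "compressed"

PAGE = 4096
cc = CompressionCache(vm_mem_bytes=1024 * PAGE)
print(cc.reclaim(0, bytes(PAGE)))       # compressed: a zero page shrinks to a few bytes
print(cc.reclaim(1, os.urandom(PAGE)))  # swapped: random data does not compress
```

Under sustained contention the cache fills up and every new compressed page pushes an older one out to swap, which is why compression only delays, rather than avoids, host-level swapping.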

Host-level Swapping

In contrast to ballooning, host-level swapping does not communicate with the Guest OS. The VMkernel has no knowledge of the status of a page inside the Guest OS; it only knows that the physical page belongs to a specific virtual machine. Because the VMkernel is unaware of the content of the page and its significance to the Guest OS, it may swap out Guest OS kernel pages. The Guest OS itself never swaps out its kernel pages, as they are crucial to maintaining kernel performance.

So by disabling ballooning, you deactivate the most intelligent memory reclamation technique, leaving the VMkernel with only two options: compress a memory page, or swap out a completely random (and possibly crucial) page. This significantly increases the chance of degrading the virtual machine’s performance, which to me does not sound like something worth recommending.
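How likely is it that host-level swapping hits a kernel page? Under the simplifying assumption that the VMkernel picks pages uniformly at random, the question is a hypergeometric probability. The function name and the example figures (a 4 GB guest with 1% kernel pages, 1,000 swapped pages) are hypothetical and chosen only for illustration.

```python
from math import comb

def p_kernel_page_hit(total_pages, kernel_pages, swapped_pages):
    """Probability that at least one kernel page is among `swapped_pages`
    pages chosen uniformly at random (hypergeometric model)."""
    p_miss_all = comb(total_pages - kernel_pages, swapped_pages) / comb(total_pages, swapped_pages)
    return 1.0 - p_miss_all

# Hypothetical 4 GB guest (4 KB pages) where about 1% of pages hold kernel data;
# the host swaps out 1,000 pages.
print(p_kernel_page_hit(1_048_576, 10_486, 1_000))
```

Even with only 1% kernel pages, the result is very close to 1: at that scale, random host swapping will almost certainly touch pages the Guest OS itself would never have paged out.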

Alternative to disabling the balloon driver while guaranteeing performance?

The best way to guarantee performance is to use the resource allocation settings: shares and reservations.

Use shares to define priority levels and use reservations to guarantee physical resources even when the VMkernel is experiencing resource contention.
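The interplay of shares and reservations can be illustrated with a simplified proportional-share model in Python. This is not the VMkernel’s actual algorithm (which also applies the idle memory tax and reclaims through the mechanisms above); `VMAlloc` and `divide_host_memory` are hypothetical. Each VM first receives its reservation (capped at its demand), and the remaining memory is divided by shares until every VM is satisfied or memory runs out.

```python
from dataclasses import dataclass

@dataclass
class VMAlloc:
    shares: int
    reservation_mb: float
    demand_mb: float   # what the VM would use if memory were unlimited

def divide_host_memory(host_mb, vms):
    """Simplified proportional-share model: reservations first, then the rest by shares."""
    alloc = {name: min(vm.reservation_mb, vm.demand_mb) for name, vm in vms.items()}
    remaining = host_mb - sum(alloc.values())
    if remaining < 0:
        raise ValueError("reservations exceed host memory; admission control would refuse this")
    hungry = {name for name, vm in vms.items() if alloc[name] < vm.demand_mb}
    while remaining > 1e-9 and hungry:
        total_shares = sum(vms[name].shares for name in hungry)
        handed_out = 0.0
        for name in list(hungry):
            vm = vms[name]
            grant = min(remaining * vm.shares / total_shares, vm.demand_mb - alloc[name])
            alloc[name] += grant
            handed_out += grant
            if alloc[name] >= vm.demand_mb - 1e-9:
                hungry.discard(name)  # satisfied VMs drop out; leftovers are redistributed
        remaining -= handed_out
    return alloc

vms = {"sql": VMAlloc(2000, 4096, 8192), "web": VMAlloc(1000, 0, 8192)}
print(divide_host_memory(8192, vms))
```

In this example the SQL VM keeps its 4 GB reservation regardless of contention and, thanks to its higher shares, also receives two thirds of the remaining memory.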

How reservations work, and the impact that setting them has on the virtual infrastructure, are described in the articles “Impact of Memory reservations”, “Resource Pools memory reservations” and “Reservations and CPU scheduling”.

However, setting reservations does affect the virtual infrastructure. A well-known impact of setting a reservation is on the HA slot size when the cluster is configured with “Host failures cluster tolerates”. More information on HA can be found in the HA deep dive on yellow-bricks. To circumvent this impact, one might choose to configure the HA cluster with the HA policy “Percentage of cluster resources reserved as failover spare capacity”. Thanks to the HA-DRS integration introduced in vSphere 4.1, the main caveat of that policy, dealing with fragmented clusters, is resolved.
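The slot size effect can be sketched in Python. As we understand the vSphere 4.x behavior, the slot size is the largest CPU reservation (with a default floor of 256 MHz) and the largest memory reservation plus memory overhead among powered-on VMs; treat these details as assumptions. `HAVM`, the function names, the overhead figures and the host sizes are hypothetical, and the percentage-policy check only models memory.

```python
from dataclasses import dataclass

@dataclass
class HAVM:
    cpu_res_mhz: int
    mem_res_mb: int
    mem_overhead_mb: int  # hypothetical per-VM virtualization overhead

def slot_size(vms, min_cpu_mhz=256):
    """Slot = largest CPU reservation (with a floor) and largest memory reservation + overhead."""
    cpu = max([min_cpu_mhz] + [vm.cpu_res_mhz for vm in vms])
    mem = max(vm.mem_res_mb + vm.mem_overhead_mb for vm in vms)
    return cpu, mem

def slots_per_host(host_cpu_mhz, host_mem_mb, slot):
    cpu, mem = slot
    return min(host_cpu_mhz // cpu, host_mem_mb // mem)

def pct_policy_mem_ok(cluster_mem_mb, vms, reserved_pct):
    """Percentage policy (memory only): is reserved_pct of cluster memory still unreserved?"""
    reserved = sum(vm.mem_res_mb + vm.mem_overhead_mb for vm in vms)
    return (cluster_mem_mb - reserved) / cluster_mem_mb >= reserved_pct

small = [HAVM(0, 0, 100)] * 10
sql = HAVM(0, 16384, 200)
print(slots_per_host(24000, 65536, slot_size(small)))          # 93
print(slots_per_host(24000, 65536, slot_size(small + [sql])))  # 3
```

A single 16 GB reservation shrinks the host from 93 slots to 3, while the percentage-based policy only counts the reservation once against total cluster capacity.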

Disabling the balloon driver will likely worsen the performance of the virtual machine when the ESX host experiences resource contention. I suspect that what other vendors’ experts really want is to avoid memory reclamation, and the only two built-in mechanisms recommended to help avoid memory reclamation are the resource allocation settings: shares and reservations.